#knowledgedistillation search results

🔥 Read our Highly Cited Paper 📚MicroBERT: Distilling MoE-Based #Knowledge from BERT into a Lighter Model 🔗mdpi.com/2076-3417/14/1… 👨🔬by Dashun Zheng et al. @mpu1991 #naturallanguageprocessing #knowledgedistillation

🔥 Read our Paper 📚Discriminator-Enhanced #KnowledgeDistillation Networks 🔗mdpi.com/2076-3417/13/1… 👨🔬by Zhenping Li et al. @CAS__Science #knowledgedistillation #reinforcementlearning

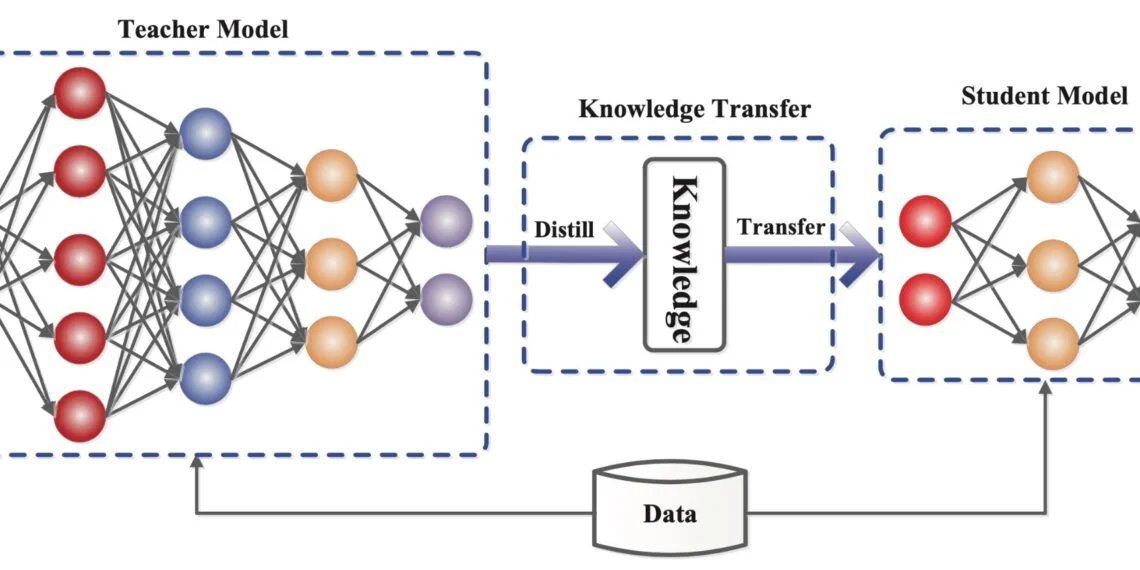

📱Big model, small device?📉 Knowledge distillation helps you shrink LLMs without killing performance. Learn how teacher–student training works👉labelyourdata.com/articles/machi… #MachineLearning #LLM #KnowledgeDistillation

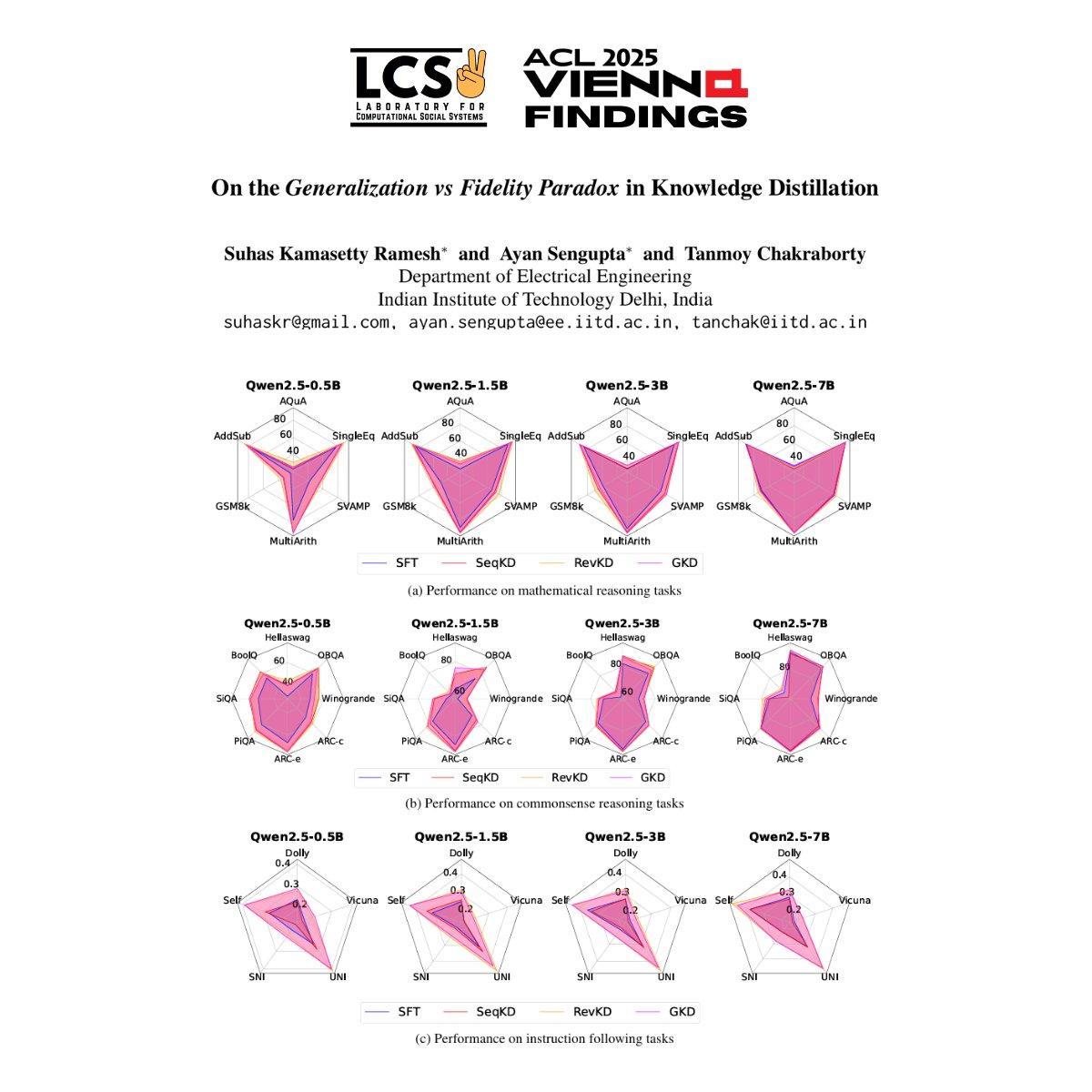

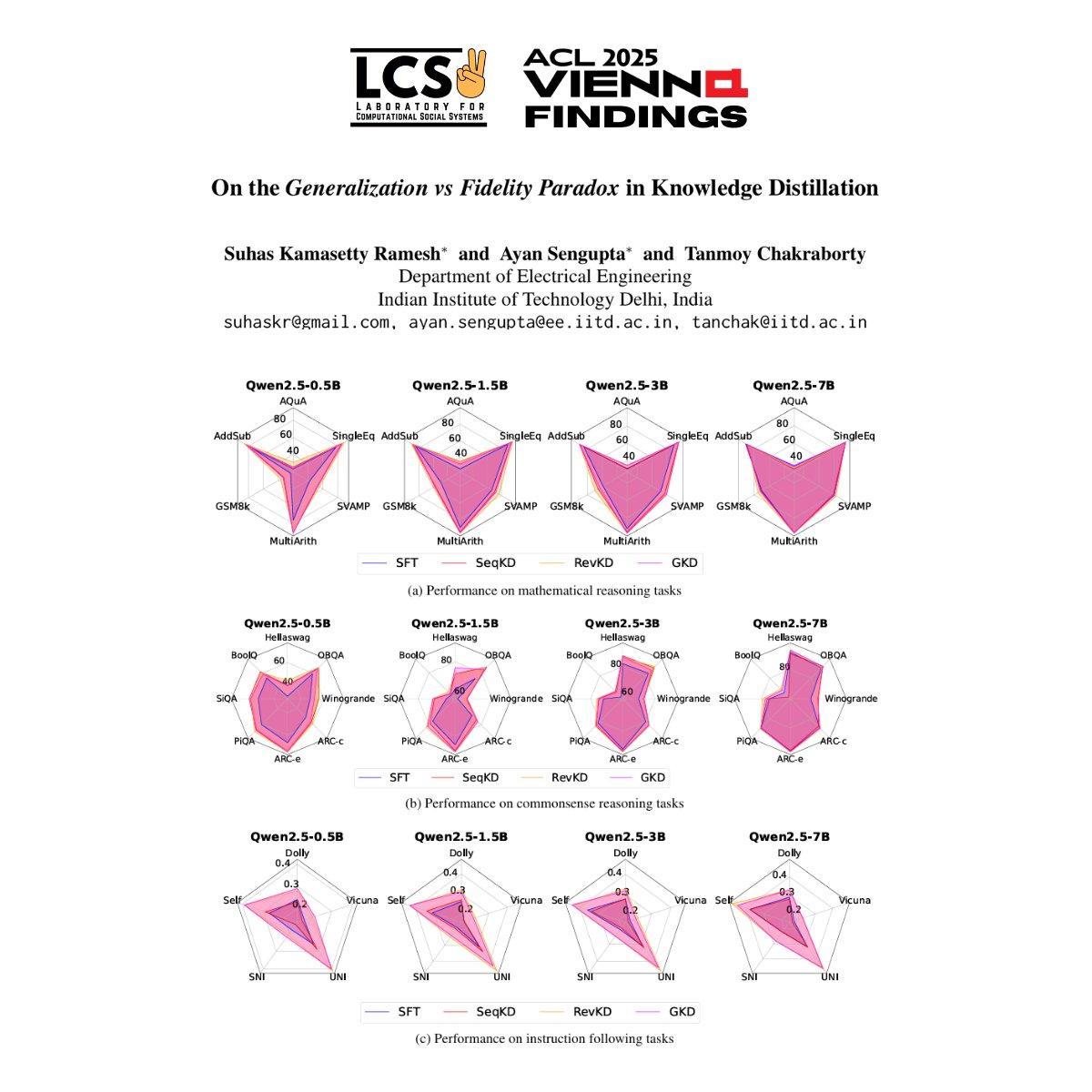

📢 New #ACL2025Findings Paper 📢 Can smaller language models learn how large ones reason, or just what they conclude? Our latest paper in #ACLFindings explores the overlooked tension in #KnowledgeDistillation - generalization vs reasoning fidelity. #NLProc

📚🤖 Knowledge Distillation = small AI model learning from a big one! Smarter, faster, efficient. Perfect for NLP & vision! 🚀📱 See here - techchilli.com/artificial-int… #KnowledgeDistillation #AI2025 #DeepLearning #TechChilli #ModelCompression

What Is Knowledge Distillation? Want to understand how neural networks are trained using this powerful technique with LeaderGPU®? Get all the details on our site: leadergpu.com/catalog/606-wh… #AI #NeuralNetworks #KnowledgeDistillation #GPU #DedicatedServer #Instance #GPUCloud…

AI’s strategic importance is undeniable, with #knowledgedistillation enhancing user experiences through more efficient, tailored models. However, the challenges—limited context windows, #hallucinations, and high #computational costs—require deliberate strategies to overcome.…

That conversation with Mr. Tavito about #DeepSeek and the magic of knowledge distillation... that is where my thesis was born. Today, seeing my own distilled model work, the lesson is clear: the only limit is ourselves. Let's keep improving! 💪 #KnowledgeDistillation

#KnowledgeDistillation helps you train a smaller #AIModel to mimic a larger one—perfect when data is limited or devices have low resources. Use it for similar tasks with less compute. Learn more with @tenupsoft tenupsoft.com/blog/ai-model-… #AI #AITraining #EdgeAI
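As a rough illustration of what "training a smaller model to mimic a larger one" can mean in practice, here is a minimal sketch of the classic soft-target distillation loss (temperature-softened teacher and student distributions compared with KL divergence, blended with ordinary cross-entropy on the true label). This is not taken from any of the linked posts; the function names, the `temperature` and `alpha` defaults, and the example logits are all assumptions chosen for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend of a soft-target term and a hard-label term (hypothetical helper).

    soft term: T^2 * KL(teacher || student) on temperature-softened outputs
    hard term: cross-entropy of the student against the true label
    alpha:     weight on the soft term (assumed default, not from the source)
    """
    eps = 1e-12  # guards against log(0) in this sketch
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / max(ps, eps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft_term = (temperature ** 2) * kl
    hard_term = -math.log(max(softmax(student_logits)[label], eps))
    return alpha * soft_term + (1 - alpha) * hard_term

# Example with made-up logits for a 3-class problem.
teacher = [4.0, 1.0, 0.5]
student = [2.0, 1.5, 0.2]
loss = distillation_loss(student, teacher, label=0)
print(f"distillation loss: {loss:.4f}")
```

In a real training loop the same quantity would be computed on batches with an autodiff framework and minimized with respect to the student's parameters; the pure-Python version here only shows how the two terms are put together.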

Check out the blog: superteams.ai/blog/a-hands-o… #AIInfrastructure #ModelCompression #KnowledgeDistillation

superteams.ai

A Hands-on Guide to Knowledge Distillation - Superteams.ai

Compress large AI models for cost-efficient deployment using Knowledge Distillation.

NTCE-KD: Non-Target-Class-Enhanced Knowledge Distillation mdpi.com/1424-8220/24/1… #knowledgedistillation

🌟Visual Intelligence highly cited papers No. 7🌟 Check out this cross-camera self-distillation framework for unsupervised person Re-ID to alleviate the effect of camera variation. 🔗Read more: link.springer.com/article/10.100… #Unsupervisedlearning #Personreid #Knowledgedistillation

Enhanced Knowledge Distillation for Advanced Recognition of Chinese Herbal Medicine mdpi.com/1424-8220/24/5… #knowledgedistillation

🌟Visual Intelligence highly cited papers No. 4🌟 Check out this novel semantic-aware knowledge distillation scheme to fill in the dimension gap between intermediate features of teacher and student. 🔗Read more: link.springer.com/article/10.100… #Knowledgedistillation #Selfattention #llm

#Knowledgedistillation is one of the most underrated shifts in AI. It lets you take the power of large models and transfer it into smaller, specialized ones — faster, cheaper, and easier to control. It’s not just compression. It’s focus. #KnowledgeDistillation #SLMs #AI

Want your smaller language model to punch *way* above its weight? 🥊 This paper introduces DSKD, a clever way to distill knowledge from LLMs *even* if they use different vocabularies! #AI #KnowledgeDistillation

.@soon_svm #SOONISTHEREDPILL 118. soon_svm's knowledge-distillation support can transfer knowledge from a large model to a smaller one. #soon_svm #KnowledgeDistillation

Back when we used to get stuck, we had a teacher who, having more capability, understood the problem better and taught us an easier way to do it. The same can be done in #DeepLearning too, using what is known as #KnowledgeDistillation. 🧵👇

Talking about how we make the most of "knowledge distillation" to level up our labeling process using foundation models at Google for Startups YoloVision Conference @ultralytics 🧠🤖🔍 #AI #Labeling #KnowledgeDistillation #YV23 #ComputerVision

A #MachineLearning model can be compressed by #KnowledgeDistillation to be used on less powerful hardware. A method proposed by a @TsinghuaSIGS team can minimize knowledge loss during the compression of pattern detector models. For more: bit.ly/3X34is0

Assoc. Prof. Wang Zhi’s team from @TsinghuaSIGS won the Best Paper Award at @IEEEorg ICME 2023. Their paper focuses on collaborative data-free #KnowledgeDistillation via multi-level feature sharing, representing the latest developments in #multimedia.

🧠 How do you distill the power of large language models (#LLMs) into smaller, efficient models without losing their reasoning prowess? ✨ Sequence-level #KnowledgeDistillation (KD) is the key. It isn’t just about mimicking outcomes—it’s about transferring the reasoning…

Researchers have proposed a general & effective #knowledgedistillation method for #objectdetection, which helps to transfer the teacher's focal and global knowledge to the student. #machinelearning More at: bit.ly/3UB2swS

Knowledge Distillation in Deep Learning rb.gy/xaraa #KnowledgeDistillation #NaturalLanguageProcessing #TransferLearning #DataDistillation #MachineLearning #ArtificialNeurons #SmartSystems

Our work "Sliding Cross Entropy for Self-Knowledge Distillation" is accepted in CIKM 2022. #CV #Computervision #KnowledgeDistillation #KD #CIKM2022

We introduce a knowledge distillation method for multi-crop, multi-disease detection, creating lightweight models with high accuracy. Ideal for smart agriculture devices. #PlantDiseaseDetection #KnowledgeDistillation #SmartFarming Details: spj.science.org/doi/10.34133/p…

Investigating the effectiveness of state-of-the-art #knowledgedistillation techniques for mapping weeds with drones: “Applying Knowledge Distillation to Improve #WeedMapping With Drones” by G. Castellano, P. De Marinis, G. Vessio. ACSIS Vol. 35 p. 393–400; tinyurl.com/4kzjnn9d

Understanding Language Model Distillation itinai.com/understanding-… #KnowledgeDistillation #AI #ArtificialIntelligence #DistillKit #ArceeAI #ai #news #llm #ml #research #ainews #innovation #artificialintelligence #machinelearning #technology #deeplearning @vlruso

RT CODIR: Train smaller, faster NLP models dlvr.it/RpvMSW #machinelearning #knowledgedistillation #naturallanguageprocessing

A beginner’s guide to #KnowledgeDistillation in #DeepLearning buff.ly/33GA7Qt #fintech #AI #ArtificialIntelligence #MachineLearning @Analyticsindiam

RT Data Distillation for Object Detection dlvr.it/SJxX9J #yolo #deeplearning #knowledgedistillation #computervision
