#schemaevolution search results

Schema changes breaking pipelines? With our Schema Handling Framework, minimize false alerts, reuse up to 95% of code and ensure 100% auditability — making your data pipelines faster, smarter, and more reliable. 📩 [email protected] #Databricks #SchemaEvolution #XponentAI

The next step reads from #S3, flattens the JSON structure, and merges it with #schemaevolution into the property #deltatable.

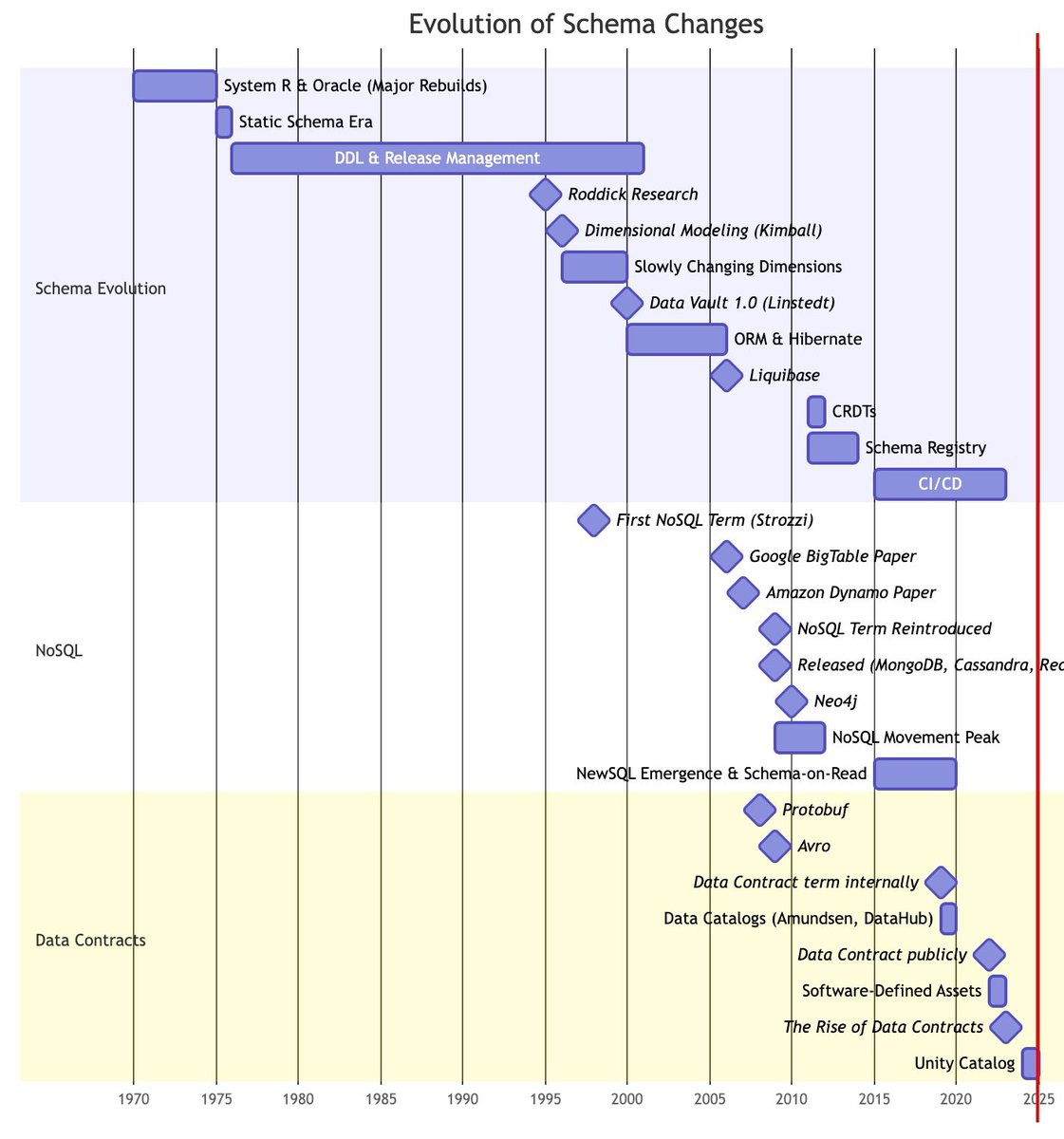

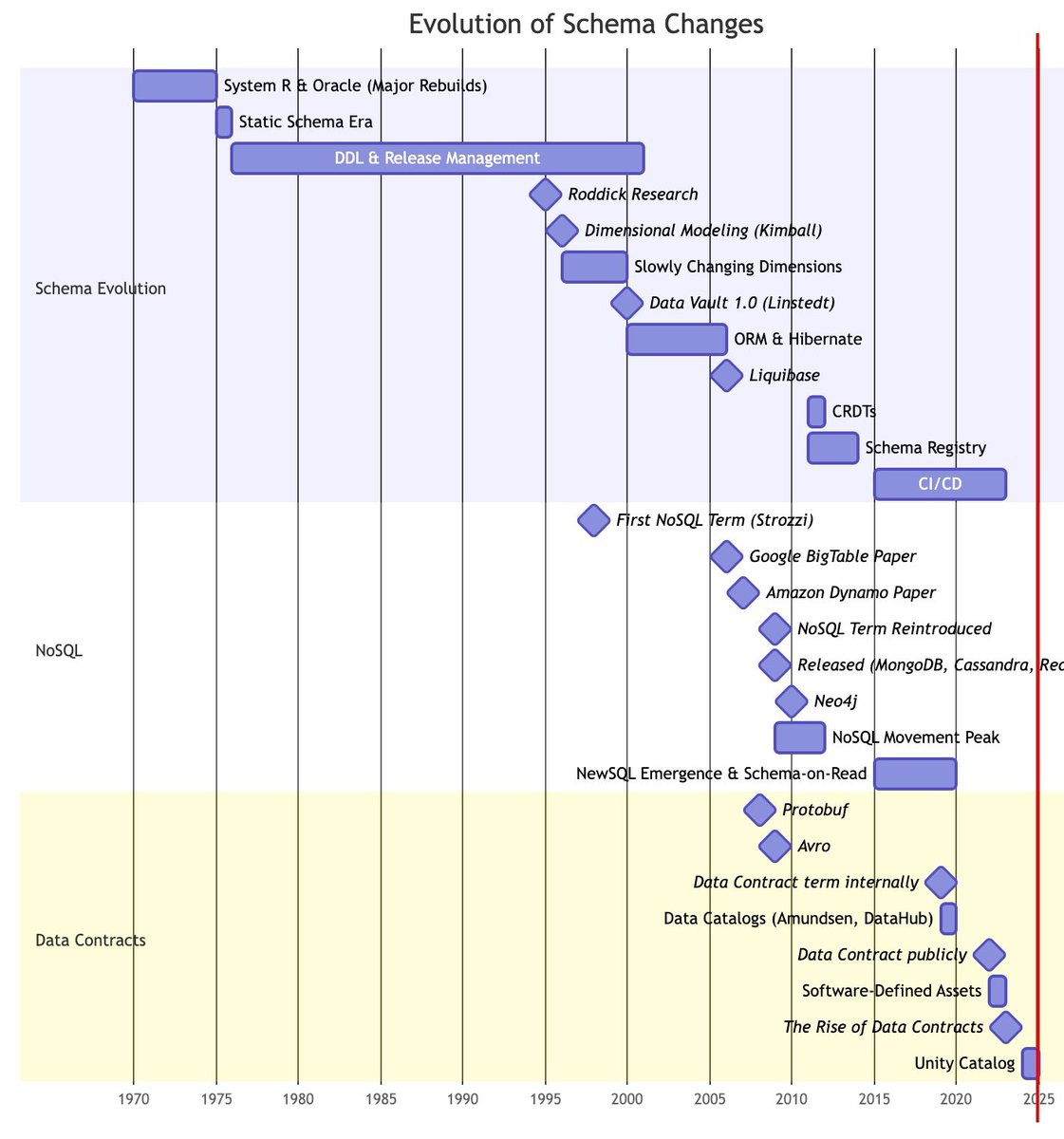

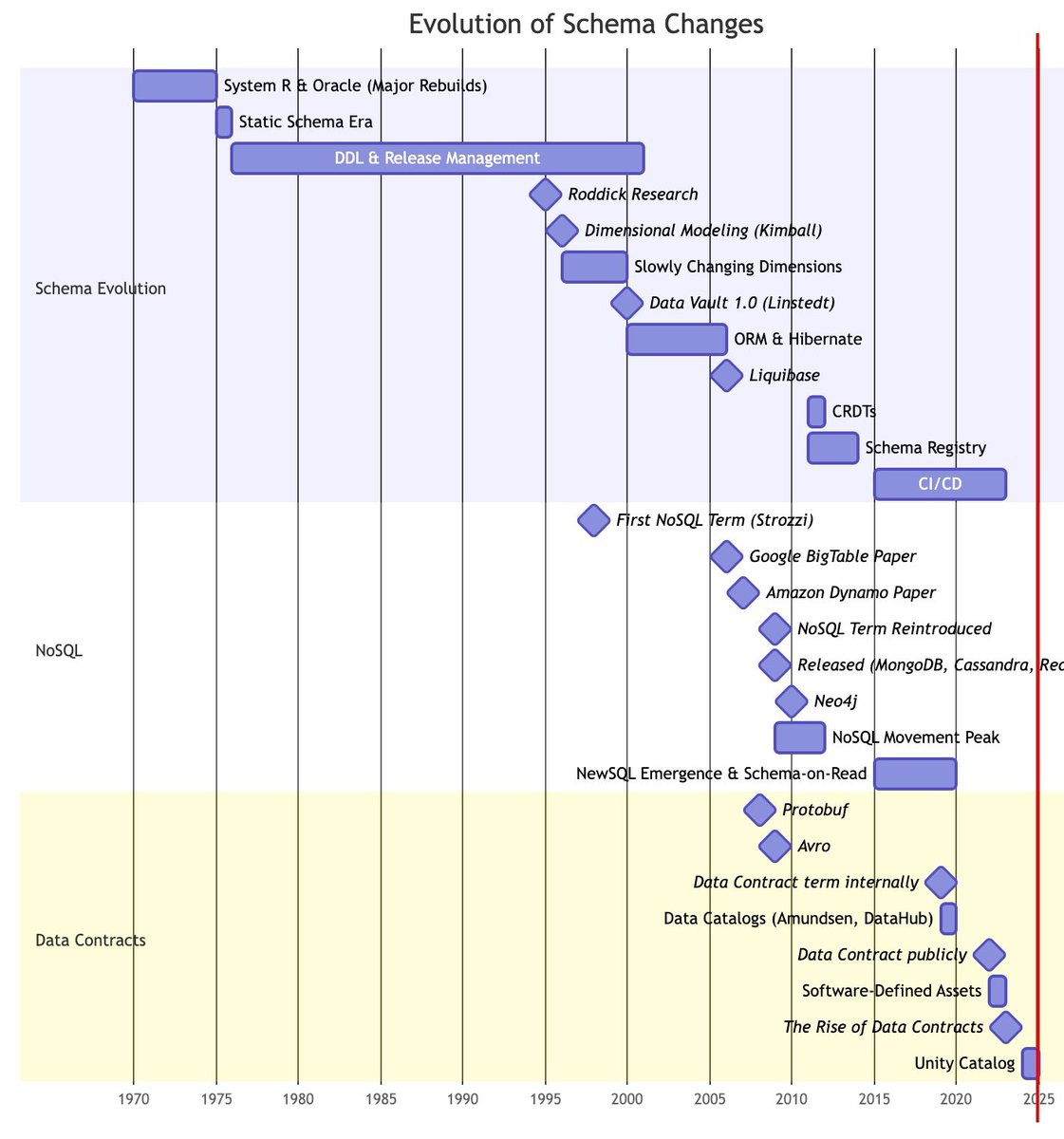

📖 New chapter: Evolution of Schema Change and database change management. > #SchemaEvolution: Managing database changes while preserving integrity > #NoSQL: Flexible schemas; speed over consistency > #DataContracts: Producer-consumer agreements with automated validation…

With Schema Evolution, you're no longer restricted by rigid table structures! #SchemaEvolution empowers you to adapt your table's structure seamlessly as your data evolves. It's particularly useful when adding new columns during data appending operations. Credit: Mohsen Madiouni

Crafting schema migration through small execution steps with custom readers. #SchemaEvolution with @ValentinKasas @functional_jvm

#SchemaEvolution empowers you to adapt your table's structure seamlessly as your data evolves. This is particularly useful when adding new columns during data appending operations. ✅Stay flexible ✅Stay efficient Credit to: Mohsen Madiouni / DataBeans #deltalake #lakehouse
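To make "adding new columns during data appending operations" concrete, here is a minimal pure-Python sketch of the idea. It does not call Delta Lake; the table layout and the `append_with_schema_merge` helper are hypothetical stand-ins. In Delta Lake itself, this behavior is typically switched on per write with the `mergeSchema` option.

```python
def append_with_schema_merge(table, new_rows):
    """Append rows, widening the column list when new columns appear.

    Hypothetical helper: `table` is a dict with "columns" (list of names)
    and "rows" (list of dicts). Existing rows get None for new columns.
    """
    columns = list(table["columns"])
    for row in new_rows:
        for name in row:
            if name not in columns:
                columns.append(name)  # schema evolves: new column is added
    # Rewrite every row against the widened schema, filling gaps with None.
    rows = [{c: r.get(c) for c in columns} for r in table["rows"] + new_rows]
    return {"columns": columns, "rows": rows}


table = {"columns": ["id", "amount"], "rows": [{"id": 1, "amount": 9.5}]}
batch = [{"id": 2, "amount": 3.0, "currency": "EUR"}]

merged = append_with_schema_merge(table, batch)
print(merged["columns"])  # ['id', 'amount', 'currency']
print(merged["rows"][0])  # {'id': 1, 'amount': 9.5, 'currency': None}
```

Older rows are not rewritten with real values; they simply read as null for the new column, which is why appends that only add columns are usually considered safe.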

Learn how to version-code your reporting schema. EOM changed? Just bump version. #SchemaEvolution @IIBA @IIBAToronto @credly #MDMarketInsights #BusinessAnalysis #CapitalMarkets #FinancialServices #TradeFloor #FinanceIndustry #InvestmentAnalysis #DataAnalytics #RiskManagement…

Tweet 3/5 Step 2: Add a field tomorrow → ZERO downtime! Old code skips “vip”. New code sees default false. #Kafka #SchemaEvolution Watch the magic ↓
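The behavior described in this post (old code skips "vip", new code sees the default `false`) can be sketched in plain Python. This is only an illustration of Avro-style schema resolution, not the Avro or Kafka API; the schemas, the `NO_DEFAULT` sentinel and `read_record` are assumptions made up for the example.

```python
from __future__ import annotations

from typing import Any

# Hypothetical schemas: each maps a field name to its default value.
# NO_DEFAULT marks a field that must be present in the data.
NO_DEFAULT = object()

SCHEMA_V1 = {"id": NO_DEFAULT, "name": NO_DEFAULT}
SCHEMA_V2 = {"id": NO_DEFAULT, "name": NO_DEFAULT, "vip": False}


def read_record(record: dict[str, Any], reader_schema: dict[str, Any]) -> dict[str, Any]:
    """Project a record onto the reader's schema."""
    result = {}
    for field, default in reader_schema.items():
        if field in record:
            result[field] = record[field]
        elif default is not NO_DEFAULT:
            result[field] = default  # field absent in old data: use the default
        else:
            raise ValueError(f"missing required field: {field}")
    # Fields the reader does not know about are never copied, so they are skipped.
    return result


new_message = {"id": 1, "name": "Ada", "vip": True}
old_message = {"id": 2, "name": "Bob"}

print(read_record(new_message, SCHEMA_V1))  # {'id': 1, 'name': 'Ada'}
print(read_record(old_message, SCHEMA_V2))  # {'id': 2, 'name': 'Bob', 'vip': False}
```

Because neither reader fails, producers and consumers can be upgraded in either order, which is where the "zero downtime" claim comes from.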

Schemas are a great way to make versioning event-driven systems easier, and @ApacheAvro has the best schema evolution capabilities out there. And, you can use Avro with @NServiceBus too! Check out the samples: docs.particular.net/shape-the-futu… #avro #schemaevolution #eda #serdes

docs.particular.net

NServiceBus and Apache Avro • NServiceBus

Using NServiceBus and Apache Avro.

In data integration, a flexible data format is your ally in navigating schema evolution challenges. Avro, JSON, Parquet, or Protobuf are ideal choices, backed by robust schema evolution support. #DataStrategy #SchemaEvolution #aldefi

#SchemaEvolution is a priority! It is key to ensuring data integrity, minimizing downtime, and maintaining analytics accuracy. Learn more about managing schema evolution in data pipelines: bit.ly/45FAqZF #DataPipeline #SchemaDrift #DataEngineering #DASCA

As data structures evolve over time, managing schema changes becomes critical for data integrity and analysis. I’ll provide more details around schema evolution in data lakes in the next post 👌#dataengineering #schemaevolution

🔄 The Problem of Schema Evolution As systems grow, data formats must adapt. Schema evolution ensures new data fields can be added (or old ones changed) without breaking existing systems. Key for forward and backward compatibility! #SchemaEvolution #SystemDesign
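One way to reason about forward and backward compatibility is as a check on field defaults. The sketch below is a simplification that ignores field types and renames; the `REQUIRED` sentinel, the function names and the sample schemas are hypothetical. It assumes the common definitions: a change is backward compatible if the new schema can read old data, and forward compatible if the old schema can read new data.

```python
from __future__ import annotations

REQUIRED = object()  # sentinel: the field has no default value


def can_read(reader: dict, writer: dict) -> bool:
    """True if data written with `writer` can be read using `reader`.

    Every field the reader expects must either exist in the written data
    or have a default the reader can fall back on.
    """
    return all(
        field in writer or default is not REQUIRED
        for field, default in reader.items()
    )


def backward_compatible(old: dict, new: dict) -> bool:
    return can_read(reader=new, writer=old)  # new code, old data


def forward_compatible(old: dict, new: dict) -> bool:
    return can_read(reader=old, writer=new)  # old code, new data


old = {"id": REQUIRED, "email": REQUIRED}
add_optional = {"id": REQUIRED, "email": REQUIRED, "vip": False}
add_required = {"id": REQUIRED, "email": REQUIRED, "country": REQUIRED}
drop_required = {"id": REQUIRED}

print(backward_compatible(old, add_optional), forward_compatible(old, add_optional))    # True True
print(backward_compatible(old, add_required), forward_compatible(old, add_required))    # False True
print(backward_compatible(old, drop_required), forward_compatible(old, drop_required))  # True False
```

Adding a field with a default is the only one of the three changes that passes both checks.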

#schemaevolution, #db4o has a refactoring API, http://tinyurl.com/yemaup6, would love to have something similar in Terracotta!?

This article focuses on a possible way to handle schema evolution in the GCS-to-BigQuery ingestion pattern via the BigQuery API client library. #schemaevolution #gcpcloud #datamesh #cloud #bigquery #gcs google.smh.re/26ps

In which I take another sneak peek at the next #Druid release, this time around schema inference! #realtimeanalytics #schemaevolution lnkd.in/ex29Vwpm

This article focuses on a possible way to handle schema evolution in the GCS-to-BigQuery ingestion pattern via the BigQuery API client library. #schemaevolution #gcpcloud #datamesh #cloud #bigquery #gcs google.smh.re/26TU

"Navigate schema evolution in message queues with ease! Learn from Ivelin Yanev's insights on adapting to changing needs and maintaining operational continuity. #SchemaEvolution #MessageQueues #IvelinYanev" ift.tt/CuGU9W6

dev.to

The Challenge of Evolving Schemas in Message Queues

In systems built on message queues like RabbitMQ or Pub/Sub, schema evolution is inevitable. It...

Watch it now on demand: Diving into #DeltaLake - Enforcing and Evolving the Schema youtube.com/watch?v=tjb10n… #SchemaEnforcement #SchemaEvolution

Managing Schema Evolution in MongoDB WhatsApp Us: +91 988-620-5050 Email: [email protected] Website : icertglobal.com Our Blog: icertglobal.com/managing-schem… #mongodb #schemaevolution #databasemanagement #nosql #datamanagement #techtips #backenddevelopment #mongodbtips

📝 #Blogged: I wrote this article to raise awareness of the importance of #SchemaEvolution for #realtime data pipelines built on top of #ChangeDataCapture. It discusses how to change data models without breaking compatibility with downstream consumers. decodable.co/blog/schema-ev…

decodable.co

Schema Evolution in Change Data Capture Pipelines

The importance of schema evolution for real-time data pipelines and how to modify data models without breaking compatibility with downstream consumers.

I recall a project where we didn't account for schema evolution. When the first major change came, half our pipelines broke. From then on, I’ve always planned for backward-compatible schema changes. It’s non-negotiable. 🧩 #SchemaEvolution #DataEngineering

Unlock the power of #SchemaEvolution in #Kafka. Discover best practices for resilient #StreamingApplications. Link: medium.com/@josh.magady/m…

In about 30 minutes, we're kicking off our next virtual #dataMinds evening session. @UstDoesTech is joining us and will be bringing tales from the trenches about #schemaEvolution in #Lakehouse. You can still register: dataminds.be/schema-evoluti…

dataminds.be

Don’t be afraid of change! Learn about schema evolution and watch your data grow and evolve

👉Schema Evolution 🔄 Parquet supports schema evolution, which means you can change the structure of your data without breaking existing queries. This flexibility is a big win for big data workflows that evolve over time. 🛠️ #SchemaEvolution #dataengineering #DataScience

Are you confused about the difference between the "mergeSchema" option and the "autoMerge" configuration in Delta Lake schema evolution? Let's break it down! 🧵👇🏻 1/8 #DeltaLake #SchemaEvolution #Spark
