#visionlanguagemodel search results

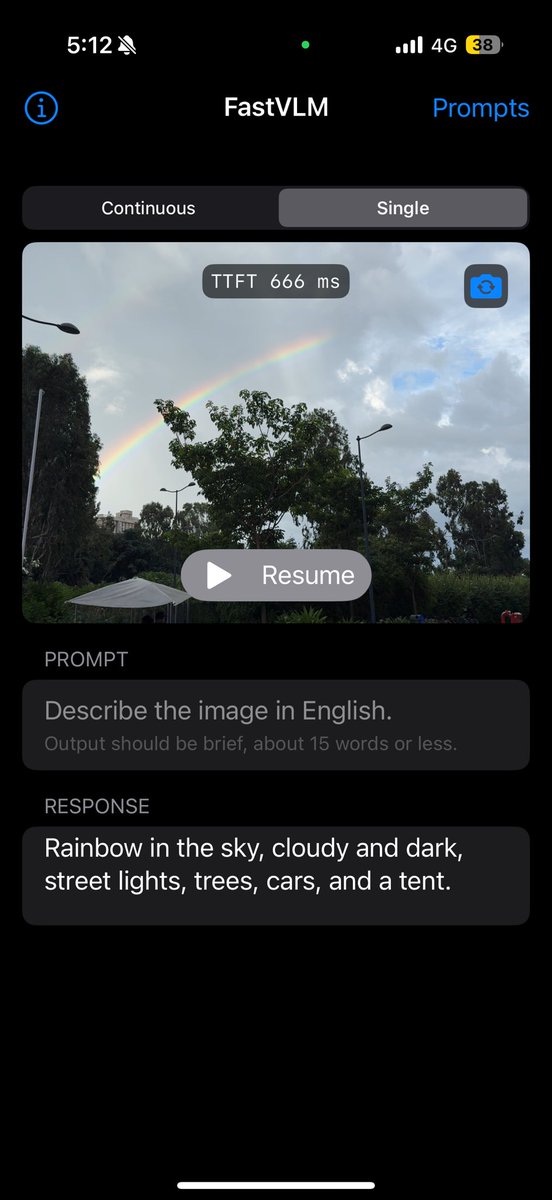

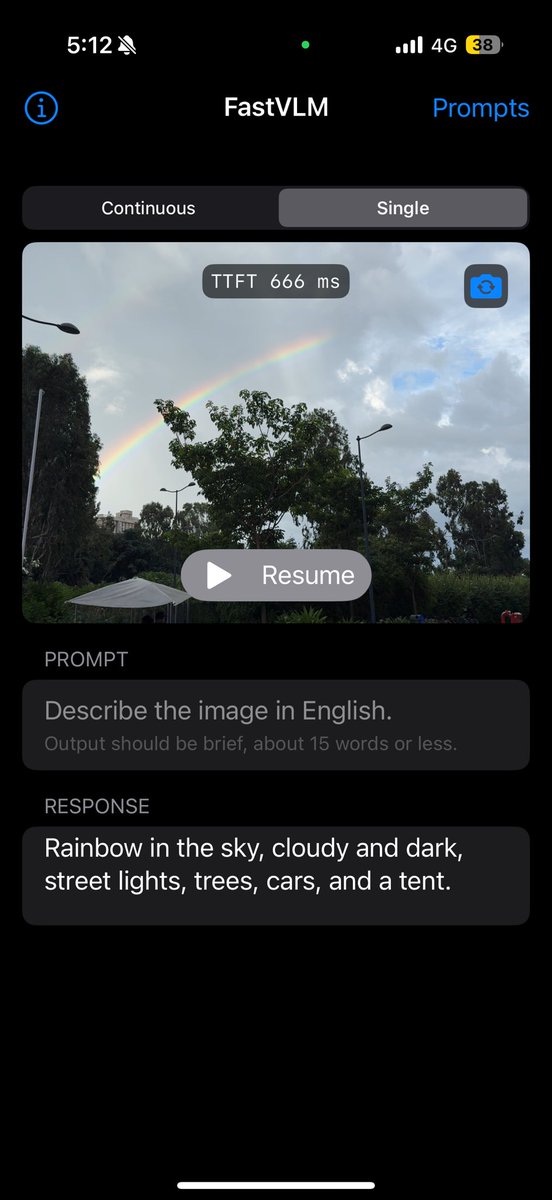

👀 Behind the scenes of VLMs: eyes, interpreter, brain (easy-to-understand edition) 💡 Already usable in practice for many tasks: summarizing slides, reading documents, finding information in images, or serving as an assistant for real-time analysis of image and text data. aideta.com/blog/arin13qgl… #AI #VisionLanguageModel #AITransformation

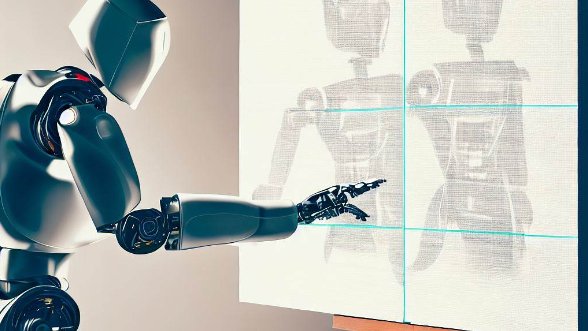

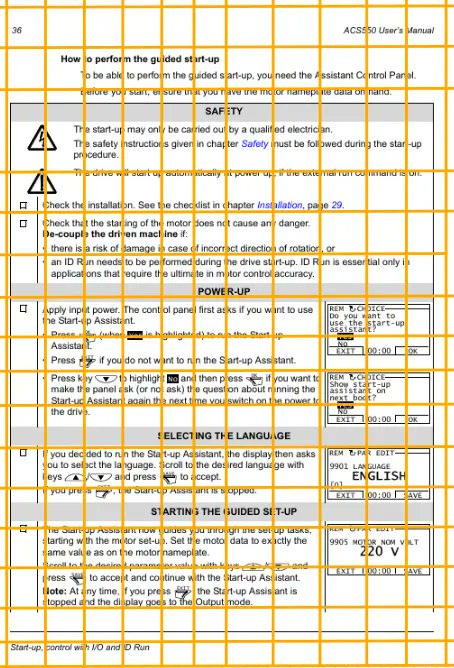

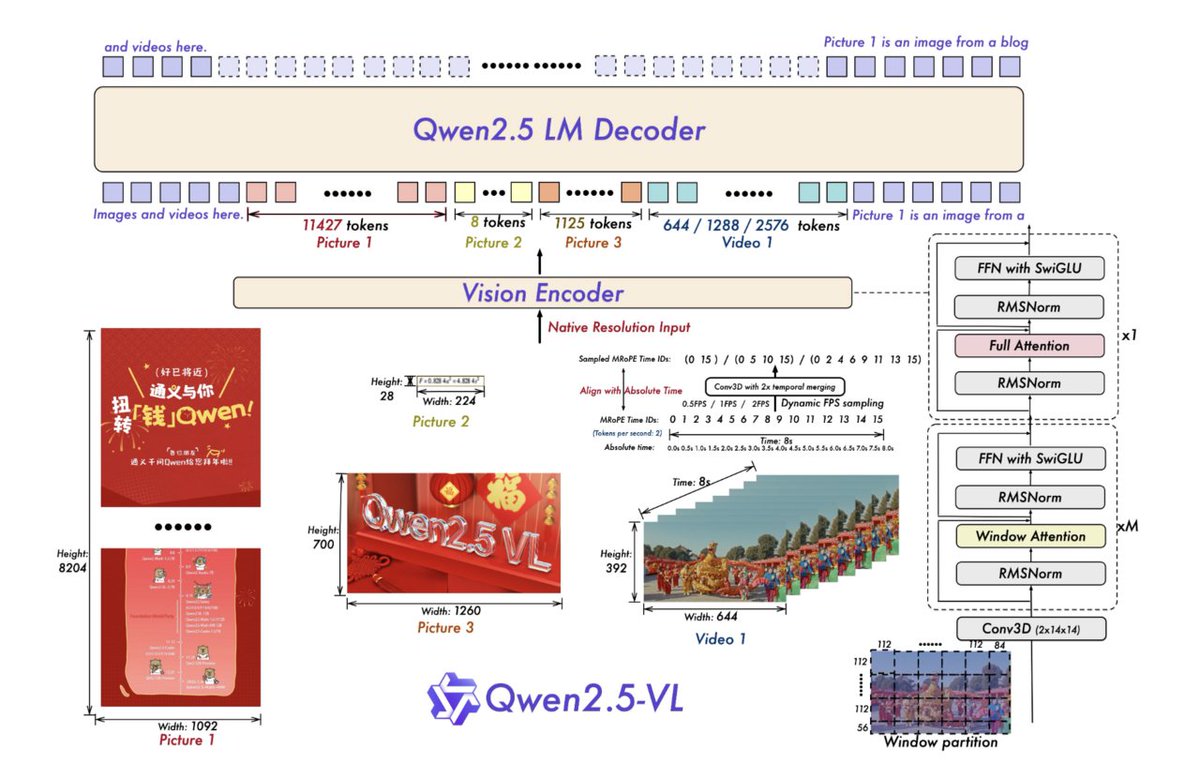

We are excited to be among the very first groups selected by @NVIDIARobotics to test the new @NVIDIA #Thor. We have managed to run a #VisionLanguageModel (Qwen 2.5 VL) for semantic understanding of the environment, along with a monocular depth model (#DepthAnything v2), for safe…

ByteDance Unveils Seed3D 1.0 for AI-Driven 3D Simulation Read More: lnkd.in/gd7CG6HM @BytedanceTalk #VisionLanguageModel #RoboticManipulation #MagicArticulate #DigitalTwinSystems

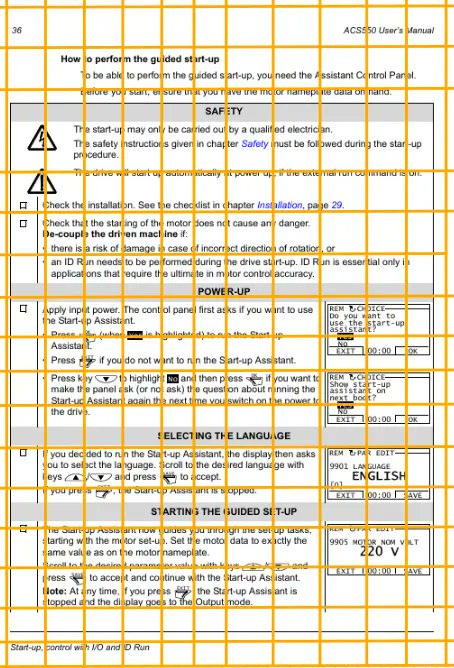

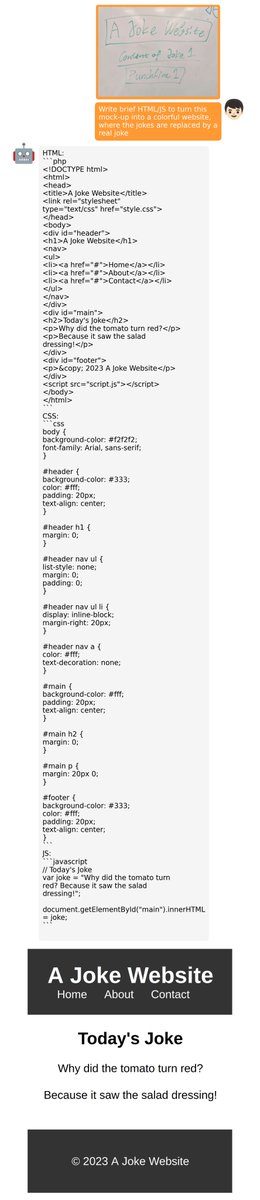

The 32×32 Patch Grid Why does ColPali “see” so well? Each page is divided into patch grids—so it knows exactly where an image ends and text begins. That local + global context means no detail is missed, from small icons to big headers. #colpali #visionlanguagemodel
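
The patch grid described in the post above can be illustrated with a small sketch. It is not ColPali's actual code: the `patch_grid` helper is hypothetical, and the 448×448 page size is an assumption chosen so that a 32×32 grid gives 14-pixel patches. It only shows how a page image tiles into 1,024 patch boxes that a vision encoder could embed one by one.

```python
def patch_grid(width, height, rows=32, cols=32):
    """Tile a width x height page into rows x cols patch boxes.

    Hypothetical helper for illustration only. Each entry is
    (row, col, left, top, right, bottom) in pixel coordinates.
    """
    boxes = []
    for r in range(rows):
        top = r * height // rows
        bottom = (r + 1) * height // rows
        for c in range(cols):
            left = c * width // cols
            right = (c + 1) * width // cols
            boxes.append((r, c, left, top, right, bottom))
    return boxes


# Assumed page size of 448 x 448 pixels (not stated in the post).
boxes = patch_grid(448, 448)
print(len(boxes))  # 1024 patches in a 32 x 32 grid
print(boxes[0])    # (0, 0, 0, 0, 14, 14)
```

Because every patch keeps its row and column index, a match on one patch can be traced back to a specific region of the page, which is the "local + global context" idea the post refers to.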

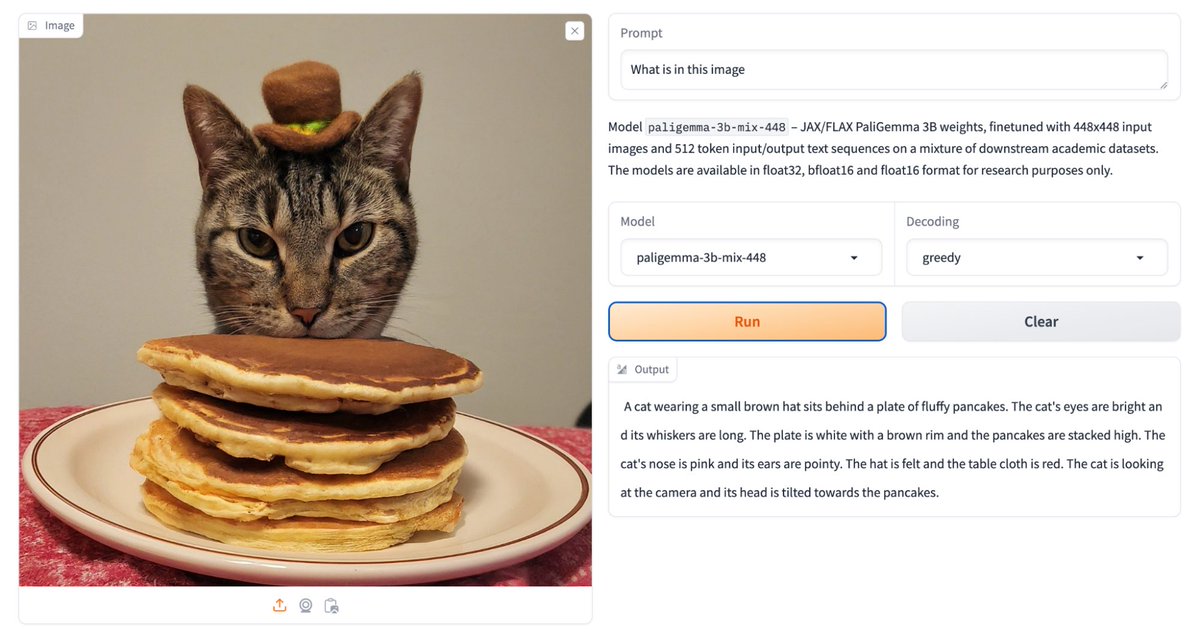

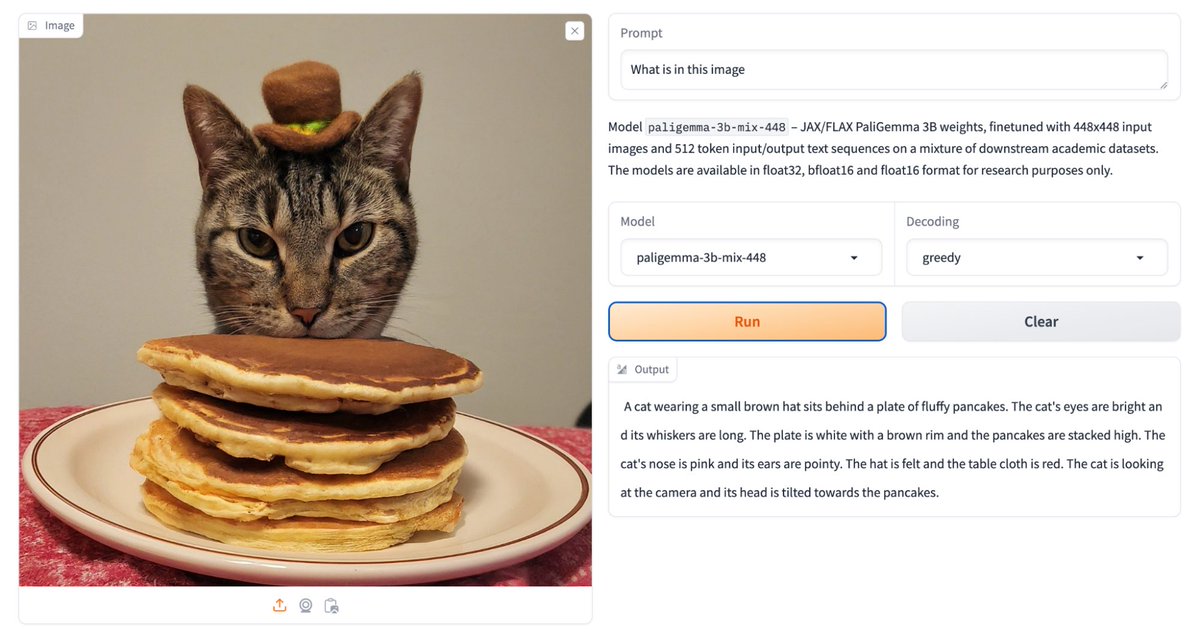

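Google has released PaliGemma, a new vision-language model that takes image and text inputs and outputs text. PaliGemma comes in three types (pretrained models, mix models, and fine-tuned models) and supports capabilities including image captioning, visual question answering, object detection, and referring segmentation. #GoogleAI #PaliGemma #VisionLanguageModel huggingface.co/blog/paligemma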

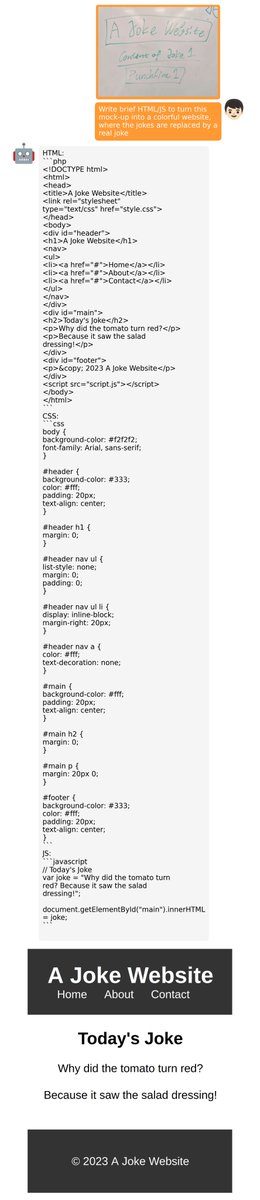

#UITARS Desktop: The Future of Computer Control through Natural Language 🖥️ 🎯 #ByteDance introduces GUI agent powered by #VisionLanguageModel for intuitive computer control Code: lnkd.in/eNKasq56 Paper: lnkd.in/eN5UPQ6V Models: lnkd.in/eVRAwA-9 #ai 🧵 ↓

2/ 🎯 MiniGPT-4 empowers image description generation, story writing, problem-solving, and more! 💻 Open source availability fuels innovation and collaboration. ✨ The future of vision-language models is here! minigpt-4.github.io #AI #MiniGPT4 #VisionLanguageModel

Save time QCing label quality with @Labellerr1's new feature and do it 10X faster. See the demo below. #qualitycontrol #imagelabeling #visionlanguagemodel #visionai

5/ 🚀 MiniGPT-4 is a game-changer in the field of vision-language models. 🔥 Its impressive performance and advanced multi-modal capabilities are propelling AI to new frontiers. #MiniGPT4 #VisionLanguageModel #AI #innovation nobraintech.com/2023/06/minigp…

nobraintech.com

MiniGPT-4: Empowering Vision and Language with Open Source Brilliance

In the ever-evolving landscape of artificial intelligence (AI), the latest advancements have taken us into uncharted territory. The release...

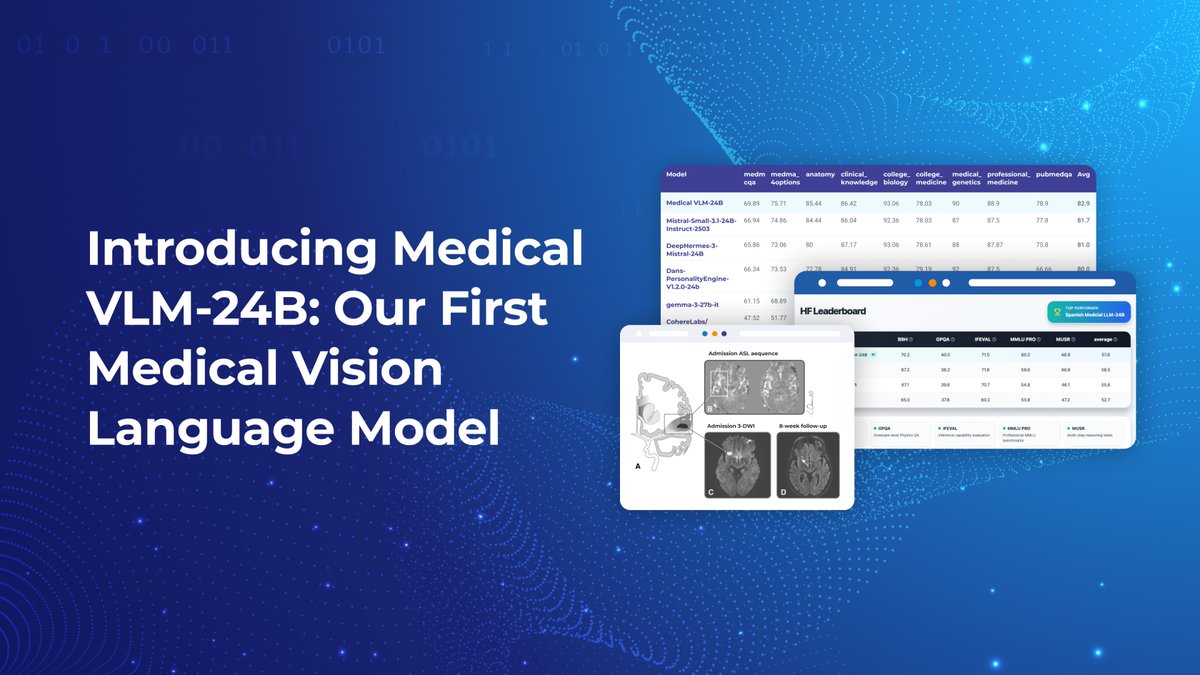

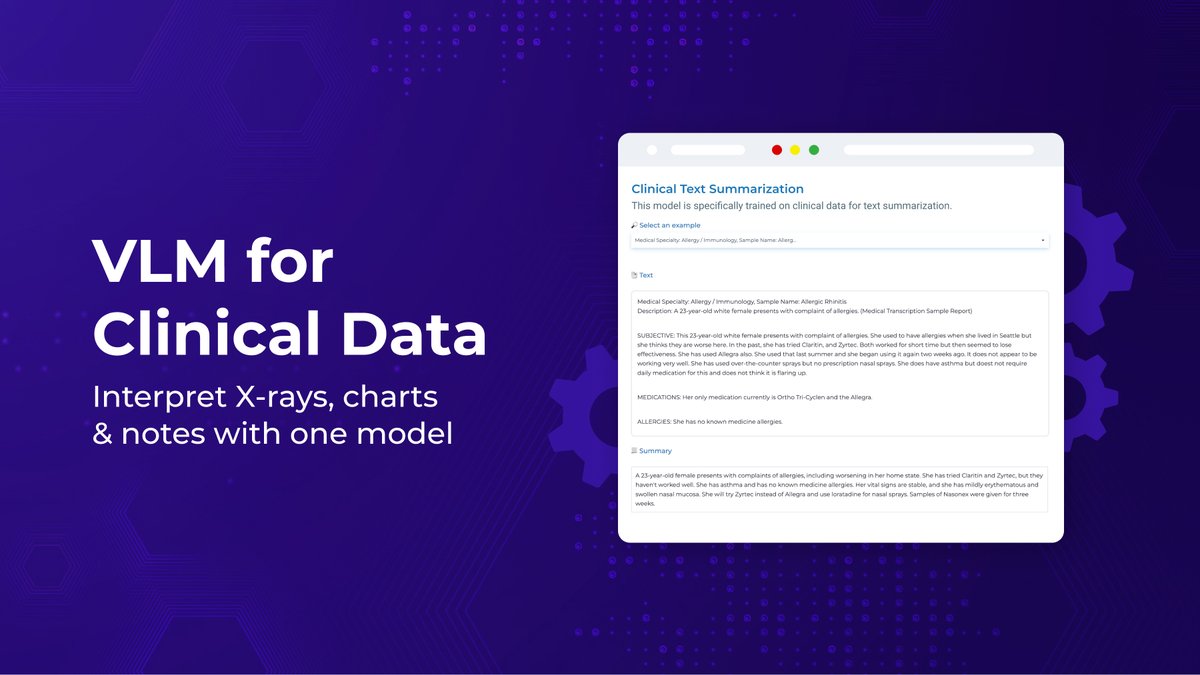

Explore the model on AWS Marketplace: hubs.li/Q03mQ5qT0 #MedicalAI #VisionLanguageModel #HealthcareAI #ClinicalDecisionSupport #RadiologyAI #GenerativeAI #JohnSnowLabs #LLM #RAG #MedicalImaging #NLPinHealthcare

Read the full article: hubs.li/Q03F78zL0 #MedicalAI #VisionLanguageModel #RadiologyAI #HealthcareAI #GenerativeAI #MedicalImaging #NLPinHealthcare #JohnSnowLabs

Alibaba's QWEN 2.5 VL: A Vision Language Model That Can Control Your Computer #alibaba #qwen #visionlanguagemodel #AI #viral #viralvideos #technology #engineering #trending #tech #engineer #reelsvideo contentbuffer.com/issues/detail/…

Discover #GPT4RoI, the #VisionLanguageModel that supports multi-region spatial instructions for detailed region-level understanding. #blogger #bloggers #bloggingcommunity #WritingCommunity #blogs #blogposts #LanguageModels #AI #MachineLearning #AIModel socialviews81.blogspot.com/2023/07/gpt4ro…

Seeing #VisionLanguageModel with Qwen 2.5 VL + DepthAnything v2 running live on Jetson Thor is next-level for robotics. Fusing semantic/context with real-time depth makes agile, adaptive bots possible. What benchmarks should we watch for? #AI

This 6-hour video from Umar Jamil (@hkproj) has to be the finest video on building a VLM from scratch. Next goal: fine-tuning on image segmentation or object detection. youtube.com/watch?v=vAmKB7… #LargeLanguageModel #VisionLanguageModel

youtube.com

Coding a Multimodal (Vision) Language Model from scratch in PyTorch...

1/ ⚙️ Efficient training with only a single linear projection layer. 🌐 Promising results from finetuning on high-quality, well-aligned datasets. 📈 Comparable performance to the impressive GPT-4 model. #MiniGPT4 #VisionLanguageModel #MachineLearning

Had a fantastic time at the event where @ritwik_raha delivered an insightful session on PaliGemma! It was very interactive and informative. #Paligeema #google #visionlanguagemodel #AI

Qwen AI Releases Qwen2.5-VL: A Powerful Vision-Language Model for Seamless Computer Interaction #QwenAI #VisionLanguageModel #AIInnovation #TechForBusiness #MachineLearning itinai.com/qwen-ai-releas…

buff.ly/41bdyNy New, open-source AI vision model emerges to take on ChatGPT — but it has issues #AIImpactTour #NousHermes2Vision #VisionLanguageModel

See how domain specialization transforms medical reasoning: hubs.li/Q03nRpCk0 #MedicalAI #VisionLanguageModel #HealthcareAI #ClinicalDecisionSupport #GenerativeAI #RadiologyAI #LLM #NLPinHealthcare

SpatialRGPT.com available for sale #SpatialRGPT is an advanced region-level #VisionLanguageModel (#VLM) designed to comprehend both two-dimensional and three-dimensional spatial configurations. It has the capability to analyze any form of region proposal, such as…

Comparing image generation with various Vision VLM models, by Ollama (Llama 3.2, llava-llama3, llama-phi) <ComfyUI workflow included> URL: blog.naver.com/beyond-zero/22… #ComfyUI #Llama #visionlanguagemodel #VLM #LLM #이미지생성형

Clinical notes, X-rays, charts—our new VLM interprets them all. See the model in AWS Marketplace: 🔗 hubs.li/Q03sfVDZ0 #VisionLanguageModel #RadiologyAI #ClinicalAI #MedicalImaging #GenerativeAI
