#visionlanguagemodels ผลการค้นหา

Hugging Face FineVision: The Ultimate Multimodal Dataset for Vision-Language Model Training #FineVision #VisionLanguageModels #HuggingFace #AIResearch #MultimodalDataset itinai.com/hugging-face-f… Understanding the Impact of FineVision on Vision-Language Models Hugging Face has …

🚀✨ Exciting Publication from @UrbanAI_Lab The paper “Sparkle: Mastering Basic Spatial Capabilities in Vision Language Models Elicits Generalization to Spatial Reasoning” has been accepted to EMNLP 2025! Link: arxiv.org/pdf/2410.16162 #UrbanAI #VisionLanguageModels

Read here: hubs.li/Q03Fs2V30 #MedicalAI #VisionLanguageModels #HealthcareAI #MedicalImaging #ClinicalDecisionSupport #GenerativeAI

🚀 Exciting news! PaddleOCR-VL has rocketed to #1 on @huggingface Trending in just 16 hours! Dive in: huggingface.co/PaddlePaddle/P… #OCR #AI #VisionLanguageModels

1/ 🗑️ in, 🗑️ out With advances in #VisionLanguageModels, there is growing interest in automated #RadiologyReporting. It's great to see such high research interest, BUT... 🚧 Technique seems intriguing, but the figures raise serious doubts about this paper's merit. 🧵 👇

Last week, I gave an invited talk at the 1st workshop on critical evaluation of generative models and their impact on society at #ECCV2024, focusing on unmasking and tackling bias in #VisionLanguageModels. Thanks to the organizers for the invitation!

Say goodbye to manual data labeling and hello to instant insights! Our new #VisionLanguageModels can extract features from aerial images using just simple prompts. Simply upload an image and ask a question such as "What do you see?" Learn more: ow.ly/FsbV50WKnC3

New research reveals a paradigm-shifting approach to data curation in vision-language models, unlocking their intrinsic capabilities for more accurate and efficient AI understanding. A big step forward in bridging visual and textual data! 🤖📊 #AI #VisionLanguageModels

Apple’s FastVLM: 85x Faster Hybrid Vision Encoder Revolutionizing AI Models #FastVLM #VisionLanguageModels #AIInnovation #MultimodalProcessing #AppleTech itinai.com/apples-fastvlm… Apple has made a significant leap in the field of Vision Language Models (VLMs) with the introducti…

Did you know most vision-language models (like Claude, OpenAI, Gemini) totally suck at reading analog clocks ⏰? (Except Molmo—it’s actually trained for that) #AI #MachineLearning #VisionLanguageModels #vibecoding

Alhamdulillah! Thrilled to share that our work "O-TPT" has been accepted at #CVPR2025! Big thanks to my supervisor and co-authors for the support! thread(1/n) #MachineLearning #VisionLanguageModels #CVPR2025

Exploring the limitations of Vision-Language Models (VLMs) like GPT-4V in complex visual reasoning tasks. #AI #VisionLanguageModels #DeductiveReasoning

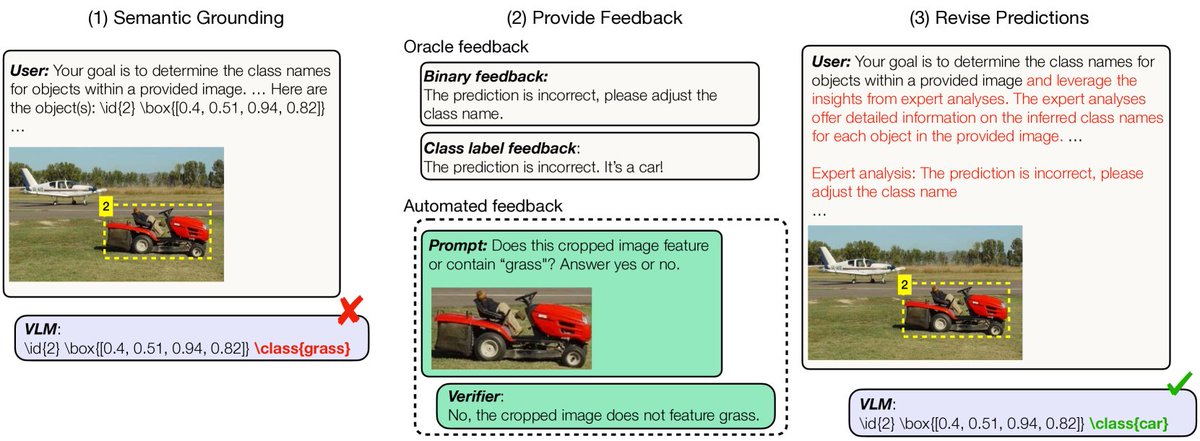

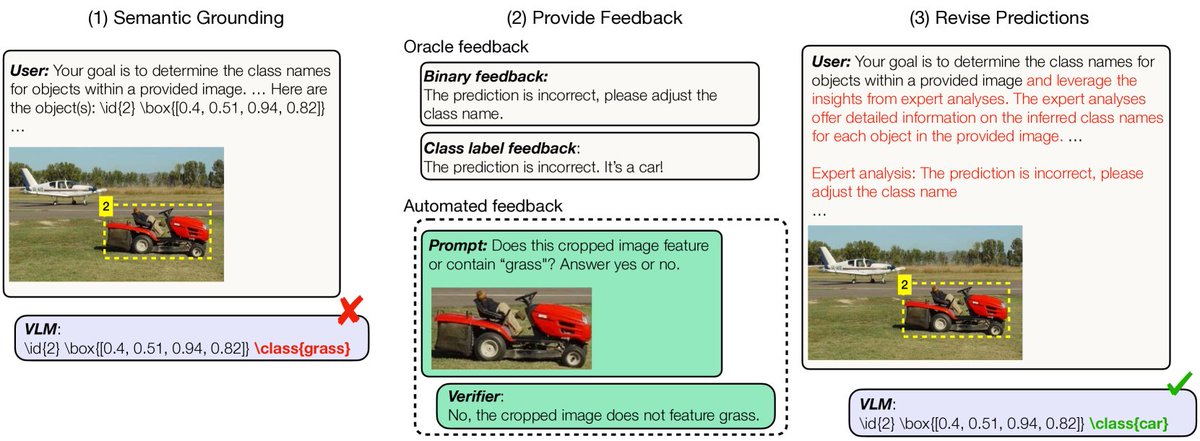

1/5 Can feedback improve semantic grounding in large vision-language models? A recent study delves into this question, exploring the potential of feedback in enhancing the alignment between visual and textual representations. #AI #VisionLanguageModels

Thrilled to share that we have two papers accepted at #CVPR2025! 🚀 A big thank you to all the collaborators for their contributions. Stay tuned for more updates! Titles in the thread (1/n) #CVPR #VisionLanguageModels #ModelCalibration #EarthObservation

Read more: hubs.li/Q03C0tJY0 #RadiologyAI #VisionLanguageModels #MedicalImaging #ClinicalAI #HealthcareAI #GenerativeAI #JohnSnowLabs

1/5 BRAVE: A groundbreaking approach to enhancing vision-language models (VLMs)! By combining features from multiple vision encoders, BRAVE creates a more versatile and robust visual representation. #AI #VisionLanguageModels

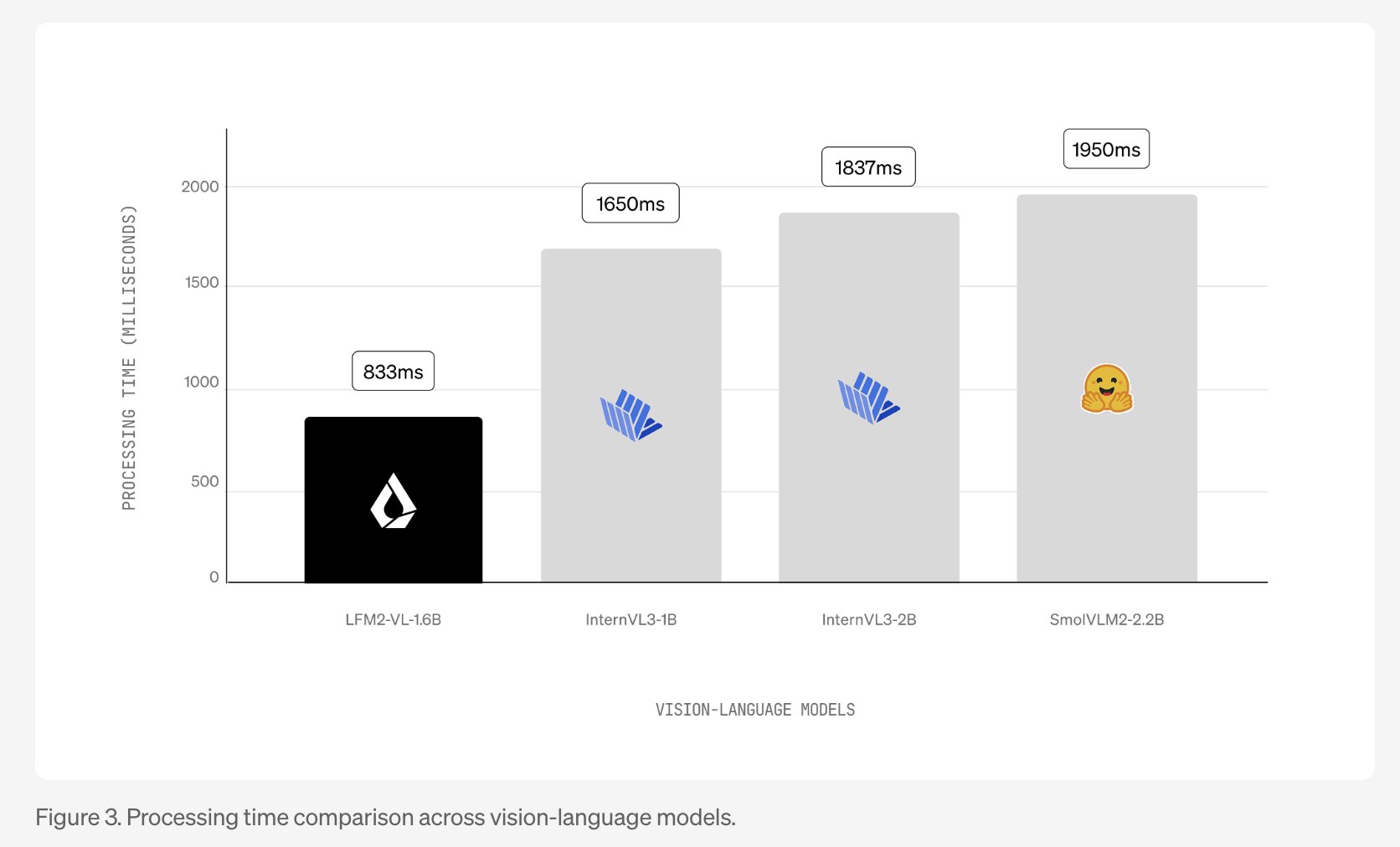

Exciting news from Liquid AI! 🚀 Introducing LFM2-VL: super-fast, open-weight vision-language models perfect for low-latency, on-device deployment. Revolutionizing AI for smartphones, laptops, wearables, and more! #AI #VisionLanguageModels marktechpost.com/2025/08/20/liq…

Exploring the capabilities of multimodal LLMs in visual network analysis. #LargeLanguageModels #VisualNetworkAnalysis #VisionLanguageModels

Investigating vision-language models on Raven's Progressive Matrices showcases gaps in visual deductive reasoning. #VisualReasoning #DeductiveReasoning #VisionLanguageModels

Introducing a comprehensive benchmark and large-scale dataset to evaluate and improve LVLMs' abilities in multi-turn and multi-image conversations. #DialogUnderstanding #VisionLanguageModels #MultiImageConversations

2/4 The score is computed in three stages: baseline accuracy, degradation under noise, degradation under crafted attacks, then blended with tunable weights w₁ + w₂ = 1 to reflect specific risk profiles. #VisionLanguageModels

🚀 Exciting news! PaddleOCR-VL has rocketed to #1 on @huggingface Trending in just 16 hours! Dive in: huggingface.co/PaddlePaddle/P… #OCR #AI #VisionLanguageModels

A key challenge for VLMs is "grounding" - correctly linking text to visual elements. The latest research uses techniques like bounding box annotations and negative captioning to teach models to see and understand with greater accuracy. #DeepLearning #AI #VisionLanguageModels

💻 We have open-sourced the code at github.com/ServiceNow/Big… 🙌 This was a collaboration effort between @ServiceNowRSRCH , @Mila_Quebec , and @YorkUniversity. #COLM2025 #AI #VisionLanguageModels #Charts #BigCharts

🚀✨ Exciting Publication from @UrbanAI_Lab The paper “Sparkle: Mastering Basic Spatial Capabilities in Vision Language Models Elicits Generalization to Spatial Reasoning” has been accepted to EMNLP 2025! Link: arxiv.org/pdf/2410.16162 #UrbanAI #VisionLanguageModels

New research reveals a paradigm-shifting approach to data curation in vision-language models, unlocking their intrinsic capabilities for more accurate and efficient AI understanding. A big step forward in bridging visual and textual data! 🤖📊 #AI #VisionLanguageModels

This paper summarizes a comprehensive framework for typographic attacks, proving their effectiveness and transferability against Vision-LLMs like LLaVA - hackernoon.com/future-of-ad-s… #visionlanguagemodels #visionllms

This article presents an empirical study on the effectiveness and transferability of typographic attacks against major Vision-LLMs using AD-specific datasets. - hackernoon.com/empirical-stud… #visionlanguagemodels #visionllms

This article explores the physical realization of typographic attacks, categorizing their deployment into background and foreground elements - hackernoon.com/foreground-vs-… #visionlanguagemodels #visionllms

Hugging Face FineVision: The Ultimate Multimodal Dataset for Vision-Language Model Training #FineVision #VisionLanguageModels #HuggingFace #AIResearch #MultimodalDataset itinai.com/hugging-face-f… Understanding the Impact of FineVision on Vision-Language Models Hugging Face has …

Read here: hubs.li/Q03Fs2V30 #MedicalAI #VisionLanguageModels #HealthcareAI #MedicalImaging #ClinicalDecisionSupport #GenerativeAI

Apple’s FastVLM: 85x Faster Hybrid Vision Encoder Revolutionizing AI Models #FastVLM #VisionLanguageModels #AIInnovation #MultimodalProcessing #AppleTech itinai.com/apples-fastvlm… Apple has made a significant leap in the field of Vision Language Models (VLMs) with the introducti…

Say goodbye to manual data labeling and hello to instant insights! Our new #VisionLanguageModels can extract features from aerial images using just simple prompts. Simply upload an image and ask a question such as "What do you see?" Learn more: ow.ly/FsbV50WKnC3

1/ 🗑️ in, 🗑️ out With advances in #VisionLanguageModels, there is growing interest in automated #RadiologyReporting. It's great to see such high research interest, BUT... 🚧 Technique seems intriguing, but the figures raise serious doubts about this paper's merit. 🧵 👇

Read more: hubs.li/Q03C0tJY0 #RadiologyAI #VisionLanguageModels #MedicalImaging #ClinicalAI #HealthcareAI #GenerativeAI #JohnSnowLabs

1/5 Can feedback improve semantic grounding in large vision-language models? A recent study delves into this question, exploring the potential of feedback in enhancing the alignment between visual and textual representations. #AI #VisionLanguageModels

1/5 BRAVE: A groundbreaking approach to enhancing vision-language models (VLMs)! By combining features from multiple vision encoders, BRAVE creates a more versatile and robust visual representation. #AI #VisionLanguageModels

🚀 Exciting news! PaddleOCR-VL has rocketed to #1 on @huggingface Trending in just 16 hours! Dive in: huggingface.co/PaddlePaddle/P… #OCR #AI #VisionLanguageModels

Vision Language Models: Learning From Text & Images Together Read more on govindhtech.com/vision-languag… #VisionLanguageModels #LanguageModels #AI #generativemodels #HuggingFaceHub #OpenVLM #News #Technews #Technology #Technologynews #Technologytrends #Govindhtech @TechGovind70399

Exploring the limitations of Vision-Language Models (VLMs) like GPT-4V in complex visual reasoning tasks. #AI #VisionLanguageModels #DeductiveReasoning

Exploring the capabilities of multimodal LLMs in visual network analysis. #LargeLanguageModels #VisualNetworkAnalysis #VisionLanguageModels

From Pixels to Words -- Towards Native Vision-Language Primitives at Scale 👥 Haiwen Diao, Mingxuan Li, Silei Wu et al. #VisionLanguageModels #AIResearch #DeepLearning #OpenSource #ComputerVision 🔗 trendtoknow.ai

📢 Call for Papers — JBHI Special Issue: “Transparent Large #VisionLanguageModels in Healthcare” Seeking research on: ✔️ Explainable VLMs ✔️ Medical image-text alignment ✔️ Fair & interpretable AI 📅 Deadline: Sep 30, 2025 🔗 Info: tinyurl.com/4a7d69t2

Thrilled to share that we have two papers accepted at #CVPR2025! 🚀 A big thank you to all the collaborators for their contributions. Stay tuned for more updates! Titles in the thread (1/n) #CVPR #VisionLanguageModels #ModelCalibration #EarthObservation

Alhamdulillah! Thrilled to share that our work "O-TPT" has been accepted at #CVPR2025! Big thanks to my supervisor and co-authors for the support! thread(1/n) #MachineLearning #VisionLanguageModels #CVPR2025

Investigating vision-language models on Raven's Progressive Matrices showcases gaps in visual deductive reasoning. #VisualReasoning #DeductiveReasoning #VisionLanguageModels

Mini-Gemini, an innovative #framework from CUHK, optimizes #VisionLanguageModels with a dual-encoder, expanded data, and #LargeLanguageModels. #AI #ComputerVision arxiv.org/abs/2403.18814

Pixel-SAIL: A Revolutionary Single-Transformer Model for Pixel-Level Vision-Language Tasks #PixelSAIL #VisionLanguageModels #ArtificialIntelligence #MachineLearning #Innovation itinai.com/pixel-sail-a-r…

Introducing a comprehensive benchmark and large-scale dataset to evaluate and improve LVLMs' abilities in multi-turn and multi-image conversations. #DialogUnderstanding #VisionLanguageModels #MultiImageConversations

Something went wrong.

Something went wrong.

United States Trends

- 1. #UFC321 112K posts

- 2. Gane 126K posts

- 3. Aspinall 113K posts

- 4. Jon Jones 10.4K posts

- 5. Ryan Williams N/A

- 6. Liverpool 168K posts

- 7. Mizzou 4,840 posts

- 8. South Carolina 11.1K posts

- 9. Ty Simpson 1,486 posts

- 10. Mateer 5,894 posts

- 11. Matt Zollers N/A

- 12. Aaron Henry N/A

- 13. Iowa 16.2K posts

- 14. Kirby Moore N/A

- 15. June Lockhart 4,735 posts

- 16. Slot 110K posts

- 17. Pribula 1,156 posts

- 18. Arch 18.1K posts

- 19. Sark 4,322 posts

- 20. Brentford 71.6K posts