#visionlanguagemodels search results

TRACE: A Framework for Analyzing and Enhancing Stepwise Reasoning in Vision-Language Models Preprint: TRACE introduces a framework to diagnose reasoning errors in vision-lan… arxiv.org/abs/2512.05943 #AI #VisionLanguageModels #MachineLearning #Preprint #Arxiv #ScienceNews

1/ 🗑️ in, 🗑️ out With advances in #VisionLanguageModels, there is growing interest in automated #RadiologyReporting. It's great to see such high research interest, BUT... 🚧 The technique seems intriguing, but the figures raise serious doubts about this paper's merit. 🧵 👇

🚀✨ Exciting Publication from @UrbanAI_Lab The paper “Sparkle: Mastering Basic Spatial Capabilities in Vision Language Models Elicits Generalization to Spatial Reasoning” has been accepted to EMNLP 2025! Link: arxiv.org/pdf/2410.16162 #UrbanAI #VisionLanguageModels

Last week, I gave an invited talk at the 1st workshop on critical evaluation of generative models and their impact on society at #ECCV2024, focusing on unmasking and tackling bias in #VisionLanguageModels. Thanks to the organizers for the invitation!

Using #VisionLanguageModels to Process Millions of Documents | Towards Data Science towardsdatascience.com/using-vision-l…

1/5 Can feedback improve semantic grounding in large vision-language models? A recent study delves into this question, exploring the potential of feedback in enhancing the alignment between visual and textual representations. #AI #VisionLanguageModels

Say goodbye to manual data labeling and hello to instant insights! Our new #VisionLanguageModels can extract features from aerial images using just simple prompts. Simply upload an image and ask a question such as "What do you see?" Learn more: ow.ly/FsbV50WKnC3

1/5 BRAVE: A groundbreaking approach to enhancing vision-language models (VLMs)! By combining features from multiple vision encoders, BRAVE creates a more versatile and robust visual representation. #AI #VisionLanguageModels

Alhamdulillah! Thrilled to share that our work "O-TPT" has been accepted at #CVPR2025! Big thanks to my supervisor and co-authors for the support! thread(1/n) #MachineLearning #VisionLanguageModels #CVPR2025

Exploring the limitations of Vision-Language Models (VLMs) like GPT-4V in complex visual reasoning tasks. #AI #VisionLanguageModels #DeductiveReasoning

Thrilled to share that we have two papers accepted at #CVPR2025! 🚀 A big thank you to all the collaborators for their contributions. Stay tuned for more updates! Titles in the thread (1/n) #CVPR #VisionLanguageModels #ModelCalibration #EarthObservation

Read here: hubs.li/Q03Fs2V30 #MedicalAI #VisionLanguageModels #HealthcareAI #MedicalImaging #ClinicalDecisionSupport #GenerativeAI

Read more: hubs.li/Q03C0tJY0 #RadiologyAI #VisionLanguageModels #MedicalImaging #ClinicalAI #HealthcareAI #GenerativeAI #JohnSnowLabs

Did you know most vision-language models (like Claude, OpenAI's GPT models, Gemini) totally suck at reading analog clocks ⏰? (Except Molmo: it's actually trained for that.) #AI #MachineLearning #VisionLanguageModels #vibecoding

Can machines truly understand what they see? Vision-language models are blurring the line between perception & cognition with real-world impact. Centific’s VerityAI is built to power that shift. Explore how VLMs are evolving: centific.com/blog/are-visio… #VisionLanguageModels

Exploring the capabilities of multimodal LLMs in visual network analysis. #LargeLanguageModels #VisualNetworkAnalysis #VisionLanguageModels

Unlocking the Power of Vision Language Models: Exploring VLM and Its Applications #VisionLanguageModels #MultimodalModels #ImageQuestionAnswering #HuggingFaceLeaderboard #AIResearch #NLP #MachineLearning #ArtificialIntelligence #DataScience #Technology

Investigating vision-language models on Raven's Progressive Matrices showcases gaps in visual deductive reasoning. #VisualReasoning #DeductiveReasoning #VisionLanguageModels

A key challenge for VLMs is "grounding" - correctly linking text to visual elements. The latest research uses techniques like bounding box annotations and negative captioning to teach models to see and understand with greater accuracy. #DeepLearning #AI #VisionLanguageModels

Reviews state-of-the-art MLLMs. Highlights the challenge of expanding current models beyond the simple one-to-one image-text relationship. - hackernoon.com/mllm-adapters-… #visionlanguagemodels #multimodallearning

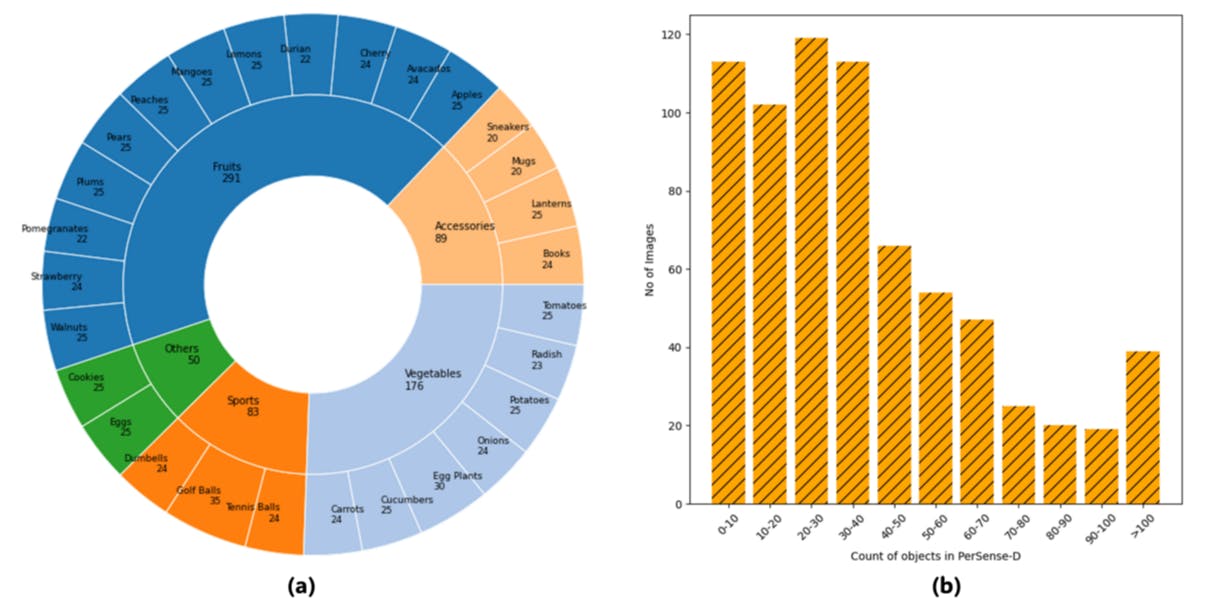

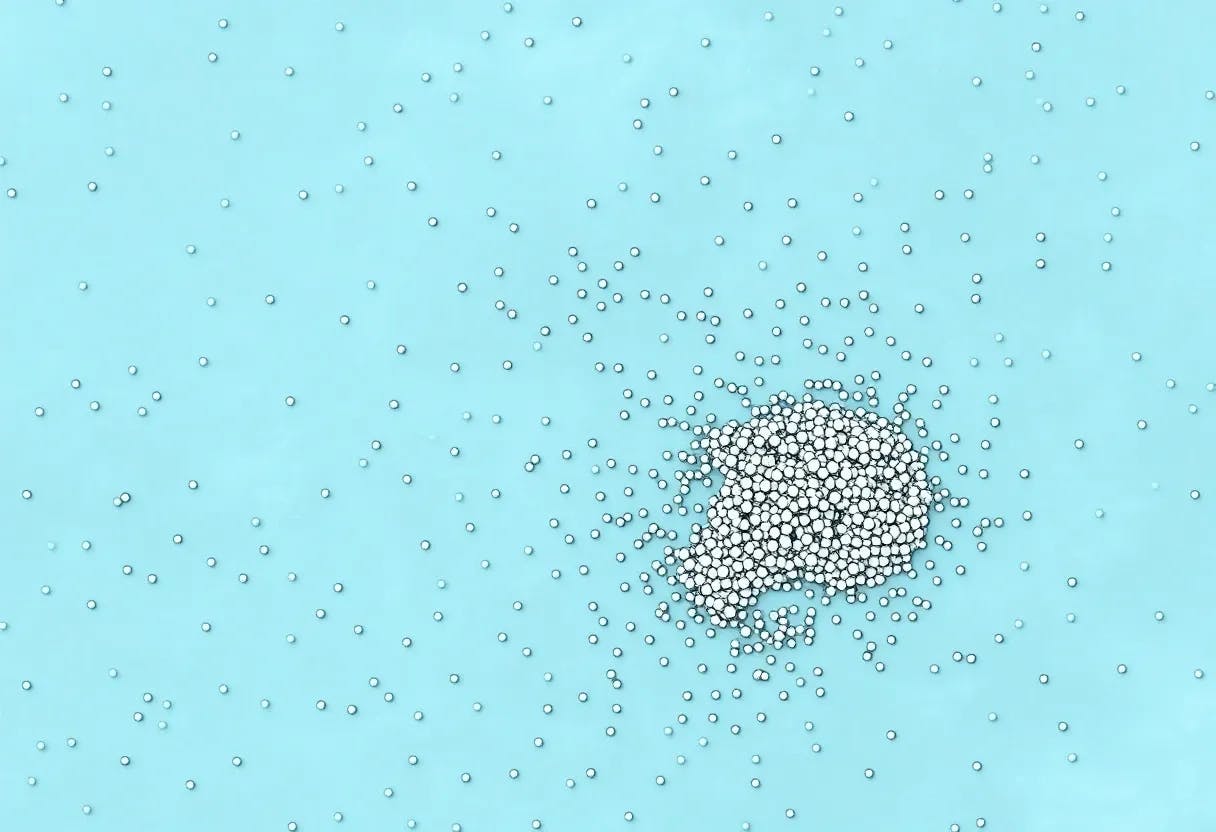

PerSense-D is a new benchmark dataset for personalized dense image segmentation, advancing AI accuracy in crowded visual environments. - hackernoon.com/new-dataset-pe… #visionlanguagemodels #denseimagesegmentation

Adaptive prompts, density maps, and VLMs are used in PerSense's training-free one-shot segmentation framework for dense picture interpretation. - hackernoon.com/persense-deliv… #visionlanguagemodels #denseimagesegmentation

PerSense is a model-aware, training-free system for one-shot personalized instance segmentation in dense images, based on density and vision-language cues. - hackernoon.com/persense-a-one… #visionlanguagemodels #denseimagesegmentation

2/4 The score is computed in three stages (baseline accuracy, degradation under noise, degradation under crafted attacks), and the degradations are then blended with tunable weights w₁, w₂ (w₁ + w₂ = 1) to reflect specific risk profiles. #VisionLanguageModels
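The thread does not give the exact formula, so here is a minimal Python sketch under an explicit assumption: each degradation is the absolute accuracy drop from the baseline, and the score subtracts a weighted sum of the two drops, with w₂ = 1 − w₁. The names `EvalResult` and `robustness_score` are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    clean_acc: float   # baseline accuracy on unperturbed inputs
    noise_acc: float   # accuracy when random noise is added to inputs
    attack_acc: float  # accuracy under crafted (adversarial) attacks


def robustness_score(r: EvalResult, w1: float = 0.5) -> float:
    """Hypothetical blend: baseline minus weighted degradations (assumed form)."""
    if not 0.0 <= w1 <= 1.0:
        raise ValueError("w1 must lie in [0, 1]")
    w2 = 1.0 - w1  # enforce w1 + w2 = 1
    noise_drop = r.clean_acc - r.noise_acc    # degradation under noise
    attack_drop = r.clean_acc - r.attack_acc  # degradation under attacks
    return r.clean_acc - (w1 * noise_drop + w2 * attack_drop)


if __name__ == "__main__":
    result = EvalResult(clean_acc=0.9, noise_acc=0.8, attack_acc=0.5)
    # An attack-averse risk profile puts more weight on w2.
    print(round(robustness_score(result, w1=0.3), 4))
```

Under this assumed form the expression simplifies algebraically to w₁·noise_acc + w₂·attack_acc, so shifting weight toward w₂ rewards models that hold up against deliberate attacks rather than random noise.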

(3/3) 🤝 Open to #Collaboration and #Internship Opportunities on: 🧠 Data-centric AI 🤖 Vision-language Model training and evaluation Shoutout to amazing co-authors @JoLiang17 @zhoutianyi ! #VisionLanguageModels #DCAI #DataCentric #ResponsibleAI #ICCV #AI #ML #ComputerVision

🚀 Exciting news! PaddleOCR-VL has rocketed to #1 on @huggingface Trending in just 16 hours! Dive in: huggingface.co/PaddlePaddle/P… #OCR #AI #VisionLanguageModels

💻 We have open-sourced the code at github.com/ServiceNow/Big… 🙌 This was a collaborative effort between @ServiceNowRSRCH, @Mila_Quebec, and @YorkUniversity. #COLM2025 #AI #VisionLanguageModels #Charts #BigCharts

🚀 Submissions open for VLM4RWD @ NeurIPS 2025! Let’s make VLMs efficient & ready for the real world 🌎💡 🗓️ Deadline: Oct 31 📍 Mexico City 🇲🇽 🔗 openreview.net/group?id=NeurI… #NeurIPS2025 #VLM4RWD #VisionLanguageModels

From Pixels to Words -- Towards Native Vision-Language Primitives at Scale 👥 Haiwen Diao, Mingxuan Li, Silei Wu et al. #VisionLanguageModels #AIResearch #DeepLearning #OpenSource #ComputerVision 🔗 trendtoknow.ai

📢 Call for Papers — JBHI Special Issue: “Transparent Large #VisionLanguageModels in Healthcare” Seeking research on: ✔️ Explainable VLMs ✔️ Medical image-text alignment ✔️ Fair & interpretable AI 📅 Deadline: Sep 30, 2025 🔗 Info: tinyurl.com/4a7d69t2

Moondream 2 is a superstar in the world of vision-and-language models, but what makes it tick? This post unveils the magic behind it: Curious to learn more? ➡️ hubs.la/Q02sWg4R0 #Moondream2 #VisionLanguageModels #AIInnovation

🚀 New tutorial just dropped! Synthetic Data Generation Using the BLIP and PaliGemma Models 👉 Read the full tutorial: pyimg.co/xiy4r ✍️ Author: @cosmo3769 #AI #VisionLanguageModels #SyntheticData #VQA #BLIP #PaliGemma #MachineLearning #HuggingFace #OpenSourceAI

Headed to #GEOINT2025? Don’t miss Dr. Brian Clipp’s session on #VisionLanguageModels: 🧠 Explainable segmentation, tracking & detection 🧰 Compositional programming for analyst queries 🗓️ May 19 | 7:30 AM ow.ly/mE4r50VR5lI #GeospatialIntelligence

Introducing a comprehensive benchmark and large-scale dataset to evaluate and improve LVLMs' abilities in multi-turn and multi-image conversations. #DialogUnderstanding #VisionLanguageModels #MultiImageConversations

[1/6] 🚀 Exciting News! Our paper has been accepted at #CVPR2025! 🎉 We’re thrilled to introduce "ProKeR: A Kernel Perspective on Few-Shot Adaptation of Large Vision-Language Models" 📄 ybendou.github.io/ProKeR/ #VisionLanguageModels #FewShotLearning #ComputerVision
