#adversarialexamples search results

Voice assistants (#Sprachassistenten) can be manipulated with hidden audio signals. A research team at @HGI_Bochum has discovered this and explains how such an attack works: 👉 news.rub.de/wissenschaft/2… #AdversarialExamples (Video: Agentur der RUB) ^tst

#AdversarialExamples: it seems that PGD is a *new*, powerful attack. Well, it's what we've been doing since 2013, to (iteratively) optimize a nonlinear function over a constrained domain. Are we reinventing the wheel over and over? arxiv.org/abs/1708.06131 arxiv.org/abs/1708.06939
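The idea in the post above, iteratively optimizing a nonlinear function over a constrained domain, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the linked papers: the toy logistic-regression model, the function name `pgd_attack`, the parameters `eps`, `step`, and `n_iter`, and the choice of sign-gradient steps with projection onto an L-infinity ball.

```python
import numpy as np


def sigmoid(z):
    """Logistic function mapping a score to a probability."""
    return 1.0 / (1.0 + np.exp(-z))


def pgd_attack(x, y, w, b, eps=0.5, step=0.1, n_iter=20):
    """Projected gradient ascent on the logistic loss (hypothetical helper).

    Maximizes the cross-entropy loss of a linear model sigmoid(w @ x + b)
    while keeping the perturbed input inside an L-infinity ball of radius
    eps around the original input x.
    """
    x_adv = x.copy()
    for _ in range(n_iter):
        p = sigmoid(w @ x_adv + b)
        # Gradient of the cross-entropy loss with respect to the input.
        grad = (p - y) * w
        # Ascent step: move in the direction that increases the loss.
        x_adv = x_adv + step * np.sign(grad)
        # Projection: clip back into the allowed box around x.
        x_adv = np.clip(x_adv, x - eps, x + eps)
    return x_adv


# Toy model and input, chosen only for illustration.
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([0.3, 0.1])
y = 1.0  # true label of x

x_adv = pgd_attack(x, y, w, b)
p_clean = sigmoid(w @ x + b)
p_adv = sigmoid(w @ x_adv + b)
```

In this toy setting the clean input is scored above 0.5 for the true class, while the perturbed input stays within `eps` of it in every coordinate and is scored below 0.5. The loop is ordinary constrained optimization: a gradient step followed by a projection onto the feasible set.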

Our paper was accepted for publication in 9th ACM Conference on Data and Application Security and Privacy! There we presented how to attack developer's identity in open-source projects like GitHub. We also developed multiple protection methods. #codaspy #acm #AdversarialExamples

Research and development of state-of-the-art deepfake detection analytics with intuitive explanations and robustness to open-world variations as well as malicious adversarial examples. #adversarialexamples #deepfakedetection #robustai

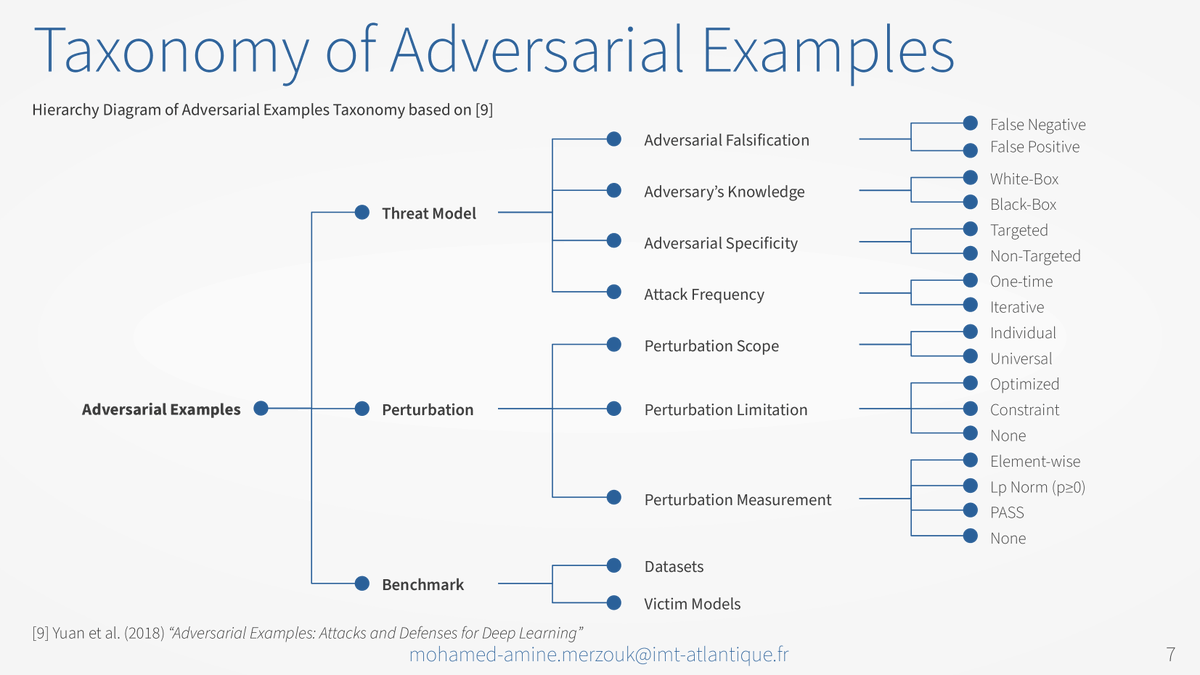

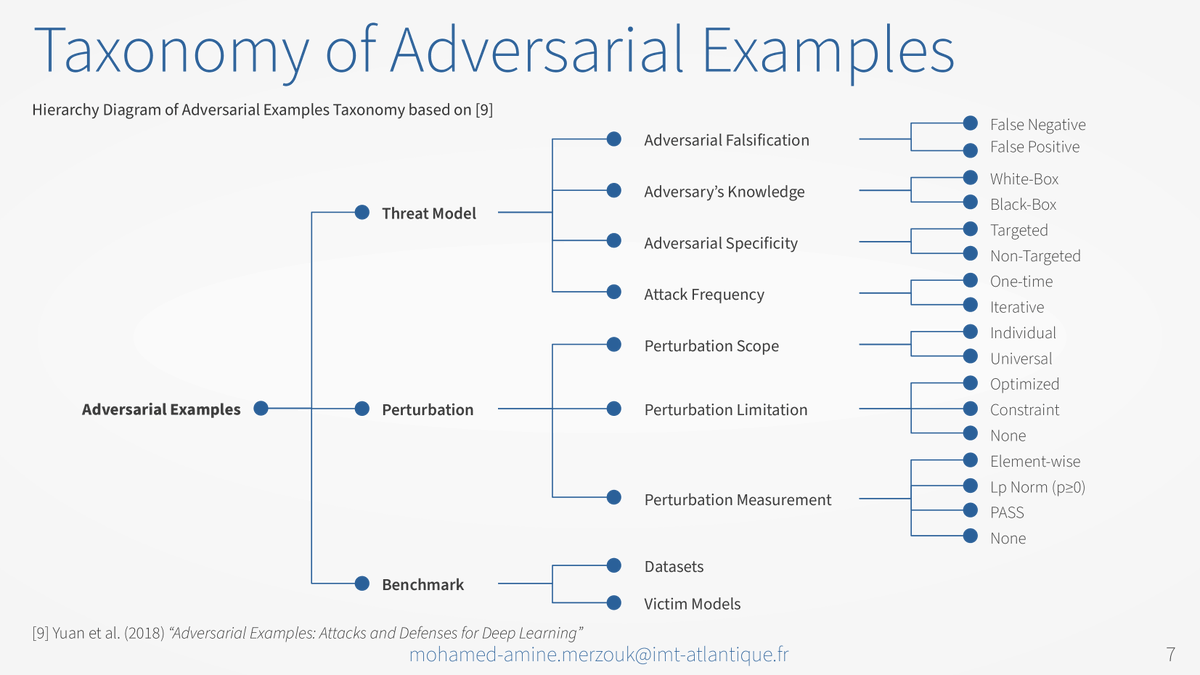

I want to share this hierarchy diagram I made for a presentation. It shows the taxonomy of Adversarial Examples based on Yuan et al. (2018), a very interesting survey on adversarial examples. (arxiv.org/abs/1712.07107) #deeplearning #adversarialexamples #taxonomy #diagram

At our #MachineLearning colloquium today, Sascha presents his Master’s thesis on the "Localization of #AdversarialExamples in feature space for reject options in #DeepNeuralNetworks". #DeepLearning

A new set of images that fool AI could help make it more hacker-proof buff.ly/2FrFiEG #adversarialattacks #adversarialexamples #ai #machinelearning

Discover Transferability of Adversarial Attacks! #adversarialattacks #adversarialexamples #AIattacks #AIsecurity #deeplearning #foolingAImodels #MachineLearning #modelvulnerability #transferability aicompetence.org/adversarial-at…

Ruse - Mobile Camera-Based Application That Attempts To Alter Photos To Preserve Their Utility To Humans While Making Them Unusable For Facial Recognition Systems dlvr.it/S4n88V #Adversarial #AdversarialExamples #Assembly #Camera #Capture

On Wednesday at 11 I will give a seminar at the computer science department of @unimib on #AdversarialExamples in #DeepLearning models, and how to counter them with #DifferentialPrivacy. Details are on the poster. If you are in the area, you are welcome! The seminar will also be recorded.

Be careful! ⚠️ RLHF is not true RL! The models can be gamed, so stop the training after a few hundred updates to keep the model from finding the adversarial examples. #RLHF #AdversarialExamples #MachineLearning

Explore how adversarial examples challenge AI and the quest for robustness. 🛡️🤖 #AI #AdversarialExamples #RobustAI #AIBrilliance

#AI #AIHallucinations #AdversarialExamples #GenerativeAI #ComputerVision #AIModels #FalseNews #Accuracy #Reliability #Trustworthiness #DataTraining #DataAccuracy #TextGeneration #ImageGeneration #ArtificialIntelligence #MachineLearning #ChatGPT ai-talks.org/2023/04/09/ai-…

@RRR59651376 @realDonaldTrump @ABCPolitics #surveillance, #adversarialexamples #WhatTriggersConservatives #WhatTriggersLiberals Being automatically picked out of a crowd, identified and databased bother you? Maybe do something about it: redrabbitresearch.com

A new paper published by Xiaohui Cui et al. from China. Deepfake-Image Anti-Forensics with Adversarial Examples Attacks #adversarialexamples #deepfake #generaldetectors #Poissonnoise mdpi.com/1999-5903/13/1…

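A man-in-the-middle attack using adversarial examples. The attacker intercepts and tampers with images that a user uploads to the web, turning them into adversarial examples. #adversarialexamples arxiv.org/abs/2112.05634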

16/22 Adversarial examples in computer vision make this worse. Attackers can create images that look normal to humans but cause AI vision systems to "see" malicious text or instructions that aren't actually there. It's optical illusions for machines. #AdversarialExamples

4/15 I’ve seen cases where voice assistants were tricked by adversarial audio—commands embedded in noise that humans can’t hear, but AI can. It’s spooky and real. #VoiceSecurity #AdversarialExamples

Uncover Adversarial Examples. 🧩🚫 Inputs crafted to mislead machine learning models into making incorrect predictions. #AdversarialExamples #AI #MachineLearning #DataScience #Aibrilliance. Learn More at aibrilliance.com.

AI is vulnerable to attacks. Can it be used safely? #ArtificialIntelligence #NeuralNetworks #AdversarialExamples #Chatbots prompthub.info/30354/

prompthub.info

AI is vulnerable to attacks. Can it be used safely? - Prompt Hub

In 2015, Google's Ian Goodfellow and his colleagues described AI's most famous failure. To the human eye, …

If you're interested in reading our TinyPaper, "SD-NAE: Generating Natural Adversarial Examples with Stable Diffusion," you can find it on OpenReview: openreview.net/forum?id=D87ri… We appreciate any feedback or thoughts you might have! #ICLR2024 #StableDiffusion #AdversarialExamples

Strategizing AI Fairness: Redefining Unlearning Dynamics Sans Retraining #adversarialexamples #AI #AItechnology #artificialintelligence #Deepneuralnetworks #instancewiseunlearning #llm #machinelearning #misclassification #unlearningstrategies multiplatform.ai/strategizing-a…

Check out my article on: "Understanding Adversarial Examples and Defence Mechanisms" #GenAI #GANs #adversarialexamples #computervision #machinelearning #DeepLearning #ml #dropsofai dropsofai.com/understanding-… via @kartikgill96

dropsofai.com

Understanding Adversarial Examples and Defence Mechanisms - Drops of AI

This article covers the background related to attacks of adversarial examples and defence mechanisms against them.

2/ I'm deeply involved in the research and development of state-of-the-art deepfake detection analytics, ensuring robustness to open-world variations and adversarial examples. 🛡️🤯 #adversarialexamples #robustai #deepfakedetection #techinnovation

"Discover the fascinating world of physical adversarial examples (PAEs) with our new blog post. Learn about the challenges and safety concerns they pose to deep neural networks in real-world scenarios. Find out more at bit.ly/3sk52P2 #technology #adversarialexamples"

Lecture 5 proposes a defense against #AdversarialExamples in #DeepLearning with a cryptographic flavor, namely one based on #DifferentialPrivacy. youtube.com/watch?v=sNYNTU…

youtube.com

YouTube

Lecture 5 - Differential Privacy for Adversarial Robustness

#AdversarialExamples against #Flash #Malware detection with #MachineLearning @maiorcasecurity #AdversarialTraining #Security #SWF

After successful DNN classification, I had to tell my wife that it is not ok to give a rifle to our two year old daughter 😁 #InsideJoke #DeepLearning #AdversarialExamples

#AdversarialTraining is not effective against #AdversarialExamples if your feature representation is #vulnerable - #Evasion #MachineLearning #Flash #Malware #DeepLearning - arxiv.org/pdf/1710.10225…

Attack #MachineLearning w #AdversarialExamples @OpenAI <experiment breaking your models - #cleverhans #opensource> buff.ly/2nl2KcB

RT @basecamp_ai: Fooling Neural Networks in the Physical World with 3D Adversarial Objects http://www.labsix.org/physical-objects-that-fool-neural-nets/ #ImageRecognition #AdversarialExamples #NeuralNetworks

Countering #AdversarialExamples against #iCub #robot #humanoid arxiv.org/abs/1708.06939 #deeplearning #security #robotics #MachineLearning

#SupportVectorMachines vs #AdversarialExamples back in 2014 (#AdversarialMachineLearning before #Security of #DeepLearning). Discussing #Evasion, #Poisoning and #Privacy attacks, along with possible countermeasures @bipr arxiv.org/abs/1401.7727

It is hard to imagine our lives without AI (#KI), which makes its security all the more important! At AI.BAY 2023 on 24 and 25 February 2023 we will present our research results on the manipulation and protection of AI: #Deepfake #AdversarialExamples Free access to the online event: aisec.fraunhofer.de/de/presse-und-…

AI Hallucination: An Ongoing Challenge for Artificial Intelligence in Today's Business Landscape #adversarialexamples #AI #AIhallucination #AItechnology #artificialintelligence #cautioususeofAI #computervisionoutputs #fabricatingfalsenewsreports multiplatform.ai/ai-hallucinati…

Today at AI.BAY 2023 our scientists are presenting their latest research results on protecting AI (#KI) against manipulation and attacks. Join now for free: aisec.fraunhofer.de/de/presse-und-… #Deepfake #AdversarialExamples #WeKnowCybersecurity
