#batchgradientdescent search results

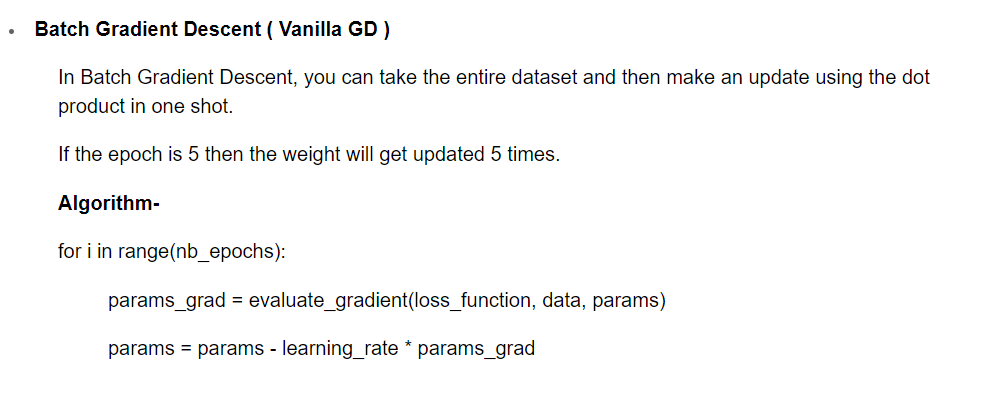

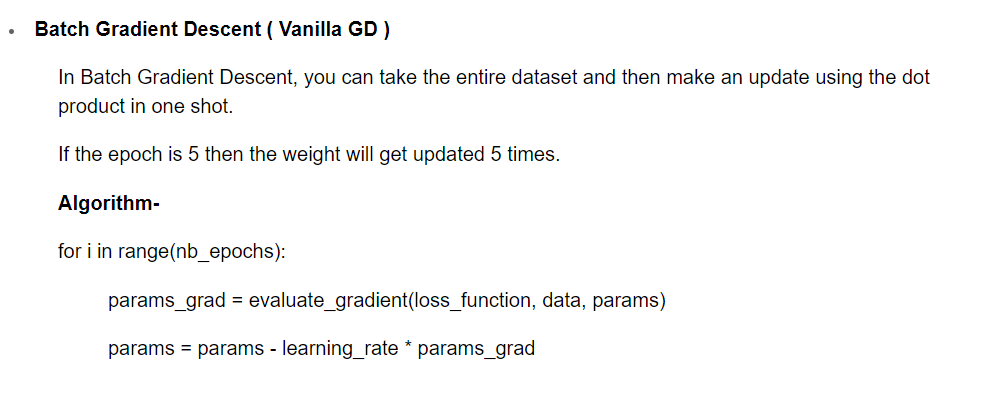

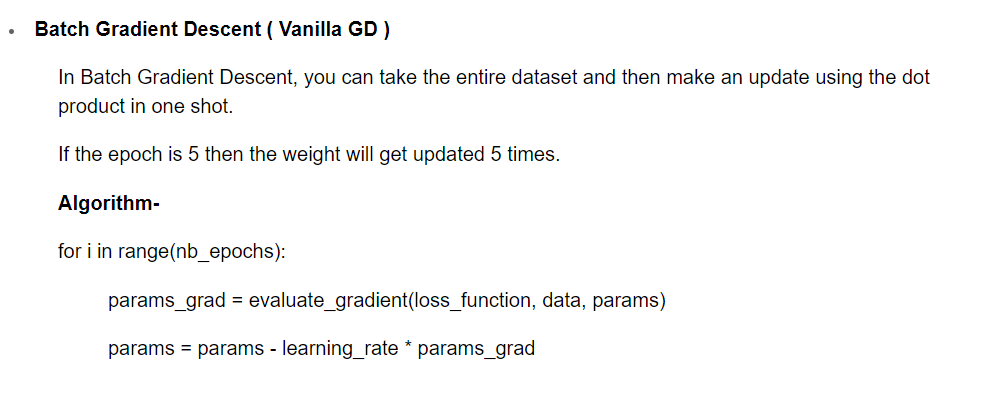

▫️ learning rate η determines size of steps we take to reach a (local) minimum 1) #BatchGradientDescent (Vanilla GD)
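
For reference, the role of η can be written as the standard gradient descent update. The symbols θ (the parameters) and J (the cost averaged over all N training examples) are notation assumed here, not taken from the post:

$$
\theta \leftarrow \theta - \eta \, \nabla_\theta J(\theta), \qquad J(\theta) = \frac{1}{N} \sum_{i=1}^{N} \ell\big(f_\theta(x_i),\, y_i\big)
$$

A larger η takes bigger steps and may overshoot the minimum; a smaller η is more stable but needs more iterations.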

RT Dealing with Large Datasets: the Present Conundrum dlvr.it/S5jg8g #batchgradientdescent #dataparallelism #stochasticgradient

2 ways to train a Linear Regression Model-Part 2 - websystemer.no/2-ways-to-trai… #batchgradientdescent #gradientdescent #linearregression #machinelearning #stochasticgradient

"Batch Gradient Descent is a popular optimization algorithm in machine learning. It calculates the gradient of the error for the entire training dataset, making it robust but computationally expensive. #BatchGradientDescent #ML"

"#BatchGradientDescent is an optimization algorithm in #ML that updates model parameters by calculating the gradient of error over the entire dataset at each iteration. It's powerful but computationally expensive. #GradientDescent #MachineLearning"

"BatchGradientDescent is an optimization algorithm in machine learning that helps minimize error by adjusting parameters iteratively. It's like fine-tuning a model to reach its peak performance! #BatchGradientDescent #MachineLearning"

"Batch Gradient Descent is a powerful optimization algorithm used in machine learning to minimize model error. It iteratively calculates the gradient using the entire training dataset, making it a bit slower but more accurate. 💡🔍 #BatchGradientDescent #MachineLearning"
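
The posts above describe the same idea: every update uses the gradient of the error over the whole training set. A minimal sketch of that idea for linear regression with mean squared error might look like the following. The function name `batch_gradient_descent`, the hyperparameter values, and the synthetic data are illustrative assumptions, not taken from any of the posts.

```python
import numpy as np

def batch_gradient_descent(X, y, lr=0.1, n_iters=1000):
    """Fit y ~ X @ w + b by full-batch gradient descent on mean squared error.

    Hypothetical helper for illustration; lr and n_iters are assumed defaults.
    """
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_iters):
        # The residual is computed over every training example on each
        # iteration, which is what makes this "batch" gradient descent.
        residual = X @ w + b - y
        grad_w = (2.0 / n_samples) * (X.T @ residual)
        grad_b = (2.0 / n_samples) * residual.sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Synthetic, noiseless data generated from y = 3x + 1 (an assumed toy problem).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 1))
y = 3.0 * X[:, 0] + 1.0

w, b = batch_gradient_descent(X, y)
print(w, b)
```

Each iteration costs one full pass over the data, which is why the posts call the method computationally expensive on large datasets.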

#BatchGradientDescent is an optimization algorithm used to train machine learning models by updating the parameters once per pass over the entire training set. Updating the parameters in small batches instead (mini-batch gradient descent) can be more efficient than updating them on each individual data point. #ML #GradientDescent
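
Updating on small batches is usually called mini-batch gradient descent. As a hedged sketch of that variant for linear regression with mean squared error (the function name `minibatch_gradient_descent`, the batch size, and the toy data are assumptions made for illustration):

```python
import numpy as np

def minibatch_gradient_descent(X, y, lr=0.05, n_epochs=200, batch_size=32, seed=0):
    """Fit y ~ X @ w + b using gradient steps on small shuffled batches.

    Hypothetical helper for illustration; all defaults are assumed values.
    """
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_epochs):
        order = rng.permutation(n_samples)  # reshuffle once per epoch
        for start in range(0, n_samples, batch_size):
            idx = order[start:start + batch_size]
            # The gradient is estimated from this batch only, not the full set.
            residual = X[idx] @ w + b - y[idx]
            w -= lr * (2.0 / len(idx)) * (X[idx].T @ residual)
            b -= lr * (2.0 / len(idx)) * residual.sum()
    return w, b

# Synthetic, noiseless data generated from y = 3x + 1 (an assumed toy problem).
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 1))
y = 3.0 * X[:, 0] + 1.0

w, b = minibatch_gradient_descent(X, y)
print(w, b)
```

Setting `batch_size` to the number of samples recovers one update per pass over the whole dataset, while `batch_size=1` gives the per-example (stochastic) update.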

Just learned about Batch Gradient Descent today! 📉 It's amazing how this algorithm processes the entire dataset at once to minimize the cost function. Excited to dive deeper into machine learning! 💻 #GradientDescent #MachineLearning #BatchGradientDescent

Week 10 done! Course: Machine Learning by Andrew Ng (Stanford University) (lnkd.in/dr2RPpa) #machinelearning #AndrewNg #BatchGradientDescent #StochasticGradientDescent #mapreduce #OnlineLearning

Batch Gradient vs Stochastic Gradient Descent for Linear Regression - websystemer.no/batch-gradient… #batchgradientdescent #gradientdescent #machinelearning #optimization #stochasticgradient

Check out my blog on 👉 Medium #GradientDescent #BatchGradientDescent #StochasticGradientDescent #Mini_BatchGradientDescent lnkd.in/gxb6GmHi
