#computationalflexibility search results

Efficient training of neural networks is difficult. Our second Connectionism post introduces Modular Manifolds, a theoretical step toward more stable and performant training by co-designing neural net optimizers with manifold constraints on weight matrices.…
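The post is only teased here. To show roughly what a "manifold constraint on weight matrices" can mean, this sketch keeps a weight matrix on the Stiefel manifold (matrices with orthonormal rows). It takes an ordinary gradient step and then retracts to the nearest such matrix with a Newton-Schulz polar iteration. It is a plain-Python stand-in built on assumptions: `retracted_step`, `polar_factor`, the learning rate, and the iteration count are hypothetical choices, and the Modular Manifolds post's own optimizer may work differently.

```python
# Sketch (assumption): a gradient step followed by a retraction back onto the
# Stiefel manifold of matrices with orthonormal rows. The retraction uses the
# Newton-Schulz iteration for the polar factor U V^T. This is a generic
# manifold-constrained update, not the Modular Manifolds algorithm itself.

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def polar_factor(w: Matrix, steps: int = 40) -> Matrix:
    """Approximate the polar factor of a nonzero, full-row-rank w (rows <= cols)."""
    norm = sum(v * v for row in w for v in row) ** 0.5
    # Dividing by the Frobenius norm puts every singular value in (0, 1],
    # inside the convergence region of the iteration below.
    x = [[v / norm for v in row] for row in w]
    for _ in range(steps):
        # X <- 1.5 X - 0.5 X X^T X pushes each singular value toward 1
        # while leaving the singular vectors unchanged.
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [
            [1.5 * x[i][j] - 0.5 * xxtx[i][j] for j in range(len(x[0]))]
            for i in range(len(x))
        ]
    return x


def retracted_step(w: Matrix, grad: Matrix, lr: float = 0.1) -> Matrix:
    """Plain gradient step in the ambient space, then snap back onto the manifold."""
    stepped = [
        [w[i][j] - lr * grad[i][j] for j in range(len(w[0]))] for i in range(len(w))
    ]
    return polar_factor(stepped)


w = [[1.0, 2.0, 0.0], [0.5, -1.0, 3.0]]
g = [[0.1, 0.0, -0.2], [0.3, 0.1, 0.0]]
r = retracted_step(w, g)
print(matmul(r, transpose(r)))  # approximately the 2x2 identity
```

Checking that `r` times its transpose is close to the identity confirms the retraction worked. A manifold-aware optimizer would typically also shape the update direction itself rather than only projecting after a plain step.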

Announcing Flexible Masked Diffusion Models (FlexMDMs), a new diffusion language model for flexible-length sequences. 🚨 Solves MDMs' fixed-length issue while retaining any-order sampling 🚨 Fine-tuning LLaDA-8B into a FlexMDM takes <1000 GPU-hours (GSM8K: 58→67%, HumanEval-infill: 52→65%).

AI's flexibility is both a strength and a weakness. Without proper guidance, it can lead to costly mistakes, like saving money but losing revenue. The key is using AI where it truly fits.

We updated our whitepaper! The focus has shifted from verifiable compute to building an open, programmable compute economy. The problem: compute demand is growing faster than supply. AI and ZK workloads all face the same bottleneck. Our approach: ComputeFi. Here's what that…

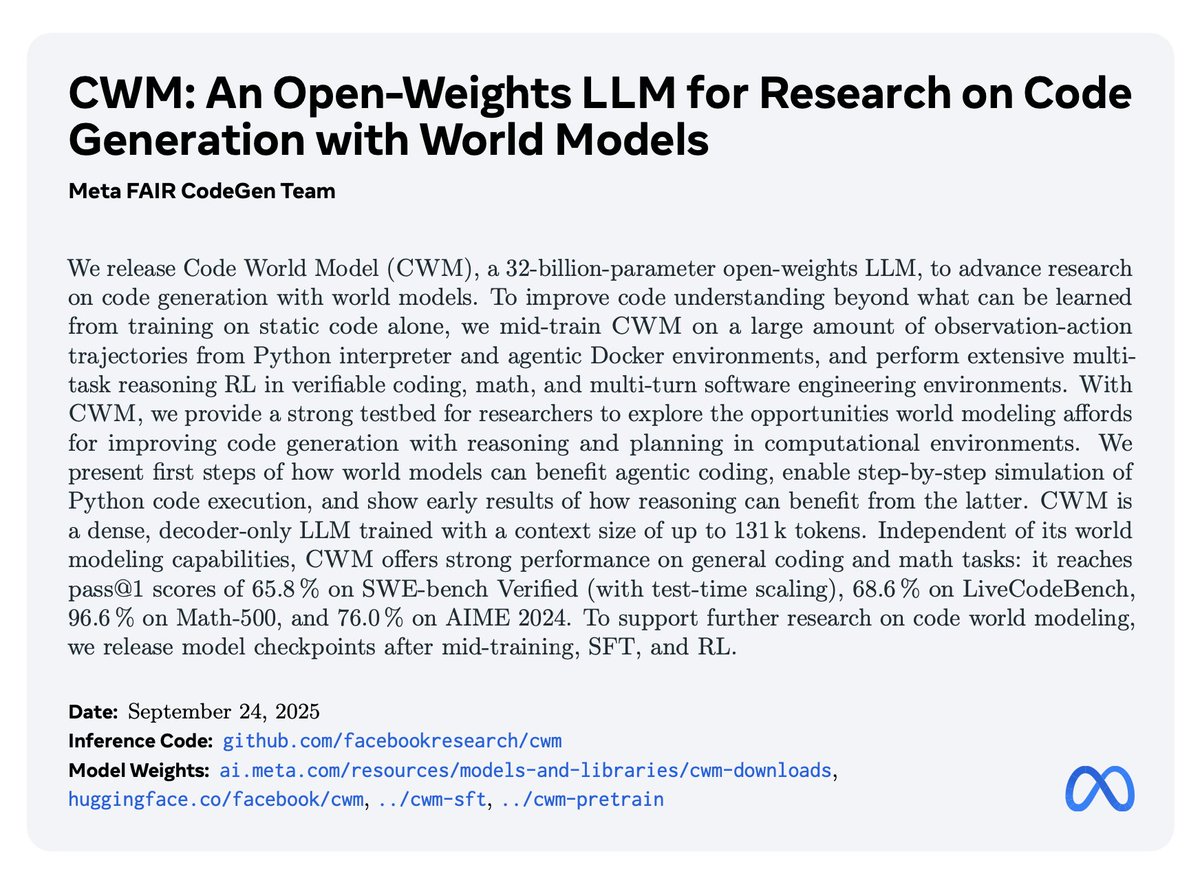

New from Meta FAIR: Code World Model (CWM), a 32B-parameter research model designed to explore how world models can transform code generation and reasoning about code. We believe in advancing research in world modeling and are sharing CWM under a research license to help empower…

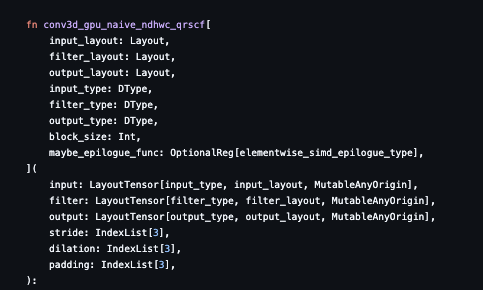

So I finally got a chance to look at Mojo/Modular. It's not what I thought it was: it's an OpenCL replacement plus kernel implementations, not an AI compiler. While this makes it a lot easier to get full performance quickly, I think Turing completeness is a mistake for this…

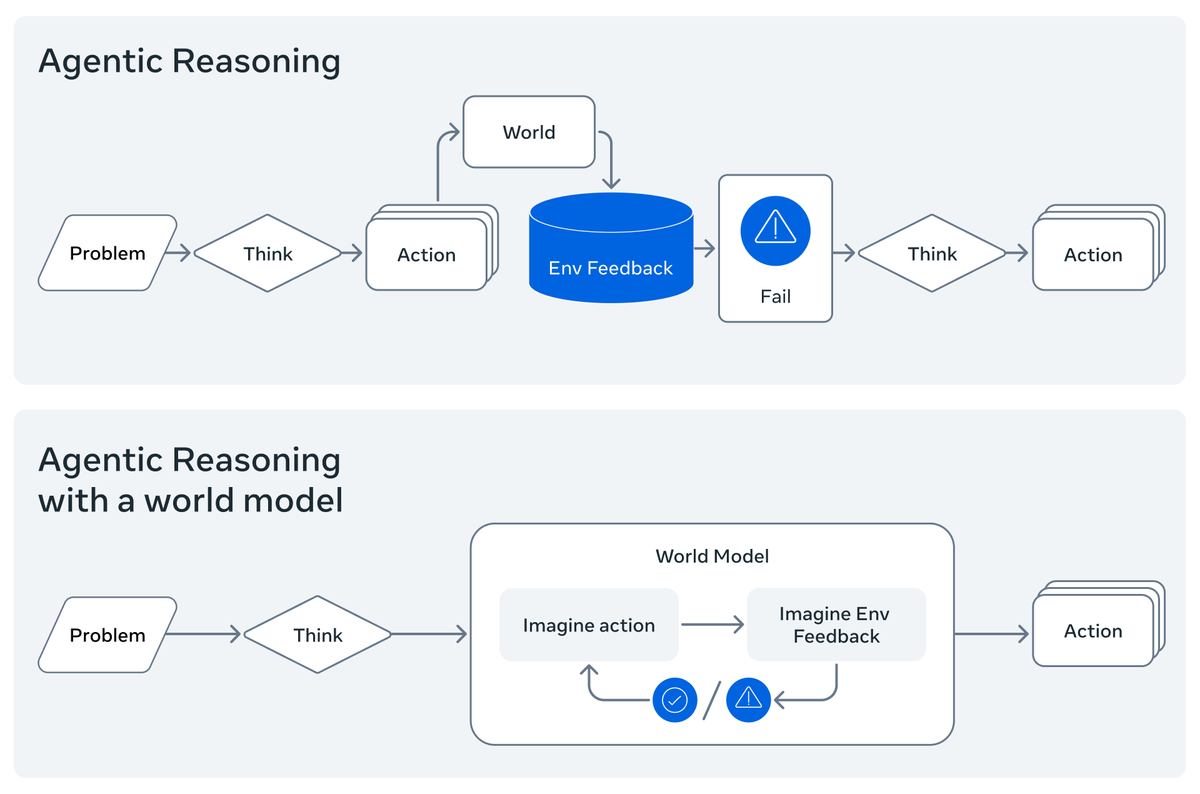

Code World Model: producing code by imagining the effect of executing instructions and planning instructions that produce the desired effect.

(🧵) Today, we release Meta Code World Model (CWM), a 32-billion-parameter dense LLM that enables novel research on improving code generation through agentic reasoning and planning with world models. ai.meta.com/research/publi…

We released CWM, a 32B LLM for code reasoning, agents, and world modeling research🚀 (pre/mid/post checkpoints, tech report, RL envs, inference stack): github.com/facebookresear…. I'm fortunate to lead Agentic RL and co-lead joint RL training, empowering CWM as a reasoning agent 🧵

4/ Here is an example of the Code World Model tracing the execution of a piece of code that counts the "r"s in "strawberry". Think of it as a neural `pdb` that you can set to any initial frame state and that reasoning can query as a tool in token space.
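The "neural `pdb`" idea is easier to picture next to a ground-truth trace. This sketch uses Python's standard `sys.settrace` hook to record the local variables just before each line of a hypothetical `count_r` function runs on "strawberry". That frame-by-frame state is the kind of thing a code world model would have to predict. It illustrates the concept only; it is not CWM's trace format or its execution tooling.

```python
import sys


def count_r(word):
    count = 0
    for ch in word:
        if ch == "r":
            count += 1
    return count


def trace_locals(func, *args):
    """Run func(*args), recording (line offset, locals) before each line executes."""
    code = func.__code__
    frames = []

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # ignore every other function's frames
        if event == "line":
            # Offset is relative to the `def` line, like a debugger's view of the frame.
            frames.append((frame.f_lineno - code.co_firstlineno, dict(frame.f_locals)))
        return tracer  # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)  # always uninstall the hook
    return result, frames


result, frames = trace_locals(count_r, "strawberry")
for offset, local_vars in frames:
    print(offset, local_vars)
print("result:", result)  # result: 3
```

Each printed row is one "frame state": the line about to run and the variables in scope. A world model trained on such traces could be started from any row and asked what comes next.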

2/ When humans plan, we imagine the possible outcomes of different actions. When we reason about code we simulate part of its execution in our head. The current generation of LLMs struggles to do this. What kind of research will an explicitly trained code world model enable?

Can data owners & LM developers collaborate to build a strong shared model while each retaining data control? Introducing FlexOlmo💪, a mixture-of-experts LM enabling: • Flexible training on your local data without sharing it • Flexible inference to opt in/out your data…
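The post does not spell out FlexOlmo's routing. The following is therefore only a generic sketch of how opt-in/opt-out can work in a mixture-of-experts layer. It uses top-k softmax gating restricted to the experts a caller is allowed to use, so an opted-out expert never runs. The experts, router logits, and function names are all hypothetical.

```python
import math

# Hypothetical experts: each maps a feature vector to a feature vector.
# Expert 0 stands in for a shared public expert; experts 1 and 2 stand in
# for experts trained privately on two data owners' local data.
EXPERTS = [
    lambda x: [v * 1.0 for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [v * -0.5 for v in x],
]


def route(logits, allowed, top_k=2):
    """Top-k softmax gating restricted to the allowed experts."""
    candidates = [i for i in range(len(logits)) if i in allowed]
    if not candidates:
        raise ValueError("at least one expert must be allowed")
    chosen = sorted(candidates, key=lambda i: logits[i], reverse=True)[:top_k]
    peak = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x, router_logits, allowed, top_k=2):
    """Weighted sum of the chosen experts' outputs; opted-out experts never run."""
    weights = route(router_logits, allowed, top_k)
    out = [0.0] * len(x)
    for i, w in weights.items():
        out = [o + w * y for o, y in zip(out, EXPERTS[i](x))]
    return out


x = [1.0, 2.0]
logits = [0.0, 2.0, 1.0]  # in a real model, a learned router would produce these from x
print(moe_forward(x, logits, allowed={0, 1, 2}))  # data owner 1 opted in
print(moe_forward(x, logits, allowed={0, 2}))     # data owner 1 opted out
```

Masking before the top-k selection, rather than after, means the remaining weights are renormalized over the experts still allowed.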

BREAKING: NVIDIA's FlexiCubes revolutionizes 3D mesh generation. From reconstructing scenes that match images to generating assets for interactive experiences, the future of 3D is about to take a giant leap forward. Here's the breakdown in a thread (1/6).

PC-NCLaws: Physics-Embedded Conditional Neural Constitutive Laws for Elastoplastic Materials. arxiv.org/abs/2510.21404

New research from Meta FAIR: Code World Model (CWM), a 32B research model. We encourage the research community to explore this open-weight model! pass@1 evals, for the curious: 65.8% on SWE-bench Verified, 68.6% on LiveCodeBench, 96.6% on Math-500, 76.0% on AIME 2024 🧵

I wonder what they used for code execution 👀

Escape the confinements of inflexible IT barriers. Embrace Composable IT to achieve agility and scalability. Bid farewell to outdated limitations and welcome seamless integration. Read more: bit.ly/4o1K6nx

Data center flexibility is the ability to shift workloads to different times of day when renewable energy generation is high or prices are low. bit.ly/3JgnMHB
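As a toy illustration of workload shifting, this sketch picks the cheapest contiguous window for a deferrable job, given an hourly price series and a deadline. The function name, the sliding-window approach, and the prices are assumptions for illustration. Real schedulers might optimize for carbon intensity, split interruptible jobs, or respect capacity limits.

```python
def best_start(prices, duration, earliest=0, deadline=None):
    """Pick the start hour that minimizes the summed hourly price of a
    contiguous, deferrable job of `duration` hours within [earliest, deadline)."""
    if deadline is None or deadline > len(prices):
        deadline = len(prices)
    if duration <= 0 or earliest < 0 or earliest + duration > deadline:
        raise ValueError("no feasible window")
    window = sum(prices[earliest:earliest + duration])  # cost of starting at `earliest`
    best = (earliest, window)
    for start in range(earliest + 1, deadline - duration + 1):
        # Slide the window one hour later: add the new last hour, drop the old first hour.
        window += prices[start + duration - 1] - prices[start - 1]
        if window < best[1]:
            best = (start, window)
    return best


# Made-up hourly prices for illustration only (not real market data).
prices = [50, 48, 45, 40, 30, 22, 20, 25, 35, 45, 55, 60]
print(best_start(prices, duration=3))              # (5, 67)
print(best_start(prices, duration=3, deadline=5))  # (2, 115)
```

Swapping the price series for an hourly carbon-intensity forecast turns the same loop into a renewable-aware scheduler. The tighter deadline in the second call shows how the flexibility window limits the savings.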

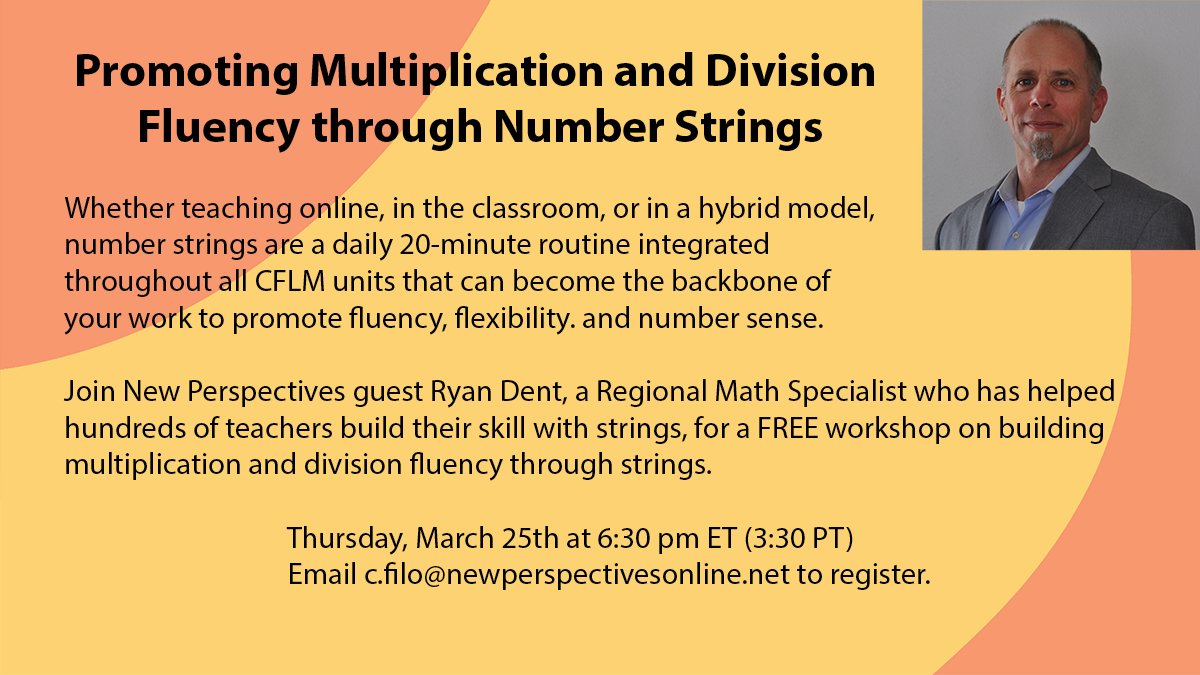

Powerful #mathroutine to develop #computationalflexibility. So excited for this!!!

We're excited that #CFLM fan and Regional Math Coordinator Ryan Dent (@4ryandent) will be partnering with New Perspectives to offer a FREE workshop next Thursday: Promoting Multiplication and Division Fluency through Number Strings. Email [email protected] to register.
