#computationallearningtheory search results

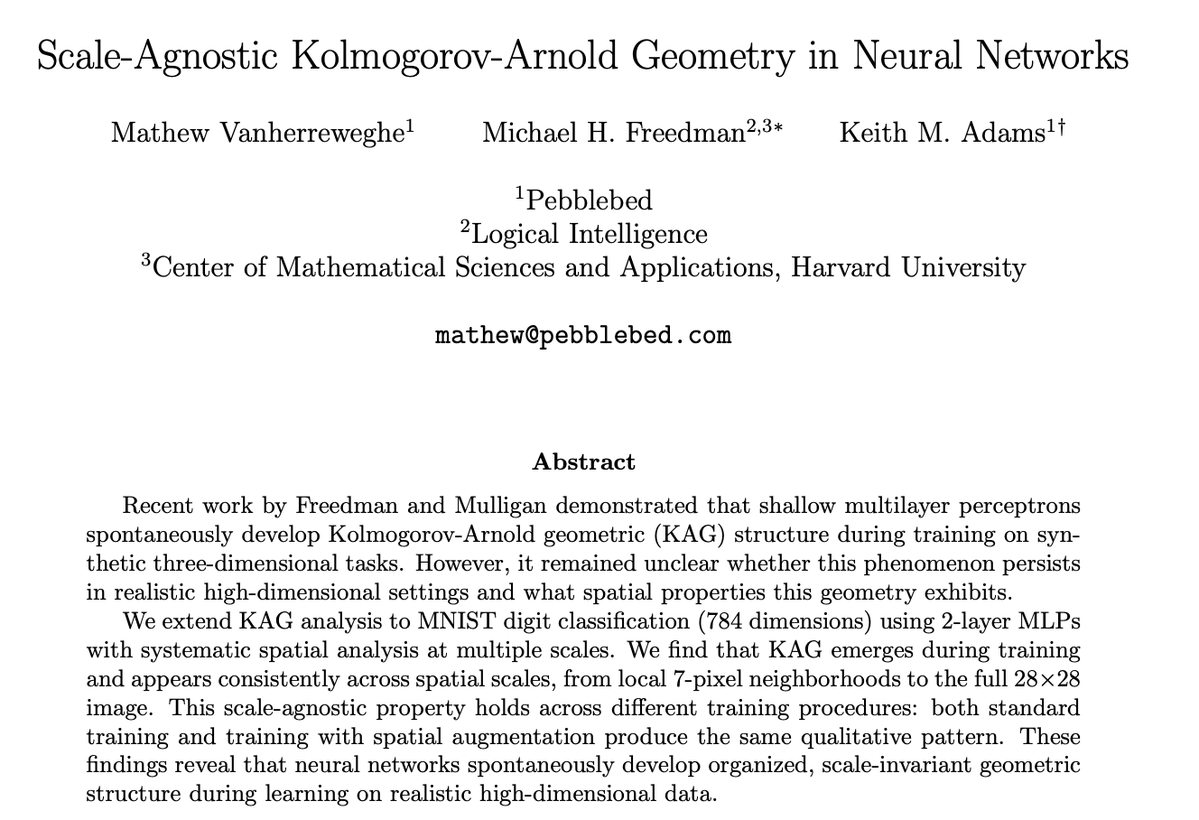

Neural networks don’t learn randomly-shaped representations. They consistently collapse toward structured internal geometry — even in tiny MLPs on MNIST. A new paper shows that Kolmogorov–Arnold–style structure appears early in training, persists across widths, survives spatial…

Not thinking but "sensing." 1. "...the apparent reasoning prowess of Chain-of-Thought (CoT) is largely a brittle mirage." 2. "Together, these findings suggest that LLMs are not principled reasoners but rather sophisticated simulators of reasoning-like text." arxiv.org/html/2508.0119…

😊 Curious whether CoT reasoning in LLMs is truly *reasoning*, or just a *pattern-matching illusion*? 👏 Join us this Saturday at 9 PM EST for a talk on "Is Chain-of-Thought Reasoning of LLMs a Mirage?" by @ChengshuaiZhao. 👻 Register here:…

3. From Imitation to Discrimination: Toward A Generalized Curriculum Advantage Mechanism Enhancing Cross-Domain Reasoning Tasks 🔑 Keywords: Curriculum Advantage Policy Optimization, Reinforcement Learning, Advantage Signals, Generalization, Mathematical Reasoning 💡 Category:…

AI models learn only as well as the data they're trained on. @codatta_io fixes this by treating every sample, label, and validation as a traceable "building block" of knowledge. That means cleaner data, fair rewards for contributors, and AI that performs better in the real world.

Exactly. Curriculum learning is known to help models avoid getting stuck in bad local optima by learning foundational concepts and known priors first, then progressively increasing the signal from there. Volume coupled with the right degree of curation is the recipe, I believe.
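
A minimal Python sketch of the staged idea above, assuming every sample already has a scalar difficulty score. The `curriculum_stages` helper and the sentence-length difficulty proxy are hypothetical stand-ins, not something the post specifies. Samples are sorted easy to hard, and each stage trains on a growing prefix so the foundational examples stay in the pool while harder ones are added.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def curriculum_stages(
    samples: Sequence[T],
    difficulty: Callable[[T], float],
    num_stages: int,
) -> List[List[T]]:
    """Return one training pool per stage, easiest samples first.

    Stage k holds the easiest k/num_stages fraction of the data, so later
    stages keep the earlier (foundational) samples and add harder ones.
    """
    if num_stages < 1:
        raise ValueError("num_stages must be at least 1")
    ordered = sorted(samples, key=difficulty)  # easy -> hard
    stages = []
    for k in range(1, num_stages + 1):
        cutoff = round(len(ordered) * k / num_stages)
        stages.append(list(ordered[:cutoff]))
    return stages


if __name__ == "__main__":
    # Hypothetical difficulty proxy: number of words in a sentence.
    data = ["a b c d e f", "a", "a b c", "a b", "a b c d"]
    for i, pool in enumerate(curriculum_stages(data, lambda s: len(s.split()), 3), 1):
        print(f"stage {i}: {pool}")
```

Growing a prefix (rather than swapping in only hard samples) is one design choice among several; it keeps easy examples in the mix, which is one simple way to avoid dropping the priors learned early.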

Learning How to Think: Meta Chain-of-Thought (Meta-CoT). Just dropped a new explainer on Meta Chain-of-Thought. Meta-CoT takes normal chain of thought further by helping models actually search and revise their reasoning instead of sticking to one path. The idea is to move…

YouTube: Learning How to Think: Meta Chain-of-Thought (Meta-CoT)

This is fair. I think the canonical continual learning setups get broken down into class-incremental, task-incremental, etc., so depending on the particular definition you're using, it could simply be ImageNet, incremental CIFAR-10, etc. arxiv.org/abs/1606.09282 ecva.net/papers/eccv_20…
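
A small Python sketch of how the class-incremental and task-incremental settings differ, assuming the common "split" protocol in which CIFAR-10's ten classes are carved into five two-class tasks. The split size and the helper names `split_classes` and `candidate_labels` are illustrative assumptions. No images are loaded; the sketch only tracks which labels a model must choose among at test time.

```python
from typing import List


def split_classes(num_classes: int, classes_per_task: int) -> List[List[int]]:
    """Partition class ids 0..num_classes-1 into consecutive, disjoint tasks."""
    return [
        list(range(start, min(start + classes_per_task, num_classes)))
        for start in range(0, num_classes, classes_per_task)
    ]


def candidate_labels(
    tasks: List[List[int]], trained_through: int, test_task: int, scenario: str
) -> List[int]:
    """Labels a model must choose among for a test sample drawn from `test_task`,
    after it has been trained sequentially on tasks 0..trained_through.

    task-incremental:  the task id is given at test time, so only that task's classes compete.
    class-incremental: no task id is given, so every class seen so far competes.
    """
    if not 0 <= test_task <= trained_through < len(tasks):
        raise ValueError("test_task must be a task the model has already trained on")
    if scenario == "task-incremental":
        return list(tasks[test_task])
    if scenario == "class-incremental":
        return [c for task in tasks[: trained_through + 1] for c in task]
    raise ValueError(f"unknown scenario: {scenario!r}")


if __name__ == "__main__":
    tasks = split_classes(10, 2)  # assumed Split CIFAR-10 protocol: 5 tasks x 2 classes
    for scenario in ("task-incremental", "class-incremental"):
        labels = candidate_labels(tasks, trained_through=4, test_task=0, scenario=scenario)
        print(f"{scenario}: choose among {labels}")
```

The class-incremental case is the harder one: classes from early tasks must keep competing with every class added later, which is where catastrophic forgetting shows up most clearly.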

Continual learning in AI means models can learn new tasks over time without forgetting old ones (avoiding "catastrophic forgetting"). The post jokingly claims it's "solved" via this algorithm from online convex optimization theory, which updates predictions by following…

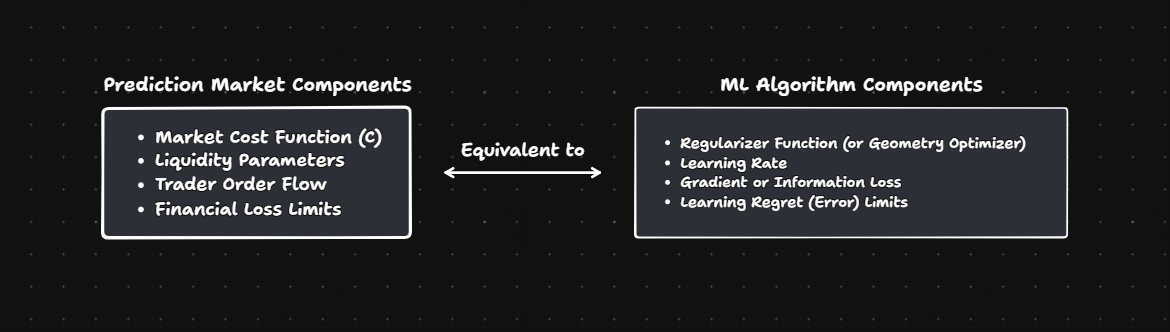

5\ Computer science theory shows that prediction markets based on cost-function market makers (CFMs) can be formally mapped to a learning algorithm called Follow-The-Regularized-Leader (FTRL). See the picture below.
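
One standard instance of this CFM to FTRL correspondence is the logarithmic market scoring rule (LMSR). Its cost function is `C(q) = b * log(sum_i exp(q_i / b))`, and its prices are the gradient of `C`, i.e. a softmax of the outstanding shares `q`. FTRL over the probability simplex with a negative-entropy regularizer and learning rate `eta` picks weights proportional to `exp(eta * G_i)`, where `G` is the cumulative gain vector. Setting `eta = 1/b` and `G = q` makes the two coincide. The Python sketch below checks this numerically; the function names and numbers are illustrative, and the general mapping in the thread may cover other cost functions and regularizers.

```python
import math
from typing import List, Sequence


def lmsr_cost(q: Sequence[float], b: float) -> float:
    """LMSR cost function C(q) = b * log(sum_i exp(q_i / b)), computed stably."""
    m = max(q)
    return m + b * math.log(sum(math.exp((qi - m) / b) for qi in q))


def lmsr_prices(q: Sequence[float], b: float) -> List[float]:
    """Instantaneous prices: the gradient of C(q), i.e. softmax(q / b)."""
    m = max(q)
    exps = [math.exp((qi - m) / b) for qi in q]
    z = sum(exps)
    return [e / z for e in exps]


def ftrl_entropic(gains: Sequence[float], eta: float) -> List[float]:
    """FTRL on the simplex with a negative-entropy regularizer.

    argmax_w <w, G> - (1/eta) * sum_i w_i * log(w_i) has the closed form
    w_i proportional to exp(eta * G_i).
    """
    m = max(gains)
    weights = [math.exp(eta * (g - m)) for g in gains]
    z = sum(weights)
    return [w / z for w in weights]


if __name__ == "__main__":
    b = 10.0                 # liquidity parameter (illustrative value)
    q = [12.0, 3.0, -5.0]    # outstanding shares per outcome (illustrative)
    print("LMSR prices: ", lmsr_prices(q, b))
    print("FTRL weights:", ftrl_entropic(q, eta=1.0 / b))
    # A trader buying one share of outcome 0 pays the change in the cost function.
    print("cost of 1 share of outcome 0:", lmsr_cost([13.0, 3.0, -5.0], b) - lmsr_cost(q, b))
```

Read this way, the market maker's share vector plays the role of the learner's cumulative gains, and the liquidity parameter `b` is the inverse learning rate: a deeper market moves its prices more slowly in response to each trade.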

Desired form of continual learning: in conversation with a model, you give it feedback on an output; it permanently internalizes that feedback and generalizes it to other situations the way a human would, without context-window limitations, and preserving learnings…

✅ CT provably modulates the curvature of the model function, thereby controlling downstream generalization & robustness. ✅ The method is grounded in spline theory of deep learning, making it simple and interpretable.

Continued Pre-training (CPT) had minimal effect on CLS-only models but substantially improved entity-aware architectures. The replacement strategy generalized better to natural text than boundary marking, likely due to reduced reliance on lexical identity.