#efficientml search results

"Want to make your Large #ML Model training cheaper & faster but don't know where to start?" Look no further! @bartoldson (@Livermore_Comp), @davisblalock (@MosaicML), & I went through hundreds of papers to compose a comprehensive document on #EfficientML Here is TL;DR: [1/6]

![bkailkhu's tweet image](https://pbs.twimg.com/media/FiRB5sqWQAAiCJd.png)

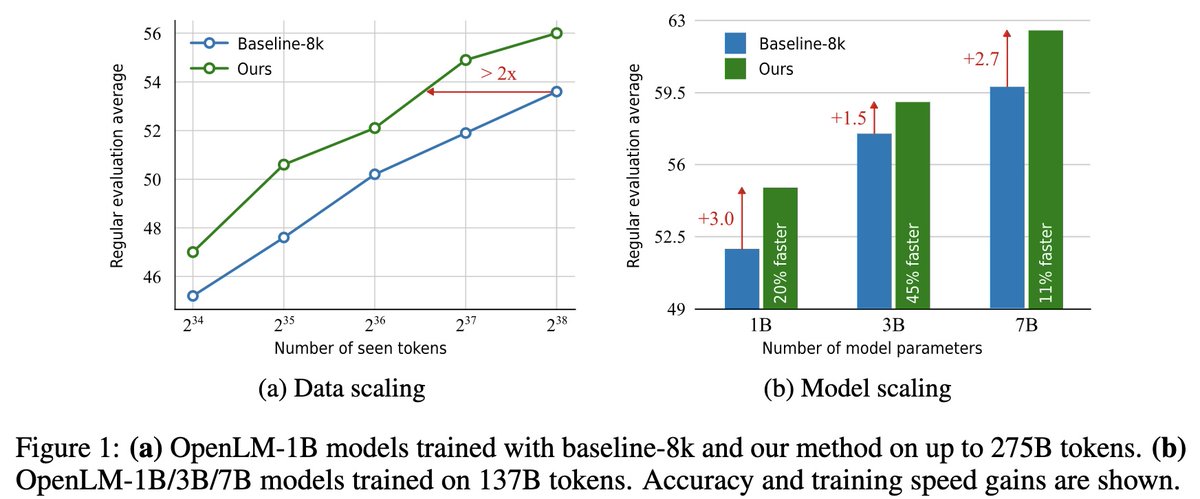

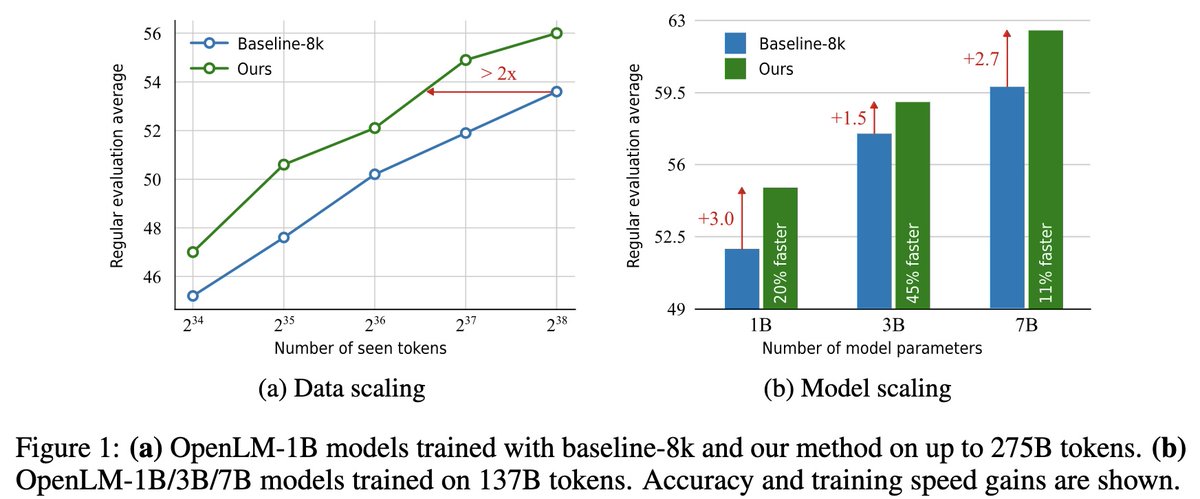

Check out ‘Dataset Decomposition’, a new paper from the Machine Learning Research team @Apple that improves the training speed of LLMs by up to 3x: arxiv.org/abs/2405.13226 Hadi Pouransari et al. #EfficientML #AI #ML #Apple

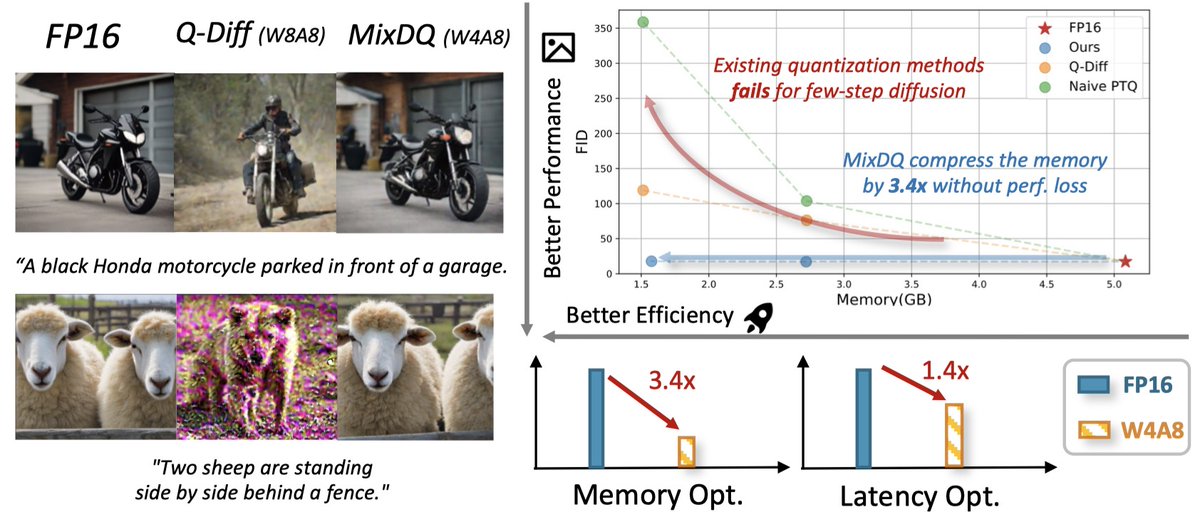

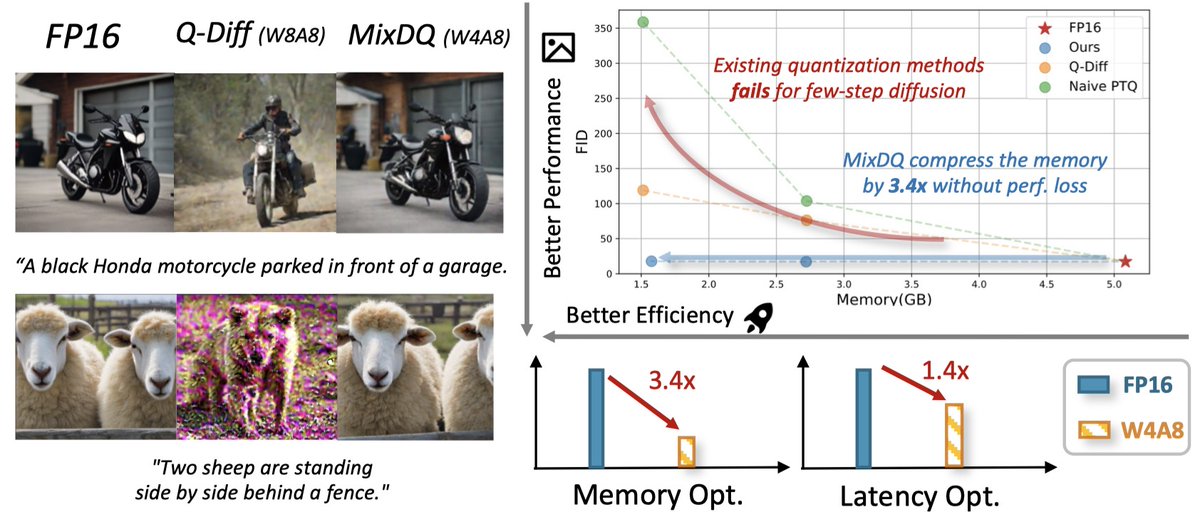

Having trouble running large text-to-image models (e.g., SDXL) on a desktop GPU due to memory constraints? We introduce MixDQ, a quantization method that achieves a 2-3x memory reduction and a 1.45x end-to-end latency speedup without performance loss. (1/3) #arxiv #efficientML #stablediffusion

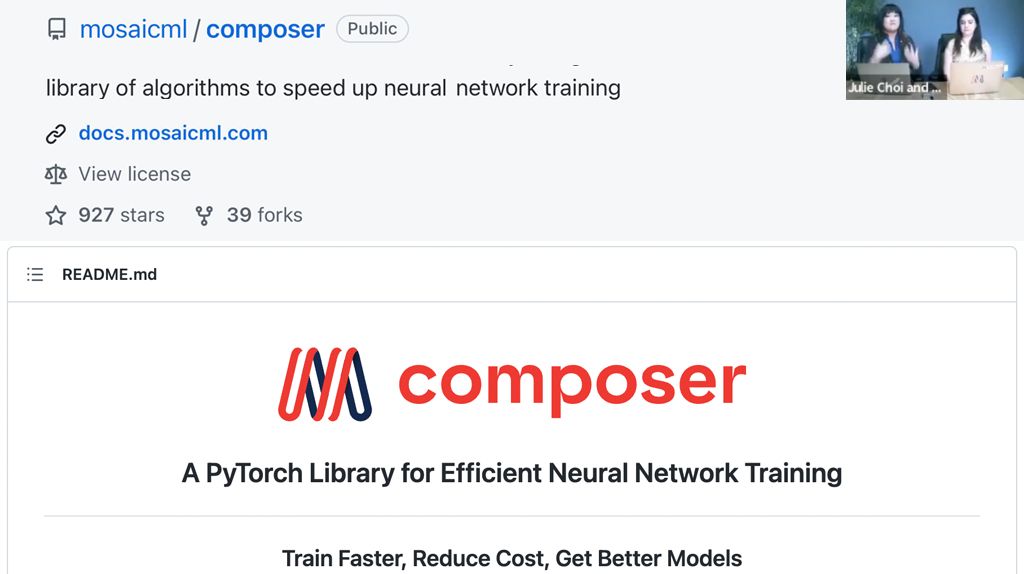

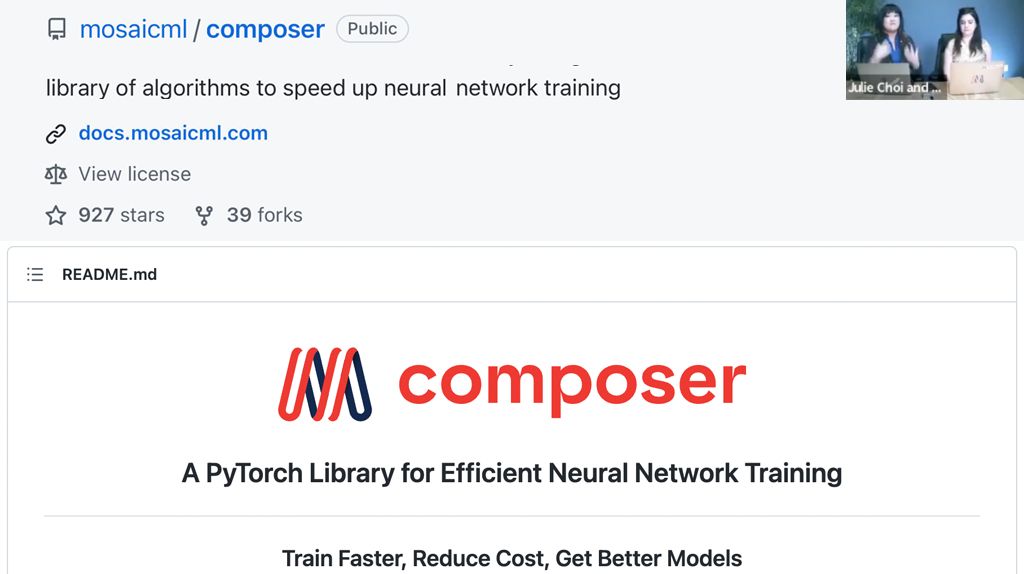
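The thread does not describe how MixDQ itself works. As a generic illustration of why lower-bit weights save memory, here is a minimal sketch of symmetric per-tensor quantization in plain Python, plus the storage arithmetic for a hypothetical mix of 8-bit and 4-bit layers. The function names and the 50/50 bit-width split are assumptions made for illustration; they are not MixDQ's scheme or its reported configuration.

```python
def quantize_symmetric(weights, num_bits=8):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats; per-element error is at most about scale / 2."""
    return [v * scale for v in q]


def average_bits(bit_widths, param_counts):
    """Parameter-weighted average bit width across layers."""
    total_bits = sum(b * n for b, n in zip(bit_widths, param_counts))
    return total_bits / sum(param_counts)


def memory_reduction(baseline_bits, bit_widths, param_counts):
    """How many times smaller the weights are than a uniform baseline precision."""
    return baseline_bits / average_bits(bit_widths, param_counts)


if __name__ == "__main__":
    w = [0.5, -1.0, 0.25, 0.03]
    q, s = quantize_symmetric(w, num_bits=8)
    print(q, dequantize(q, s))
    # Hypothetical model: half the parameters at 8 bits, half at 4 bits, vs. FP16.
    print(memory_reduction(16, [8, 4], [1_000_000, 1_000_000]))
```

Uniform 8-bit storage halves FP16 weight memory, and the hypothetical 8/4-bit mix gives 16 / 6, roughly 2.67x. This ignores activations, scale storage, and runtime buffers, so real end-to-end savings will differ.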

Laura Florescu (ML Researcher) and Julie Choi (CGO) talk about their unique journeys to ML careers and how #efficientML is the goal of the Composer library on @GitHub, which trains vision and speech models up to 4x faster @MosaicML. girlgeek.io/top-10-tech-ta…

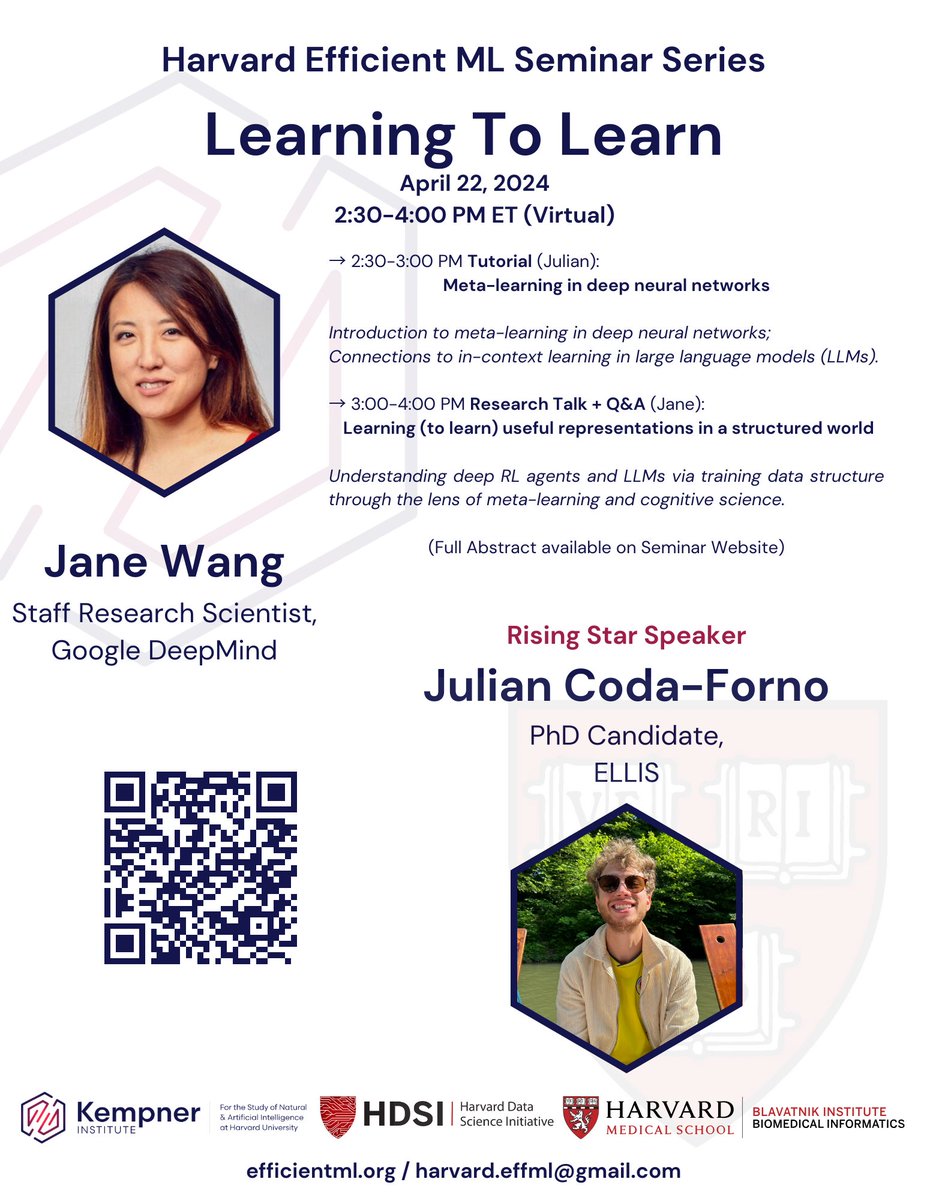

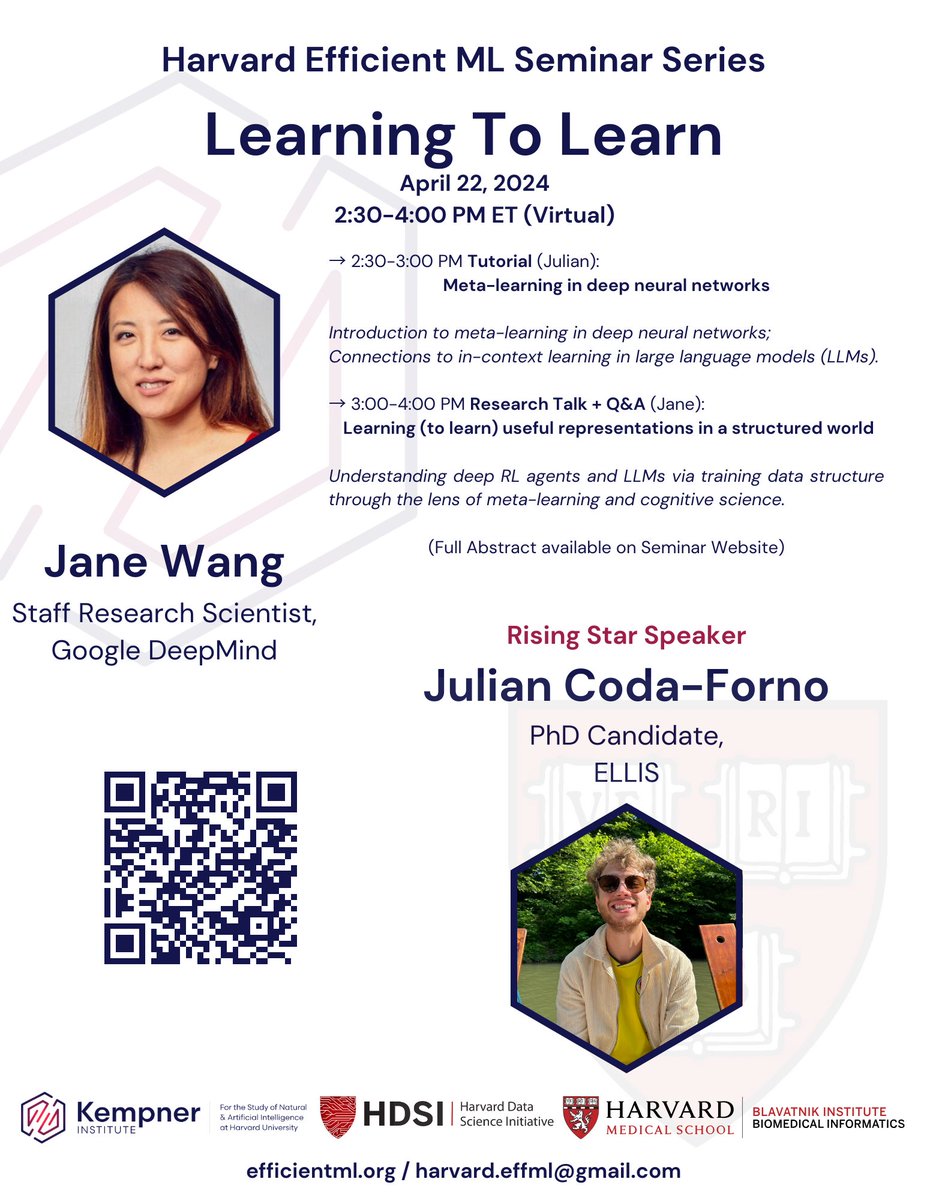

🚨Announcing the next Harvard #efficientml seminar on Learning to learn, featuring @janexwang and Rising Star @juliancodaforno ! 🧠 ➡️ April 22, 2:30 ET 📅Registration: tinyurl.com/yyzt9c96 🔗 Website: efficientml.org @harvard_data @HarvardDBMI @KempnerInst

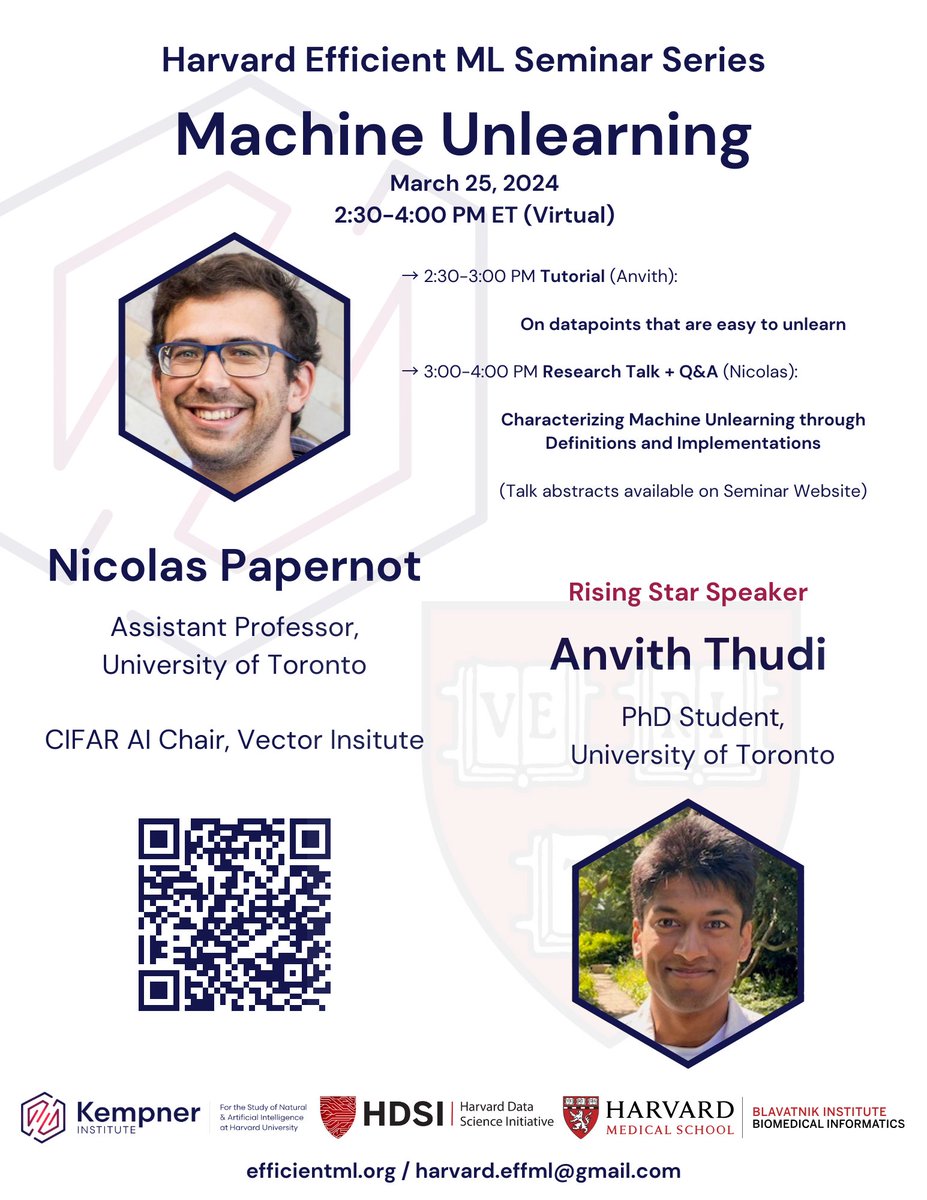

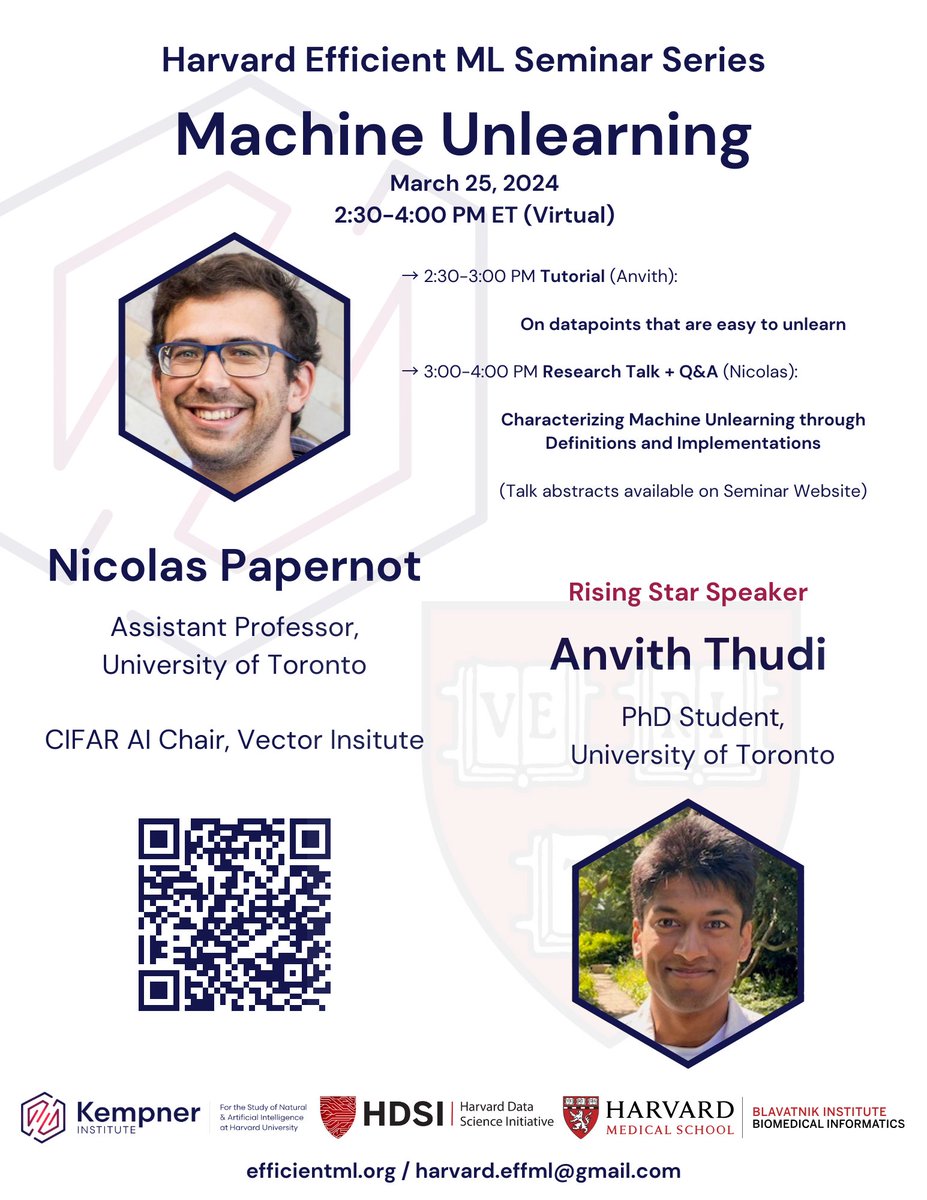

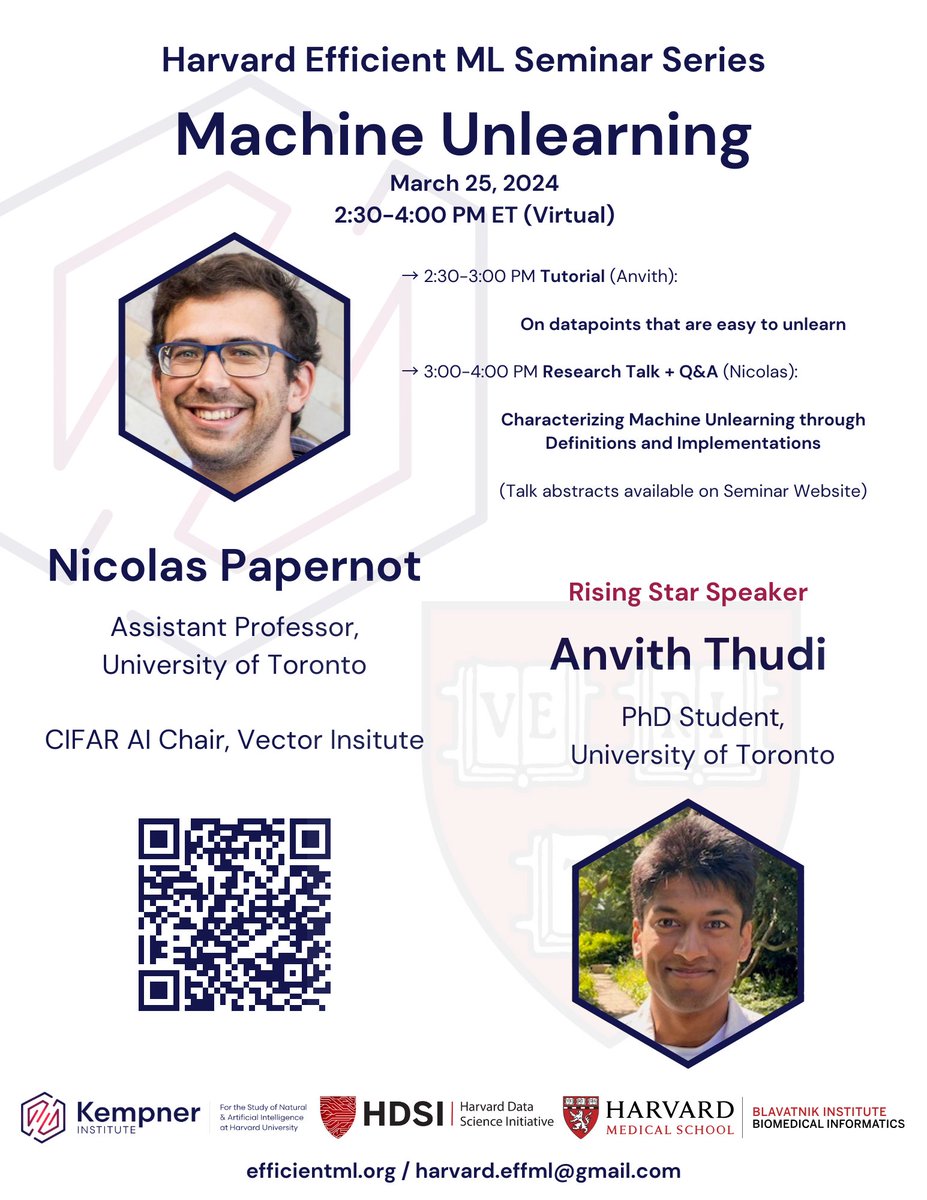

🚨Announcing the next Harvard #efficientml seminar on Machine Unlearning, featuring @NicolasPapernot and Rising Star Anvith Thudi! 🧠 ➡️ March 25 @ 2:30pm ET 📅 Registration: tinyurl.com/5n8kke2a 🔗 Website: efficientml.org @harvard_data @HarvardDBMI @KempnerInst

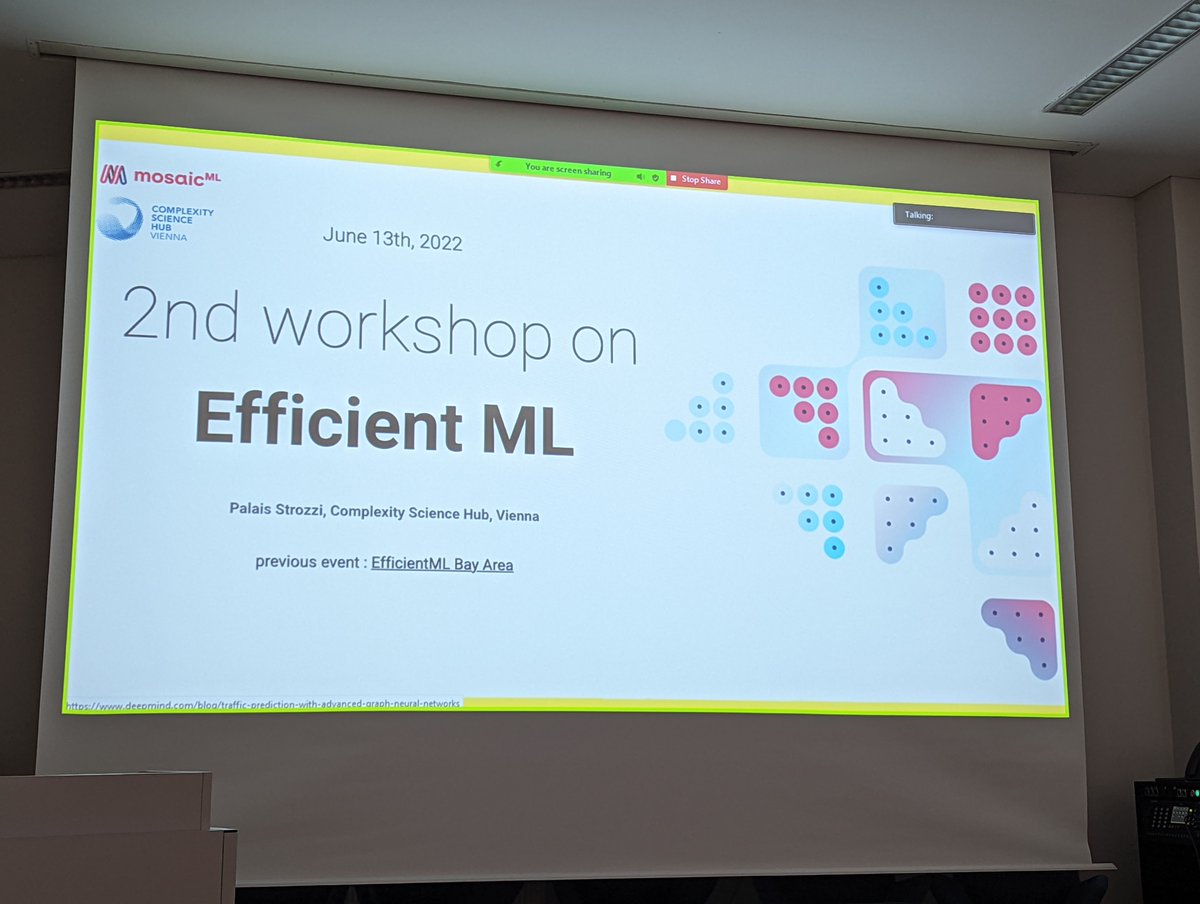

It has been a week, but we're still excited about the connections we made at #MLSys2022. Thanks again to the entire team at @MLSysConf for bringing the community together. Thanks to all who attended our #EfficientML Social event. We can't wait for the next one!

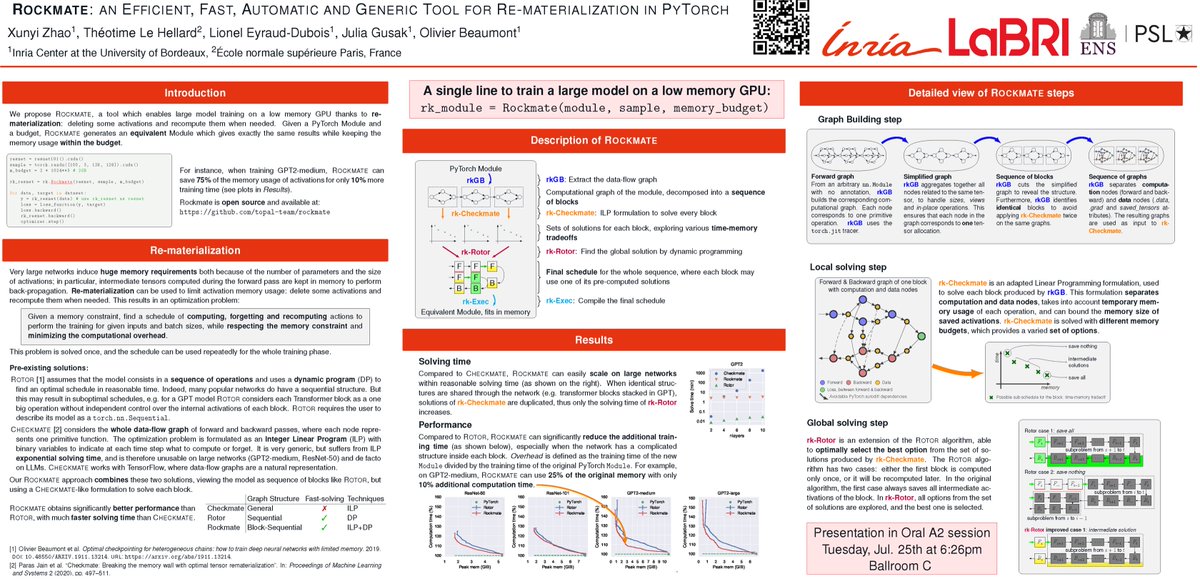

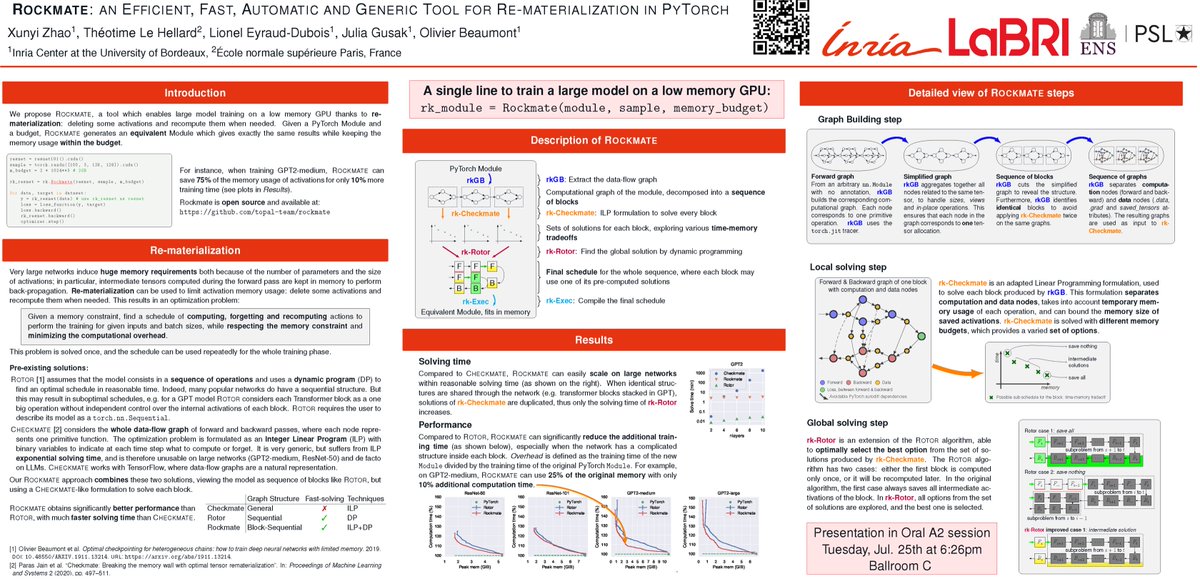

Thrilled to have two papers presented at @icmlconf! Dive in and explore our research on memory-efficient training of neural networks! #ICML2023 #EfficientML

Heading to #NeurIPS2022 in a few weeks? Laissez les bons temps rouler - and join us at our #efficientml social on Weds 11/30! RSVP today - spots are limited: eventbrite.com/e/mosaicml-soc…

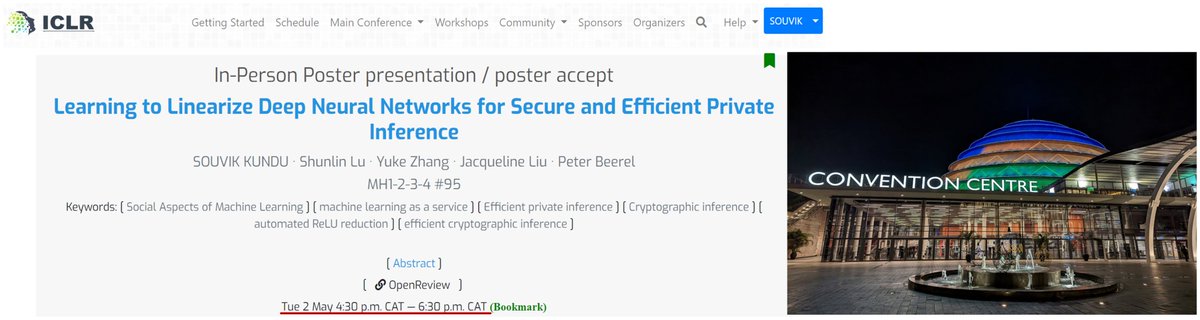

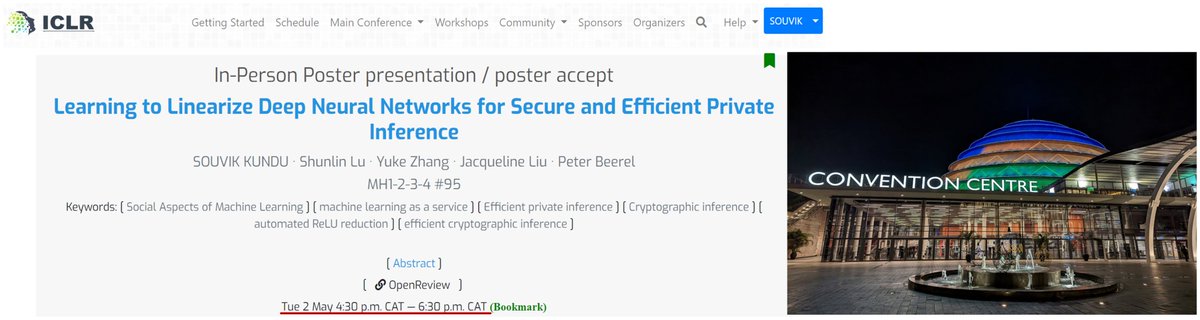

#ICLR2023 Hi from Kigali, Rwanda!! Presenting our work "Learning to Linearize DNNs" today in person at 4:30 pm CAT! Attending @iclr_conf in person? Feel free to stop by and chat. #privateML #efficientML #LinearizedDNN

Built on PyTorch with inspiration from DeepSeek Physical AI and Sundew adaptive gating. Tagging the ML community: @karpathy @AndrewYNg @ylecun @hardmaru @fchollet @soumithchintala Would love your thoughts on pushing this to ImageNet/LLM scale! 🚀 #EfficientML #PyTorch

Happy NYE-Eve! As we get ready to jump into 2023, it's a great time to check out one of the 23 blogs MosaicML shipped in 2022. They're all about #EfficientML (and none were written by GPT-3) --> mosaicml.com/blog

Will be giving a talk at @iiscbangalore later today, with hopes of setting up collaborations related to my ongoing research. #EfficientML #Sustainable #ArtificialIntelligence

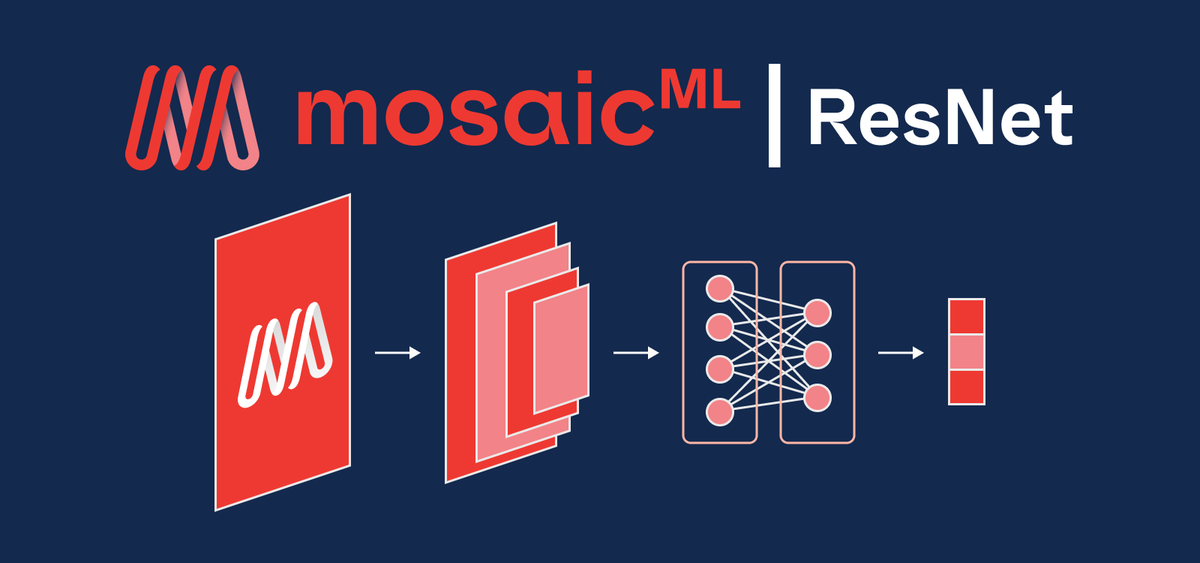

Last week I joined @MosaicML to create a community of researchers for #EfficientML Model Training. Yesterday, I created the prettiest representation of an Image Classification Model to exist on the internet for our new ResNet Blog (mosaicml.com/blog/mosaic-re…) Life is good. 😀

One of my favorite parts of our blog post announcing the @MosaicML ResNet Recipes (mosaicml.com/blog/mosaic-re…) is the recipe card, designed by the talented @ericajiyuen. BTW, these times are for 8x A100.

Heading to #NeurIPS2022 in a few weeks? Laissez les bons temps rouler - and join us at our #efficientml social on Weds 11/30! RSVP today - spots are limited: eventbrite.com/e/mosaicml-soc #ml #MachineLearning #ai

@sarahookr asks the question: is bigger really better when it comes to #AI models? Check out @harvardmagazine's feature on #KempnerInstitute's new #efficientml seminar series, in partnership with @harvardmed and @harvard_data, organized by @schwarzjn_

A driving force of competition between top AI companies is dataset expansion. But is bigger really better? @cohere's @sarahookr argues scaling could be inefficient. @schwarzjn_ of @HarvardDBMI believes smaller models can adapt. From @nhpasquini: #harvard harvardmagazine.com/2024/03/scalin…

🎓PhD students & researchers, 🚀join our public EfficientML Reading Group! 📚 A wide range of topics on ML (resource-)efficiency and beyond. Learn & discuss recent papers with peers! 📖 Check out the schedule: sites.google.com/view/efficient… #EfficientML #MachineLearning #ReadingGroup

📱 Imagine: • Your phone running advanced AI locally • Instant personalization without cloud delays • All-day battery life even with heavy AI use #OnDeviceAI #GoogleAI #EfficientML 👇 Devs, start building: x.com/googleaidevs/s…

Introducing Gemma 3 270M! 🚀 It sets a new standard for instruction-following in compact models, while being extremely efficient for specialized tasks. developers.googleblog.com/en/introducing…

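Structuring AI/ML Projects: From Chaos to Clarity | by Tarun Singh | December 2024 | Towards AI #AIprojects #MLsuccess #EfficientML #StructuredAI prompthub.info/74317/

prompthub.info

Structuring AI/ML Projects: From Chaos to Clarity | by Tarun Singh | December 2024 | Towards AI - PromptHub

Properly structuring AI/ML projects is important for long-term success. Structured projects lead to efficiency, reproducibility, and collaboration…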

Structuring AI/ML Projects: From Chaos to Clarity | by Tarun Singh | December 2024 | Medium #AIProjectStructure #MLSuccess #EfficientML #CollaborativeAI prompthub.info/74147/

prompthub.info

Structuring AI/ML Projects: From Chaos to Clarity | by Tarun Singh | December 2024 | Medium - PromptHub

Summary: Building AI/ML projects properly is the key to success. Data preprocessing, feature engineering, model training

Structuring AI/ML Projects: From Chaos to Clarity | by Tarun Singh | December 2024 | Medium #AIProjects #MLStructure #EfficientML #CollaborativeLearning prompthub.info/74132/

prompthub.info

Structuring AI/ML Projects: From Chaos to Clarity | by Tarun Singh | December 2024 | Medium - PromptHub

Summary: Building AI/ML projects properly is important for long-term success. Data preprocessing, feature engineering, model tra…

When starting from scratch, sometimes watching a class is much more effective than chatting with LLMs. I now have a foundational knowledge of model pruning. Thanks @songhan_mit #EfficientML youtu.be/kgcvCs_1rzY?si…

youtube.com

YouTube

EfficientML.ai Lecture 4 - Pruning and Sparsity Part II (MIT 6.5940,...
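For readers new to the topic, the simplest pruning criterion is magnitude pruning: zero out the weights with the smallest absolute values. The sketch below is a generic, unstructured version in plain Python written for illustration; it is not code from the MIT 6.5940 lecture, and the function name and example weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest |w|.

    Returns the pruned weights and a 0/1 mask (1 = kept).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(round(sparsity * len(weights)))
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    mask = [0 if i in pruned_idx else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2]
    pruned, mask = magnitude_prune(w, sparsity=0.5)
    print(pruned)  # [0.9, 0.0, 0.3, -0.7, 0.0, 0.0]
    print(mask)    # [1, 0, 1, 1, 0, 0]
```

In practice, pruning is usually followed by fine-tuning with the mask held fixed so the remaining weights can recover accuracy.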

[5/6] This research demonstrates the potential for micro-sized models to deliver mighty performance in always-on applications, balancing accuracy, memory usage, and speed. #EdgeAI #EfficientML

5/5 🔍 Want to learn more? Follow me for updates on the preprint and code release. Let's make AI more efficient and accessible for resource-constrained devices together! 🤝📱💻 #EdgeAI #EfficientML

Join the #KempnerInstitute for the next Harvard #efficientML seminar on Machine Unlearning with @NicolasPapernot and Anvith Thudi. March 25 @ 2:30pm. Free, virtual, open to the public.

Leverage this model to get maximum efficiency for high quality images 😍 Want to do the same to your own models automatically? Contact us and get access to the Pruna Model Smasher 💥 #AI #ML #EfficientML #Smash #GenAI

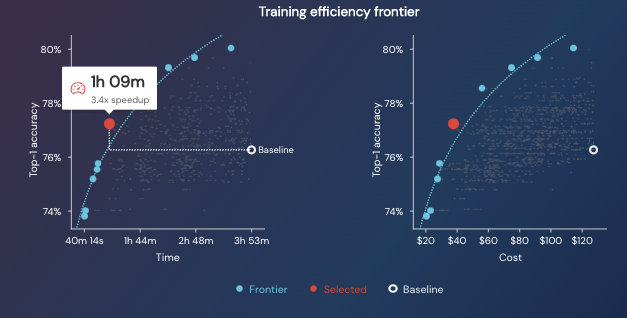

Simply enable the toggle for a category, and you’ll see the graphs update, with color-coded points and Pareto curves for each option. Check it out — we’re excited to share this with you, and can’t wait to hear your feedback! #EfficientML

We measure these time/quality tradeoffs on real cloud instances and maintain a dashboard on the MosaicML Explorer: app.mosaicml.com -- #EfficientML

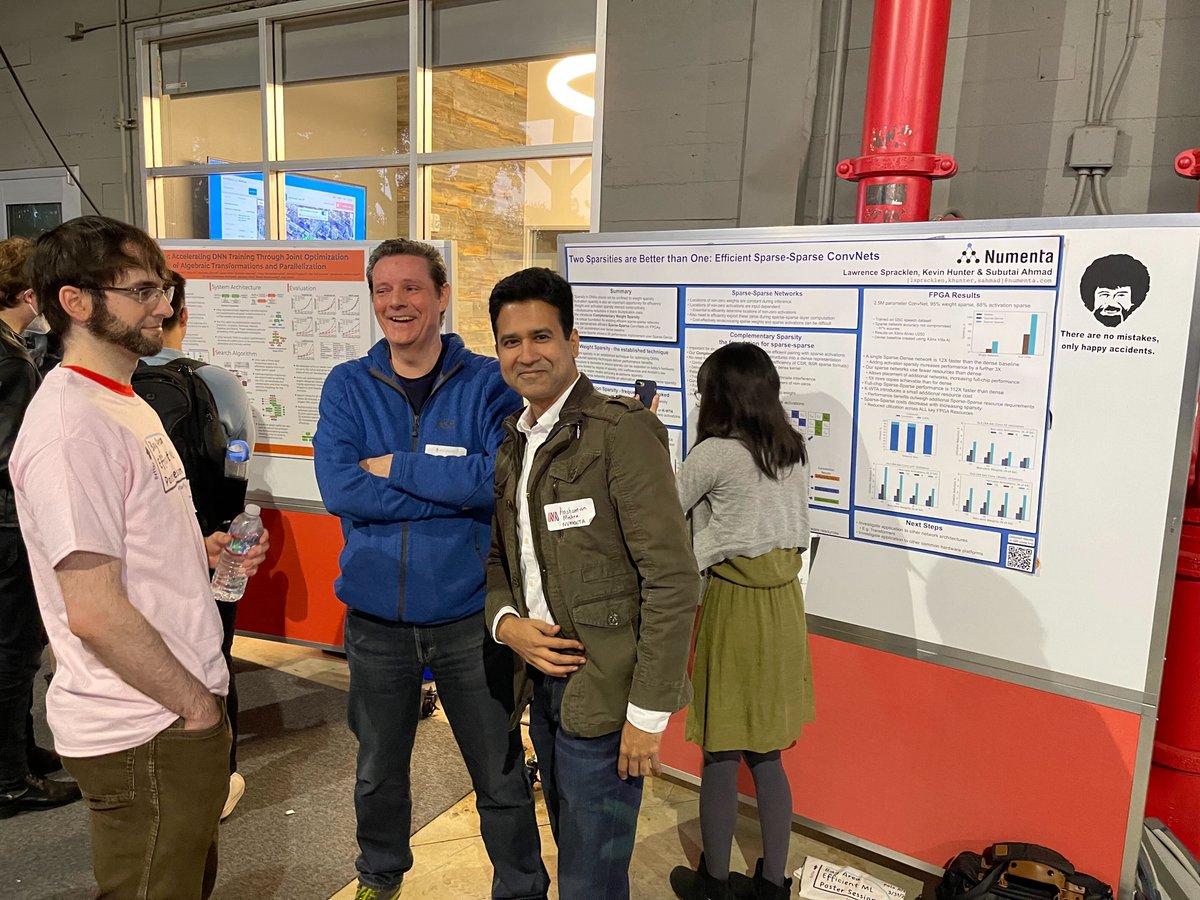
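The Pareto curves on the dashboard keep only the configurations that no other configuration beats on both time and quality. A minimal sketch of that filtering, assuming each run is summarized as a (name, training time, accuracy) tuple where lower time and higher accuracy are better; the run names and numbers are made up and are not MosaicML Explorer data, and the Explorer's own implementation may differ.

```python
from typing import List, Tuple

Run = Tuple[str, float, float]  # (name, time_hours, accuracy)


def pareto_frontier(runs: List[Run]) -> List[Run]:
    """Return runs not dominated on (lower time, higher accuracy), sorted by time.

    A run is dominated if another run is at least as fast and at least as
    accurate, and strictly better on one of the two. Exact duplicates are
    collapsed to a single point.
    """
    # Fastest first; among equal times, most accurate first.
    ordered = sorted(runs, key=lambda r: (r[1], -r[2]))
    frontier: List[Run] = []
    best_acc = float("-inf")
    for run in ordered:
        # Every earlier run is at least as fast, so this run survives only
        # if it is strictly more accurate than all of them.
        if run[2] > best_acc:
            frontier.append(run)
            best_acc = run[2]
    return frontier


if __name__ == "__main__":
    runs = [
        ("A", 1.0, 0.70),
        ("B", 2.0, 0.75),
        ("C", 2.5, 0.74),
        ("D", 4.0, 0.78),
        ("E", 1.5, 0.69),
    ]
    print([name for name, _, _ in pareto_frontier(runs)])  # ['A', 'B', 'D']
```

Sorting once and sweeping keeps this at O(n log n); connecting the surviving points in time order gives a time/quality tradeoff curve.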

Our first in-person poster session in over two years! We had so much fun discussing our research and chatting with others at the #efficientML meetup yesterday. @SubutaiAhmad @spracklen @anshumanmishra In case you missed it, check out our poster here: numenta.com/neuroscience-r…

The first #EfficientML Bay Area Meetup brought together over 190 participants, 12 posters, and 60 organizations, and everyone had a great time. Thanks to @ml_collective and our co-organizers. Until next time!

We are delighted to be participating in the 2022 @MIT Efficient AI and Computing Technologies Conference this week. In their talks, @mcarbin and @juliechoi will share more about how we think about Efficient Deep Learning at scale. ilp.mit.edu/attend/22-effi… #efficientML
