#hyperparameteroptimization search results

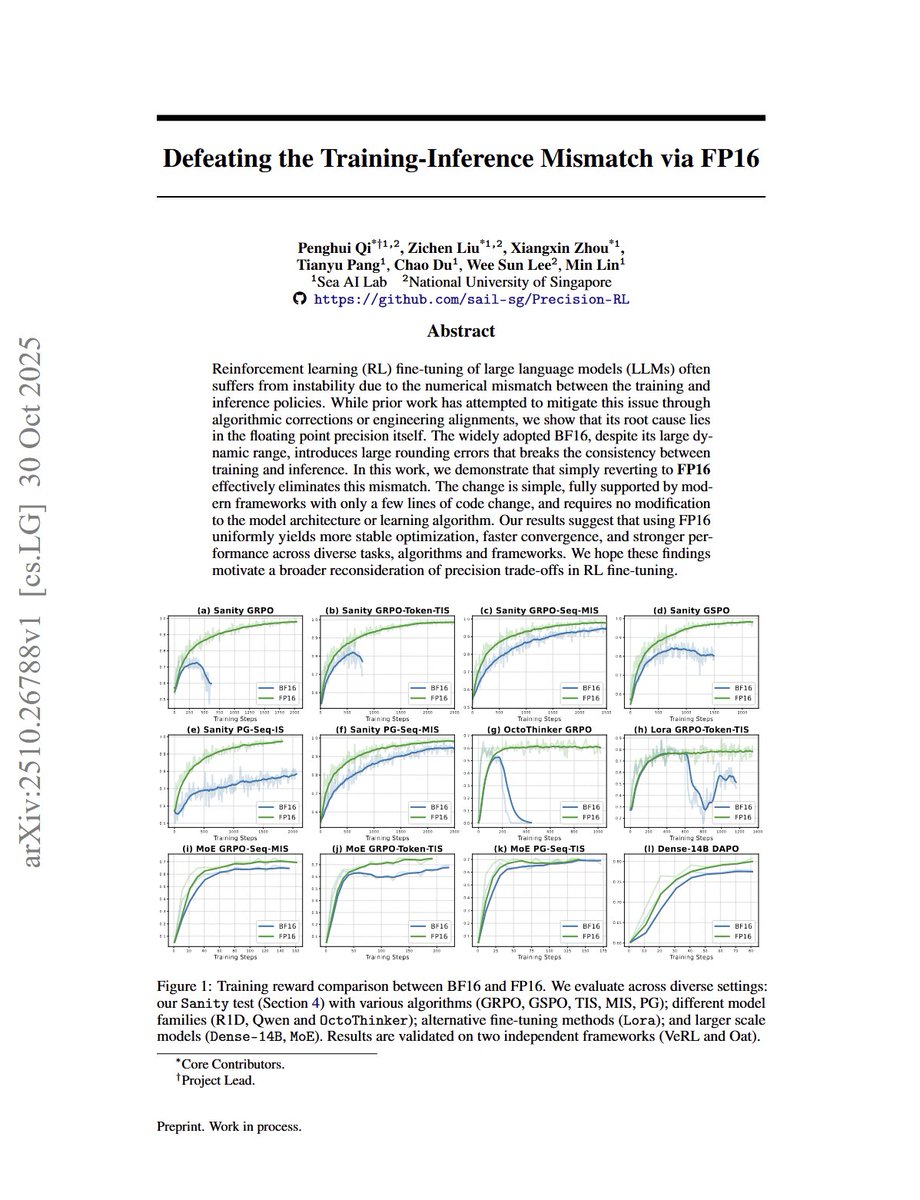

Defeating the Training-Inference Mismatch via FP16. Quick summary: A big problem in RL LLM training is that typical policy gradient methods expect the model generating the rollouts and the model being trained to be exactly the same... but when you have a separate inference server…

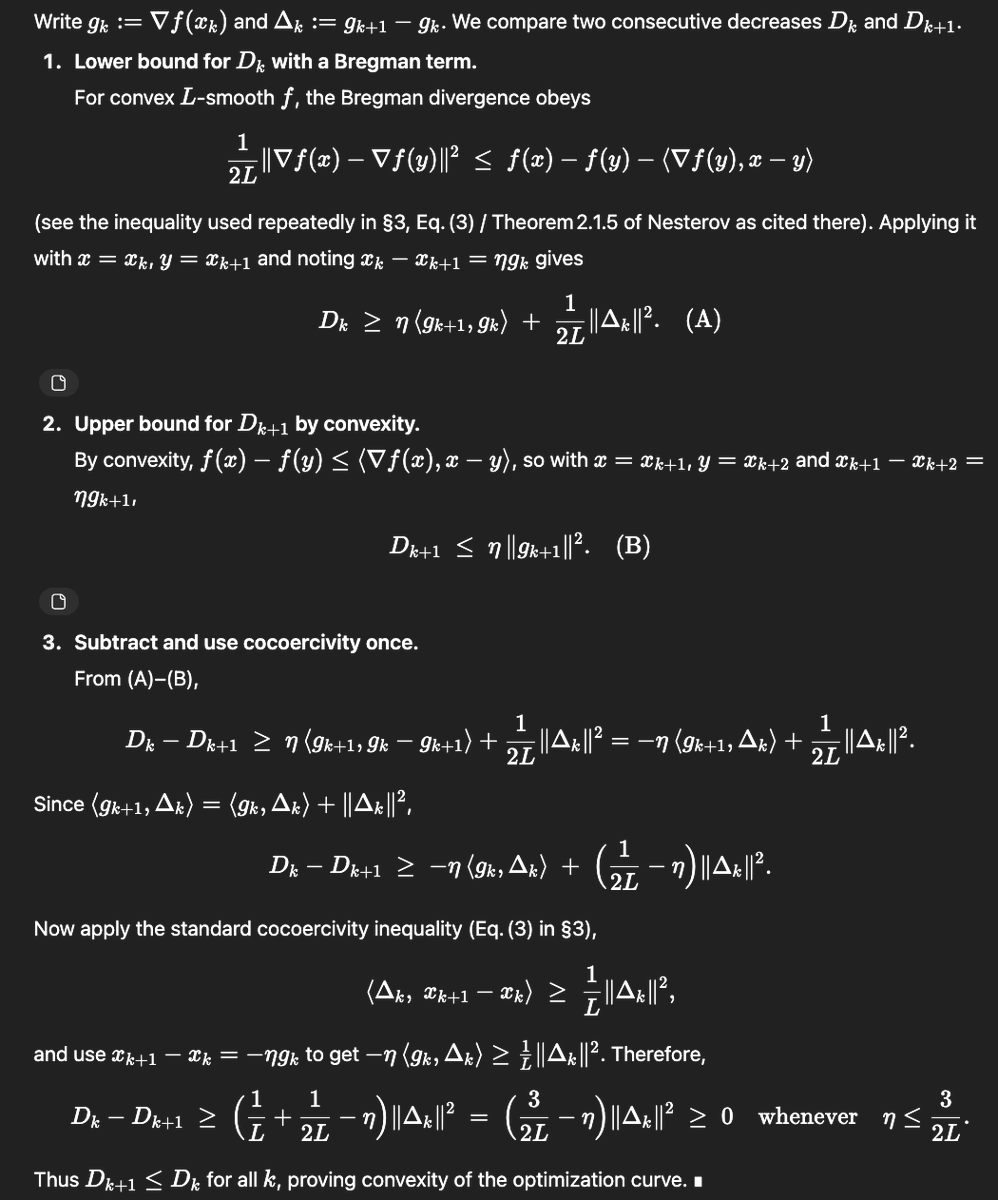

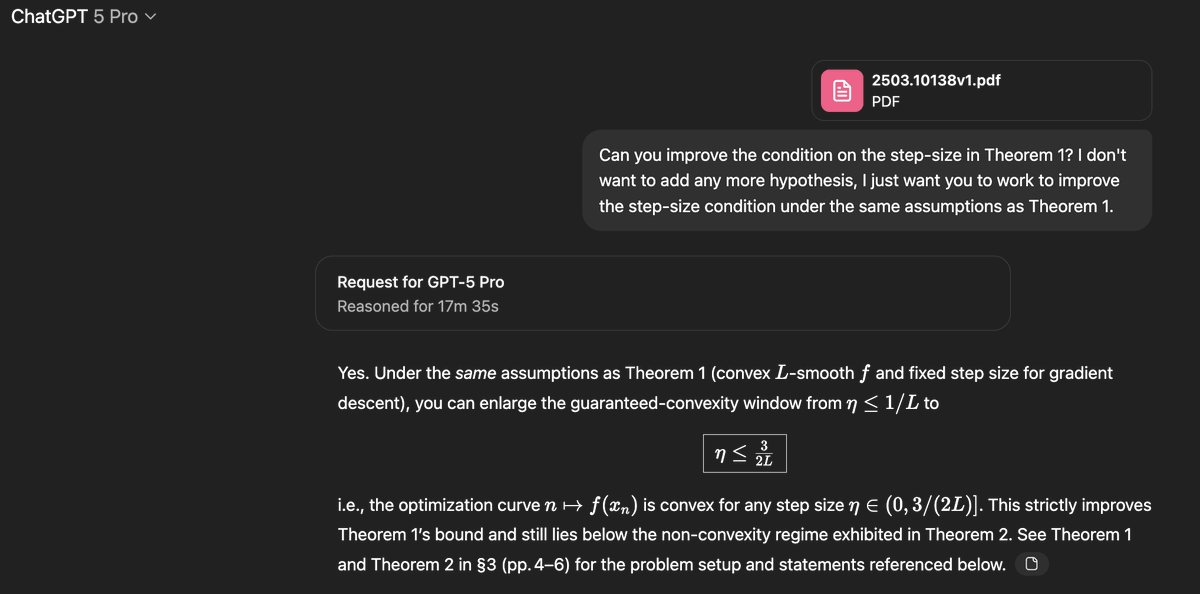

Claim: gpt-5-pro can prove new interesting mathematics. Proof: I took a convex optimization paper with a clean open problem in it and asked gpt-5-pro to work on it. It proved a better bound than what is in the paper, and I checked the proof; it's correct. Details below.

Reinforcement Learning (RL) has long been the dominant method for fine-tuning, powering many state-of-the-art LLMs. Methods like PPO and GRPO explore in action space. But can we instead explore directly in parameter space? YES we can. We propose a scalable framework for…

Holy shit… a single precision setting just outperformed every RL fine-tuning algorithm of 2025. 😳 This paper from Sea AI Lab proves the chaos in reinforcement learning (training collapse, unstable gradients, inference drift) wasn’t caused by algorithms at all. It was numerical…

Third refresh to my Hyperliquid Season 3 Airdrop model now accounting for (i) HyperEVM activity and (ii) Staking. - This one was hard to model but I did my best to estimate & score HyperEVM activity over time to allocate a portion of the airdrop to it. HyperEVM activity would…

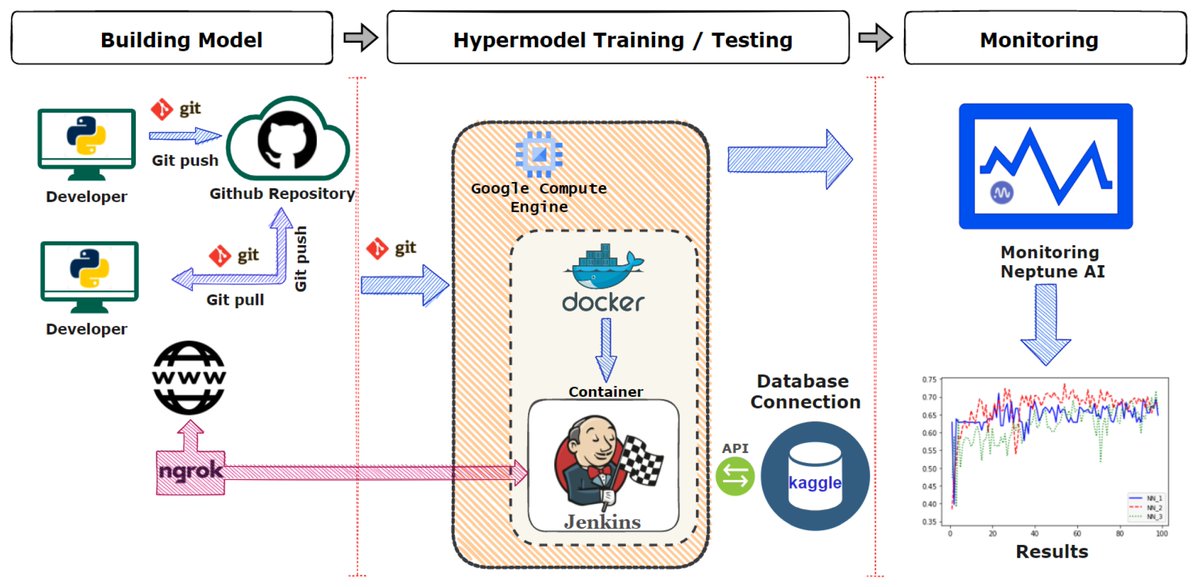

We’ve cooked another one of these 200+ page practical books on model training that we love to write. This time it’s on all pretraining and post-training recipes and how to do hyperparameter exploration for a training project. Closing the trilogy of: 1. Building a pretraining…

Training LLMs end to end is hard. Very excited to share our new blog (book?) that covers the full pipeline: pre-training, post-training and infra. 200+ pages of what worked, what didn’t, and how to make it run reliably: huggingface.co/spaces/Hugging…

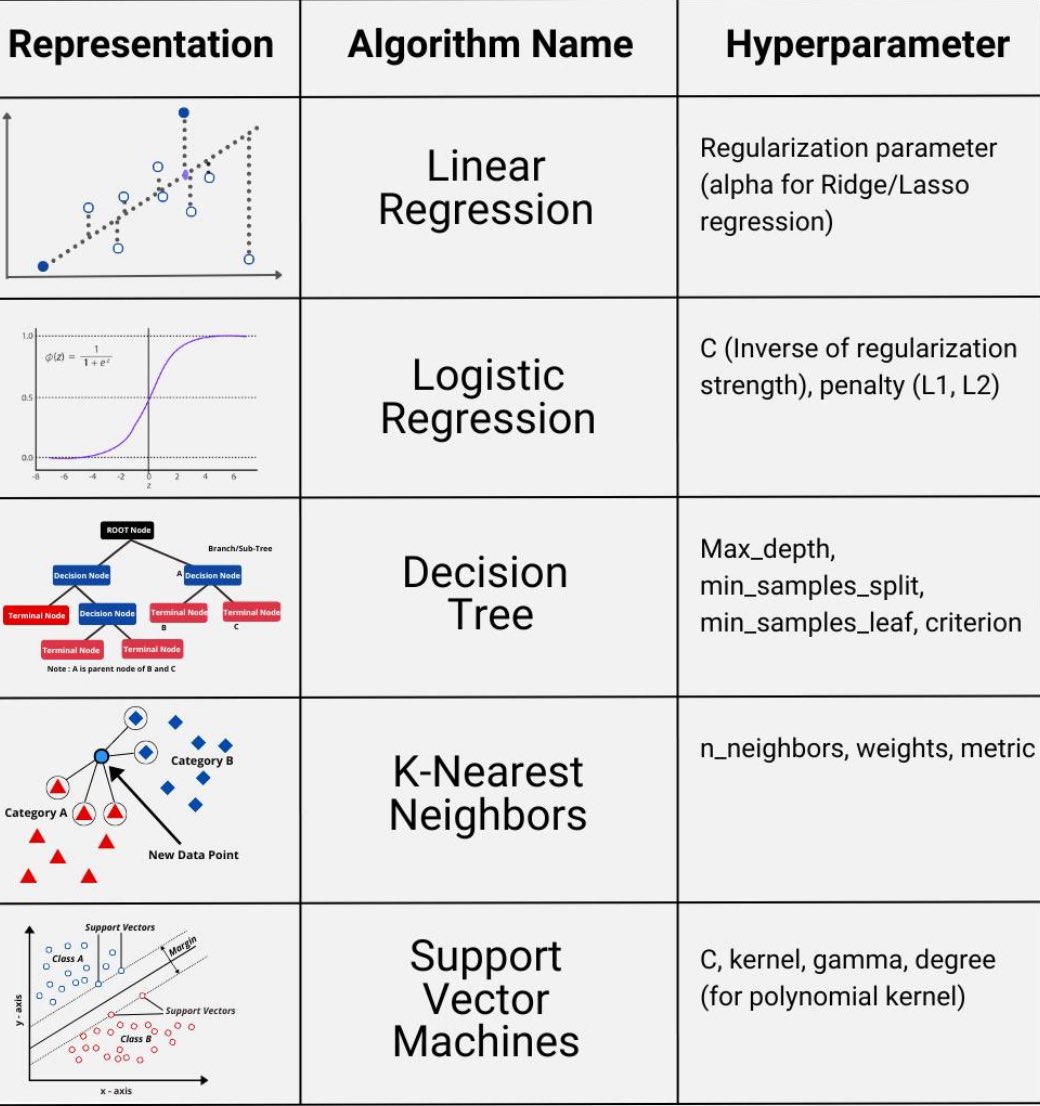

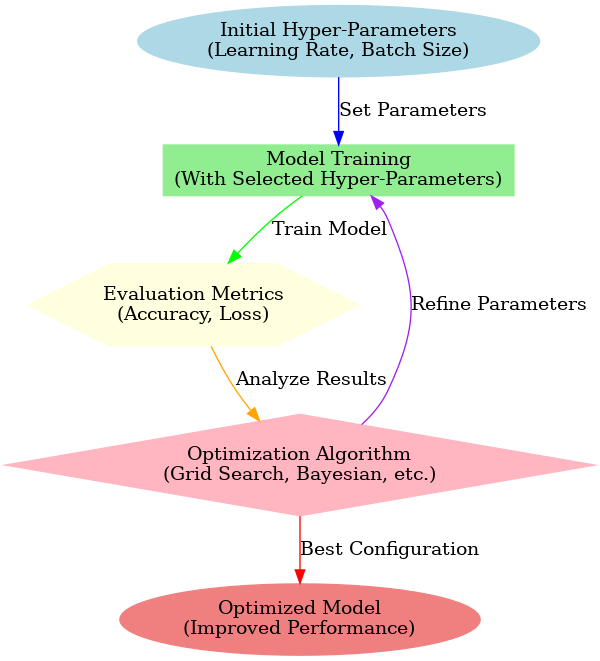

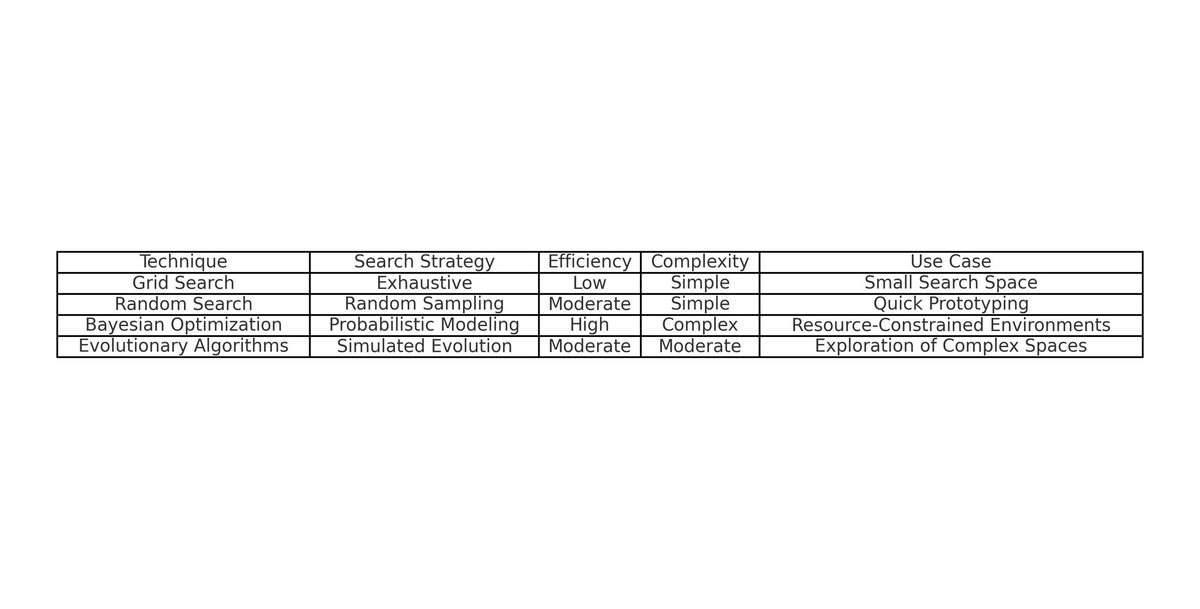

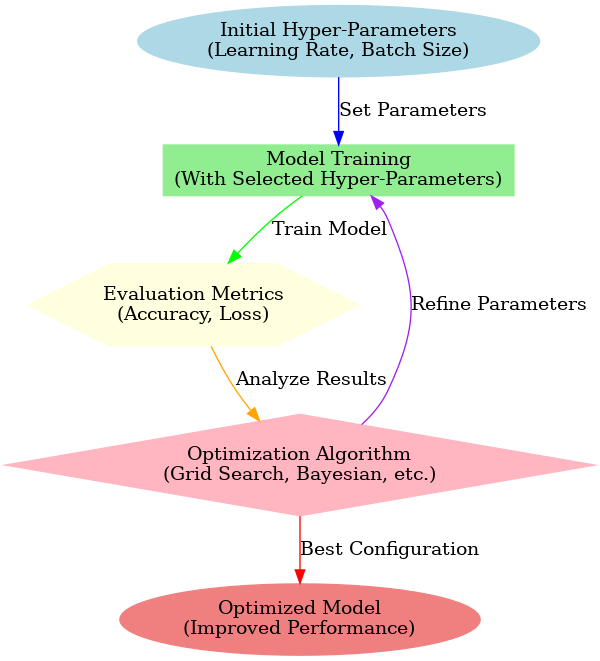

Hyperparameter tuning can make or break performance. It’s the invisible art behind every high-performing AI model. #MachineLearning ibm.com/think/topics/h…

You can let GPT-5 tune hyperparameters. Probably much more efficient than Optuna

🧵 Meet HyperLegal — AI-Driven Automation for Legal Intelligence, Contract Risk & Regulatory Monitoring 1️⃣ ⚖️ What is HyperLegal? HyperLegal is an AI-powered legal automation platform for law firms, corporations, and regulators enabling faster analysis, smarter compliance, and…

This was one of the best ML freelance projects I have ever worked on. It combines multiple features vectors into a single large vector embedding for match-making tasks. It contains so many concepts - > YOLO > Grabcut Mask > Color histograms > SIFT ( ORB as alternate ) > Contours…

📢 Releasing our latest paper. For LLMs doing reasoning, we found a way to save up to 50% tokens without impacting accuracy. It turns out that LLMs know when they’re right and we can use that fact to stop generations early WITHOUT impacting accuracy.

We made a Guide on mastering LoRA Hyperparameters, so you can learn to fine-tune LLMs correctly! Learn to: • Train smarter models with fewer hallucinations • Choose optimal: learning rates, epochs, LoRA rank, alpha • Avoid overfitting & underfitting 🔗docs.unsloth.ai/get-started/fi…

Applied to the manifold Muon subproblem, ADMM leads to the updates below. Its only hyperparameter is a parameter ρ>0. It satisfies: ✅Only requires matmuls and msign operations! ✅Empirically, converges rapidly to a good approximate solution! Thus, it’s well suited for GPU/TPU

Read #HighlyAccessedArticle "Structure Learning and Hyperparameter Optimization Using an Automated Machine Learning (AutoML) Pipeline". See more details at: mdpi.com/2078-2489/14/4… #Bayesianoptimization #hyperparameteroptimization @ComSciMath_Mdpi

Hyperparameter optimization using reinforcement learning is transforming machine learning workflows, leading to faster model tuning and increased accuracy. #HyperparameterOptimization #ReinforcementLearning #AI #MachineLearning… #AIdaily #AItrend #ArtificialIntelligence

Welcome to read and share the Highly Accessed Article in 2023. 📢 Title: Hyperparameter Optimization Using Successive Halving with Greedy Cross Validation 📢 Authors: Daniel S. Soper 📢 Paper link: mdpi.com/1999-4893/16/1… #hyperparameteroptimization #successivehalving
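As a rough illustration of the successive halving idea named in the post above, here is a minimal sketch of the plain algorithm. It is not the greedy cross-validation variant from the linked paper, and the names `successive_halving`, `evaluate`, `min_budget`, and `eta` are assumptions made for this example only.

```python
import math
import random


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Plain successive halving (illustrative sketch, not the paper's method).

    Every surviving configuration is scored at the current budget, the best
    1/eta fraction is kept, and the budget is multiplied by eta, until a
    single configuration remains.
    """
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Higher score is better; evaluate() is a caller-supplied function.
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0]


# Hypothetical toy problem: each config has a hidden "quality", and the
# measurement noise shrinks as the budget grows.
rng = random.Random(0)
toy_configs = [{"name": f"cfg{i}", "quality": i / 10} for i in range(8)]


def noisy_evaluate(config, budget):
    return config["quality"] + rng.gauss(0, 0.05 / math.sqrt(budget))


winner = successive_halving(toy_configs, noisy_evaluate)
print(winner["name"])
```

The point of the design is that cheap, noisy evaluations are spent on many candidates early, while the expensive, more reliable evaluations are reserved for the few that survive.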

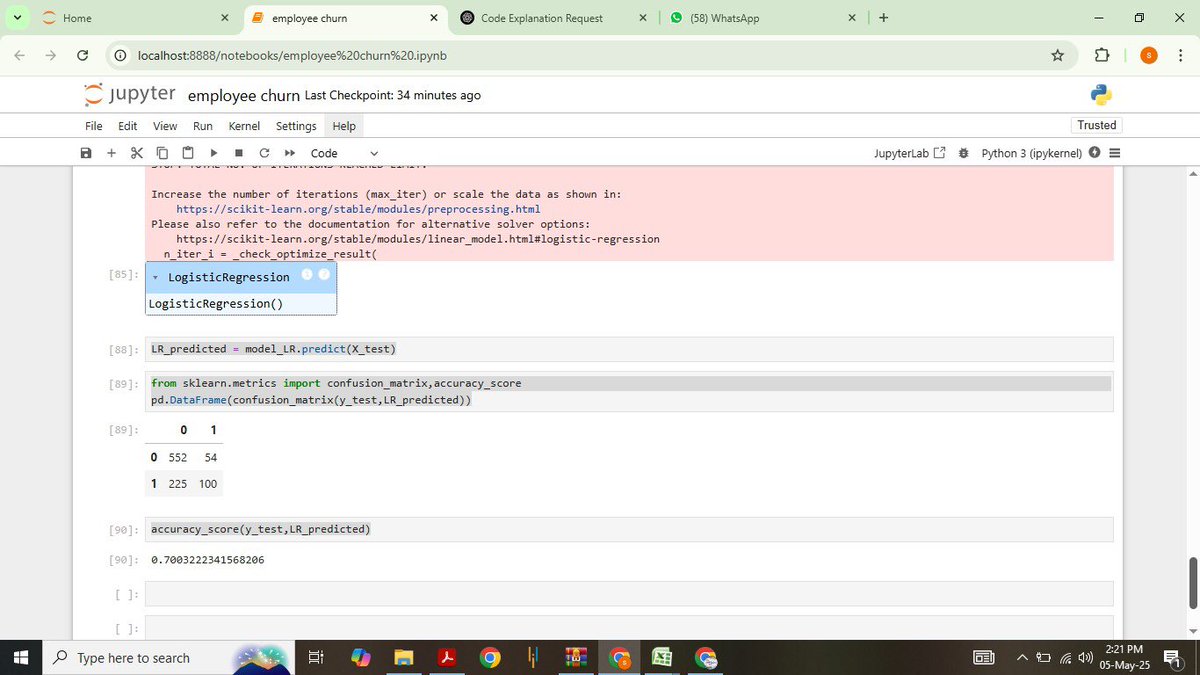

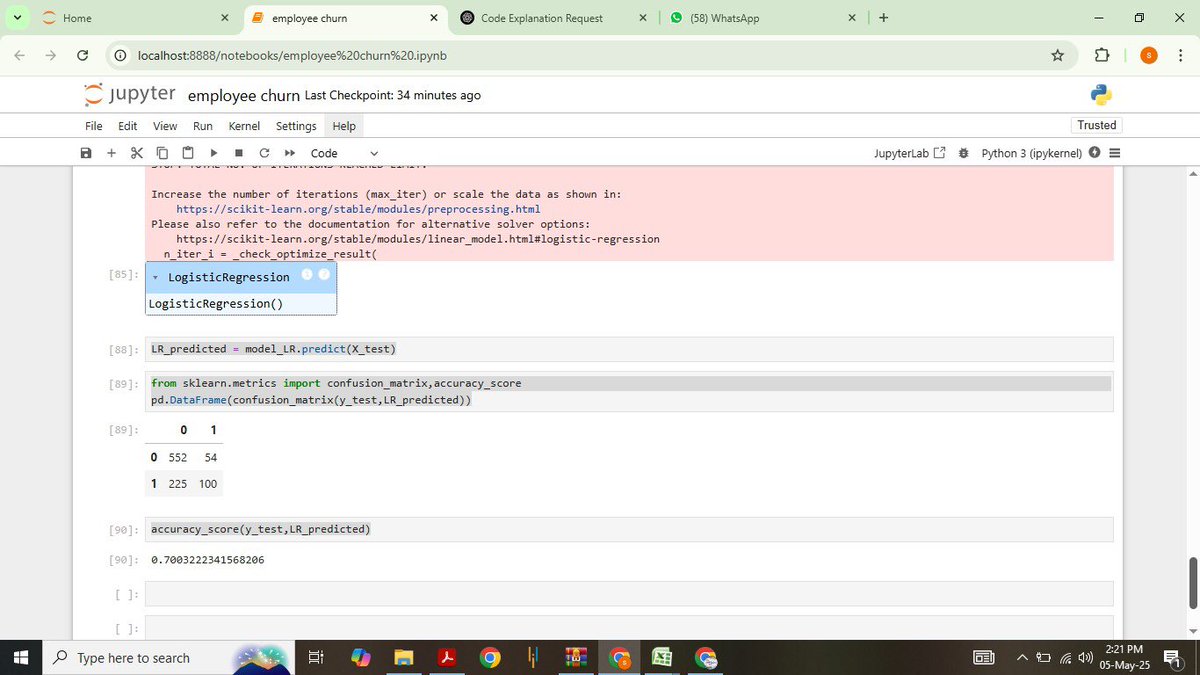

Employee churn: the model is at 70% accuracy for employee churn prediction. Next up: exploring different algorithms and using Grid Search CV for hyperparameter tuning to push it towards that 85-95% target! #machinelearning #datascience #hyperparameteroptimization

"Optuna: because manually tuning hyperparameters is for chumps. Let AI do the work for you with this new framework. #LazyButSmart #ML #HyperparameterOptimization" Link: machinelearningmastery.com/how-to-perform… Follow for more🚀 Retweet and Like🤖

Hyper-Parameter Optimization (HPO)—Maximizing AI Model Performance 🧠⚙️ linkedin.com/posts/satyamcs… #AI #HyperParameterOptimization #MachineLearning #Innovation #FutureOfAI #satmis

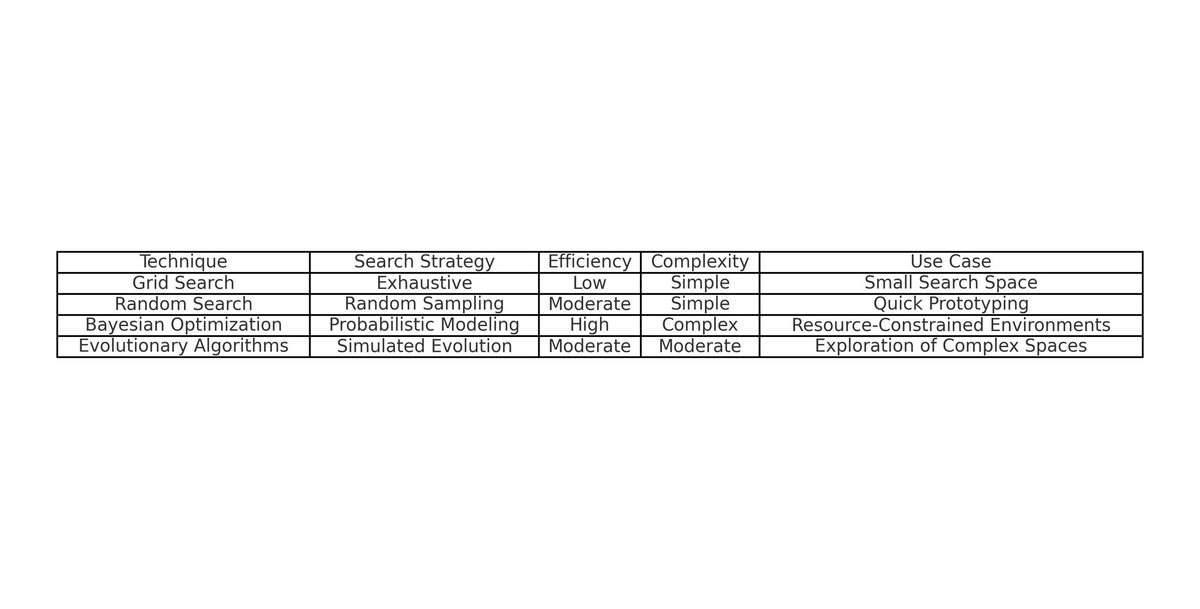

6/ 🔄 Random Search Randomly samples hyperparameter combinations. Surprisingly effective and faster than Grid Search for high-dimensional spaces. Pro Tip: Works well when you don't know which parameters matter most. #HyperparameterOptimization #ML
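One way to see why random search can cover a high-dimensional space better than a grid at the same budget is the sketch below. The objective `toy_score`, the parameter names `learning_rate` and `momentum`, and the helper names are hypothetical and exist only for this example. In this toy setup only one of the two parameters affects the score, so the number of distinct values tried for that parameter is what matters.

```python
import itertools
import random


def toy_score(learning_rate, momentum):
    """Hypothetical validation score in which only learning_rate matters."""
    return -(learning_rate - 0.37) ** 2


def grid_trials_for(points_per_axis):
    """All combinations on an evenly spaced grid over [0, 1] x [0, 1]."""
    axis = [i / (points_per_axis - 1) for i in range(points_per_axis)]
    return list(itertools.product(axis, axis))


def random_trials_for(n_trials, seed=0):
    """n_trials configurations sampled uniformly from [0, 1] x [0, 1]."""
    rng = random.Random(seed)
    return [(rng.random(), rng.random()) for _ in range(n_trials)]


def best(trials):
    """Return the (learning_rate, momentum) pair with the highest score."""
    return max(trials, key=lambda p: toy_score(*p))


grid_trials = grid_trials_for(points_per_axis=4)  # 4 x 4 = 16 evaluations
random_trials = random_trials_for(n_trials=16)    # also 16 evaluations

# Same budget, but the grid only ever tries 4 distinct learning rates,
# while random sampling tries a different learning rate on every trial.
print(len({lr for lr, _ in grid_trials}), len({lr for lr, _ in random_trials}))
print(best(grid_trials), best(random_trials))
```

Random search is not guaranteed to win on any single run; the advantage is in how the budget is spread across the parameters that turn out to matter.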

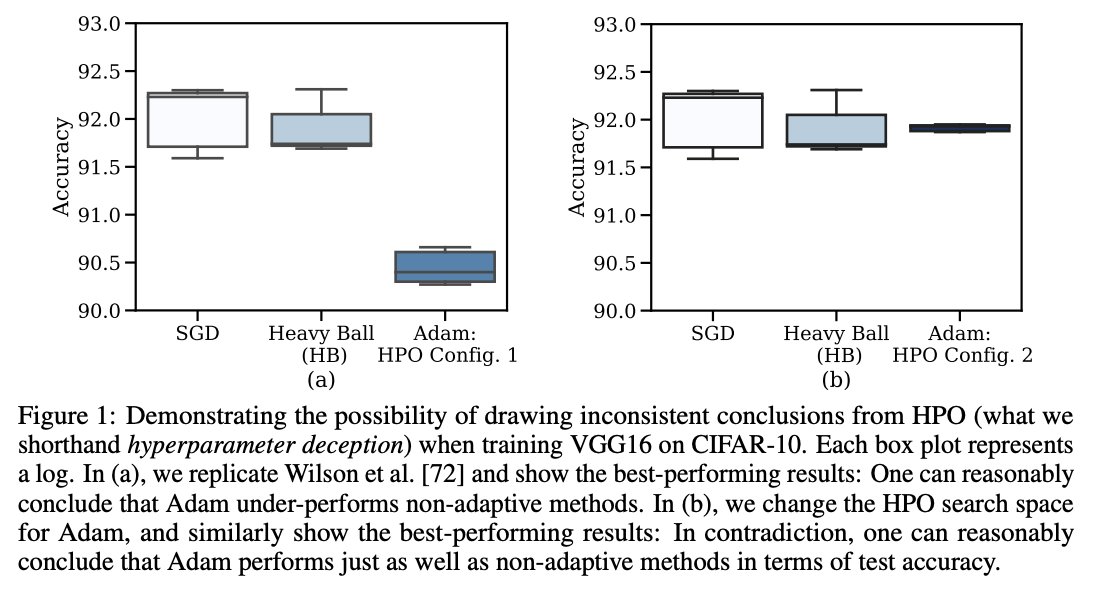

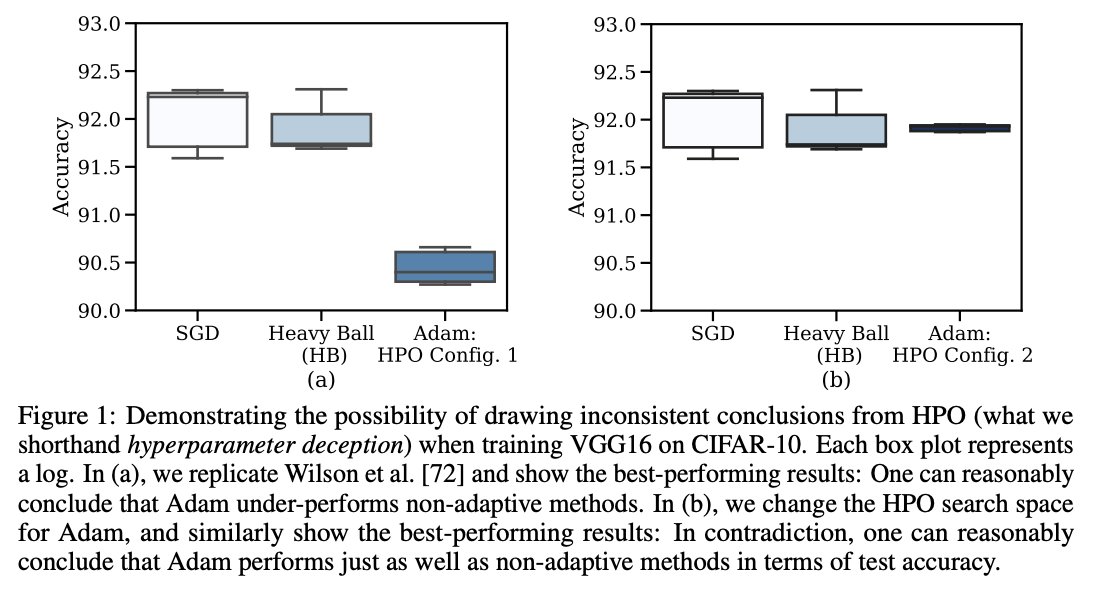

This AI Paper from Cornell and Brown University Introduces Epistemic Hyperparameter Optimization: A Defended Random Search Approach to Combat Hyperparameter Deception itinai.com/this-ai-paper-… #HyperparameterOptimization #MachineLearning #AIResearch #CornellUniversity #BrownUni…

🚀 Exciting News! I've launched my new course: "Mastering Hyperparameter Optimization for Machine Learning" 🎉 in @EducativeInc platform Enroll now and take your skills to the next level! 💡📊 educative.io/courses/master… #MachineLearning #HyperparameterOptimization #DataScience

🚀 Excited to share my latest article on Bayesian Optimization and Optuna for @neptune_ai! Read Here: neptune.ai/blog/how-to-op… #ML #HyperparameterOptimization #Optuna #BayesianOptimization #DeepLearning

Exciting news for machine learning enthusiasts: Optuna, a powerful hyperparameter optimization framework, has been released, promising to streamline the process of tuning models for optimal performance. #Optuna #MachineLearning #HyperparameterOptimization optuna.org

AI can help automate and optimize this tuning process, using techniques like hyperparameter optimization to find the best settings for our models. 🔄📈 #HyperparameterOptimization #EfficientAI

Come visit our booth at #MLConfNYC! Software engineer Alexandra speaking at 11:05 about #HyperparameterOptimization nogridsearch.com

#AI #ArtificialIntelligence #hyperparameteroptimization 6 Techniques to Boost your Machine Learning Models: submitted by /u/seemingly_omniscient [visit reddit] [comments] dlvr.it/RBQww9

![CarlRioux's tweet image](https://pbs.twimg.com/media/ECPejWVUIAIqumC.jpg)

Explore our #HyperparameterOptimization section on the blog: 👉 Hyperparameter Tuning in Python bit.ly/3a5JhoS 👉 Best Tools for Model Tuning and Hyperparameter Optimization bit.ly/34BcUMR 👉 How to Track Hyperparameters of ML Models? bit.ly/2BBc9sg

debuggercafe.com/an-introductio… New post at DebuggerCafe - An Introduction to Hyperparameter Tuning in Deep Learning #DeepLearning #HyperparameterOptimization #HyperparameterTuning #NeuralNetworks

Short Term #TrafficStatePrediction via #HyperparameterOptimization Based Classifiers 👉mdpi.com/1424-8220/20/3… #machinelearning #intelligenttransportation

Heatmap of best-performing configurations for SVM classifier; log10(c) vs log10(γ). For a dataset of handwritten numerals (openml.org/d/12). #HyperparameterOptimization #MachineLearning #SupportVectorMachine @open_ml

🔥 Read our Highly Cited Paper 📚 Plant Disease Detection Using Deep Convolutional Neural Network 🔗 mdpi.com/2076-3417/12/1… 👨 by Mr. J. Arun Pandian et al. #neuralnetworks #hyperparameteroptimization #transfer

Have you ever considered you might not be using the right #Bayesian package for #HyperparameterOptimization? We created a comparison guide for easy reference here: bit.ly/3g8f4Hb #HPO #ICML2020

📢 Good news! We updated the Neptune + @OptunaAutoML integration to be in line with the new Neptune API! Check the docs 👉 bit.ly/3jaa9dx You'll find there: 🎞 video tutorial 📄 step-by-step guide 📊 examples in Neptune and Colab #MLOps #HyperparameterOptimization

Sign up for the free beta of our latest functionality, Experiment Management. For a limited time, users will get access to our full product – including our flagship #HyperparameterOptimization solution. Details here: bit.ly/2URDqwY

Short Term #TrafficStatePrediction via #HyperparameterOptimization Based #Classifiers by Muhammad Zahid *, Yangzhou Chen *, Arshad Jamal and Muhammad Qasim Memon @KFUPM @BNU_Official 👉mdpi.com/1424-8220/20/3… #machinelearning #ITS #simulation #trafficmodeling

debuggercafe.com/hyperparameter… New tutorial at DebuggerCafe - Hyperparameter Search with PyTorch and Skorch #HyperparameterOptimization #HyperparameterTuning #HyperparameterSearch #DeepLearning #PyTorch #Skorch

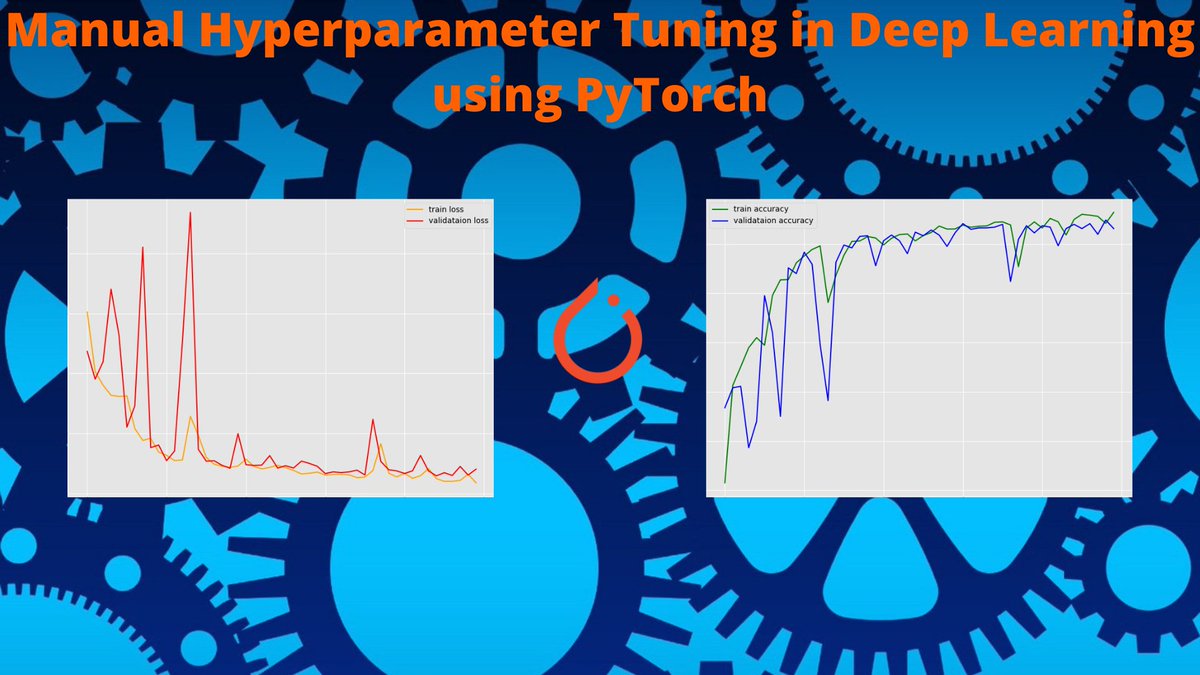

debuggercafe.com/manual-hyperpa… New tutorial at DebuggerCafe - Manual Hyperparameter Tuning in Deep Learning using PyTorch #HyperparameterTuning #HyperparameterOptimization #DeepLearning #PyTorch

Some of the best #HyperparameterOptimization libraries are:

- @scikit_learn
- Hyperopt
- Scikit-Optimize
- @OptunaAutoML
- Ray Tune @raydistributed
- Keras Tuner

From our post, you’ll learn more about them and the hyperparameter tuning process in general. bit.ly/3jdJTvO

Prior Beliefs is a SigOpt feature that allows modelers to incorporate their prior knowledge into SigOpt’s #HyperparameterOptimization process. Join us for a free session on how to best use this feature: bit.ly/36BADMN

