#lineartransformation search results

RT Linear Algebra: Orthogonal Vectors dlvr.it/SlB9NL #orthogonalvector #mathematics #lineartransformation #linearalgebra

4d Vector Decomposition . . . #linearalgebra #lineartransformation #matrices #vectors #vectorspace #3dart #3ddesign #4dvector #4dart #higherdimensions #higherdimensionalart #parametricdesign #creativecoding #visualcoding #matplotlib #matplotlibpyplot #vignette

I reverse engineered the quadratic functions ... that whomever it was ... simplified ... I just undid what he simplified - to see what he was looking at. #ReverseEngineering a #LinearTransformation

Dim and Small Target Detection with a Combined New Norm and Self-Attention Mechanism of Low-Rank Sparse Inversion mdpi.com/1424-8220/23/1… @UCAS1978 #infraredtargetdetection; #lineartransformation

Great video explaining rotation. #LinearTransformation youtu.be/lPWfIq5DzqI

Level up your data science vocabulary: Linear Transformation deepai.org/machine-learni… #MachineLearning #LinearTransformation

deepai.org

Linear Transformation

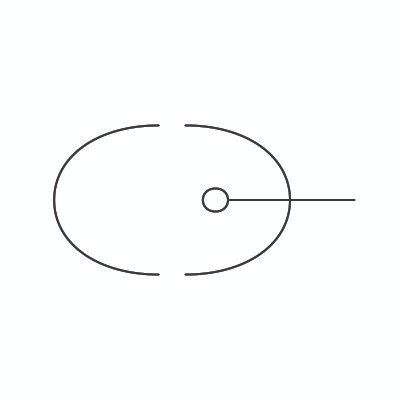

A Linear Transformation, also known as a linear map, is a mapping between two modules (for example, two vector spaces) that preserves the operations of addition and scalar multiplication.

Think of it this way: if you have a vector [1 2] and you apply a #lineartransformation to it, it performs an operation on the vector but still keeps it in the same space. Your vector could get rotated or scaled, for example, but it still stays in the same plane.
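
A minimal sketch of the idea in the post above, assuming the transformation is a 2x2 matrix acting on vectors in the plane. The helper names (`apply`, `rotation`, `scaling`, `close`) and the second vector `w` are illustrative and do not come from any linked page. The sketch rotates and scales the vector [1, 2], then checks the two properties named in the definition, additivity and homogeneity, for the rotation.

```python
import math

def apply(matrix, vector):
    """Multiply a 2x2 matrix (given as a list of rows) by a 2-vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def rotation(theta):
    """Counterclockwise rotation of the plane by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def scaling(k):
    """Uniform scaling of the plane by the factor k."""
    return [[k, 0.0], [0.0, k]]

def close(a, b, tol=1e-9):
    """Compare two vectors component-wise up to a small tolerance."""
    return all(abs(x - y) <= tol for x, y in zip(a, b))

v = [1.0, 2.0]
w = [3.0, -1.0]          # hypothetical second vector, used for the checks
R = rotation(math.pi / 2)

rotated = apply(R, v)            # approximately [-2.0, 1.0]
scaled = apply(scaling(3.0), v)  # [3.0, 6.0]

# Additivity: R(v + w) equals R(v) + R(w).
sum_then_map = apply(R, [a + b for a, b in zip(v, w)])
map_then_sum = [a + b for a, b in zip(apply(R, v), apply(R, w))]
additive = close(sum_then_map, map_then_sum)

# Homogeneity: R(5 v) equals 5 R(v).
homogeneous = close(apply(R, [5.0 * a for a in v]), [5.0 * a for a in rotated])

print(rotated, scaled, additive, homogeneous)
```

Both results are still 2-vectors, which is the sense in which the vector "stays in the same plane". Rotation and scaling are only two examples; a shear or a reflection passes the same two checks.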

Definition of Eigenfunction deepai.org/machine-learni… #LinearTransformation #Eigenfunction

Definition of Eigenspace deepai.org/machine-learni… #LinearTransformation #Eigenspace

deepai.org

Eigenspace

An eigenspace is the set of all eigenvectors associated with a given eigenvalue of a linear transformation, together with the zero vector.
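
As a rough illustration of the eigenspace definition, the sketch below uses a hypothetical 3x3 diagonal matrix `A` whose eigenvalue 2 has a two-dimensional eigenspace. The helper names `matvec` and `in_eigenspace` are made up for this example. It checks that a linear combination of two eigenvectors for the same eigenvalue stays in that eigenspace, while a sum of eigenvectors for different eigenvalues does not.

```python
def matvec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def in_eigenspace(matrix, vector, eigenvalue, tol=1e-9):
    """True if matrix * vector equals eigenvalue * vector.

    The zero vector passes this test, matching the convention that an
    eigenspace contains the zero vector.
    """
    image = matvec(matrix, vector)
    return all(abs(y - eigenvalue * x) <= tol for x, y in zip(vector, image))

# Hypothetical example matrix: eigenvalue 2 (twice) and eigenvalue 5.
A = [[2.0, 0.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 0.0, 5.0]]

u = [1.0, 0.0, 0.0]   # eigenvector for eigenvalue 2
v = [0.0, 1.0, 0.0]   # another eigenvector for eigenvalue 2
e = [0.0, 0.0, 1.0]   # eigenvector for eigenvalue 5

# A combination of u and v is again in the eigenspace for eigenvalue 2.
combo = [3.0 * a - 4.0 * b for a, b in zip(u, v)]
same_eigenvalue = in_eigenspace(A, combo, 2.0)

# Mixing eigenvectors of different eigenvalues leaves both eigenspaces.
mixed = [a + b for a, b in zip(u, e)]
mixed_in_2 = in_eigenspace(A, mixed, 2.0)
mixed_in_5 = in_eigenspace(A, mixed, 5.0)

print(same_eigenvalue, mixed_in_2, mixed_in_5)
```

This is why the eigenspace is defined per eigenvalue: each one is closed under addition and scalar multiplication on its own.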

From the Machine Learning & Data Science glossary: Orthogonal Matrix deepai.org/machine-learni… #LinearTransformation #OrthogonalMatrix

deepai.org

Orthogonal Matrix

An orthogonal matrix is a square matrix in which all of the vectors that make up the matrix are orthonormal to each other. In terms of geometry, orthogonal means that two vectors are perpendicular to...
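
One way to test the property described in this card is to check that the transpose of the matrix times the matrix itself gives the identity, which holds exactly when the columns are orthonormal. The sketch below is an assumption-laden illustration in plain Python: the helper names (`transpose`, `matmul`, `is_orthogonal`) and the sample matrices are hypothetical and not taken from the linked glossary page.

```python
import math

def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]

def is_orthogonal(m, tol=1e-9):
    """True if m is square and transpose(m) * m is the identity matrix."""
    n = len(m)
    if any(len(row) != n for row in m):
        return False  # only square matrices can be orthogonal
    product = matmul(transpose(m), m)
    return all(
        abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
        for i in range(n)
        for j in range(n)
    )

theta = 0.7  # arbitrary angle chosen for the example
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]
shear = [[1.0, 1.0],
         [0.0, 1.0]]

print(is_orthogonal(rotation))  # rotation columns are orthonormal
print(is_orthogonal(shear))     # shear columns are not perpendicular
```

A rotation passes the check and a shear fails it, since the shear's columns are neither perpendicular nor both of unit length.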

Neural Differential Equations for Learning to Program Neural Nets Through Continuous Learning Rules deepai.org/publication/ne… by Kazuki Irie et al. #OrdinaryDifferentialEquation #LinearTransformation

deepai.org

Neural Differential Equations for Learning to Program Neural Nets Through Continuous Learning Rules

06/03/22 - Neural ordinary differential equations (ODEs) have attracted much attention as continuous-time counterparts of deep residual neura...

Momentum Transformer: Closing the Performance Gap Between Self-attention and Its Linearization deepai.org/publication/mo… by Tan Nguyen et al. #ResidualConnections #LinearTransformation

deepai.org

Momentum Transformer: Closing the Performance Gap Between Self-attention and Its Linearization

08/01/22 - Transformers have achieved remarkable success in sequence modeling and beyond but suffer from quadratic computational and memory c...

Waveformer: Linear-Time Attention with Forward and Backward Wavelet Transform deepai.org/publication/wa… by Yufan Zhuang et al. including @ZihanWa54274484 #FourierTransform #LinearTransformation

deepai.org

Waveformer: Linear-Time Attention with Forward and Backward Wavelet Transform

10/05/22 - We propose Waveformer that learns attention mechanism in the wavelet coefficient space, requires only linear time complexity, and ...

Biologically-Plausible Determinant Maximization Neural Networks for Blind Separation of Correlated Sources deepai.org/publication/bi… by Bariscan Bozkurt et al. #LinearTransformation #NeuralNetwork

Check out the groundbreaking paper "XrayGPT: Chest Radiographs Summarization using Medical Vision-Language Models" 🔥 Lowkey Goated When Open Source Is The Vibe 🔥 by @raoanwer, et al. #LinearTransformation deepai.org/publication/xr…

deepai.org

XrayGPT: Chest Radiographs Summarization using Medical Vision-Language Models

06/13/23 - The latest breakthroughs in large vision-language models, such as Bard and GPT-4, have showcased extraordinary abilities in perfor...

I'm excited to share my latest article: "Linear Transformation: The Responsiveness of User Interface in Flutter". Read the article: medium.com/@zacchaeusoluw… #Flutter #Mathematics #LinearTransformation #UI #UX #Responsiveness #Code #Program #Tech

Rotating shapes on TI-30X / TI-36X Pro / CASIO fx-991EX TURN ON SUBS and use Translate! youtu.be/e5sdqKryutI @TICalculators @CasioMaths @CasioEducation @CasioEduFrance @cp4at #LinearAlgebra #LinearTransformation #matrix #vector

🤯 Stop what you're doing right now and check out this mind-blowing linear transformation! 🤯 #LinearTransformation deepai.org/machine-learni…
