#llm4code search results

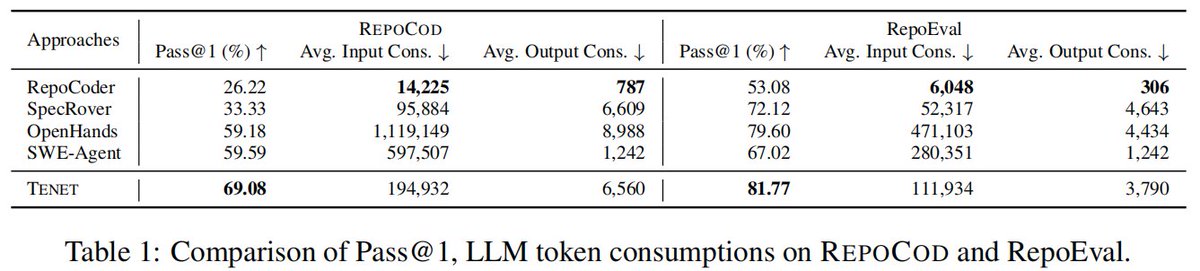

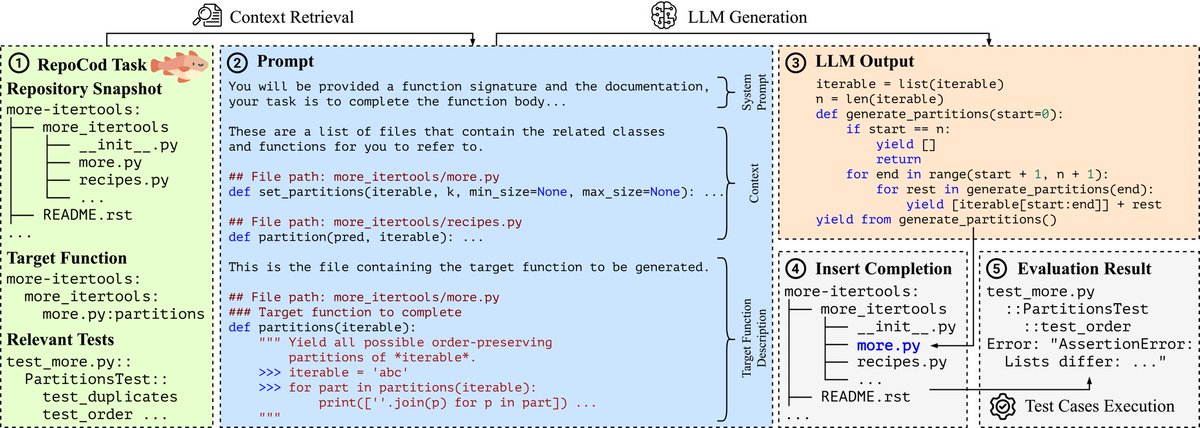

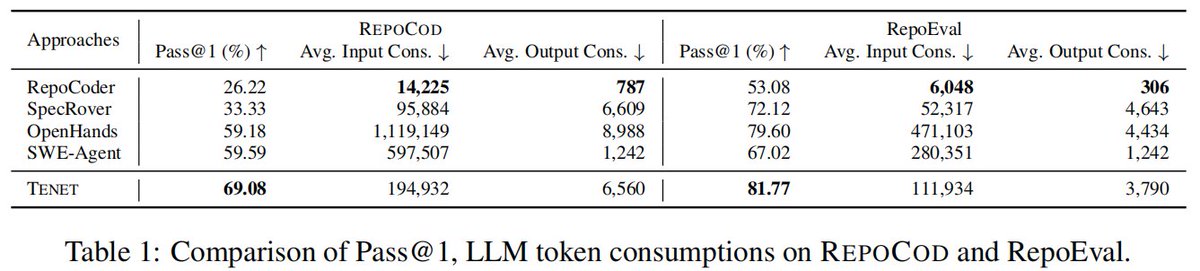

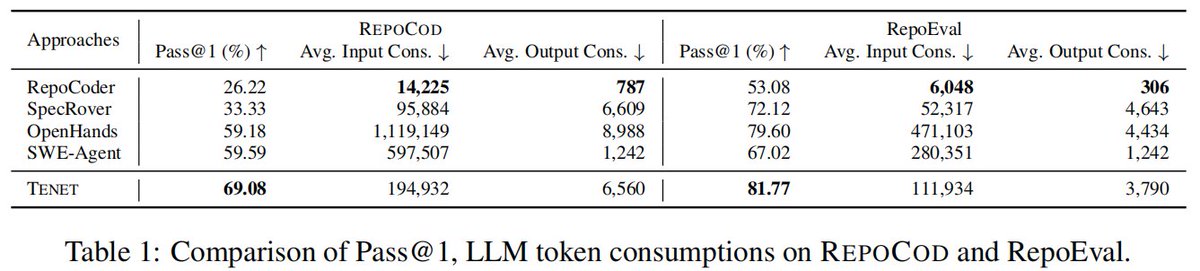

Curious about how tests can guide the entire code generation process, not just validate it afterward? 🚀 Check out TENET, our test-driven LLM agent for repository-level code generation! 🔗 Paper Link: arxiv.org/abs/2509.24148 #LLMs #Agents #LLM4Code #CodeGeneration

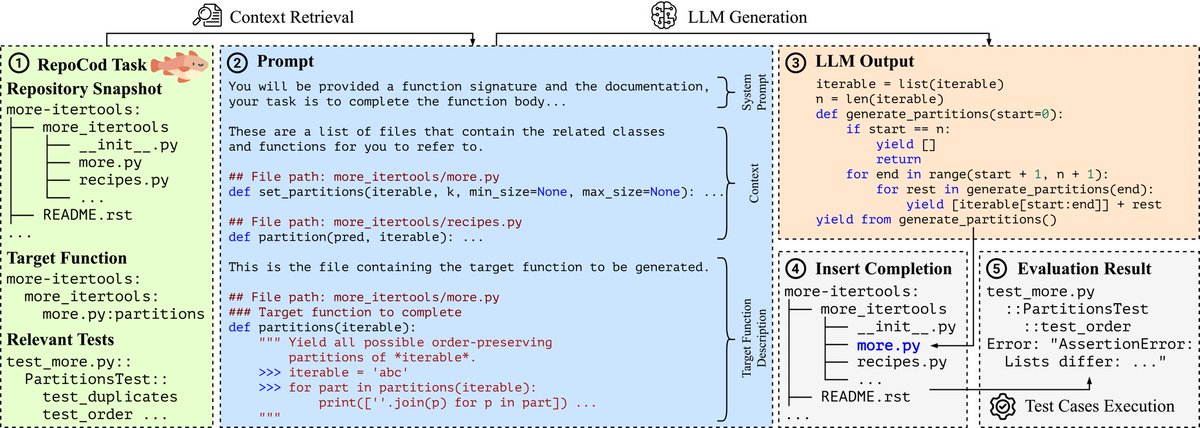

All LLMs including GPT-4o achieve < 30% pass@1 on real-world code completion. Check out 🐟REPOCOD, a real-world code generation benchmark:

- Repository-level context
- Whole function generation
- Validation with test cases
- 980 instances from 11 Python projects

#LLM #LLM4Code
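For readers unfamiliar with the pass@1 figure quoted in this post, here is a minimal sketch of the commonly used unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), where n samples are generated per task and c of them pass the tests. The post does not say how REPOCOD computes its numbers, so the function name `pass_at_k` and its parameters are illustrative assumptions, not the benchmark's own code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one task (hypothetical helper, not from REPOCOD).

    n: number of samples generated for the task
    c: number of those samples that pass all test cases
    k: number of samples the metric is allowed to draw
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples fail, every draw of k contains a passing one.
    if n - c < k:
        return 1.0
    # Probability that a random draw of k samples contains no passing sample,
    # subtracted from 1.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over tasks, given (n, c) pairs per task."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With k = 1 the estimator reduces to c / n, the fraction of generated samples that pass, so a benchmark-level pass@1 below 30% means that on average fewer than three in ten single attempts pass the tests.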

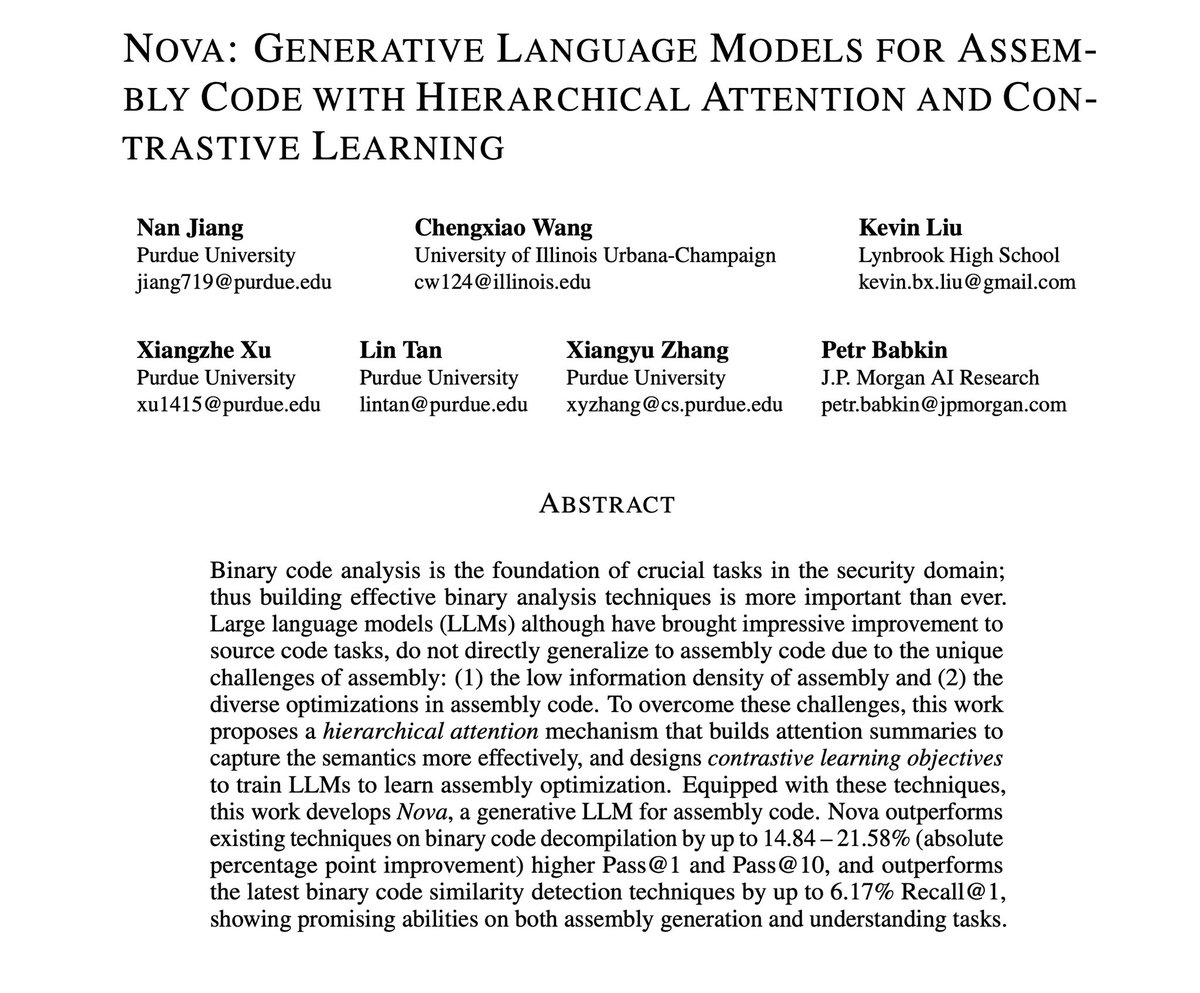

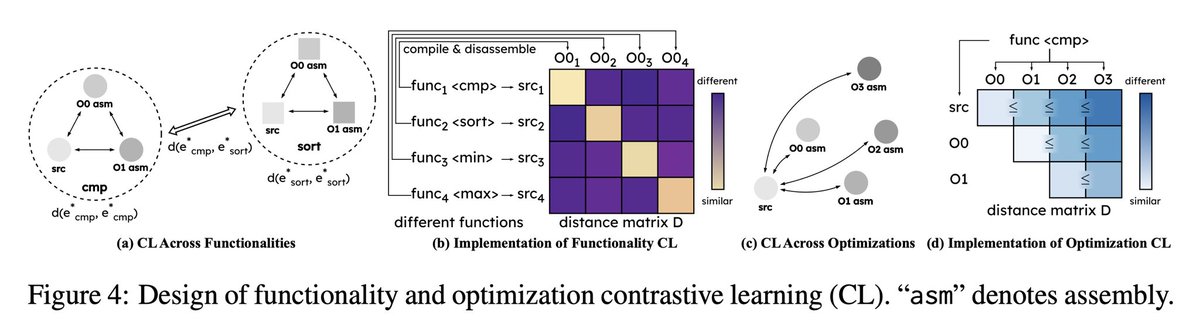

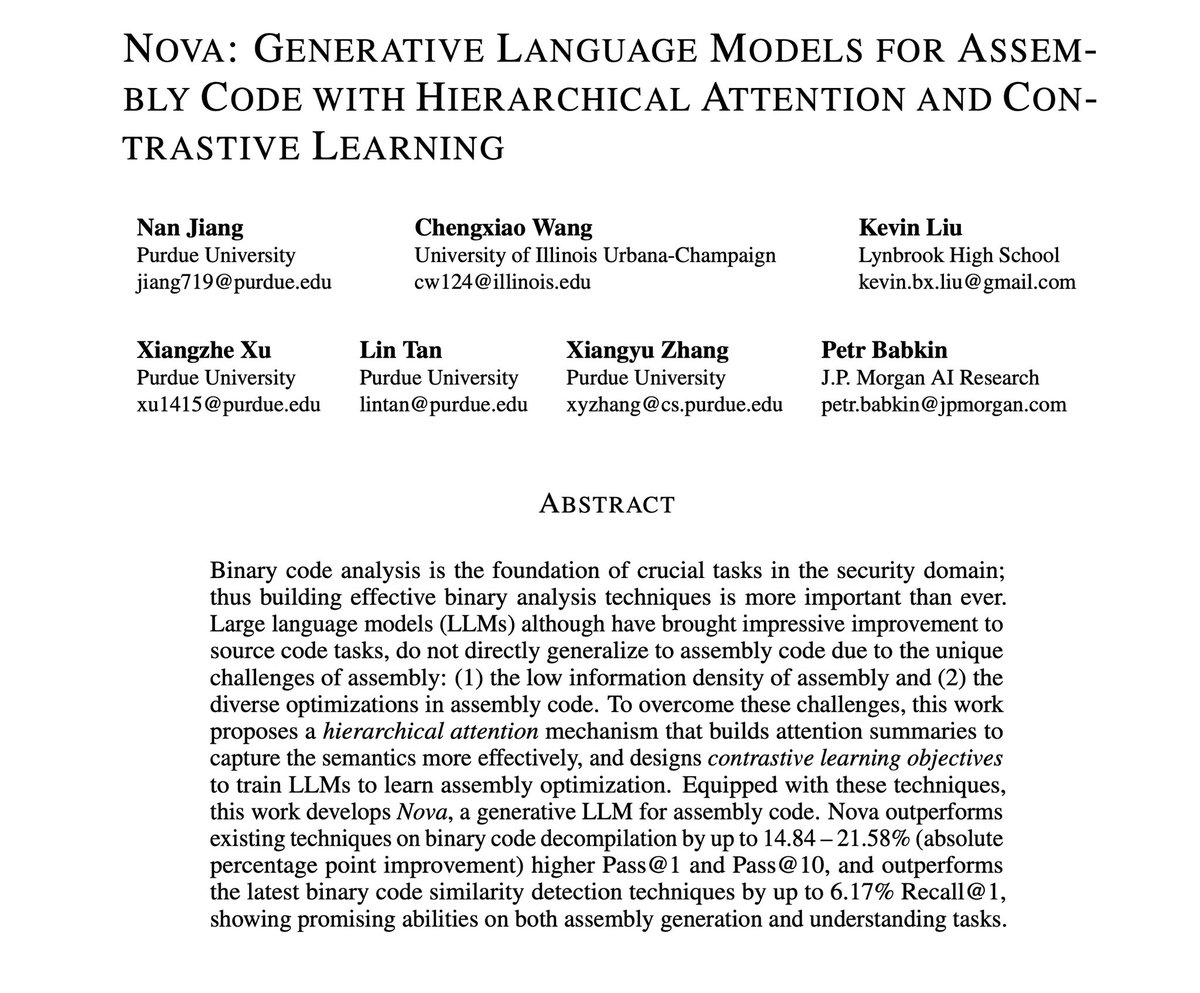

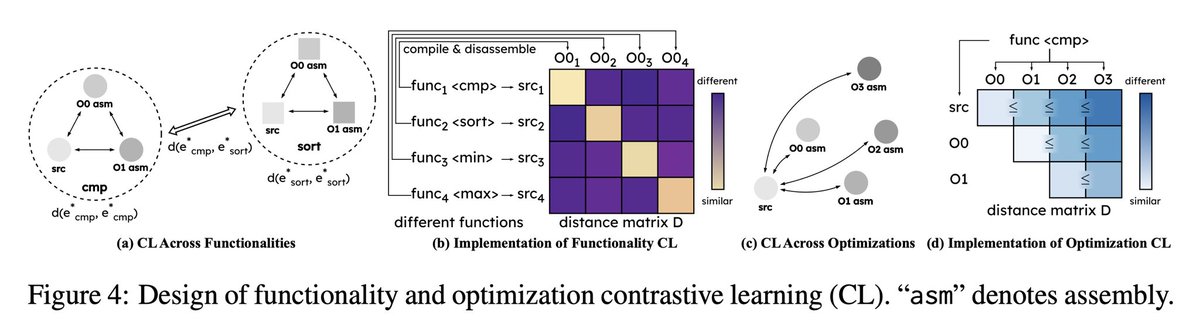

Introducing Nova, a series of foundation models for binary/assembly code. We have also released fine-tuned models for binary code decompilation. #LLM #LLM4Code #BinaryAnalysis #Security @NanJiang719, Chengxiao Wang, Kevin Liu, @XiangzheX, Xiangyu Zhang, Petr Babkin.

Wanna generate webpages automatically? Meet 🧇WAFFLE: multimodal #LLMs for generating HTML from UI images! It uses structure-aware attention & contrastive learning. arxiv.org/abs/2410.18362 #LLM4Code #MLLM #FrontendDev #WebDev @LiangShanchao @NanJiang719 Shangshu Qian

Two of our papers have been accepted to the #ACL2025 main conference! Try our code generation benchmark 🐟RepoCod (lt-asset.github.io/REPOCOD/) and website generation tool 🧇WAFFLE (github.com/lt-asset/Waffle)! #LLM4Code #MLLM #FrontendDev #WebDev #LLM #CodeGeneration #Security

Very excited to share the 1st @llm4code workshop has attracted 170+ registrations! If you’re at @ICSEconf and interested in LLMs, pls join us on April 20, we have 24 presentations and two keynotes on Code Llama (@b_roziere) and StarCoder2 (@LoubnaBenAllal1)! #icse24 #llm4code

🎉 Excited to announce that our paper "ReSym: Harnessing LLMs to Recover Variable and Data Structure Symbols from Stripped Binaries" has won the Distinguished Paper Award at #CCS2024! I'll present tomorrow afternoon in 9-4 session at @acm_ccs. Hope to see you! #LLM #LLM4Code

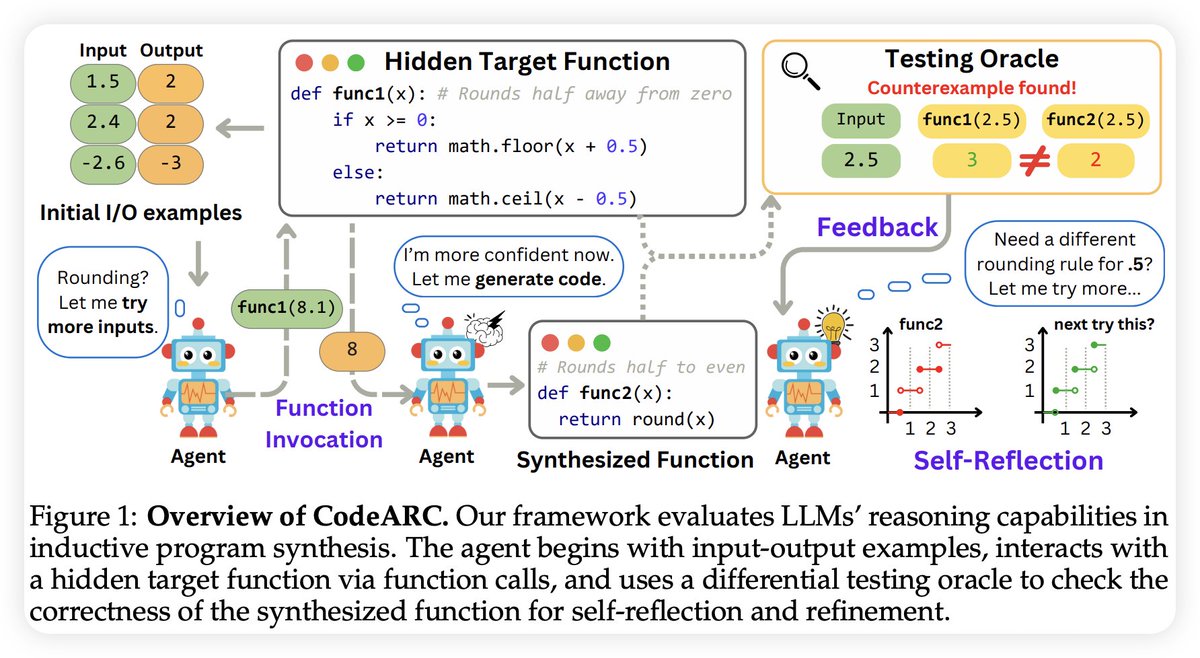

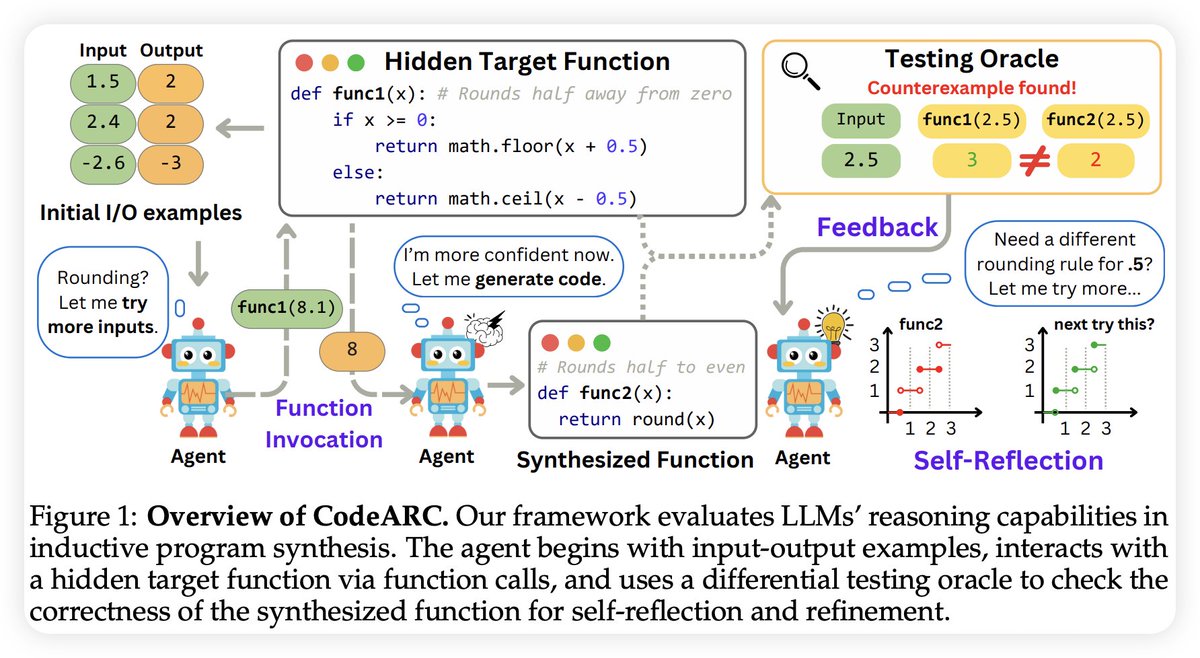

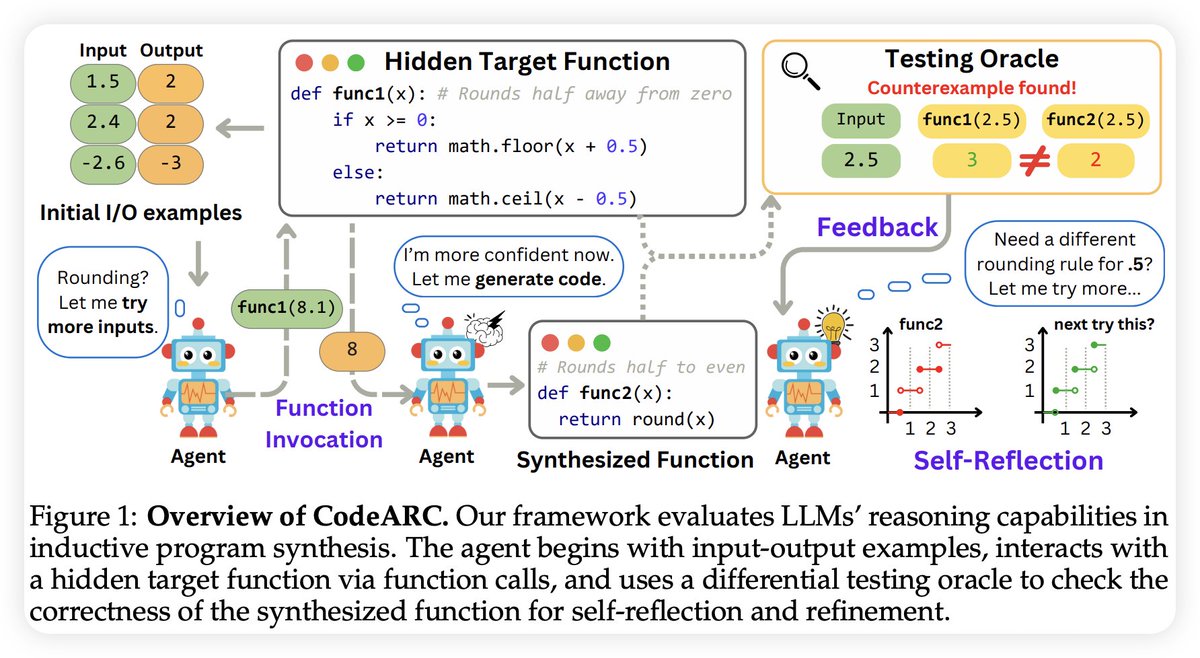

We introduce CodeARC, a new benchmark for evaluating LLMs’ inductive reasoning. Agents must synthesize functions from I/O examples—no natural language, just reasoning. 📄 arxiv.org/pdf/2503.23145 💻 github.com/Anjiang-Wei/Co… 🌐 anjiang-wei.github.io/CodeARC-Websit… #LLM #Reasoning #LLM4Code #ARC

Announcing the 3rd workshop on #LLM4Code, co-located with @ICSEconf 2026 in Rio de Janeiro, Brazil 🇧🇷! 🎯 We are calling for submissions:

- 🌟 Website: llm4code.github.io
- 🌟 Deadline: Oct 20, 2025
- 🌟 8-page research paper / 4-page position paper (including references)

Our…

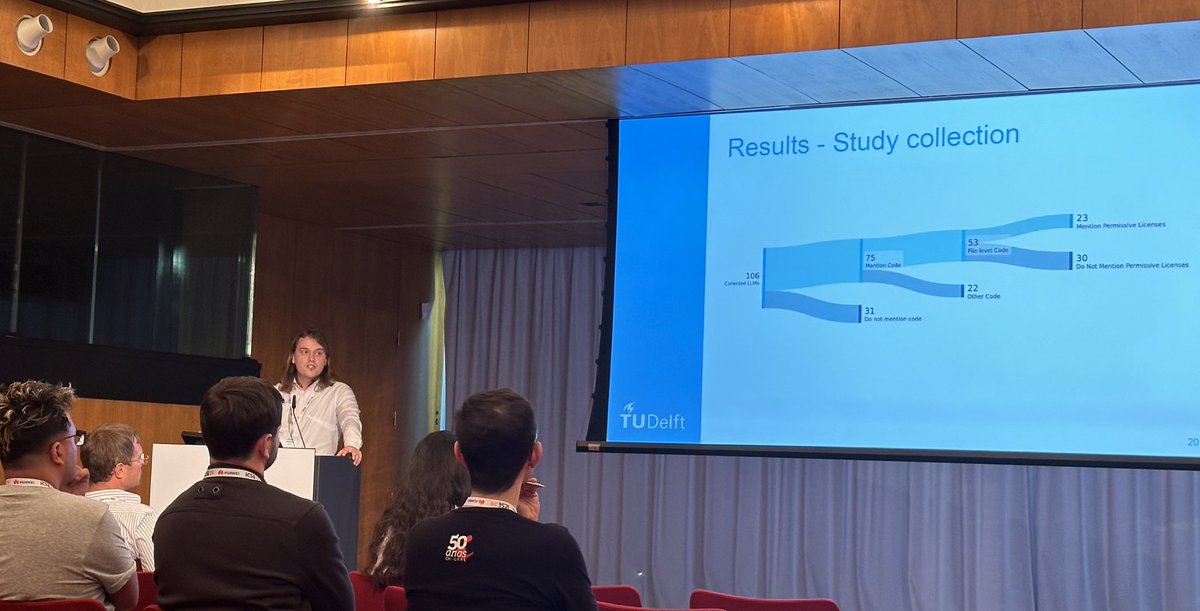

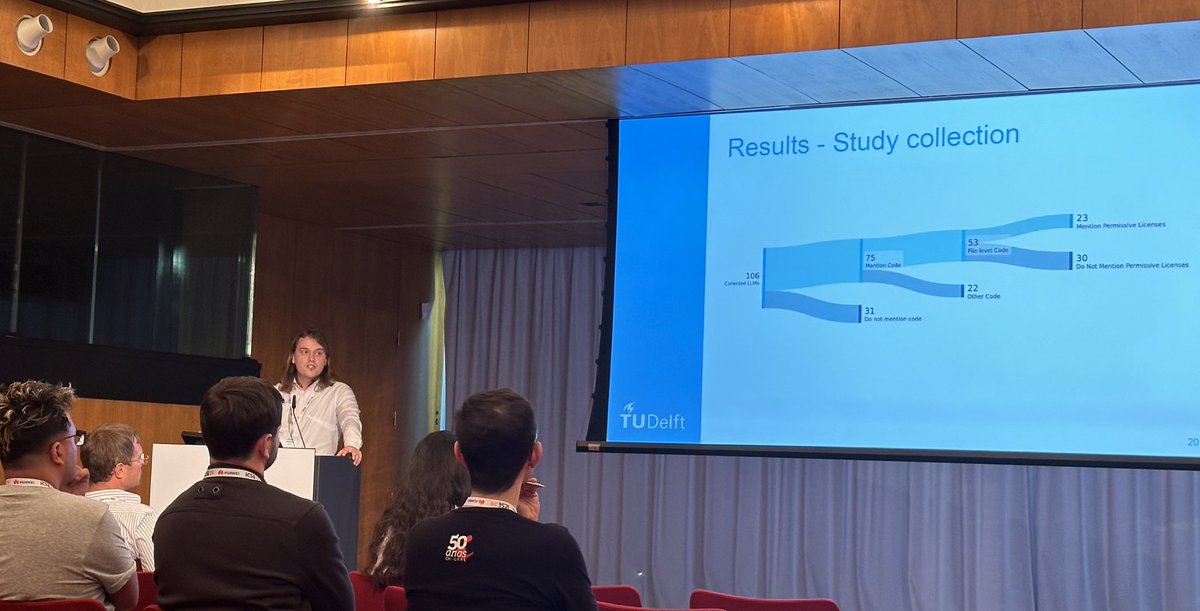

@katzy_jonathan presenting our #FORGE24 paper on “An Exploratory Investigation into Code License Infringements in Large Language Model Training Datasets”! Talk to him if U are interested in the data used for training a wide range of #llm4code and their implications! @ConfForge

The Memory Problem: Why #LLMs Sometimes Forget Your Conversation? share.google/FYEURfHfUi2eKp… #LLM4Code #genai @CurieuxExplorer @Eli_Krumova @enilev @ingliguori

Last week, I enjoyed a visit to Japan, where I participated in the FM+SE #Shonan seminar & gave a talk about our #LLM4Code studies in @AISE_TUDelft at the FM+SE Summit 2024 Tokyo! Big thanks to Prof. Ahmed Hassan, Jack Jiang & Kamei Yasutaka for organizing this incredible event

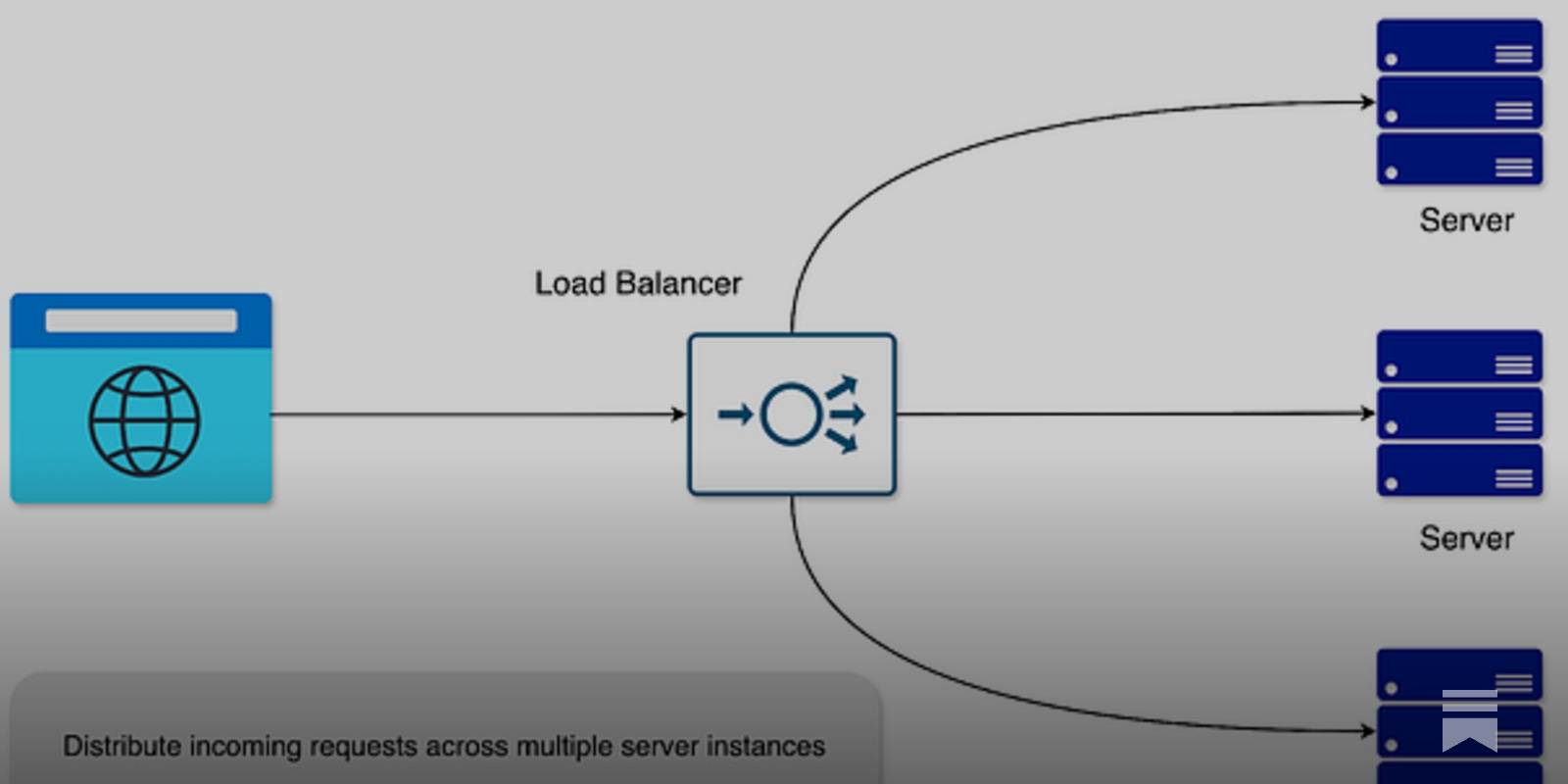

Access the latest LLMs from one place. With the Kognie API, you can connect to GPT-5, Claude 4.5, Nano Banana, Flux Kontext, and more all with a single API key. Build smarter. Scale faster. 👉 kognie.com/api #LLMs #LLM4Code #developersarmy #MLOps #DeveloperCommunity

GPT 4.5 ranks only 10th for realistic complex coding tasks from GitHub repositories lt-asset.github.io/REPOCOD/#lite

RepoCod tasks are:

- General code generation tasks, and
- Complex tasks: longest average canonical solution length (331.6 tokens)

x.com/lin0tan/status… #LLM4Code

Excited to be at #ICSE2025 in #Ottawa! Join me for our talk: "Between Lines of Code: Unraveling the Distinct Patterns of Machine and Human Programmers" on 📅 Thursday, May 1st, at 2pm in 📌 Room 207. Looking forward to discussing more about this fascinating topic! #LLM4Code #AI

📌 Modern code LLMs rely on subword tokenization (like BPE). But these tokenizers have no idea about code grammar; they just merge characters by frequency. So what happens when subword tokenization ignores grammar? 🤔 🤗 Our Paper: huggingface.co/papers/2510.14… #LLM4Code (1/n)

🚨 Preprint Alert: Empirical study of the perception and usage of LLMs in an academic software engineering project. Accepted at #LLM4Code workshop co-located with #ICSE 2024. Provides insights into the role of LLMs for SE in an academic setting. arxiv.org/abs/2401.16186

AI can ship your MVP. But it can’t design your architecture. Fundamentals are the new 10x skill. 💥 #LLMs #LLM4Code

linkedin.com/pulse/challeng… My musings on LLM development #LLMs #LLM4Code #claudecode #codex #geminicli

Anthropic's Skills for Claude, which are conceptually very simple, may become a bigger deal than MCP, whose high token usage is its most significant limitation. simonwillison.net/2025/Oct/16/cl… #Claude #MCP #LLM4Code

To all my fellow devs and nerds. If you were to choose a single model to subscribe to for complex dev related work. Which one would you pick? I am torn between Anthropic and Grok4. #LLM4Code #DeveloperCommunity

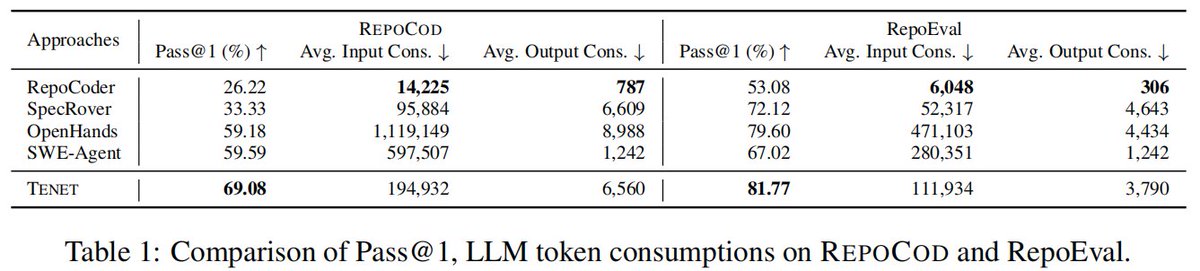

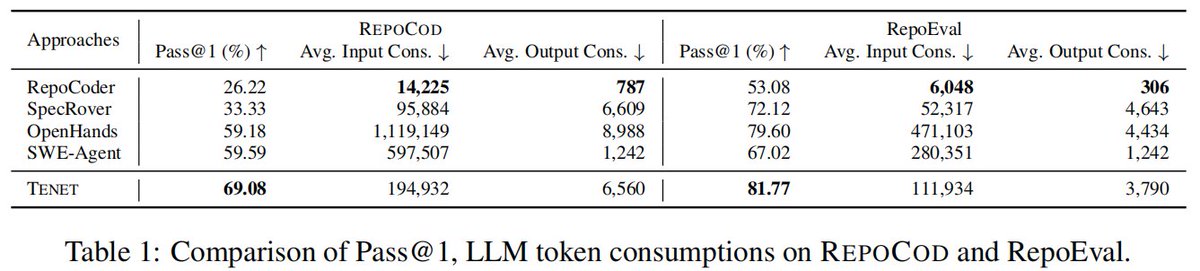

In the Agentic AI era, tests are the new specs! 🚀Check out our TENET work on code generation, Pass@1: 69.08% (RepoCod), 81.77% (RepoEval), with +9.5% & +2.2% over top agentic baselines. Paper: arxiv.org/abs/2509.24148 #LLMs #Agents #LLM4Code #CodeGeneration @PurdueCS @cerias

If you missed the fantastic keynotes on #CodeLlama and #Starcoder2 from their initiators and core contributors @b_roziere and @LoubnaBenAllal1, you can still check out the slides tinyurl.com/llm4code24 Hope to see your submissions to the 2nd @LLM4Code workshop. #LLM4code #llm

We are welcoming submissions to #LLM4Code 2025 (deadline Nov 18) colocated with @ICSEconf! llm4code.github.io #LLM

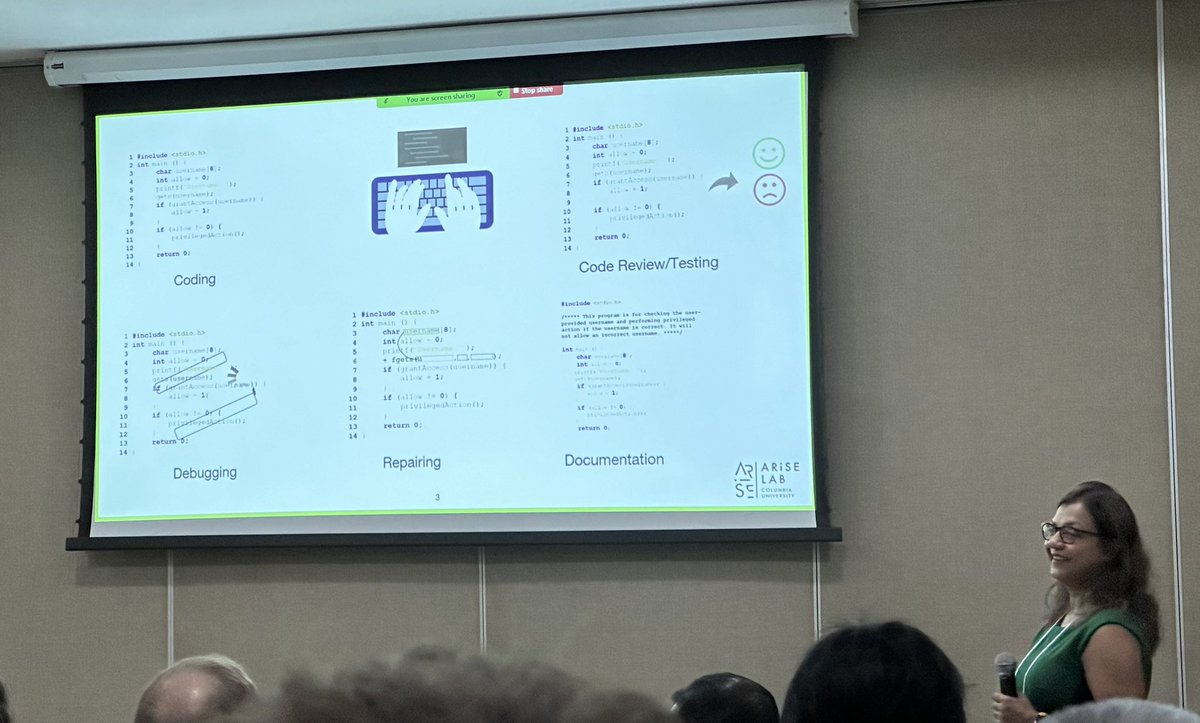

@aalkaswan1 presenting our recent @ICSEconf work with @avandeursen on “Traces of Memorisation in Large Language Models for Code” in the final ICSE session on language models and code generation! @AISE_TUDelft @serg_delft #llm #llm4code #ICSE2024

Our #LLM4Code at #icse24 paper: Semantically Aligned Question and Code Generation for Automated Insight Generation leverages the semantic knowledge of LLMs to generate targeted and insightful questions about data and the corresponding code to answer those questions. (4/7)

Very insightful keynote by @baishakhir on semantic awareness for code models at @AIwareConf ! “Improve trust, integrate developer feedback, and increase explainability.” #AIWare #FSE2024 #LLM4Code
