#metatextgrad search results

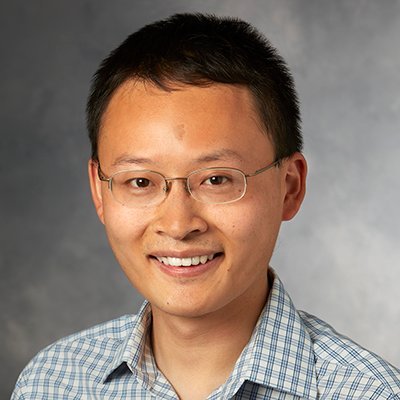

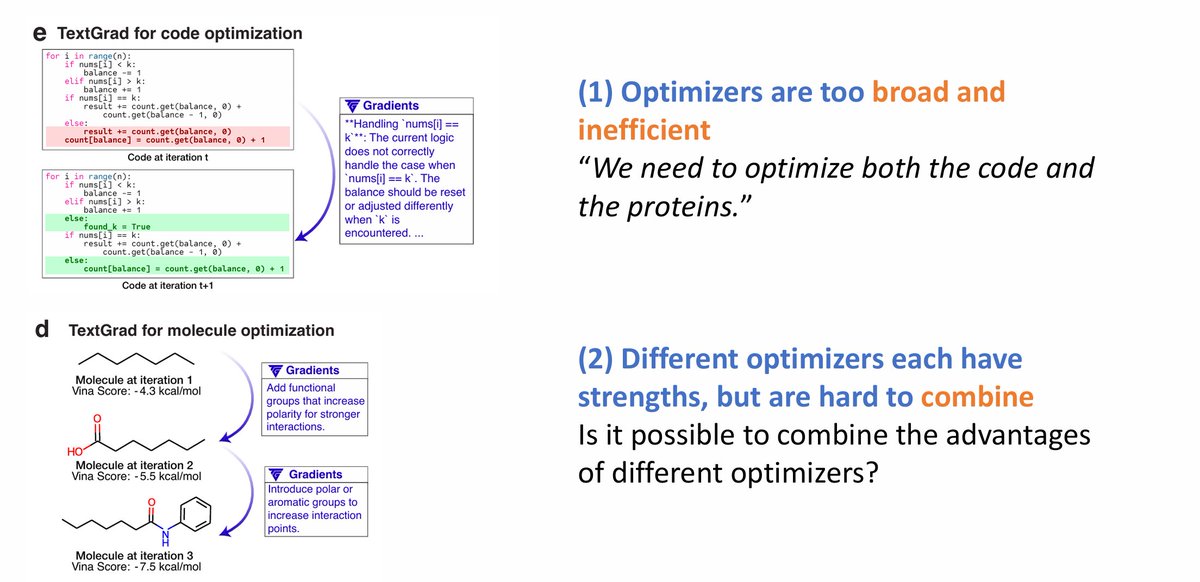

Introducing #metaTextGrad🌟: a meta-optimization framework built on #TextGrad, designed to improve existing LLM optimizers by aligning them more closely with specific tasks. 📰 NeurIPS 2025 paper: openreview.net/pdf?id=10s01Yr… 🧑💻 Code: github.com/zou-group/meta… 📚 Slides:…

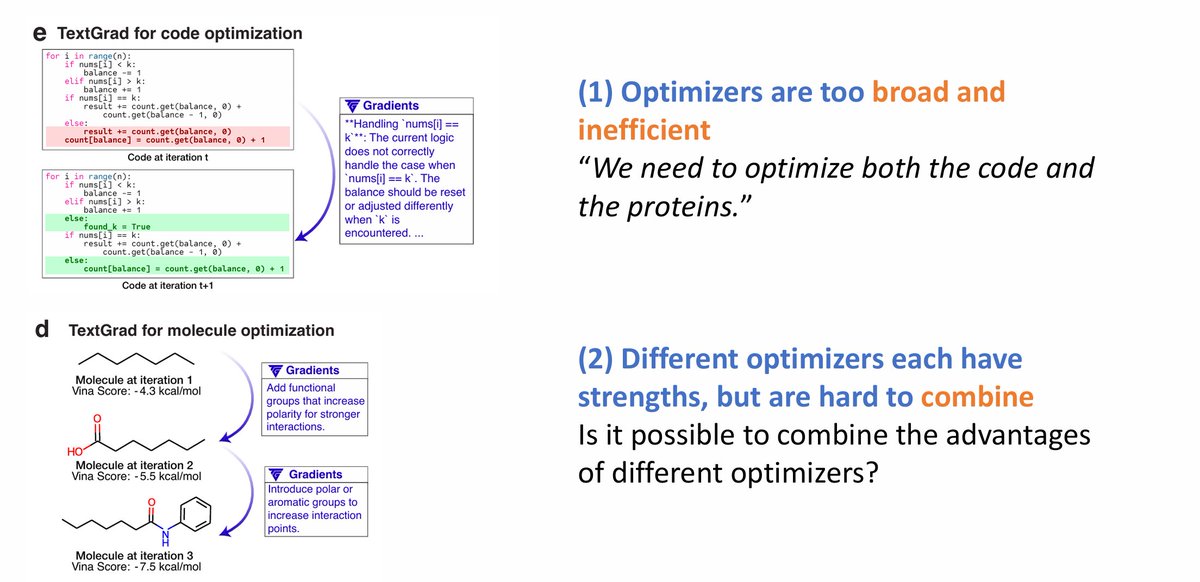

2/ Existing LM optimizers are broad and generic. #metaTextGrad automatically adapts them to specific tasks, greatly improving performance and efficiency. 📰 #NeurIPS2025 paper: openreview.net/pdf?id=10s01Yr… 🧑💻 Code: github.com/zou-group/meta… 📖 Slides: neurips.cc/media/neurips-…

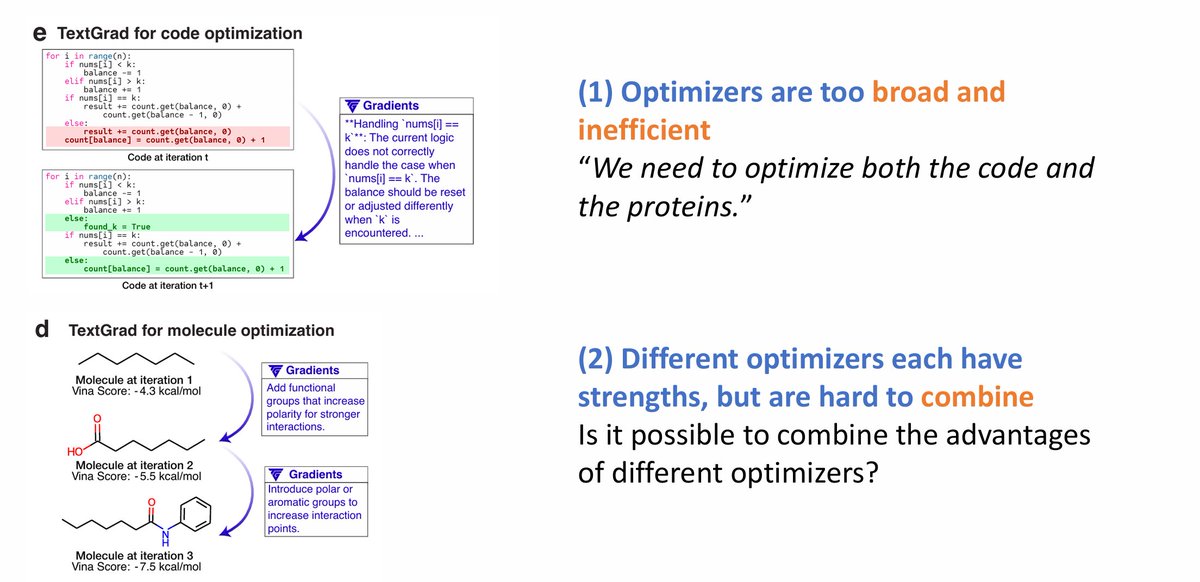

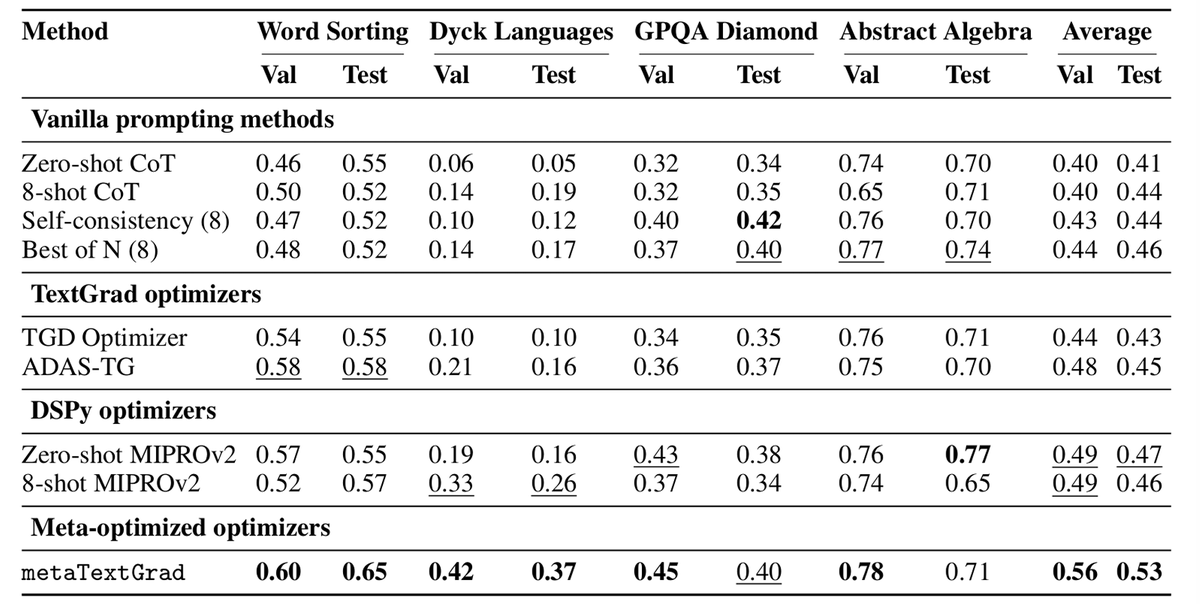

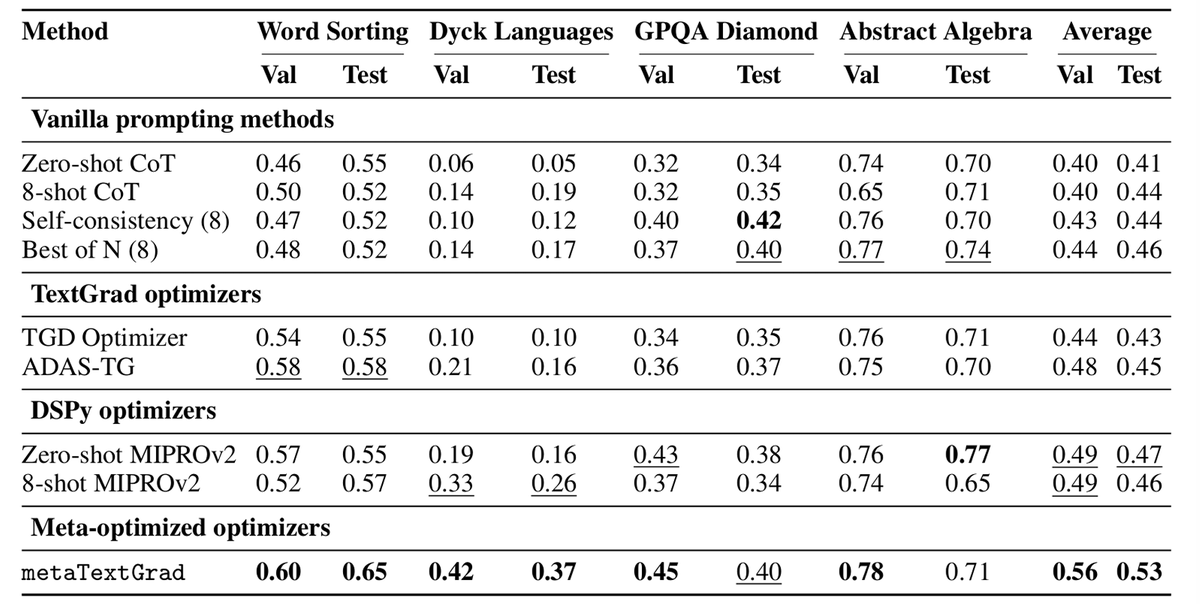

(6/8) Across various reasoning datasets, #metaTextGrad shows a marked improvement in performance over baselines.

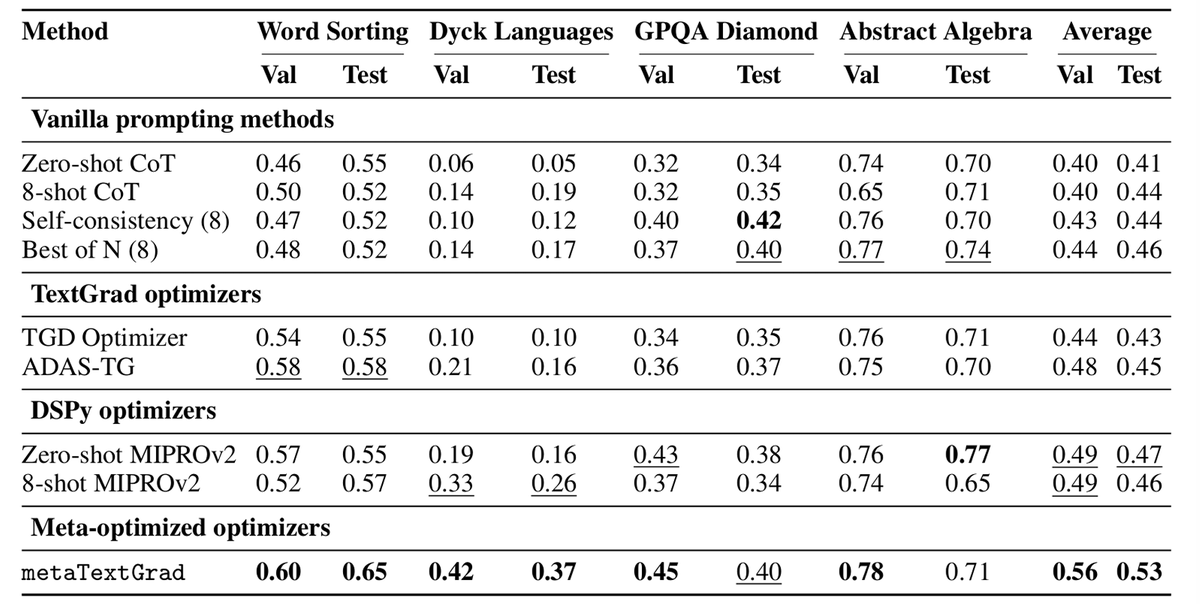

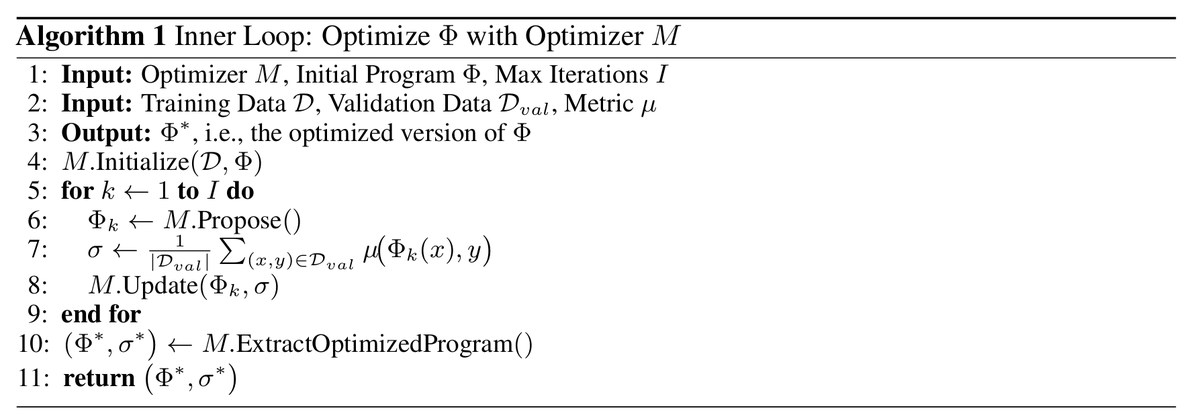

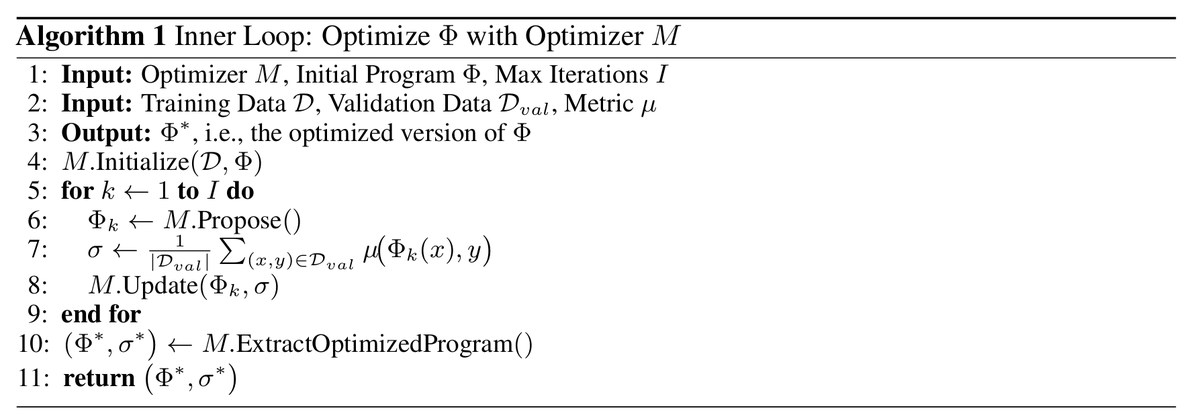

(3/8) The optimization in #metaTextGrad is divided into an inner loop and an outer loop. In the inner loop, an LLM optimizer optimizes programs, and its optimization results indicate the quality of the optimizer and how well it aligns with the task.
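The two-level structure described above can be illustrated with a toy sketch. This is not the metaTextGrad or TextGrad API: the "program" is just a number, the "optimizer" is a simple hill-climber with a step size, and `task_score`, `make_optimizer`, `inner_loop`, and `outer_loop` are hypothetical names invented for this example. The post only describes the inner loop; the outer loop shown here (picking the optimizer whose inner-loop result scores best) is an assumption about how that score might be used.

```python
from typing import Callable, List, Tuple

Program = float                         # toy stand-in for a prompt or LLM program
Optimizer = Callable[[Program], Program]


def task_score(program: Program) -> float:
    """Hypothetical task metric: higher is better, maximal at program == 3.0."""
    return -(program - 3.0) ** 2


def make_optimizer(step: float) -> Optimizer:
    """Hypothetical optimizer family, parameterized by a step size.

    In the real setting the parameter would be something like the
    optimizer's own prompt; a number keeps the sketch runnable.
    """
    def optimize(program: Program) -> Program:
        # Propose a move up or down and keep whichever candidate scores best.
        candidates = [program, program + step, program - step]
        return max(candidates, key=task_score)
    return optimize


def inner_loop(optimizer: Optimizer, program: Program, steps: int = 5) -> float:
    """Inner loop: the optimizer improves a program for a few steps.

    The final task score is used as a signal of the optimizer's quality.
    """
    for _ in range(steps):
        program = optimizer(program)
    return task_score(program)


def outer_loop(step_sizes: List[float], init_program: Program = 0.0) -> Tuple[float, float]:
    """Outer loop (assumed): keep the optimizer whose inner-loop score is best."""
    scored = [(inner_loop(make_optimizer(s), init_program), s) for s in step_sizes]
    best_score, best_step = max(scored)
    return best_step, best_score


if __name__ == "__main__":
    best_step, best_score = outer_loop([0.1, 1.0, 5.0])
    print(f"best step size: {best_step}, inner-loop score: {best_score}")
```

In this toy setup the smallest step size is too slow to reach the optimum within the inner-loop budget and the largest overshoots it, so the outer loop selects the middle one. That is the sense in which inner-loop results measure how well an optimizer fits a task.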
