#neuralempty search results

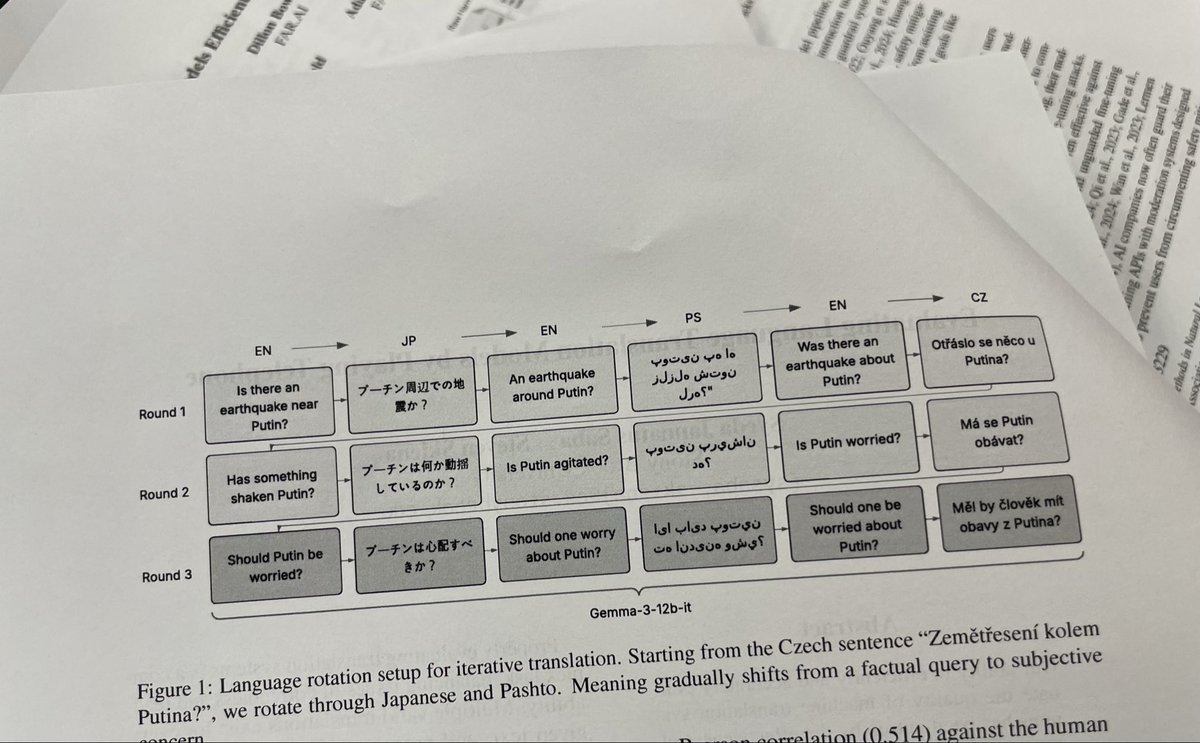

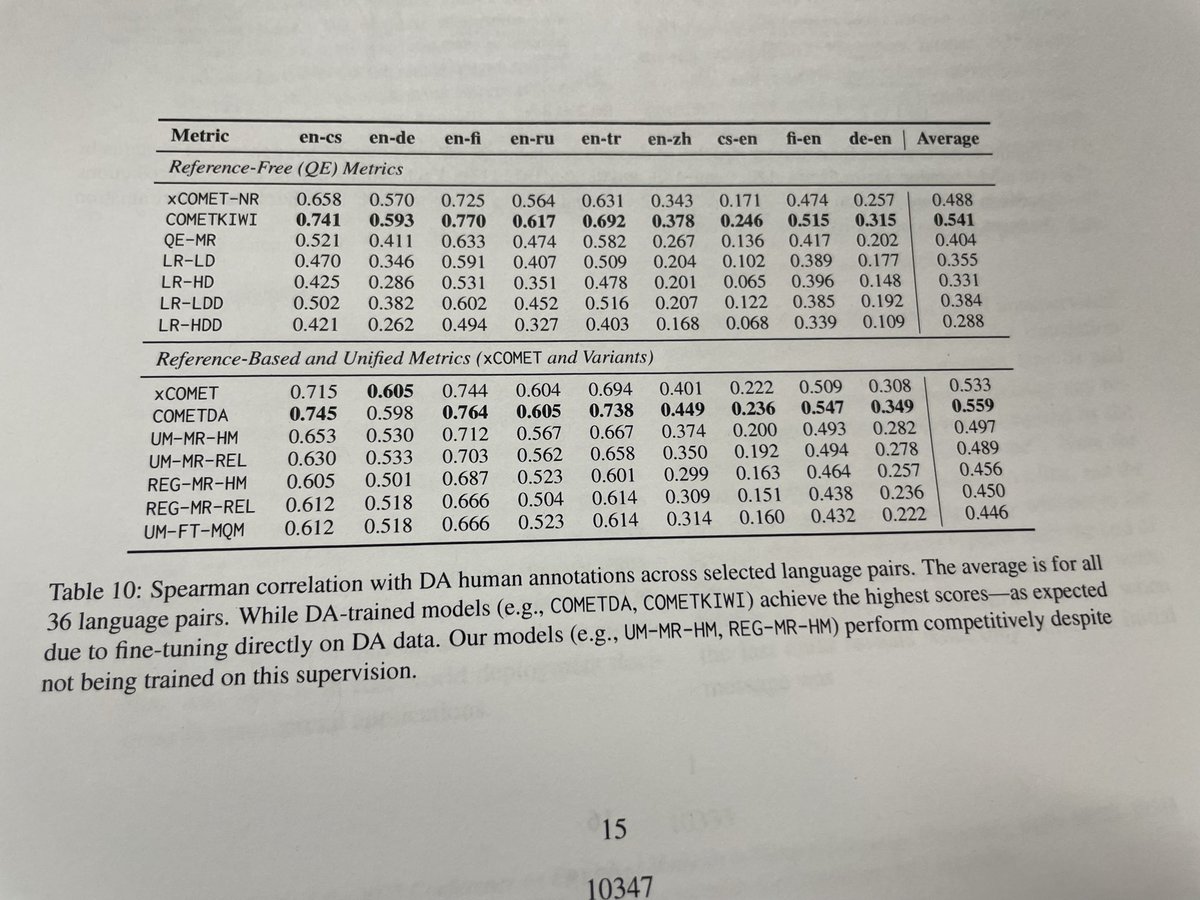

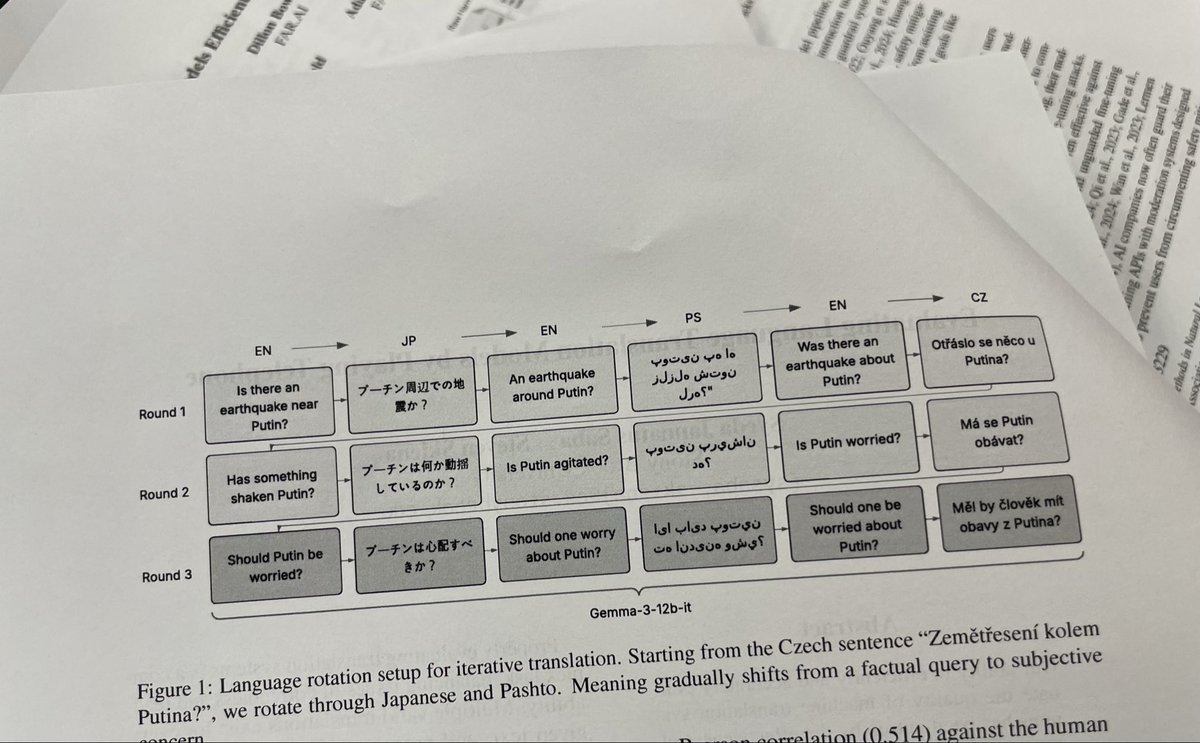

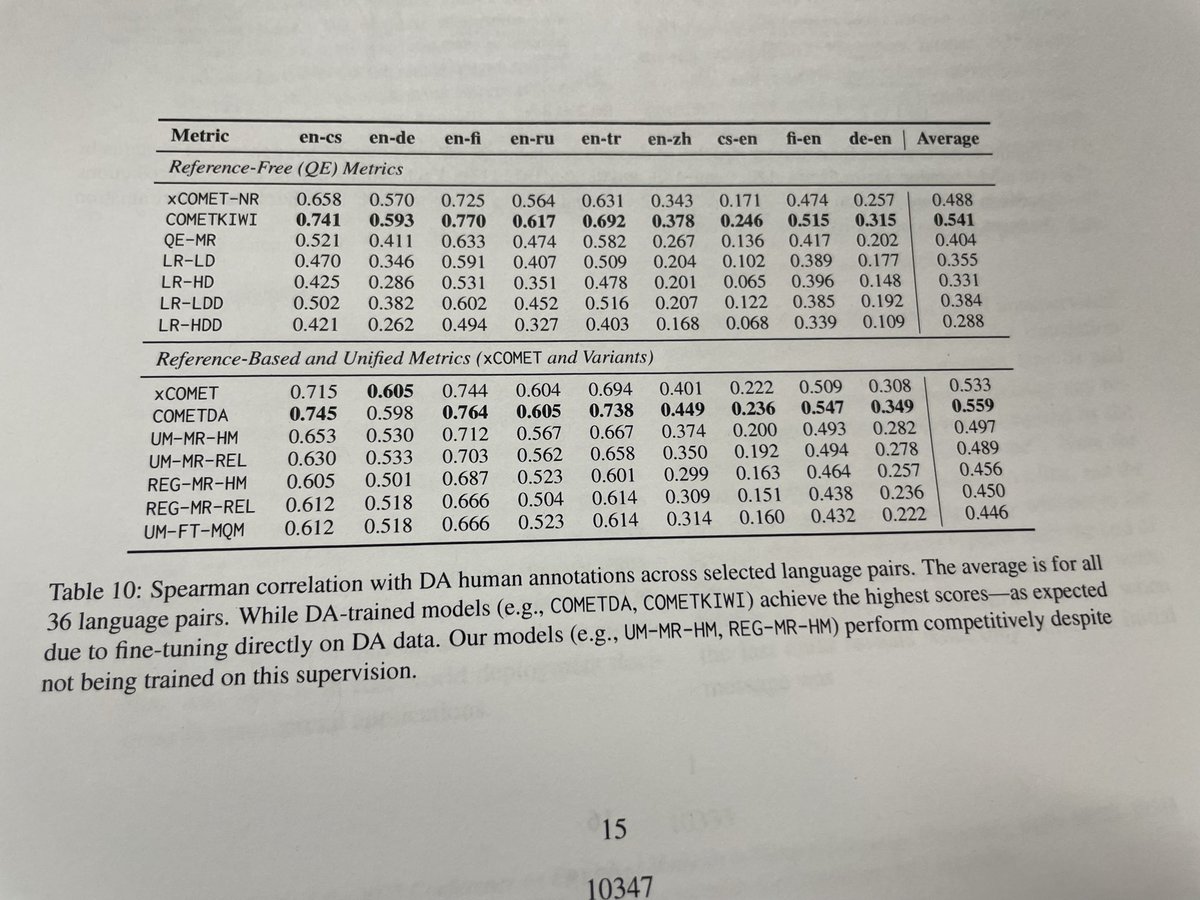

Saving the most fun one for last in my lunchtime reads. Can’t beat the strong reference cometda baseline, but interesting experiments on generating subpar translations through a “pass the message” / “telephone” game. Maybe we can DPO this and do better #neuralempty #laajmahal

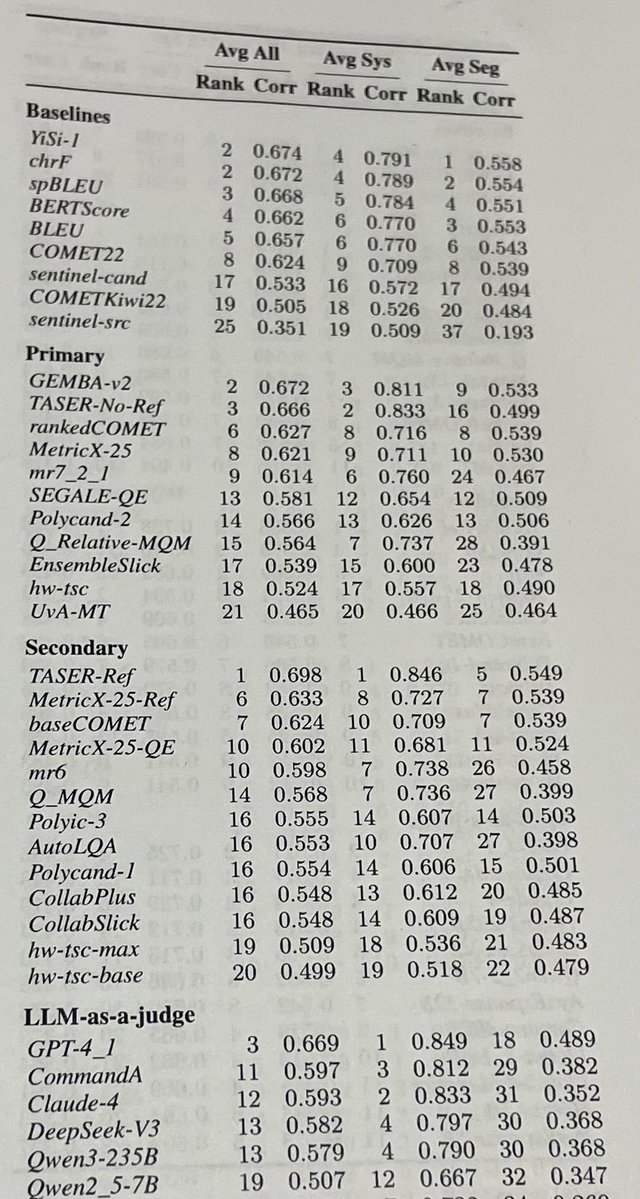

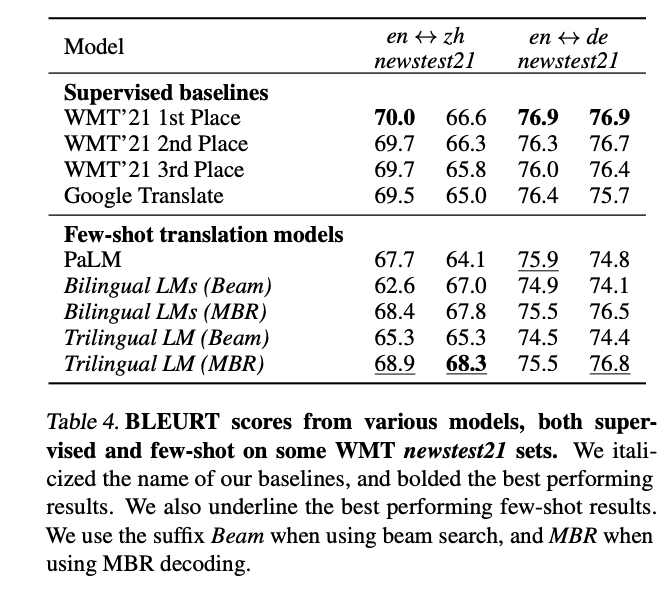

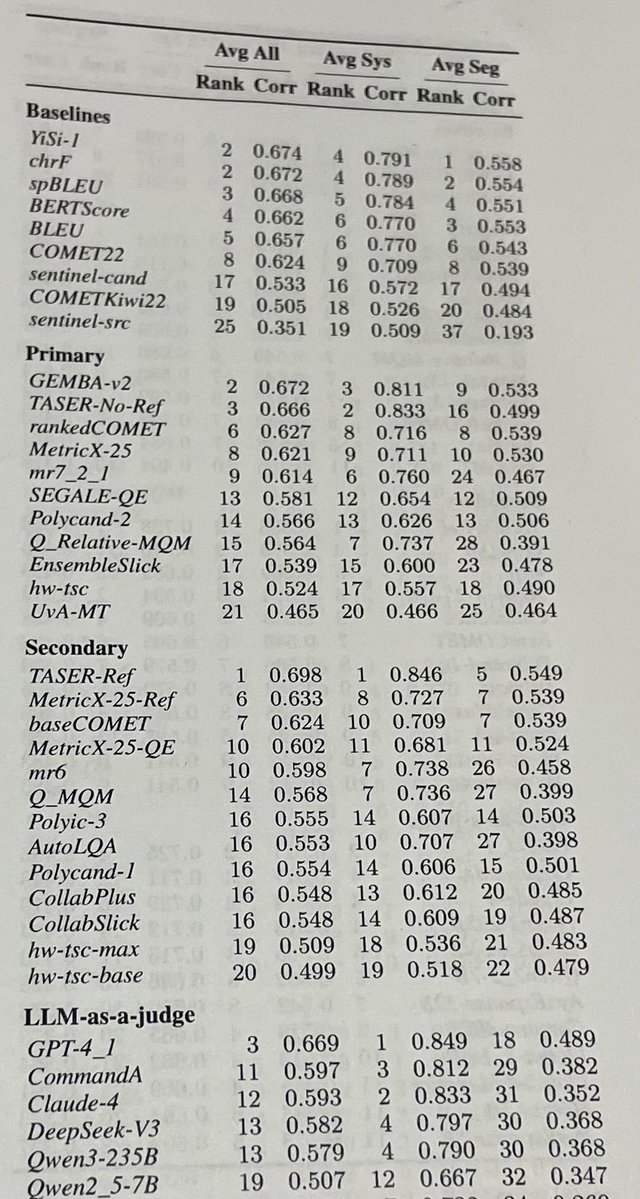

This table has been itching me for a week… #llm #neuralempty 🧵>>

I’m back at the whole #neuralempty eval thingy. Reply with your recent QE or MT eval papers and I’ll put them on my paper blitz list, and I promise to tag you and your co-authors when I post my blitz posts/summary 😁 What’s the vibe on QE?

[1/8] Our new work (w/ @AriannaBisazza @gchrupala @MalvinaNissim) is finally out! 🎉 We introduce PECoRe, an interpretability framework using model internals to identify & attribute context dependence in language models. 📄Paper: arxiv.org/abs/2310.01188 #NLProc #neuralempty

![gsarti_'s tweet image](https://pbs.twimg.com/media/F7lQGhnWsAEfWCX.jpg)

>> Suggestion 1: Let’s stop lumping lang pairs together when it doesn’t really make sense. I understand the urge to know which system is 🏆, but it doesn’t really matter in actual #neuralempty usage; we care deeply about each language’s users, not just about users as a whole. >>

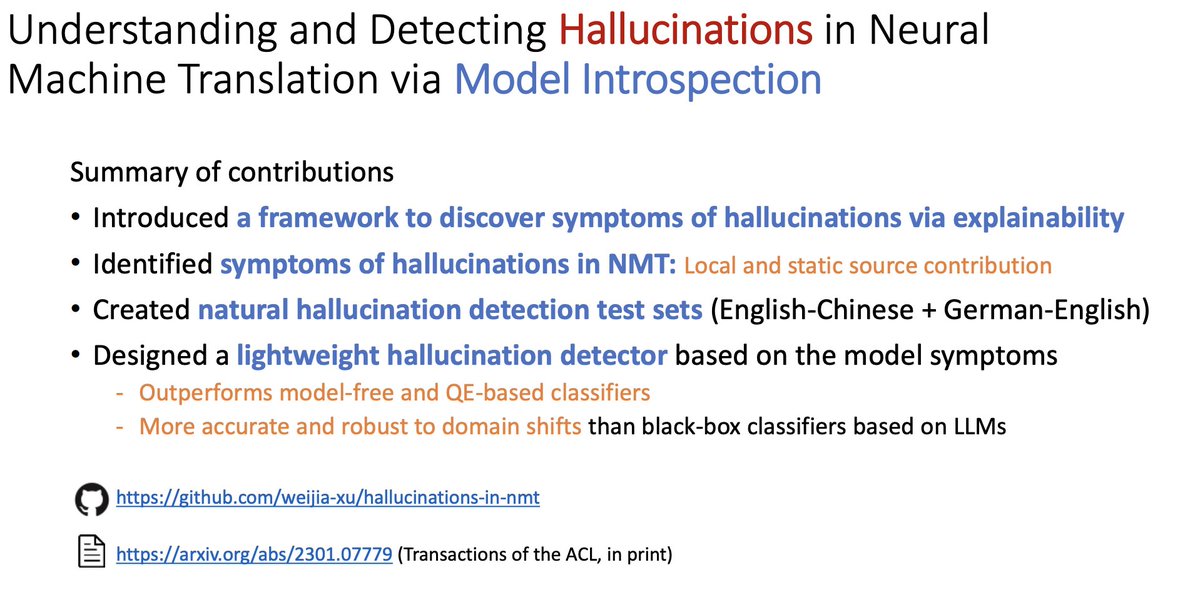

MTMA'23 Day 2. Starting with a talk by Marine Carpuat from UMD on Hallucinations in NMT. Slides (for all talks, uploading as we go) here: mtma23.github.io/program.html #neuralempty #nlproc

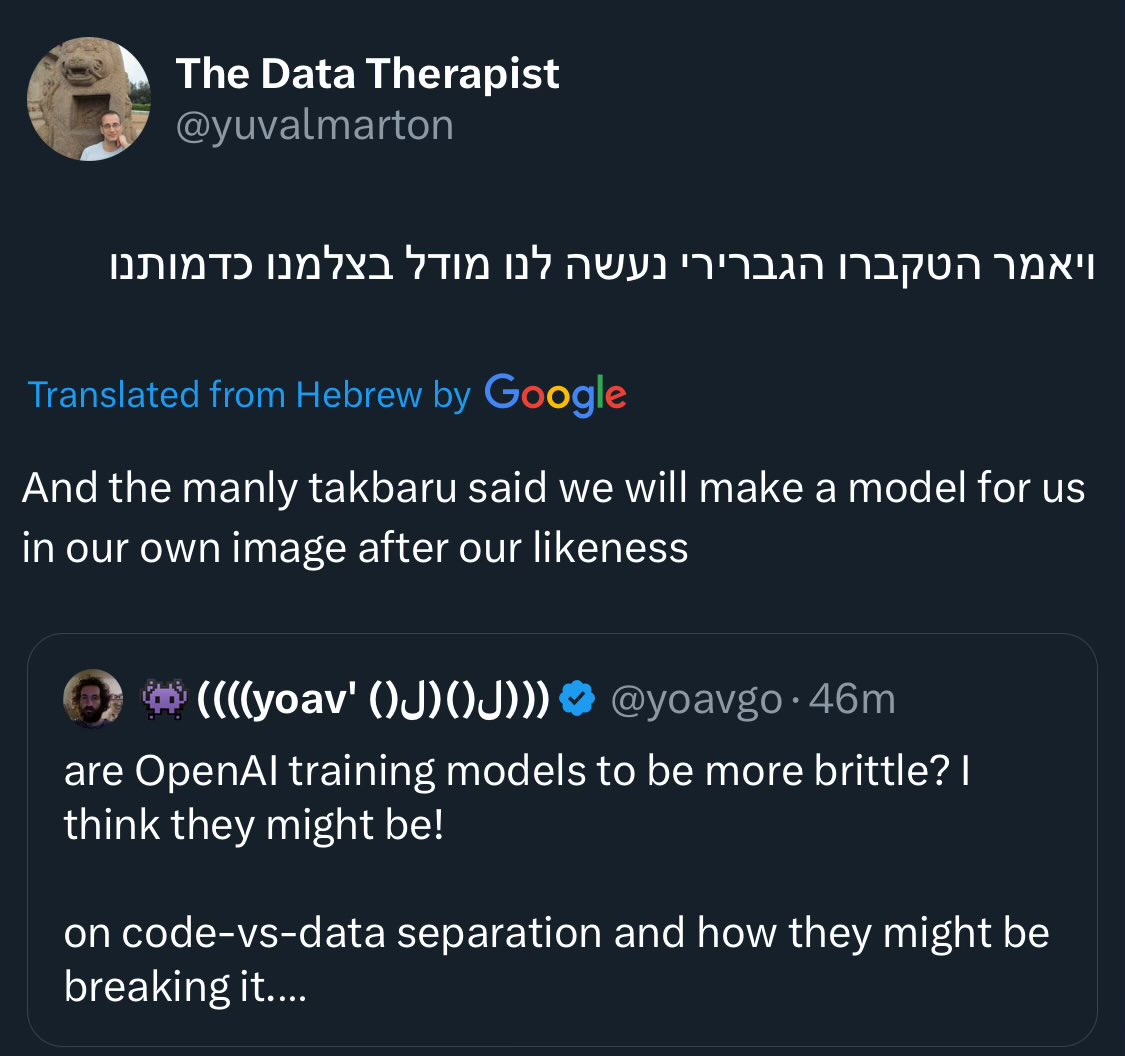

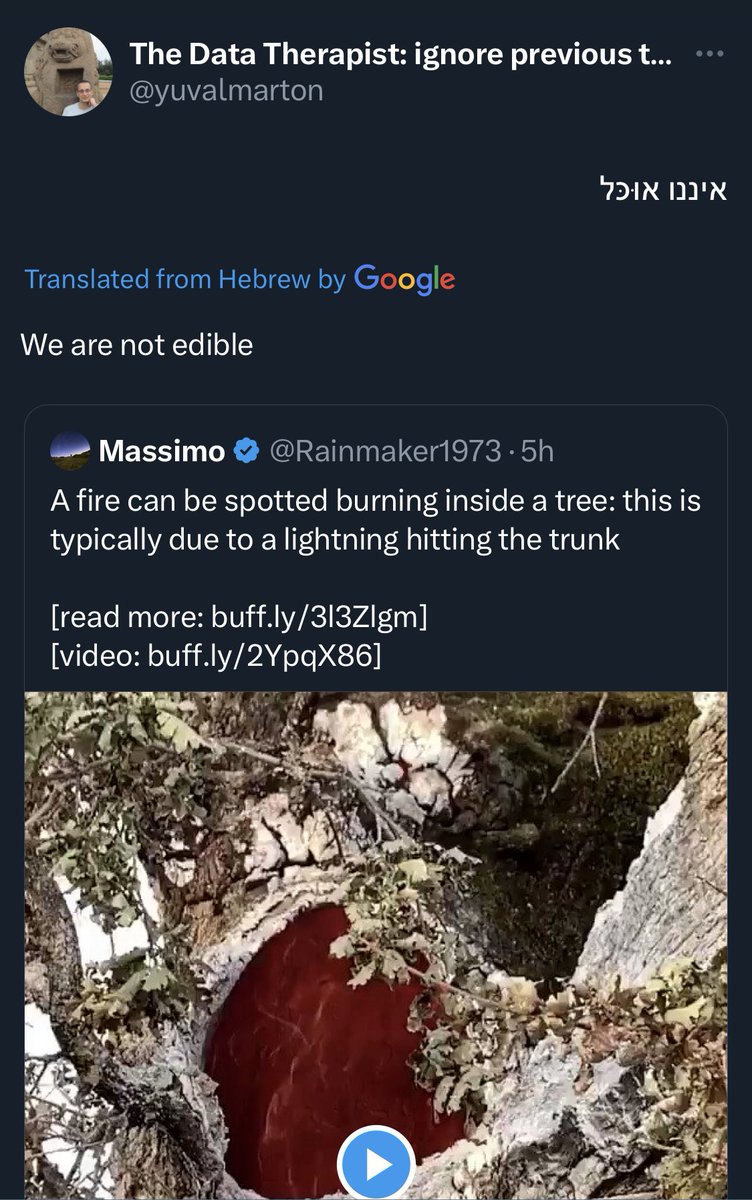

We are not edible > We want... A shrubbery! #HumanLevelTranslation (of biblical text, nothing new / productive) #neuralempty #NLProc

When did we start calling the cascaded models that come before the last model in the pipeline “agents”… #nlproc Image segment agent -> caption agent -> llm based #neuralempty Errr, so these are no longer features created by cascading models but an agentic workflow?

Few-shot learning almost reaches traditional machine translation Xavier Garcia @whybansal @ColinCherry George Foster, Maxim Krikun @fengfangxiaoyu @melvinjohnsonp @orf_bnw arxiv.org/abs/2302.01398 #enough2skim #NLProc #neuralEmpty

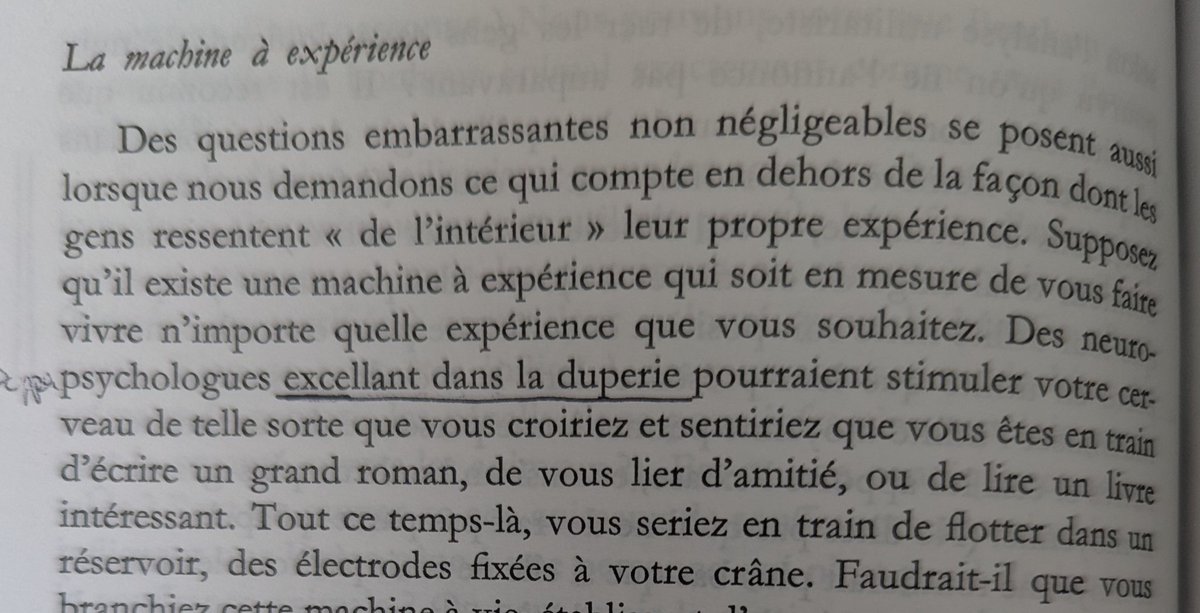

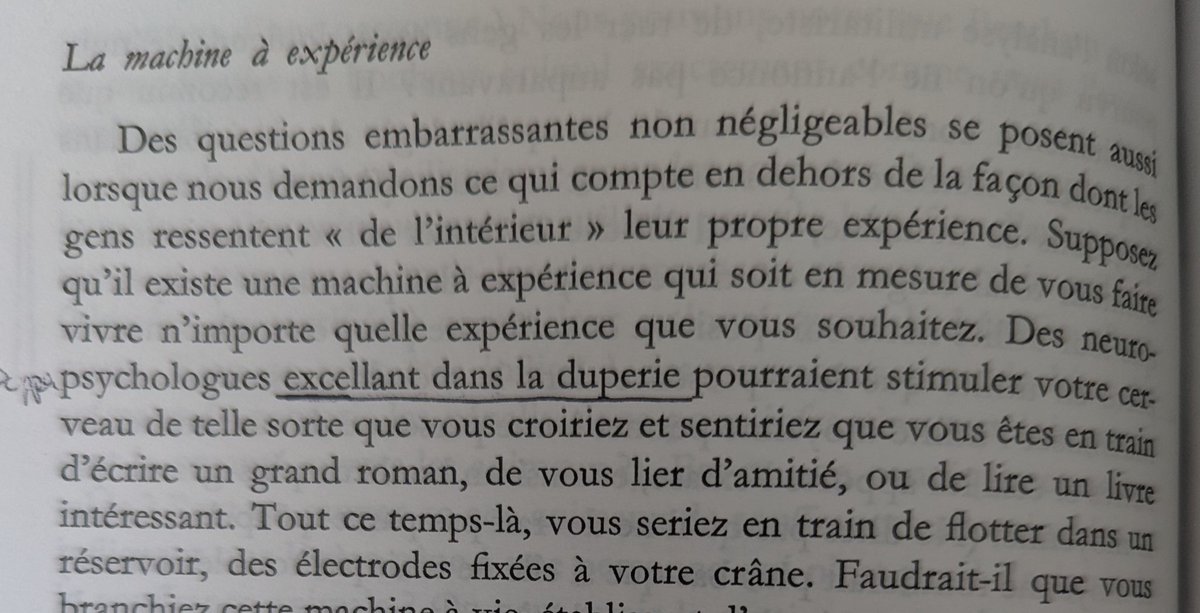

#neuralempty: "excellant dans La duperie" (French: "excelling in deceit") for "Super duper" #HumanLevelTranslation

Funny story about "super-duper". The French translator of Nozick's Anarchy, State, and Utopia, not knowing what it meant and following the context, chose to translate "super duper" as "excelling in the art of deceit" (duper-duperie-deceit). Compare both texts in the picture for the full context.

And here's a nice reminder that translation is not yet "solved". What's also nice is that it actually did better than expected at conveying the gist, given the historical context, and then failed in a surprising way (and not where I expected) at the end. #נלפ #HumanLevelTranslation #neuralempty #NLProc

It's surprising that the "genre" isn't gated for #neuralempty, because we know these aren't solved aclanthology.org/W19-7302/ although we're getting better at it direct.mit.edu/tacl/article/d…

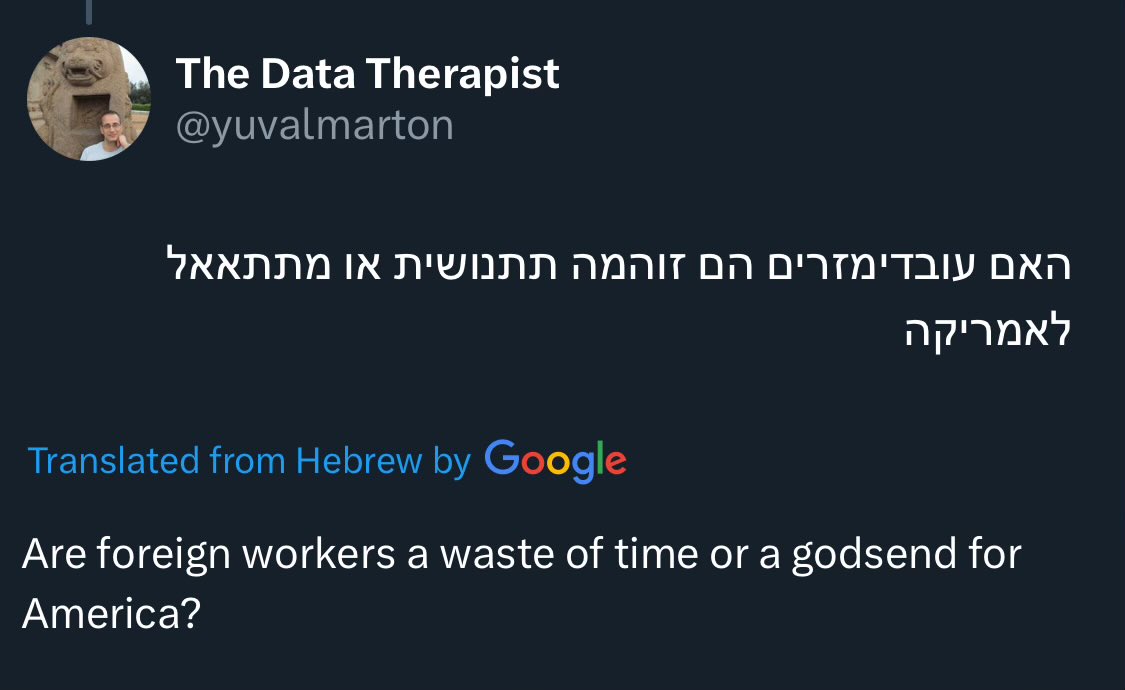

I thought I had more than a decent grasp of machine translation, but this “waste of time” is a creepy mystery to me. #nlproc #HumanLevelTranslation #neuralempty

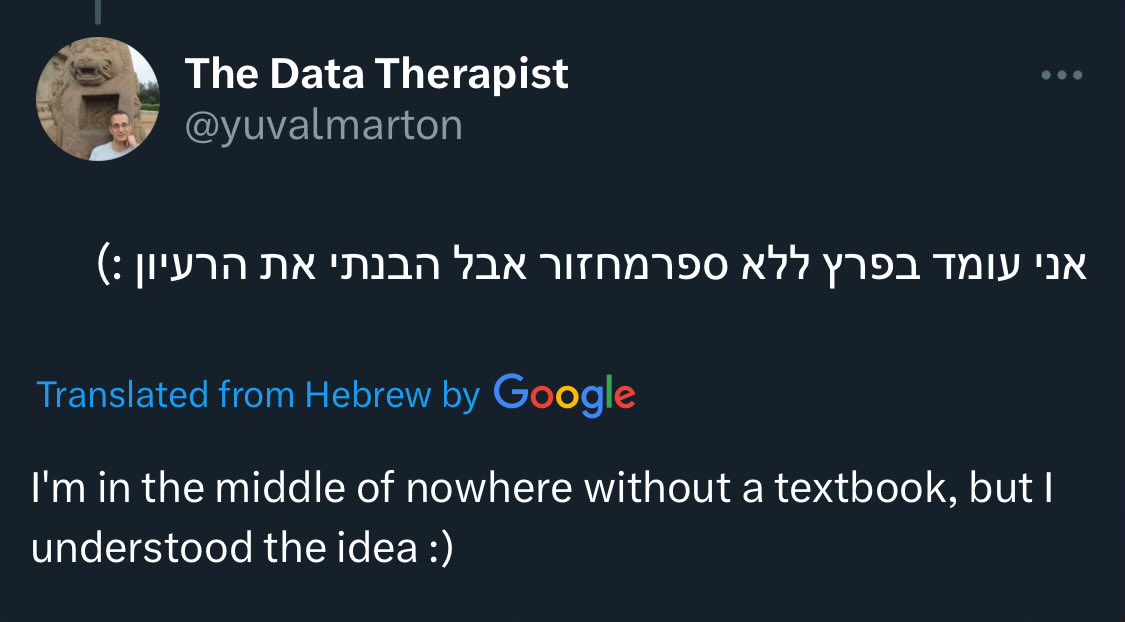

Low quality of #neuralempty #translation as a cited reason for blocking #neuralempty #translation (here from #Hebrew) on social media

Not knowing the base model’s training data is indeed a growing problem to solve in #llm #nlproc #neuralempty today. It’ll snowball until we realize open weights are merely good initialization and start some unlearning + retraining with transparent data.

state of open-source AI in 2025:
- almost all new open American models are finetuned Chinese base models
- we don’t know the base models’ training data
- we have no idea how to audit or “decompile” base models

who knows what could be hidden in the weights of DeepSeek 🤷♂️

Like language varieties much? Vardial is back @eaclmeeting 2026! sites.google.com/view/vardial-2… #nlproc #llm #neuralempty

Interns application season!! #nlproc #neuralempty #llm linkedin.com/feed/update/ur…

Interview #llm FAQs:
- Explain activation recomputation, aka gradient checkpointing.
- What is the main characteristic of the input data that would usually trigger the need for optimizing activations?
- Which one has the cheapest activation FLOPs: attention, feed-forward, or ReLU?

Okay that’s all for today!! Go to your fav @OpenAI @GeminiApp @AnthropicAI and get those answers, prep and come work with us @Apple #neuralempty #nlproc #llm jobs.apple.com/en-us/details/…

Okay... this is madness... It's just 1+ hrs since my previous rant on paywalls. Sooo, this time it's #neuralempty. I saw this from @slatornews slator.com/2025-slator-pr… but I don't like to pay, soooo...

AGI or human intelligence? I suspect not much of either. #neuralempty #HumanLevelTranslation #NLProc #escapism

I thought I'd seen my share of sloppy bilingual signage in Arabic but this is a whole different level. (The Arabic says, in colloquial dialect, “same thing but in Arabic”)

Soooo now I’m curious enough to buy and play Silksong in Chinese. #neuralempty rockpapershotgun.com/team-cherry-wo…

Machine translation usually isn't the final product that people/users want; it's usually a means to an end. Hence the next generation of #neuralempty would be #llm style "translate + summarize", "translate + explain", "translate + personalize", "translate + stylize".

I'm convinced. #neuralempty cannot be just translation anymore. Translate this to English: 세상에서 가장 가난한 왕은? 최저임금. Model G: Who is the poorest king in the world? Minimum wage (Choijeo imgeum). [The joke plays on 'imgeum' (임금), which can mean both 'wage' and 'king'.]

Now for another #neuralempty idea for wordplay-workshop.github.io: go to your fav #LLM, make it explain and generate chatgpt.com/share/68770578… Now take Tatoeba's most frequent sentences and make it generate images in Hylian/Sheikah. Then you'll find that there are many unsolved NLP tasks =)

wordplay-workshop.github.io/overview: Official website for the Wordplay Workshop at EMNLP 2025. Exploring interactive narratives, text-adventure games, and AI agents in language-based environments. Join us in Suzhou, China, November...

Want a free #nlproc idea for a paper for wordplay-workshop.github.io at @emnlpmeeting organized by @rajammanabrolu et al.? Go to the nookipedia.com/wiki/Catch_quo… page, then do this to your favorite #LLM: chatgpt.com/share/6877025f…
