#parallelization search results

"Attention mechanism: the heart of the Transformer. With Mode, it takes in the entire context at once! Parallel processing makes it fast." #AttentionMechanism #Parallelization

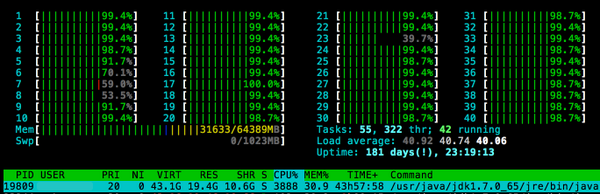

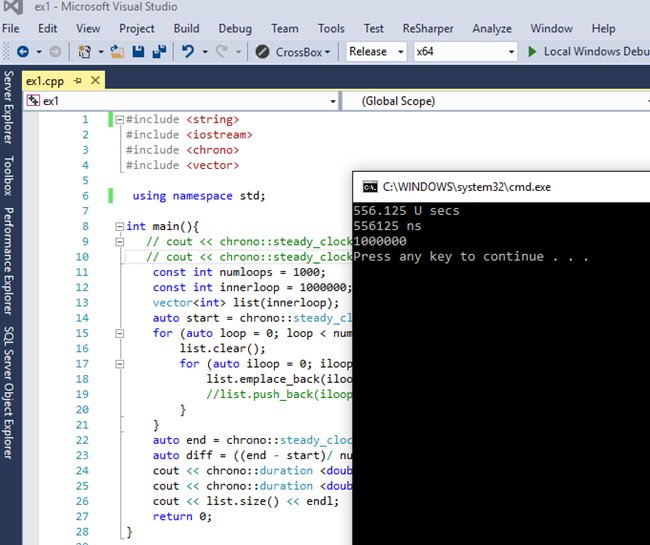

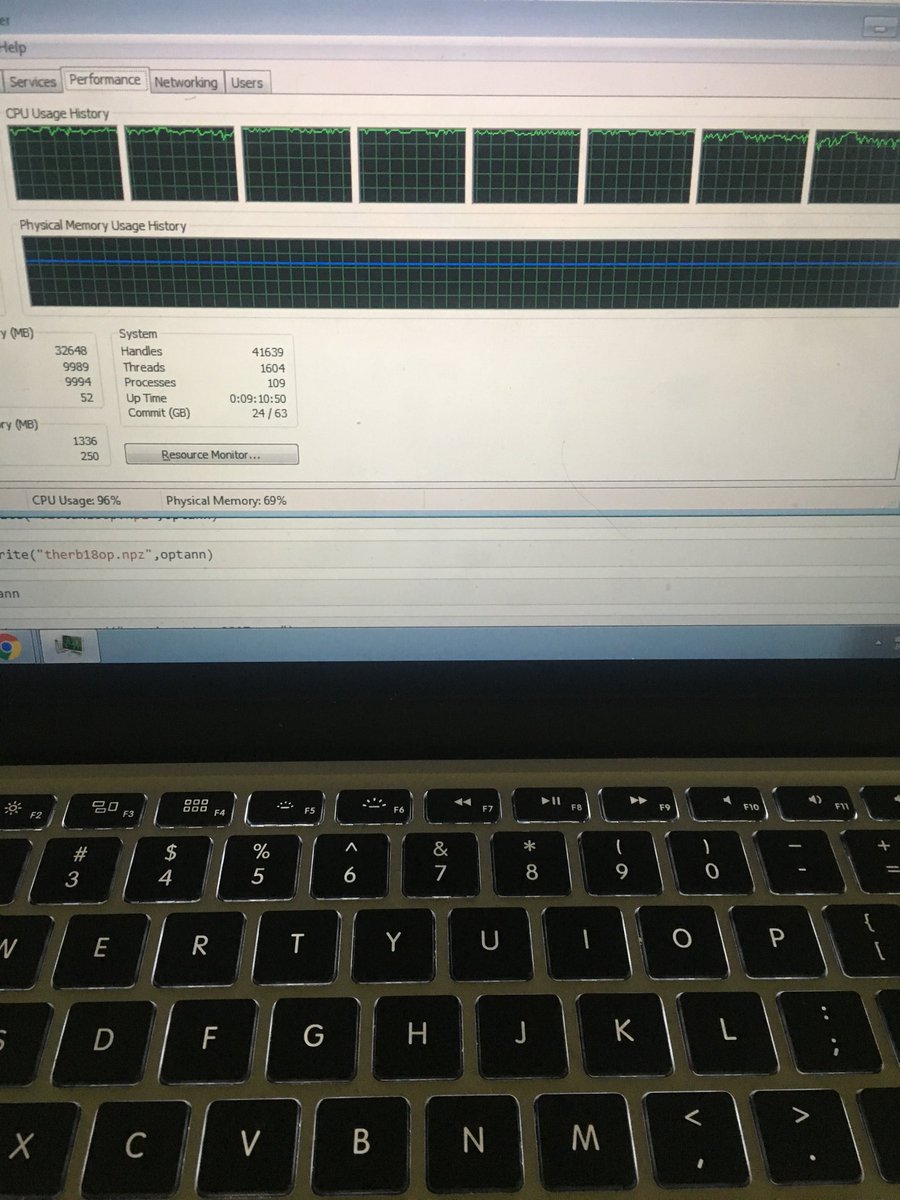

3/8 The speedup was made possible by #parallelization. By breaking the workload into smaller chunks and processing them simultaneously, the state sync process could take full advantage of multiple $CPU cores.
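How much chunking like this helps depends on how much of the work can actually run in parallel. One common way to reason about it is Amdahl's law, which the post does not mention; the symbols $p$ (parallelizable fraction of the runtime) and $n$ (number of cores) are introduced here purely for illustration:

```latex
S(n) = \frac{1}{(1 - p) + \frac{p}{n}}, \qquad \lim_{n \to \infty} S(n) = \frac{1}{1 - p}
```

For example, with $p = 0.9$ and $n = 8$ the bound is $1 / (0.1 + 0.1125) \approx 4.7$, and no number of cores can push it past $10$.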

What is @fuel_network? It is a very promising Layer 2, different from what already exists, since its goal is to "snap in" to a #modular design and take over execution, which, being built with #Parallelization techniques, is very fast and very stable.

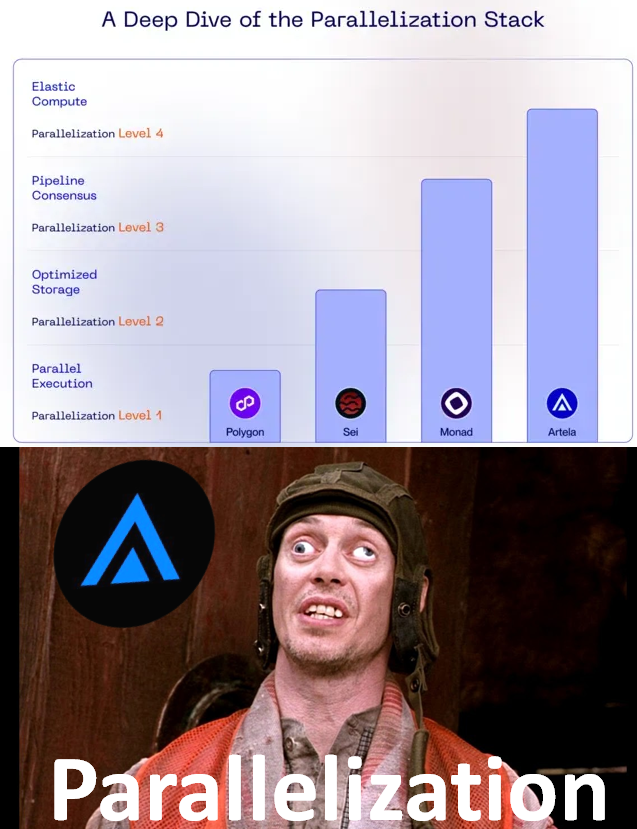

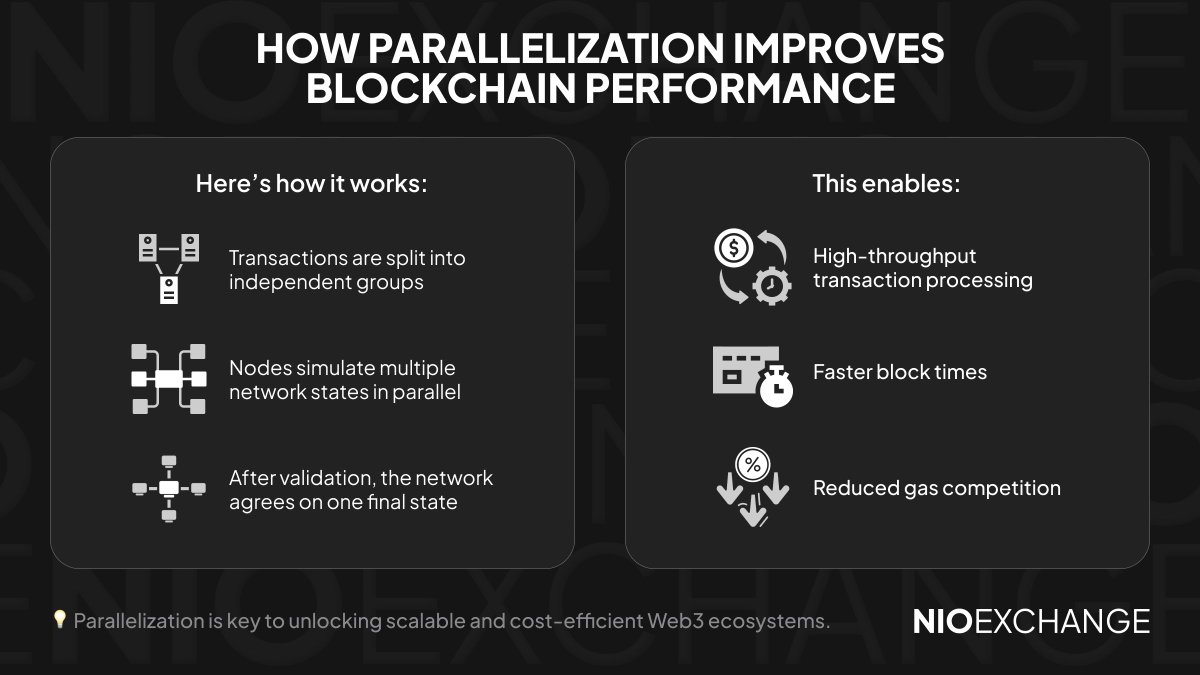

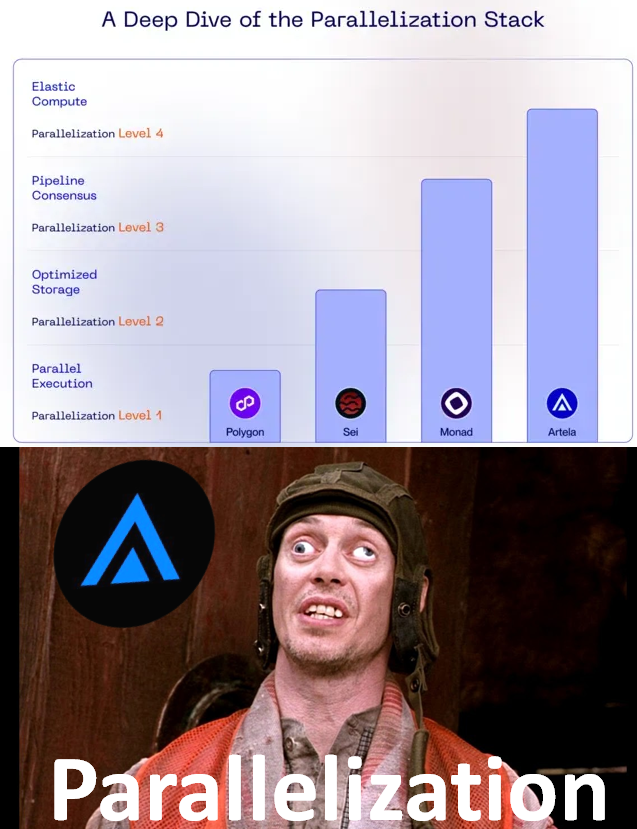

What Is Full-Stack Parallelization? 🛠️ Full-stack parallelization involves optimizing both computation and storage to work in parallel. This allows blockchain networks to handle more transactions simultaneously, improving speed and efficiency. #Blockchain #Parallelization

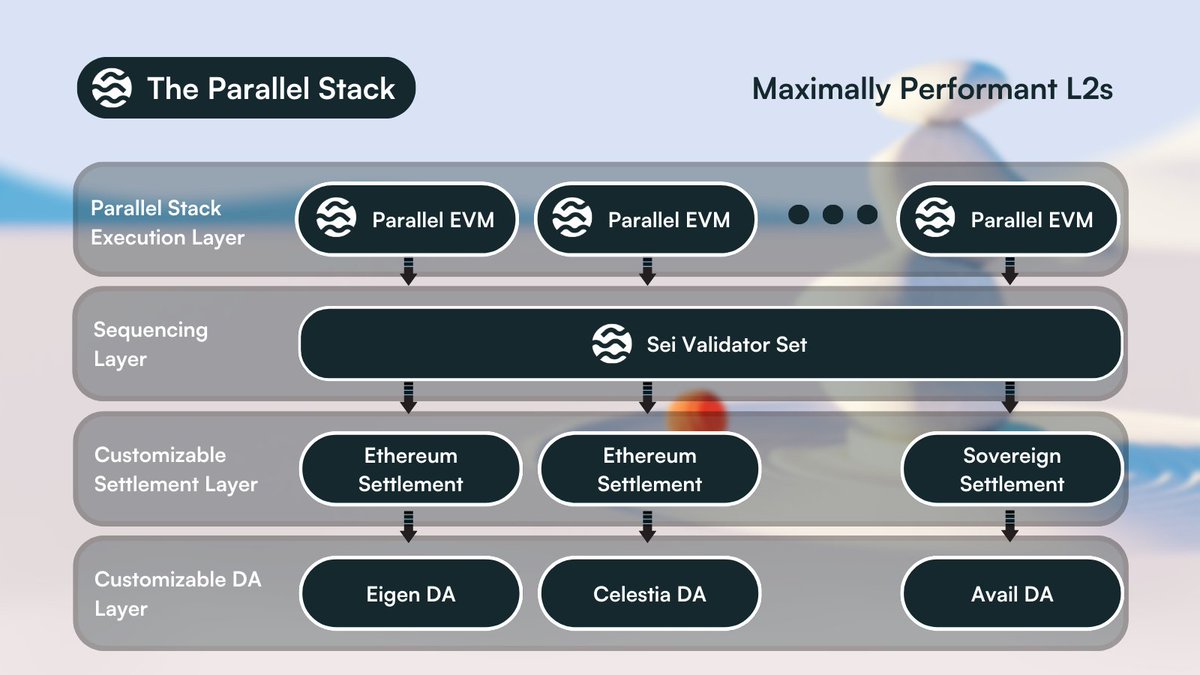

2/ Most solutions for #ETH are single-threaded. With $SEI parallelization, these networks will be able to process more transactions in parallel, improving L2 and rollup performance. This is the power of #parallelization.

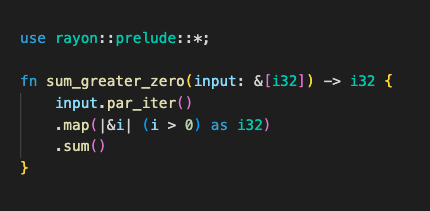

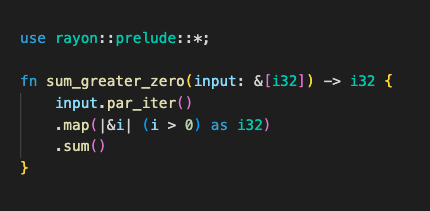

🦀 #daily #Rust 49. Simple #parallelization of a for loop using the Rayon crate by adding an import and using the .par_iter() parallel #iterator provided by Rayon. The code counts elements >0. Expected speedup is ~X where X is the nr. of CPU cores you have:
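The Rust snippet attached to this post is not part of this capture. As a rough Python analogue (not the author's Rayon code), the same idea of splitting a loop into chunks and counting elements > 0 in each chunk concurrently might look like the sketch below. The function names `count_positive` and `parallel_count_positive` are hypothetical. A thread pool is assumed for portability; in CPython the GIL means threads will not speed up this pure-Python kernel, so a `ProcessPoolExecutor` would be the swap to try for a real multi-core speedup.

```python
from concurrent.futures import ThreadPoolExecutor
import os


def count_positive(chunk):
    """Sequential kernel: count the elements of one chunk that are > 0."""
    return sum(1 for x in chunk if x > 0)


def parallel_count_positive(values, workers=None):
    """Split `values` into roughly equal chunks and count positives per chunk concurrently."""
    workers = workers or os.cpu_count() or 1
    chunk_size = max(1, -(-len(values) // workers))  # ceiling division, at least 1
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is counted independently; the partial counts are then summed.
        return sum(pool.map(count_positive, chunks))


data = [3, -1, 0, 7, -5, 2, 9, -8, 4, 0]
print(parallel_count_positive(data, workers=4))  # prints 5
```

The structure mirrors what a parallel iterator does behind the scenes: partition the input, run the same kernel on each partition, and combine the partial results with an associative operation (here, addition).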

1. In High Performance Computing (#HPC), it is not how fast you write the code, it is how fast the code you write runs. 2. It is all about memory: how much you use and how often you load it. 3. If you load one value, you get 6-8. 4. #parallelization will expose flaws in your code.

Faster Training of Large Language Models with Parallelization - Drops of AI #LLM #LargeLanguageModels #Parallelization #AI #DeepLearning #MachineLearning #Transformer #DataParallelism #ModelParallelism #TensorParallelism #PipelineParallelism #ZeRO #GPUs dropsofai.com/faster-trainin…

Implementing Faster Training of Large Language Models with Parallelization. Learn about key parallelism techniques for GPUs and TPUs to acce

WOW! An introduction that will hook you to read it till the end. (Cf. Guy E. Blelloch) #parallelization cs.cmu.edu/~guyb/papers/B…

Ahooy #seiyans New month, new season, new quarter and new possibilities come to @SeiNetwork 🌊 Last night all of #ETHDenver2024 witnessed the power of #parallelization and the future of #web3 @SeiMarines #SEI $SEI #ahoy

That's superb? 😱 I can only imagine but I don't think I or the industry is prepared for what's coming 🤔 It's big, it's massive and I'm loving the feelings #goQuai #Parallelization #ExecutionSharding

Today & tomorrow we're teaching "Shared memory #parallelization with OpenMP" for multi-core, shared memory & ccNUMA platforms. The hands-on labs let you immediately test and understand the #OpenMP directives, environment variables, and library routines vsc.ac.at/training/2023/…

Here is the verdict on yesterday's live & streamed #PyData #Venice meetup: stimulating ideas and refreshments! Thanks to Qintesi and @lucacorsato, @inmediaref and @perlfly for being there despite the rain! #Parallelization #DataManagement #BestPractices @PyData github.com/pandle/PyDataV…

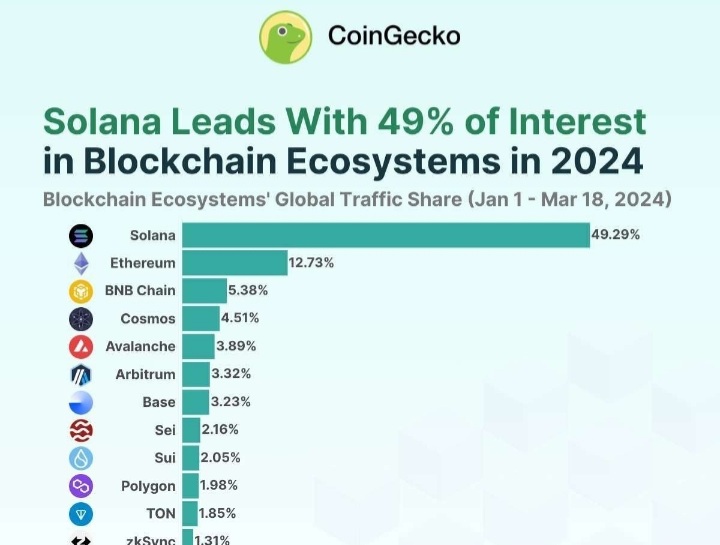

Ahoy #seiyan 🛥 Did you notice? @SeiNetwork is in the top 10 for global blockchain traffic, and this is just the beginning. The wave of #parallelization is arriving, and with it the EVM will be able to offer a web3 experience as fast as web2: 12,500 TPS, 390ms TTF (even less) #sei it

They say “many hands make light work.” The same is true of computing. #Parallelization breaks big tasks into smaller ones that can then be executed simultaneously across multiple processors. And we have @IllinoisCS' David J. Kuck to thank: bit.ly/IEEEAwards-Rec… #IEEEAwards2024

hack or just playing smarter? 🤔 #gmonad #Parallelization #EVM

Understanding EVM Parallelization: EVM parallelization is a fusion of two terms: #EVM and #parallelization. To grasp its essence, delving into the definitions of each term becomes crucial. EVM, an abbreviation for Ethereum Virtual Machine, represents Ethereum's computational…

Ethereum → Slow & Steady 🐢 Other L1s → Compromise 🤷♂️ Monad → Parallel EVM ⚡ The obvious choice. #Monad #ETH #Parallelization

Ethereum → Legacy 🐢 Other L1s → Compromise 🤷♂️ Monad → Parallel EVM ⚡ The clear winner. #Monad #ETH #Parallelization

Ethereum → Legacy 🐢 Other L1s → Compromise 🤷 Monad → Parallel EVM ⚡ The choice is obvious. #Monad #ETH #Parallelization

🚀 Parallelization = more power 💪 It lets blockchains process multiple transactions at once, not one by one. Result? Blazing fast speed & scalability 🔗 #BlockchainTech #Parallelization #NioX
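To make "multiple transactions at once, not one by one" concrete, here is a minimal sketch of one possible approach: group transactions into batches whose members touch disjoint accounts, then run each batch concurrently. This is an illustration under stated assumptions, not the design of any chain named in this feed. The transaction shape (`sender`, `receiver`, `amount`) and the functions `schedule_batches`, `apply_transfer`, and `execute` are hypothetical, and it assumes every account already exists in `balances`.

```python
from concurrent.futures import ThreadPoolExecutor


def schedule_batches(transactions):
    """Group transactions into ordered batches with no shared accounts inside a batch."""
    batches = []  # list of (accounts touched so far, transactions in the batch)
    for tx in transactions:
        accounts = {tx["sender"], tx["receiver"]}
        # Find the latest batch that conflicts, so conflicting txs keep their order.
        last_conflict = -1
        for i, (touched, _) in enumerate(batches):
            if not touched.isdisjoint(accounts):
                last_conflict = i
        target = last_conflict + 1
        if target == len(batches):
            batches.append((set(), []))
        touched, txs = batches[target]
        touched |= accounts  # in-place update of the set stored in the tuple
        txs.append(tx)
    return [txs for _, txs in batches]


def apply_transfer(balances, tx):
    """Move `amount` from sender to receiver (no overdraft checks in this sketch)."""
    balances[tx["sender"]] -= tx["amount"]
    balances[tx["receiver"]] += tx["amount"]


def execute(balances, transactions, workers=4):
    """Run batches one after another; transactions inside a batch run concurrently."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for batch in schedule_batches(transactions):
            # list() forces the whole batch to finish before the next one starts.
            list(pool.map(lambda tx: apply_transfer(balances, tx), batch))
    return balances


balances = {"alice": 10, "bob": 5, "carol": 0, "dave": 7}
txs = [
    {"sender": "alice", "receiver": "bob", "amount": 3},
    {"sender": "dave", "receiver": "carol", "amount": 2},
    {"sender": "bob", "receiver": "carol", "amount": 4},
]
print(execute(balances, txs))  # prints {'alice': 7, 'bob': 4, 'carol': 6, 'dave': 5}
```

The first two transfers share no accounts and land in the same batch; the third touches `bob` and `carol`, so it waits for the next batch. Placing each transaction after its latest conflicting batch is what keeps dependent transactions in their original order.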

Efficient Hardware Scaling and Diminishing Returns in Large-Scale Training of Language Models Jared Fernandez, Luca Wehrstedt, Leonid Shamis et al. Action editor: Binhang Yuan. openreview.net/forum?id=p7jQE… #parallelization #gpus #gpu

Our scalable AI training uses shard chain parallelization: each shard trains a model sub-component, with final aggregation via BFT consensus. Speeds up large-scale NLP training by 4x. @PublicAI #ShardTraining #Parallelization #NLPAcceleration

Efficient Hardware Scaling and Diminishing Returns in Large-Scale Training of Language Models openreview.net/forum?id=p7jQE… #parallelization #gpus #gpu

REX: GPU-Accelerated Sim2Real Framework with Delay and Dynamics Estimation Bas van der Heijden, Jens Kober, Robert Babuska, Laura Ferranti tmlr.infinite-conf.org/paper_pages/O4… #simulates #simulated #parallelization

🔍 Discover the Power of Parallelization! 🌐 Read and unlock the secrets to faster, more efficient computing! Whether you're into software development or just curious about optimization. Check more👉ceexglobal.zendesk.com/hc/en-001/arti… #Parallelization #TechInsights #Programming…

Let's discuss specific implementation strategies and explore the best approaches for balancing performance and resource utilization. #ZKPs #Parallelization #Scalability #CrossChain

REX: GPU-Accelerated Sim2Real Framework with Delay and Dynamics Estimation Bas van der Heijden, Jens Kober, Robert Babuska, Laura Ferranti. Action editor: Florian Shkurti. openreview.net/forum?id=O4CQ5… #simulates #simulated #parallelization

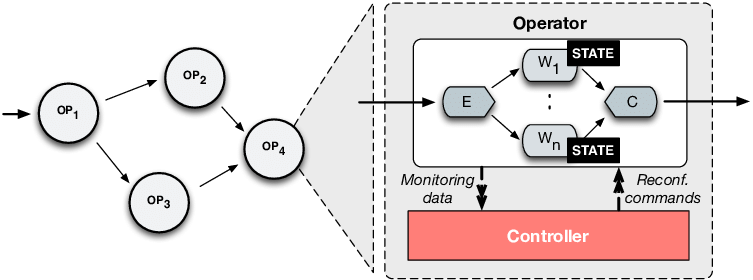

How #Data #Parallelization Works in Streaming Systems searchdatamanagement.techtarget.com/post/How-paral… #MachineLearning #NLP #Analytics #AI #100DaysOfCode #DEVCommunity #DevOps #IoT #IIoT #Serverless #5G #Womenintech #DataScience #Coding #Programming #BigData #RStats #TensorFlow #Java #Python

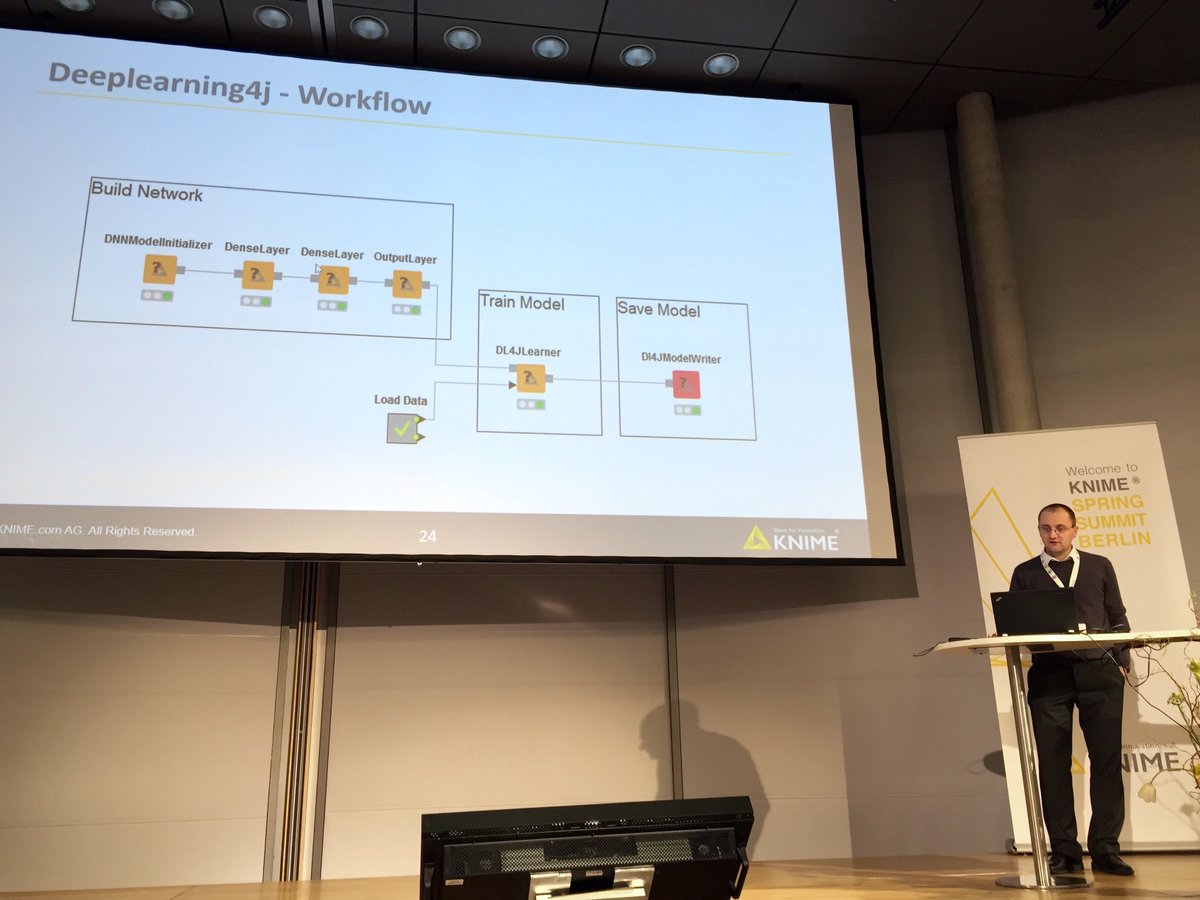

#KNIMESummit2016 Bernd Wiswedel on what's coming in 2016: #deeplearning integrated #parallelization #machinelearning

Did you know...? #parallelization #highdetail #data #display #seconds #BaseNPlatform #basencorporation

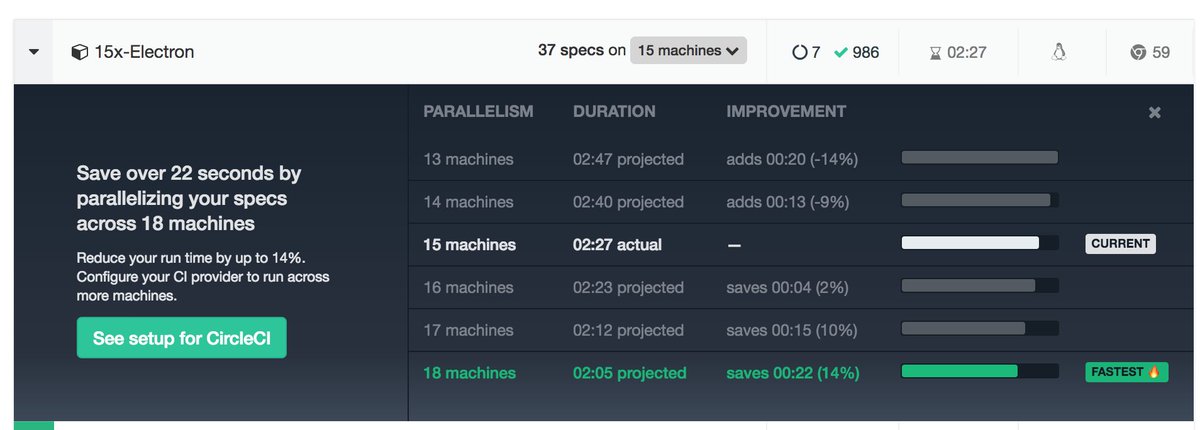

#NewFeature Alert for the #Cypressio Dashboard! 🚨 Introducing...the #parallelization calculator 👀 🔥 cypress.io/dashboard/

Neha Narula (@neha) on The Scalable Commutativity Rule -> buff.ly/2oLkY74 #NYC #parallelization #concurrency #os

Neha Narula on The Scalable Commutativity Rule - buff.ly/2jzJ4OV PDF - buff.ly/2jzJkO9 #parallelization #compsci

Neha Narula on The Scalable Commutativity Rule buff.ly/2lk3rQC #parallelization #concurrency #video #NYC

Why parallelize code? Here's an expert view on why and how sdm.link/parpart2 @Go_Parallel #parallelization

Increase #parallelization skills with these eval guides from @intel, others. sdm.link/evalguide @Go_Parallel

A busy day @embedded_world for our friends @silexica and their SLX Tool Suite silexica.com #multicore #parallelization
