#pytorch3d search results

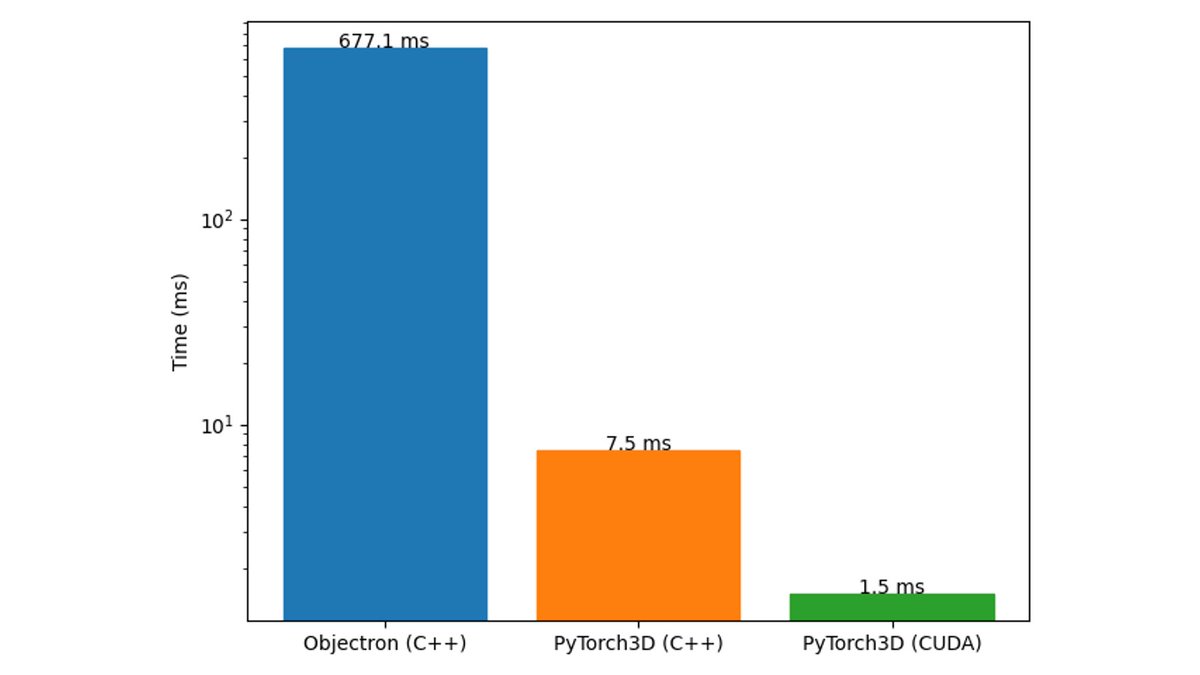

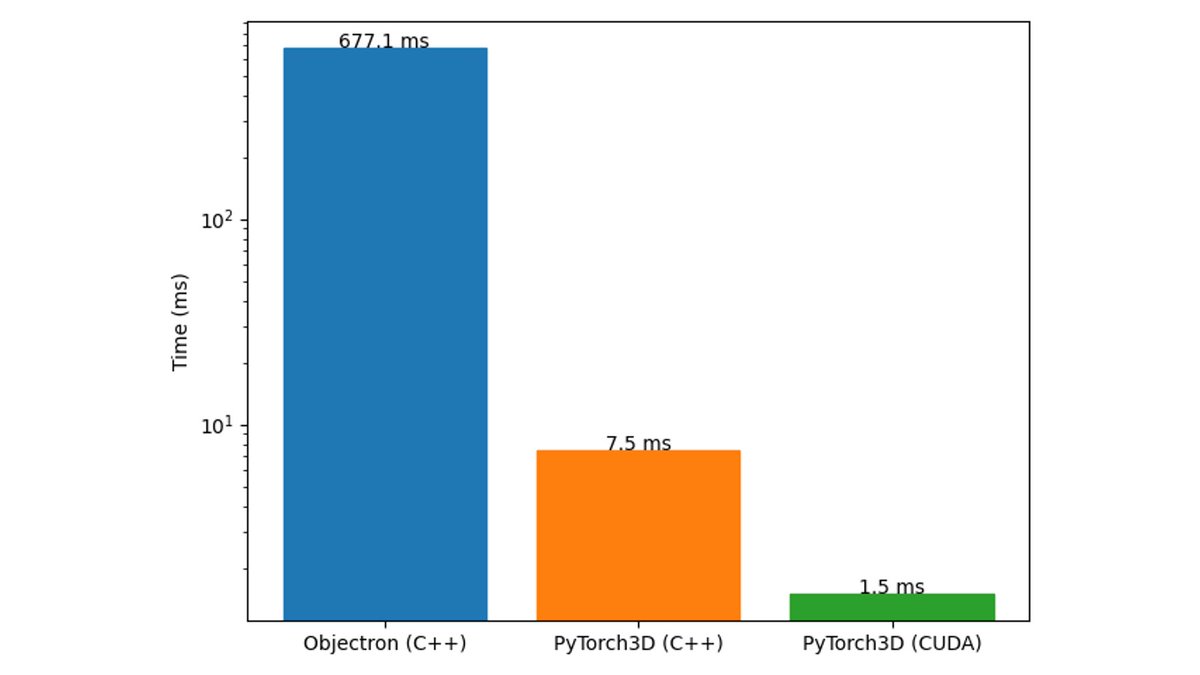

Spoiled by 2D, I was shocked to find out there are no good ways to compute exact IoU of oriented 3D boxes. So, we came up with a new algorithm which is exact, simple, efficient and batched. Naturally, we have C++ and CUDA support #PyTorch3D Read more: tinyurl.com/27zywpws
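For comparison with the oriented case described in the post above, here is a minimal sketch of the much simpler axis-aligned 3D IoU. This is not the algorithm the post announces: handling arbitrary rotations requires intersecting two general boxes, which PyTorch3D exposes as `pytorch3d.ops.box3d_overlap`. The function name `aabb_iou_3d` and the `(min_xyz, max_xyz)` box format are assumptions made for illustration.

```python
def aabb_iou_3d(box_a, box_b):
    """IoU of two axis-aligned 3D boxes, each given as (min_xyz, max_xyz).

    Hypothetical helper for illustration only; it does not handle rotated boxes.
    """
    (a_min, a_max), (b_min, b_max) = box_a, box_b

    # Intersection volume: product of the per-axis overlaps.
    inter = 1.0
    for lo_a, hi_a, lo_b, hi_b in zip(a_min, a_max, b_min, b_max):
        overlap = min(hi_a, hi_b) - max(lo_a, lo_b)
        if overlap <= 0:
            return 0.0  # boxes are disjoint (or only touch) along this axis
        inter *= overlap

    def volume(lo, hi):
        v = 1.0
        for l, h in zip(lo, hi):
            v *= h - l
        return v

    union = volume(a_min, a_max) + volume(b_min, b_max) - inter
    return inter / union


unit_cube = ((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
shifted_cube = ((0.5, 0.0, 0.0), (1.5, 1.0, 1.0))
print(aabb_iou_3d(unit_cube, shifted_cube))
```

Once the boxes are rotated relative to each other, the per-axis overlap trick no longer applies, which is the gap the post refers to.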

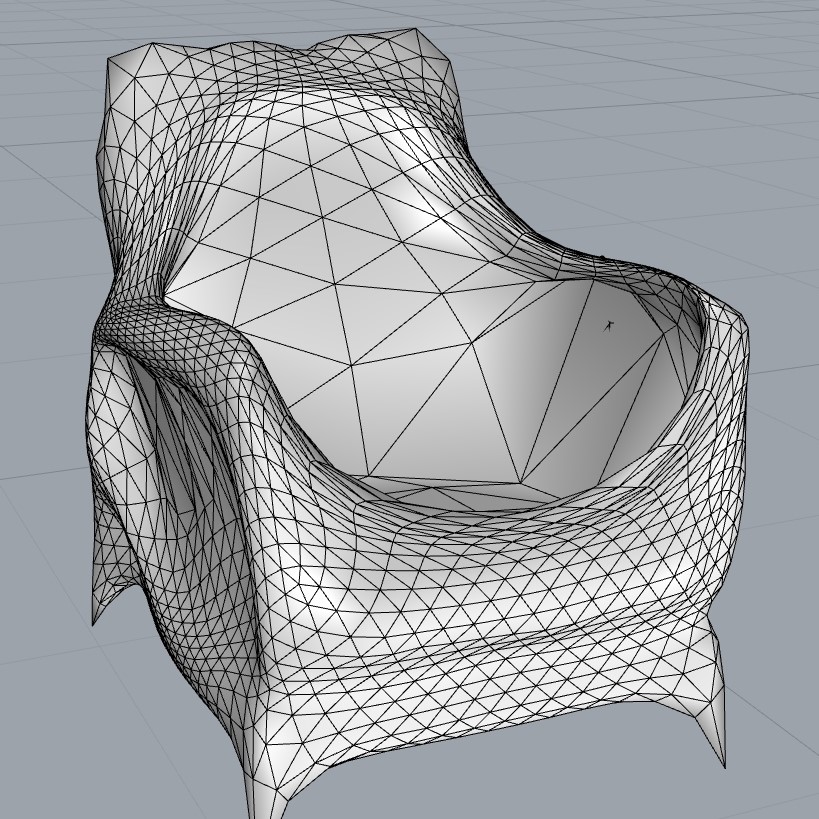

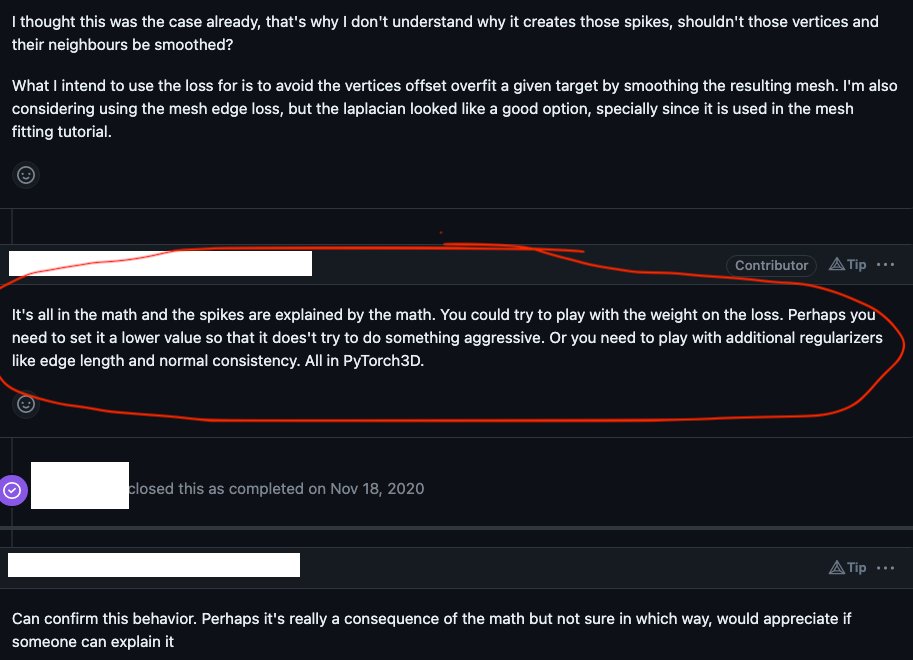

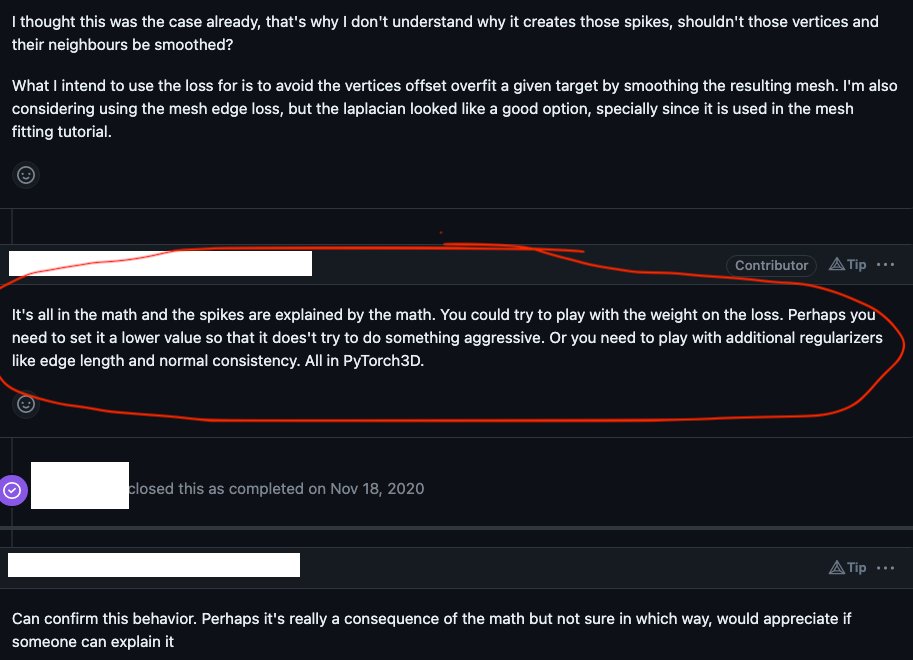

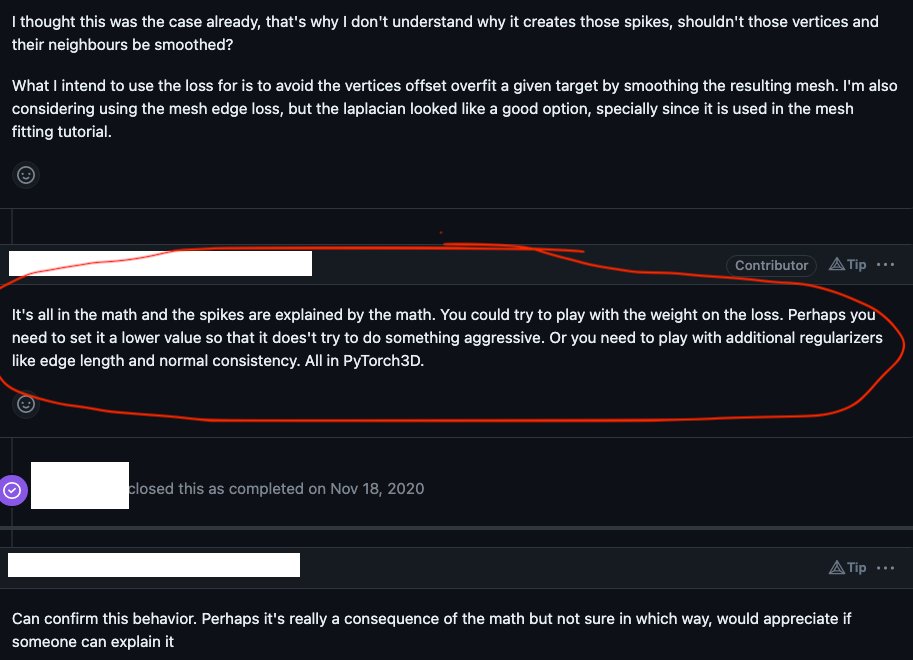

ItS aLL iN tHe MaTh BrO!! 🤷 #PyTorch3d #computervision Context: someone asked about weird behavior in the Laplacian smoothing operation causing spikes in meshes

Today we released code for SynSin, our CVPR'20 oral that generates novel views from a single image: github.com/facebookresear… We have: - Pretrained models - Jupyter notebook demos - Training and evaluation - #pytorch3d integration Congrats to @oliviawiles1 on the release!

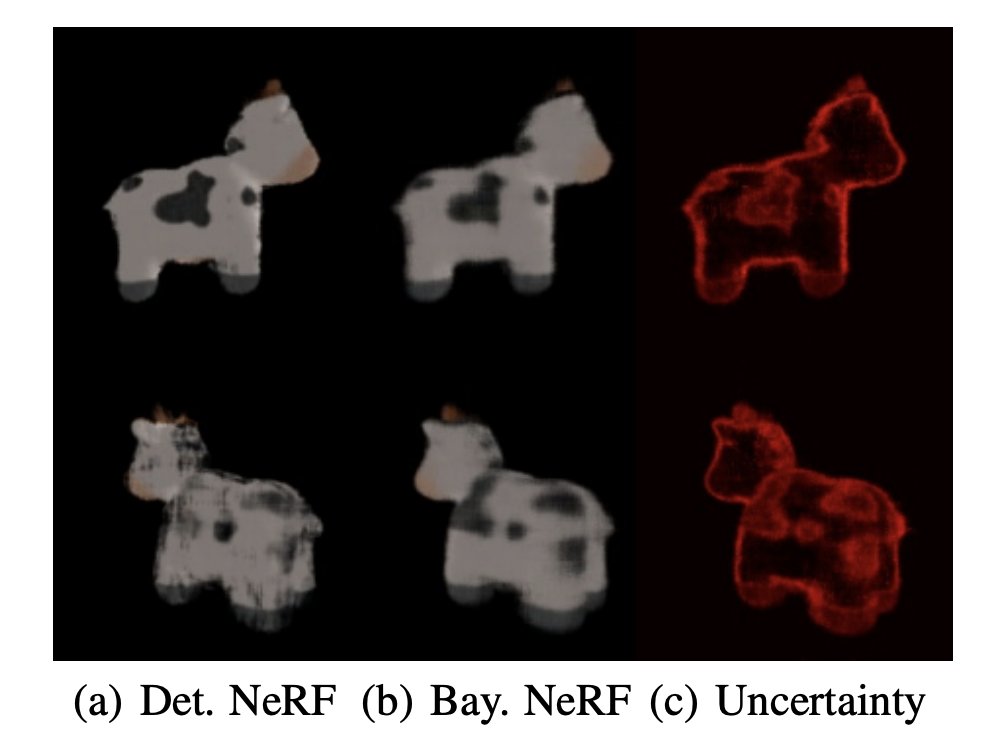

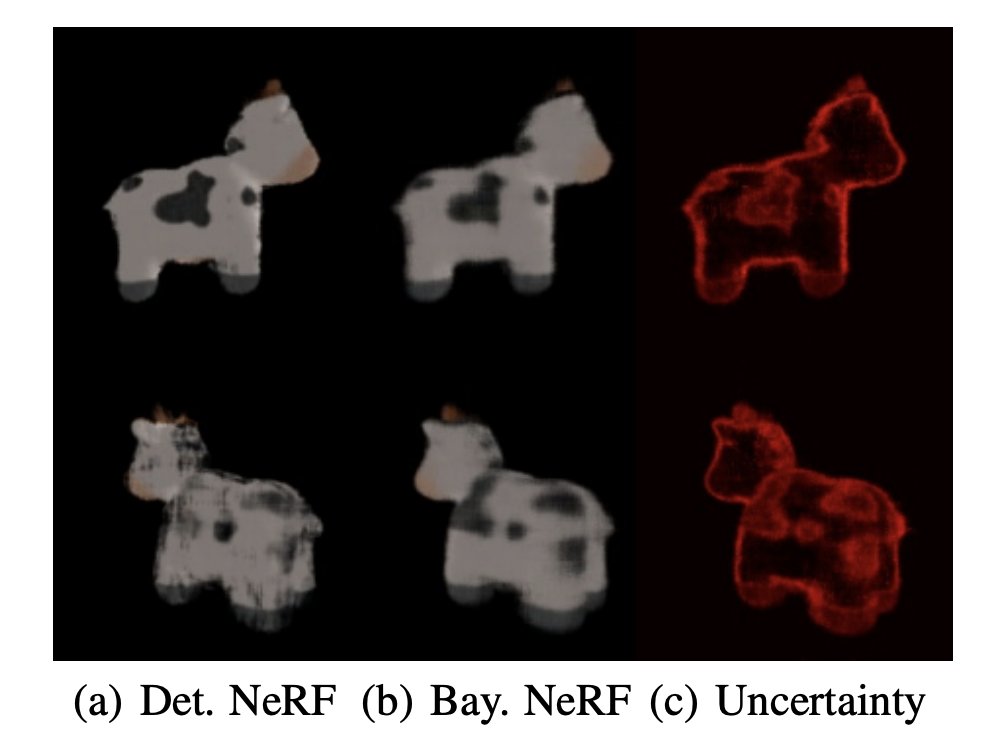

We demonstrate the utility of TyXe by integrating it with #PyTorch for Uncertainty and OOD, #PyTorch3D, and DGL to run Bayesian ResNets, Bayesian GNNs, and Bayesian Neural Radiance Fields. No cows were harmed or mis-inferred during this process (see this little NeRF demo) 4/5

Victorian Octopus #WorldTour2022 Aaron Wacker - Digital Animation #3dAnimation #Pytorch3d #VQGAN #CLIP #Diffusion #pifuHD

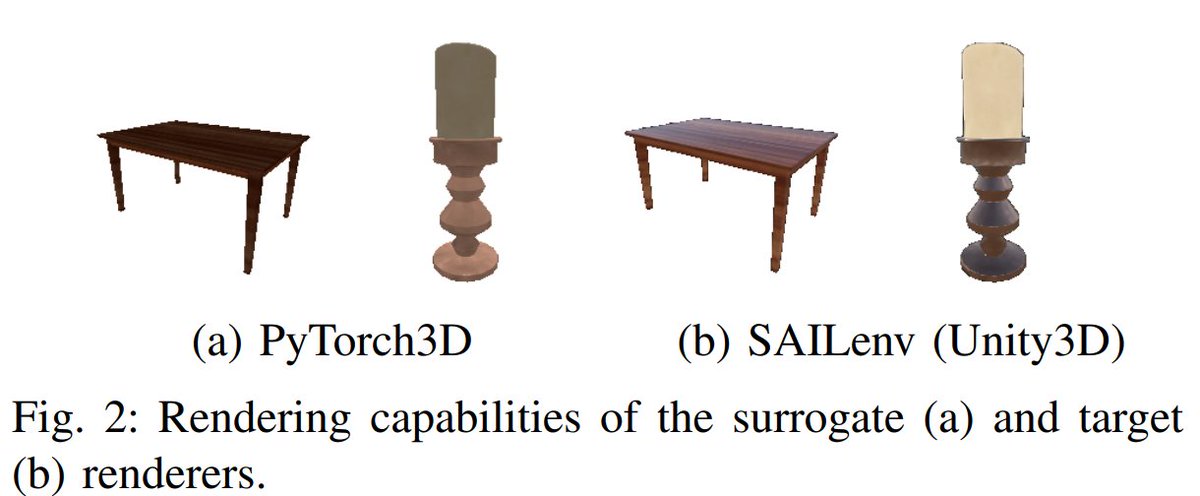

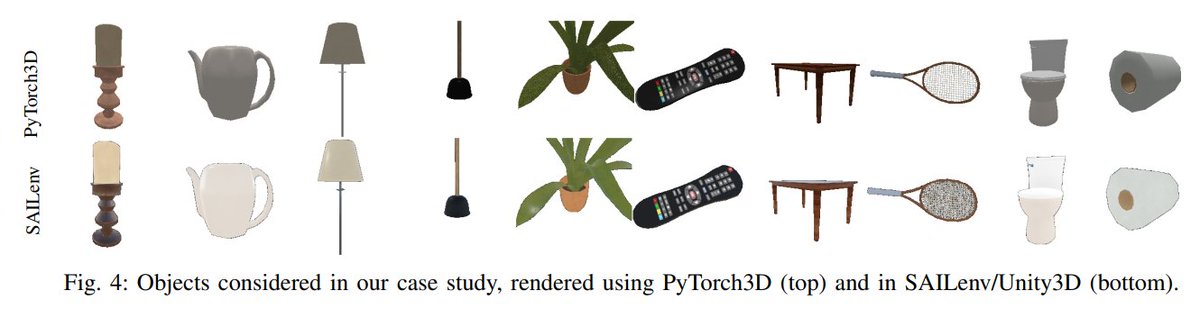

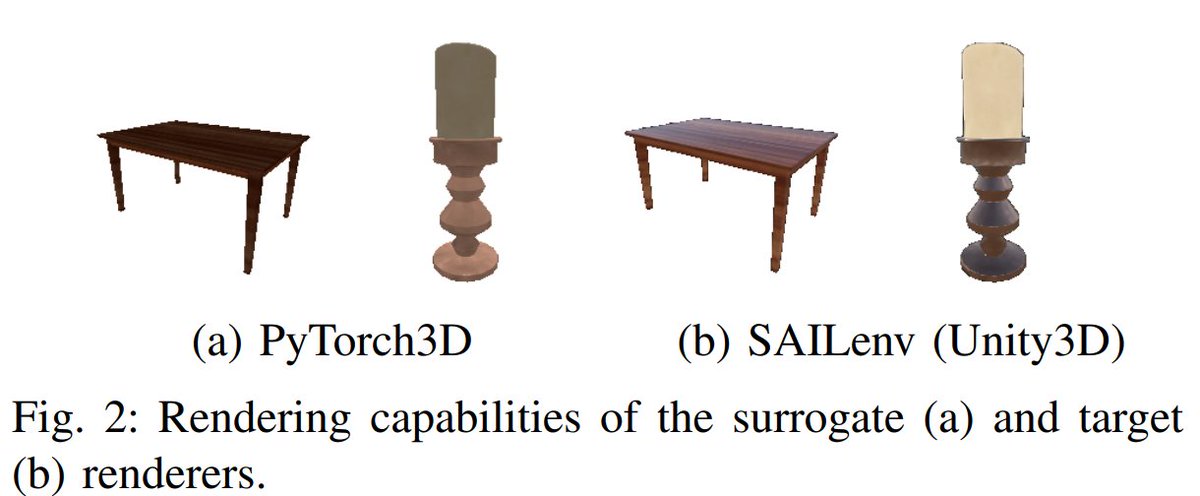

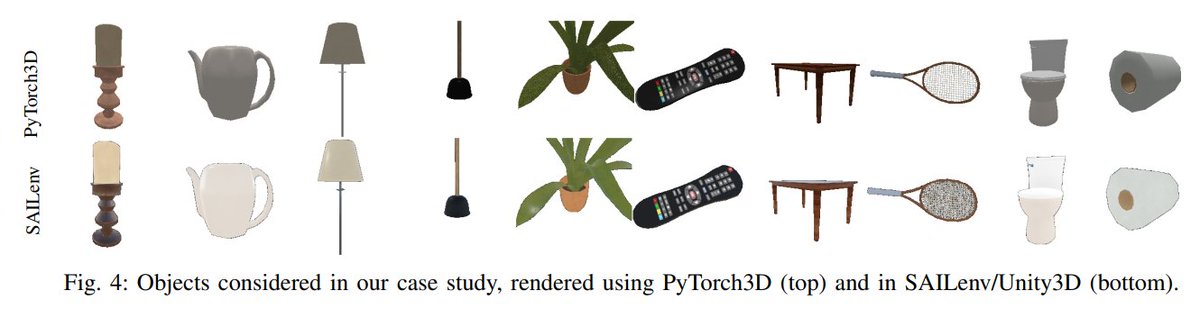

3D virtual environments are usually based on renderers (target renderers) with more advanced features (e.g. image quality) than those supported by versatile differentiable renderers (#pytorch3d), which we refer to as surrogate renderers. 3/6

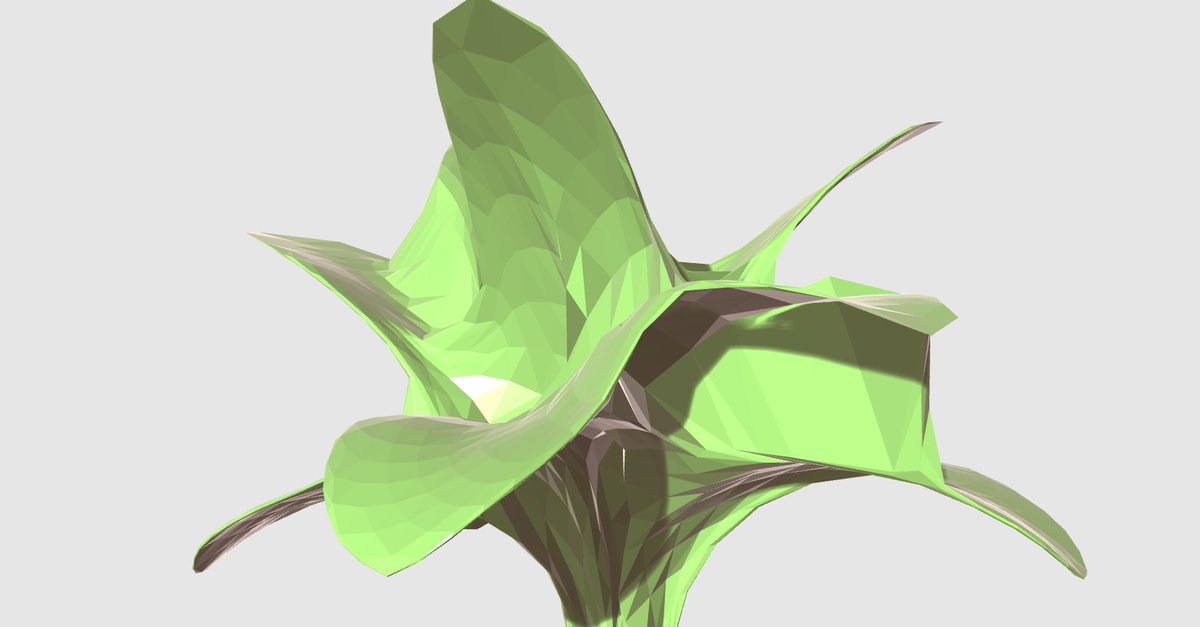

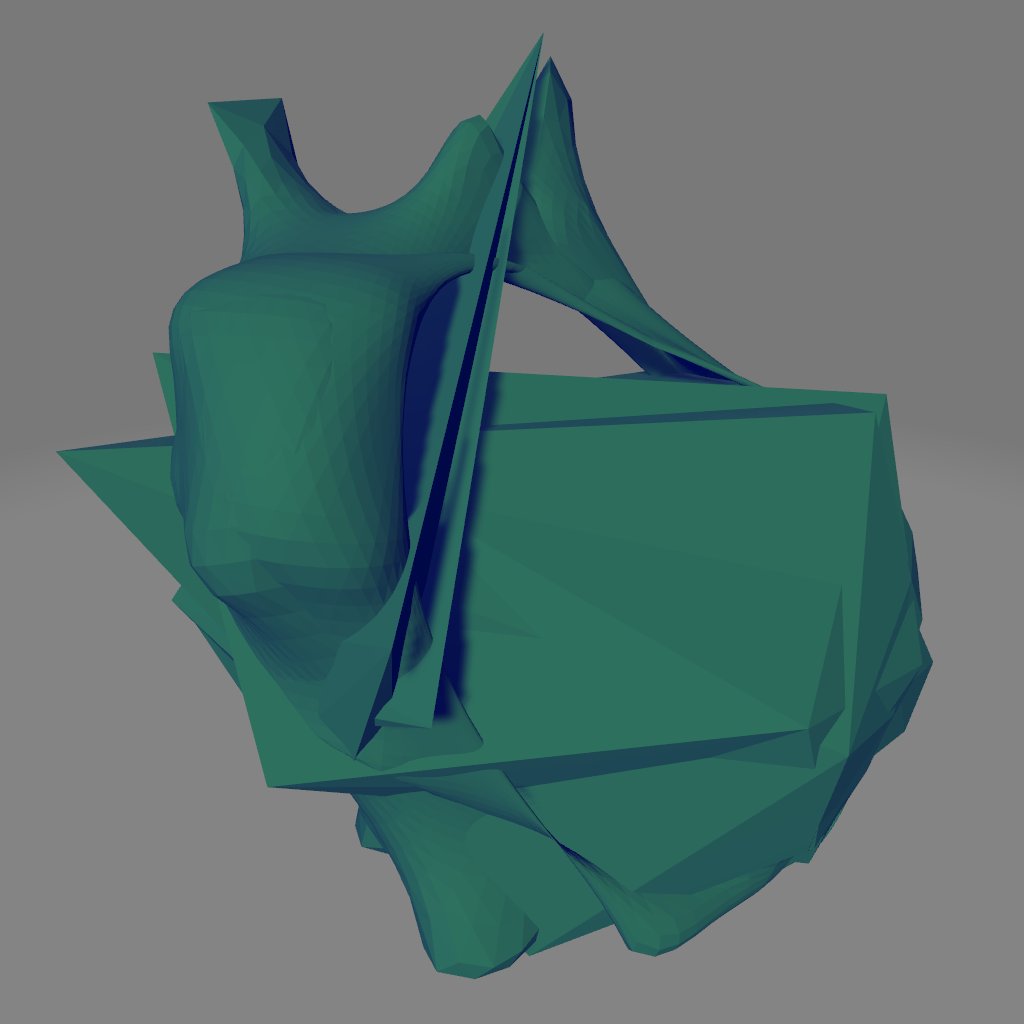

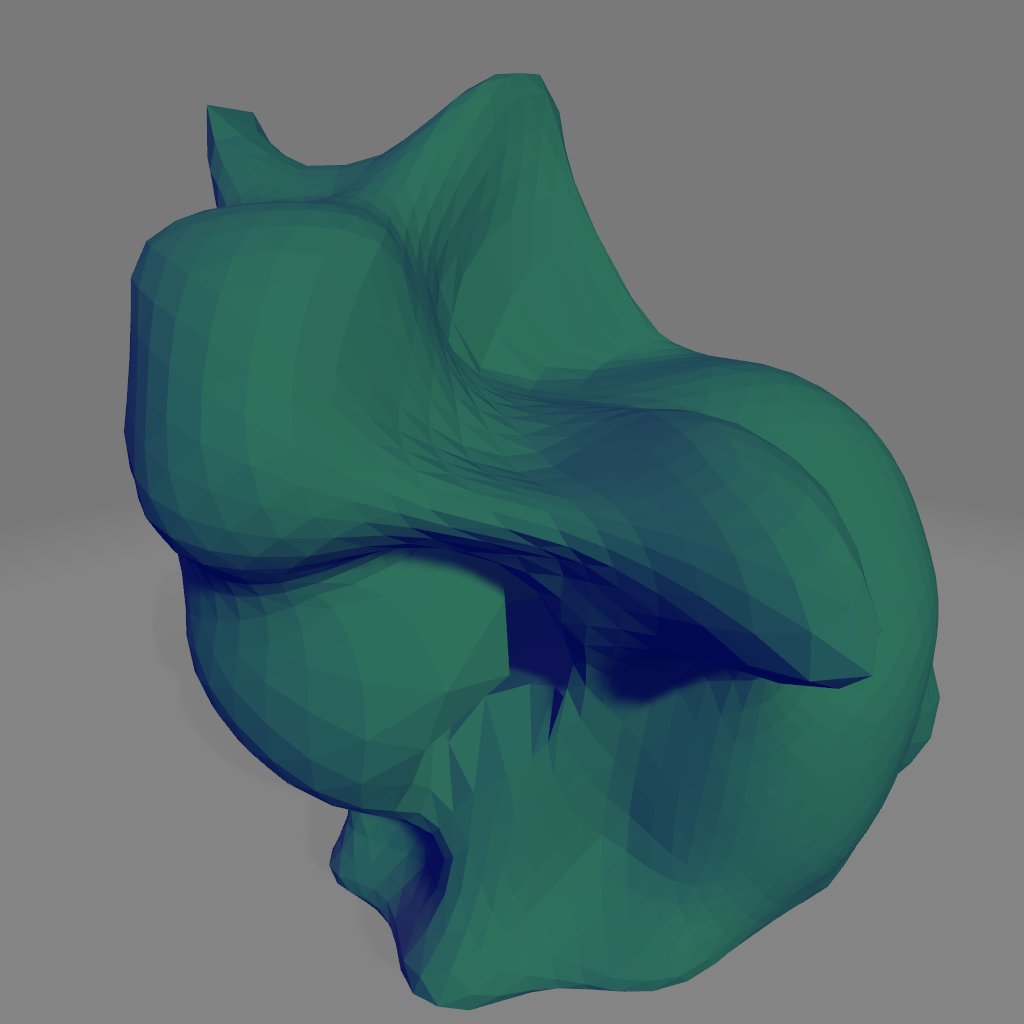

PyTorch3D now provides clean code to fit a textured mesh, a textured volume, and a simple neural radiance field. Plenty of handy 3D utils there, and very good tutorials/docs. Here are some examples fitting Suzanne: pytorch3d.org #pytorch3d #neuralrendering

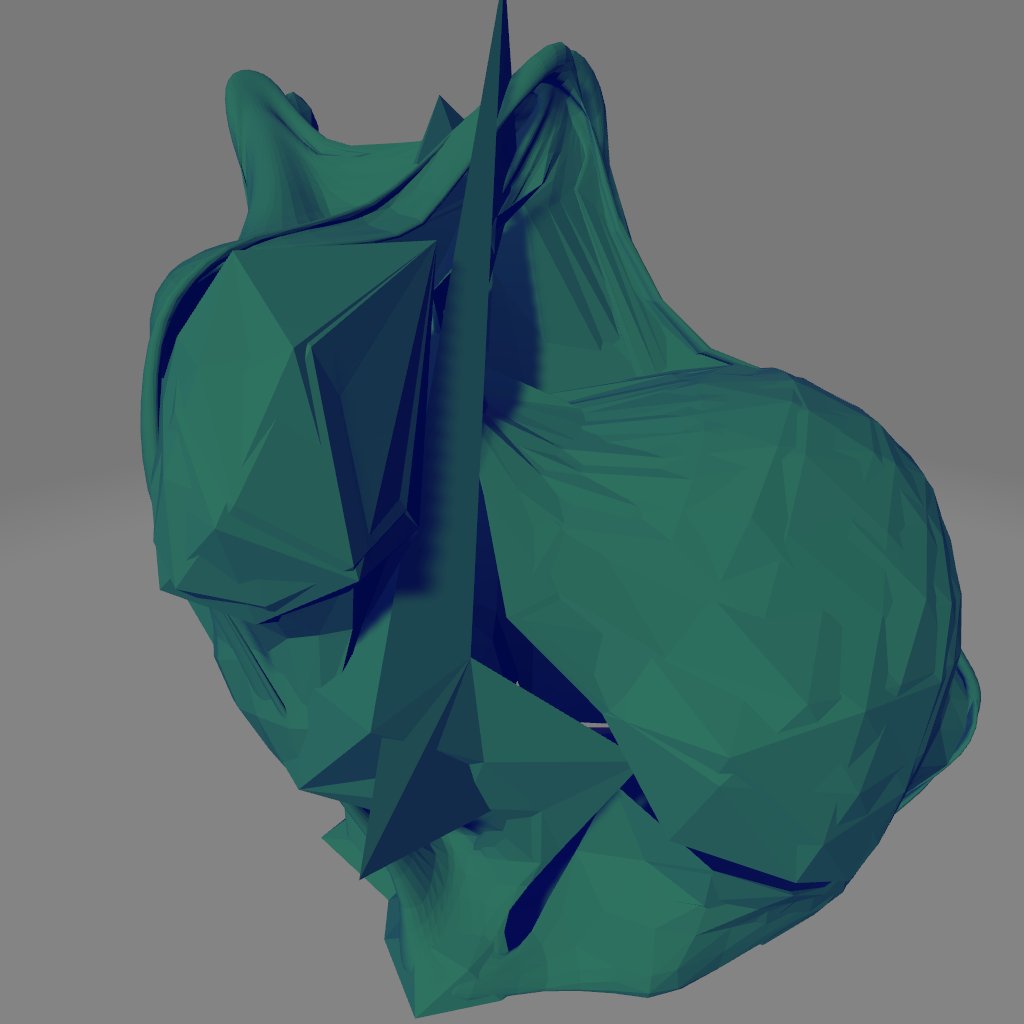

First needed to play with the major 3D ML libraries, #PyTorch3D and the Tensorflow counterpart @_TFGraphics_. Here I'm deforming a sphere into Suzanne via SGD by optimizing the chamfer distance (plus regularizers)
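The chamfer distance mentioned above can be sketched in plain Python as follows. This is an illustrative, unoptimized version using squared distances averaged per point set. The function name and the list-of-tuples input format are assumptions, and library implementations such as `pytorch3d.loss.chamfer_distance` are batched, differentiable, and may use different normalization conventions.

```python
def chamfer_distance(points_a, points_b):
    """Symmetric chamfer distance between two point sets (lists of 3-tuples).

    Hypothetical O(N*M) reference version: for each point, take the squared
    distance to its nearest neighbour in the other set, average per set,
    and add the two directions.
    """
    def sq_dist(p, q):
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    def one_way(src, dst):
        return sum(min(sq_dist(p, q) for q in dst) for p in src) / len(src)

    return one_way(points_a, points_b) + one_way(points_b, points_a)


source = [(0.0, 0.0, 0.0)]
target = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
print(chamfer_distance(source, target))
```

In a mesh-fitting loop like the one described, the two point sets would typically be sampled from the deforming mesh and the target mesh at each step, with the regularizers added to this term before the optimizer update.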

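I tried the PyTorch3D tutorial that optimizes the camera position based on the silhouette of an input image. The model rendered from the converged position is misaligned. Numerically, the position should be almost exactly on target, so I wonder why. #PyTorch3d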

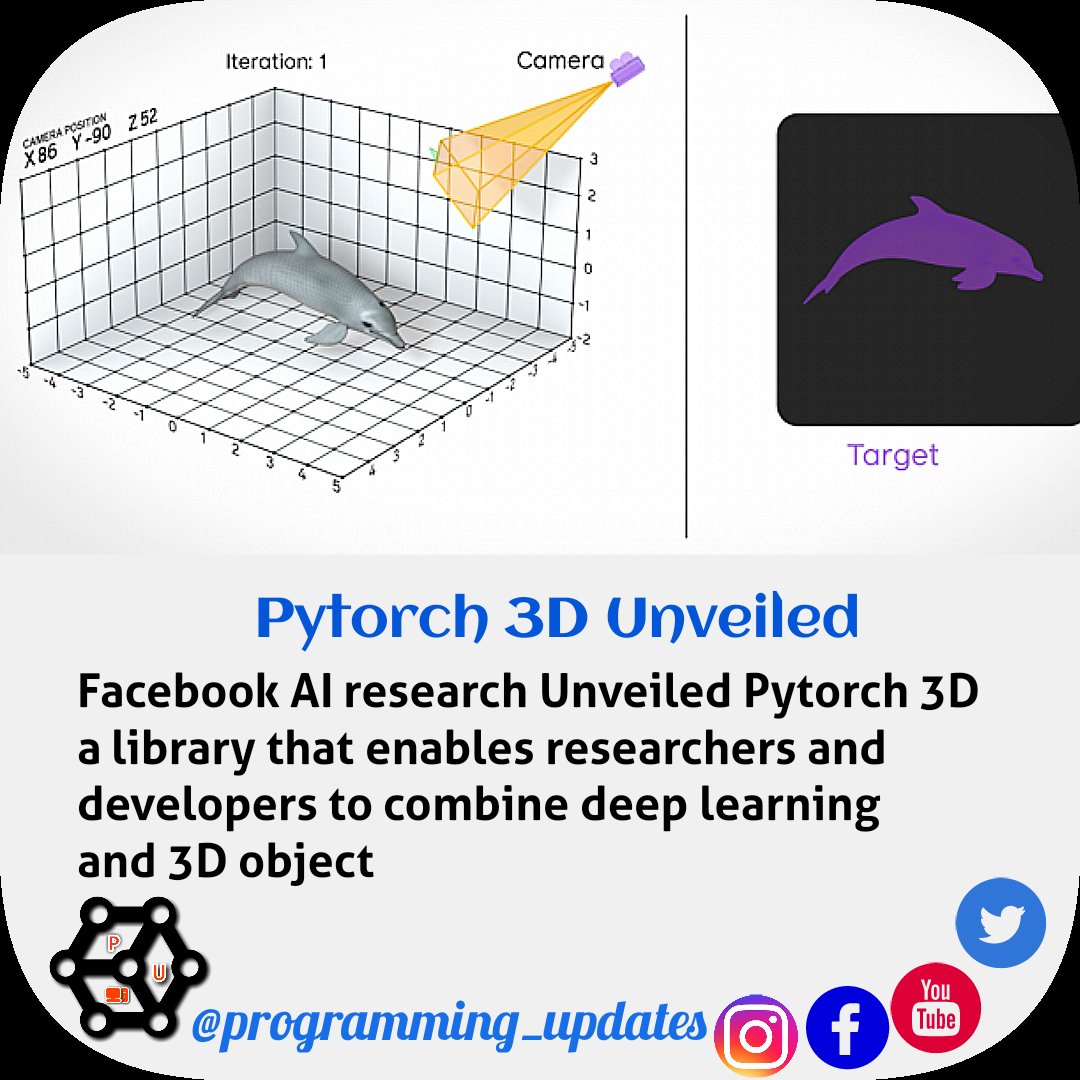

#PyTorch3D is a highly modular and optimized library for 3D deep learning built by @FacebookAI. The library is designed to provide the tools and resources necessary to make 3D deep learning easier with PyTorch. Learn more: bit.ly/3ca53bF

RT How to render 3D files using PyTorch3D dlvr.it/RymQWs #pytorch3d #3dmesh #rendering #python #imageprocessing

Nothing worse on a Sunday night than battling CUDA configs for PyTorch3D #pytorch #Pytorch3D #Colab #couldhavehadbettersundayplans #pain

A10 Parkinglot Testing 100% 42/42 [2:34:29<00:00, 220.70s/it] with #PyTorch 2 #CUDA 11.8 #pytorch3d 0.7.4 #kaolin 0.14.0

For the stimulus space, we used the Basel Face Model (faces.dmi.unibas.ch/bfm/bfm2019.ht…). @wenxuan_guo_ skillfully implemented BFM rendering in #PyTorch3D, allowing us to optimize face parameters with SGD. 7/13

#pytorch3d ift.tt/Cnmv3NQ PyTorch3D is FAIR's library of reusable components for deep learning with 3D data #github #githubtrending

Code & models publicly available at github.com/radekd91/emoca. Developed with @PyTorch using @PyTorchLightnin and #Pytorch3D. Experiment tracking done with the irreplaceable @weights_biases tool. Big thank you for providing and maintaining these great tools.

Code & models are publicly available at github.com/radekd91/emoca. Developed with @PyTorch using @PyTorchLightnin and #Pytorch3D. Experiment tracking done with the irreplaceable @weights_biases tool. Big thanks for providing and maintaining these great tools. (6/6)

3D Deep Learning with #PyTorch3D is way easier and faster than conventional methods, and many AI innovators and researchers are rooting for it. These are its USPs: bit.ly/3tR217d #PyTorch #python #pythonprogramming #pythonprogramminglanguage #Artiba

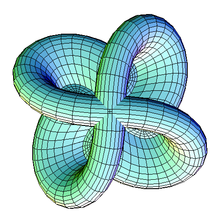

Torus → castle One year ago I was playing with 3D loss functions implemented in #PyTorch3D. These are essential for geometric machine learning. Eventually this research path led me to lattices and graph structures, which are my current focus. #machinelearning #motiondesign

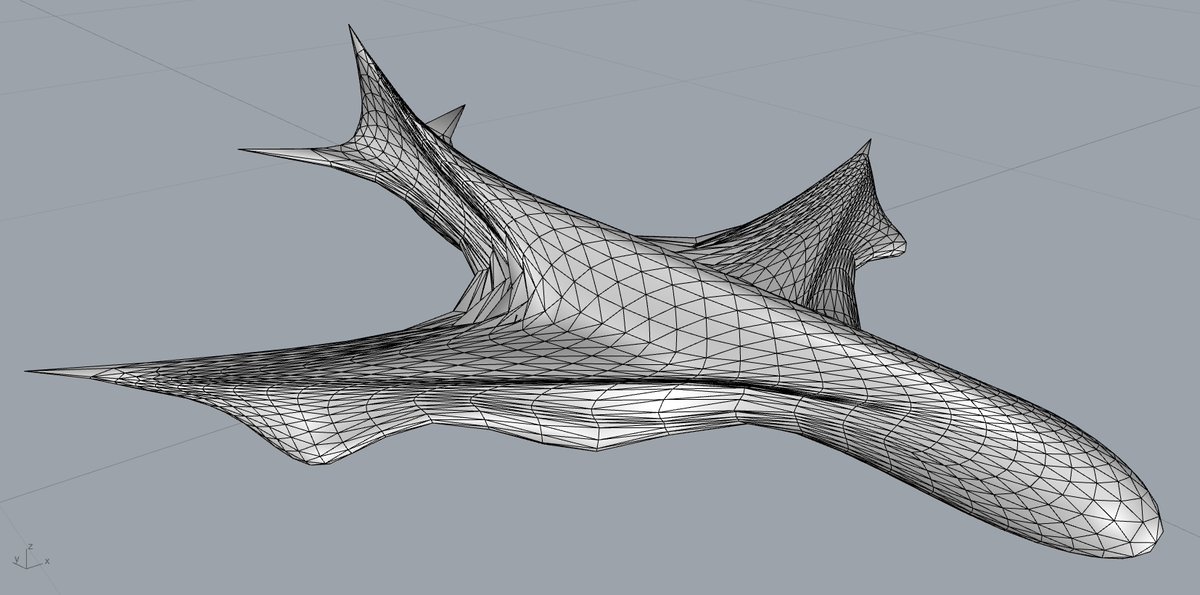

2nd take on the "Blue Whale Knight" creature. The model deviates wildly from the basic #SMPL human. Shields and fins appeared, and legs atrophied like those of marine mammals. Spectral vertex features work well when you want to depict such smooth forms. #AIart #Pytorch3d #3dart

Would you wear such a costume on #Halloween? "The Blue Whale Knights" were awesome warriors in the Berserk manga. Like many other characters there, they combine animal elements in their armor design. I could evolve a very detailed mesh for that text. #AIart #pytorch3d #3dart

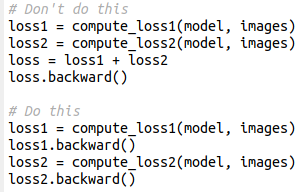

This simple pytorch trick will cut in half your GPU memory use / double your batch size (for real). Instead of adding losses and then computing backward, it's better to compute the backward on each loss (which frees the computational graph). Results will be exactly identical
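A minimal sketch of the trick described above, assuming the losses come from separate forward passes (the tiny `Linear` model, the random batches, and the squared-output loss are placeholders chosen for illustration). Gradients accumulate in `.grad` across `backward()` calls, so both variants end with the same gradients up to floating-point rounding. If the losses shared one graph, `retain_graph=True` would be needed and the memory saving would shrink.

```python
import torch


def make_model():
    # Re-seed so both variants start from identical weights.
    torch.manual_seed(0)
    return torch.nn.Linear(4, 1)


torch.manual_seed(1)
batches = [torch.randn(8, 4) for _ in range(3)]

# Variant A: sum the losses, then call backward once.
# All three computational graphs stay in memory until that call.
model_a = make_model()
total_loss = sum(model_a(x).pow(2).mean() for x in batches)
total_loss.backward()

# Variant B: call backward on each loss as soon as it is computed.
# Each graph can be freed right after its backward call.
model_b = make_model()
for x in batches:
    loss = model_b(x).pow(2).mean()
    loss.backward()

grads_match = all(
    torch.allclose(pa.grad, pb.grad)
    for pa, pb in zip(model_a.parameters(), model_b.parameters())
)
print(grads_match)
```

In a training loop, the optimizer step and `zero_grad()` would follow the last `backward()` call in either variant.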

I also posted the "after" on Instagram, but... App filters are nice, but writing your own code to apply filters is fun too. I'll try out more things in Python again~ #写真好きな人と繋がりたい #pieni #pythonprogamming

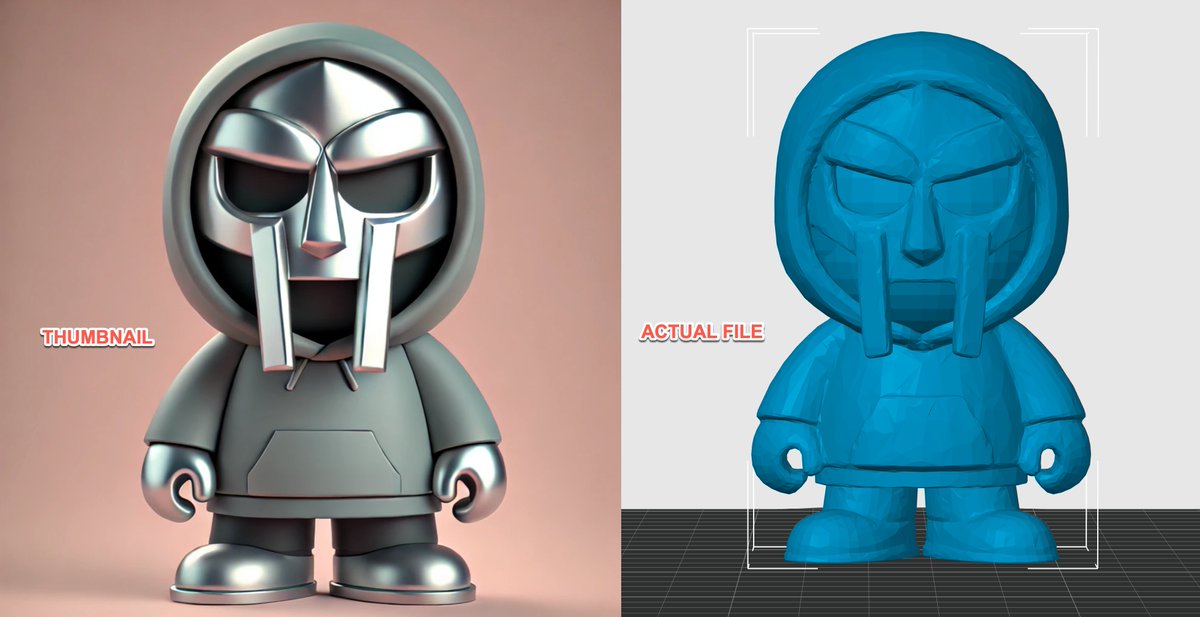

I friggin HATE AI File Garbage This is exactly why every 3D file site needs to be more like @printablescom & have AI Filters/flags/indicators on their files ugh
