#segmentanything search results

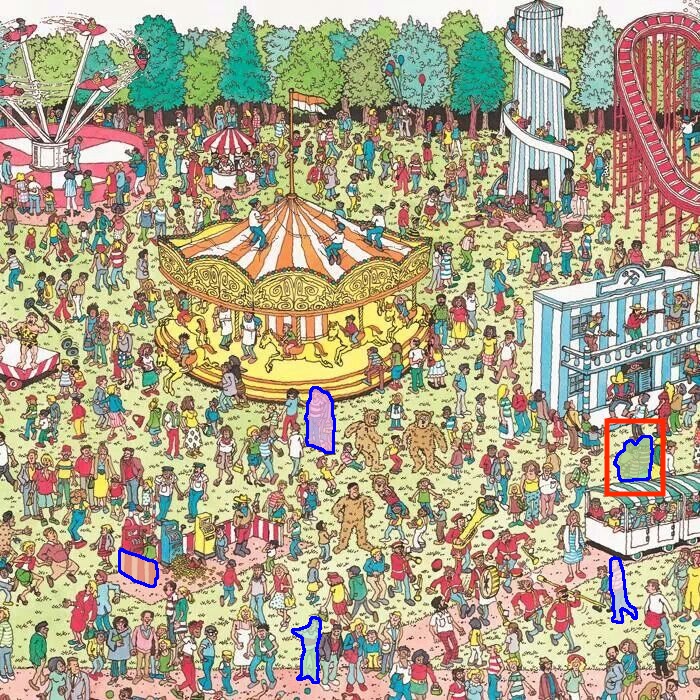

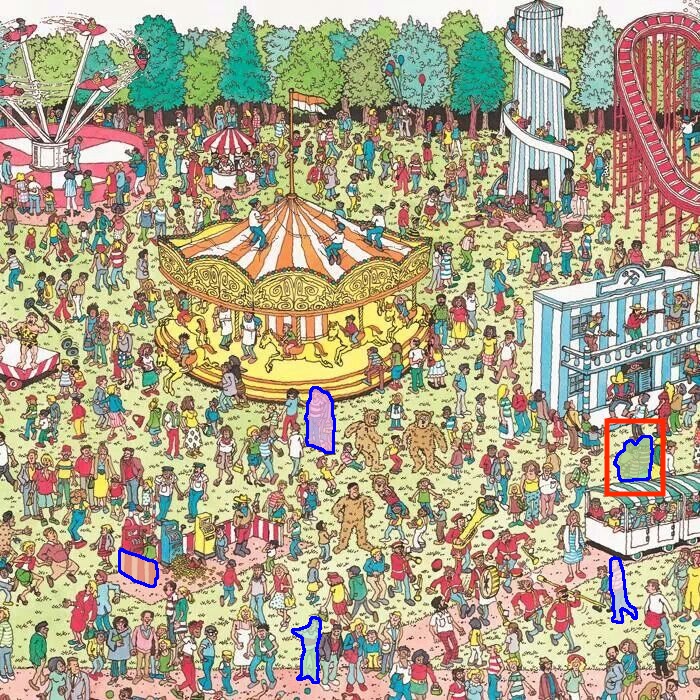

Did you know you can teach #GPT3 to find Waldo? 🕵️ gradio-tools version 0.0.7 is out, with support for @MetaAI's #segmentanything model (SAM). Ask #GPT3 to find a man wearing red and white stripes and Waldo will appear! pip install gradio-tools

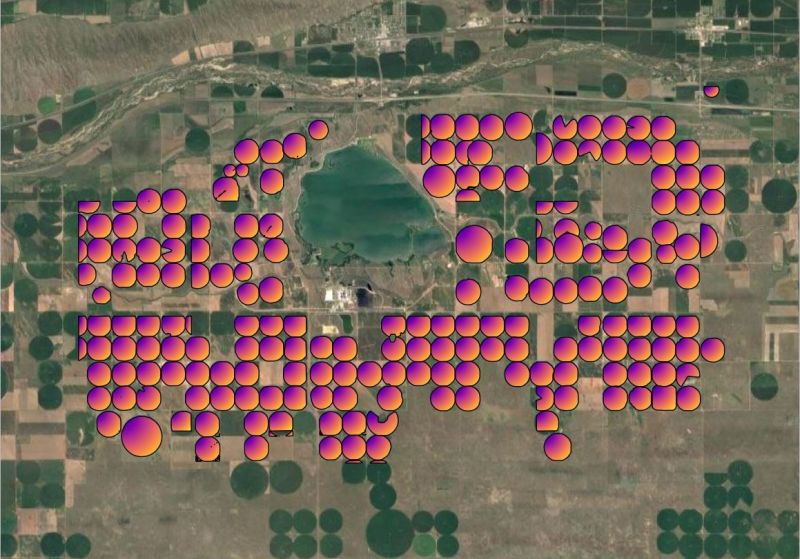

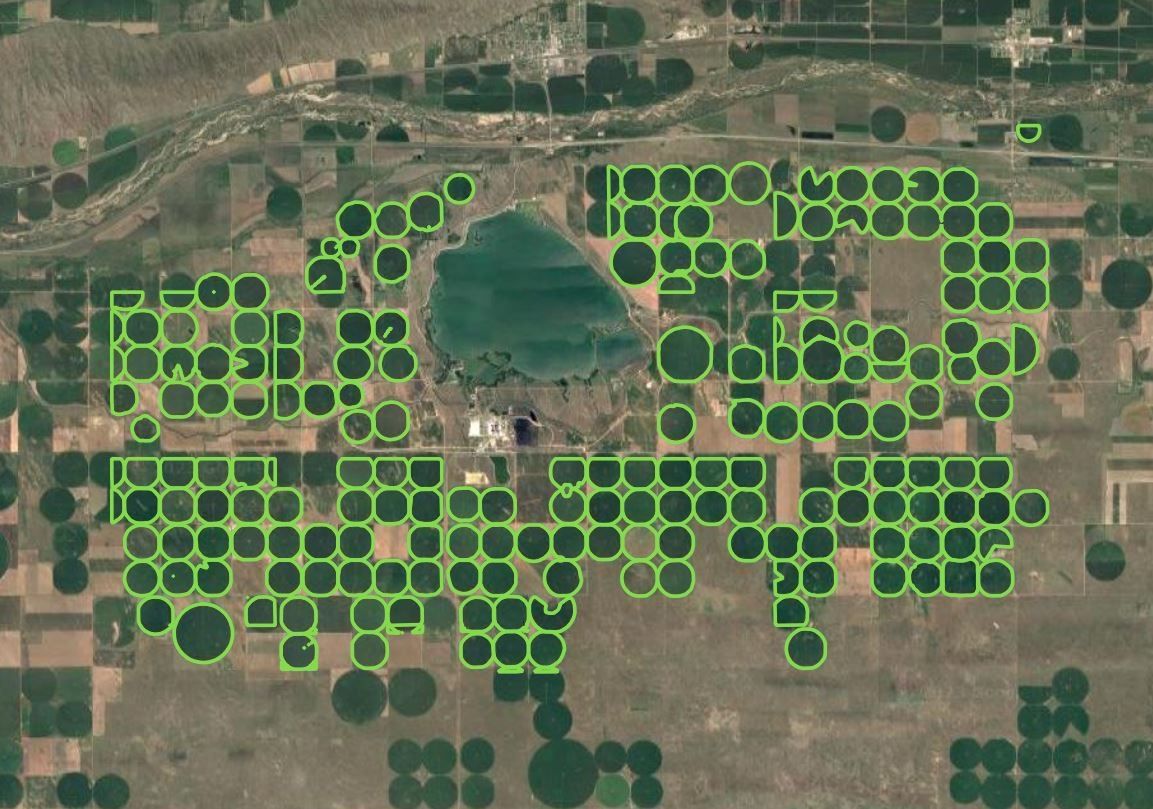

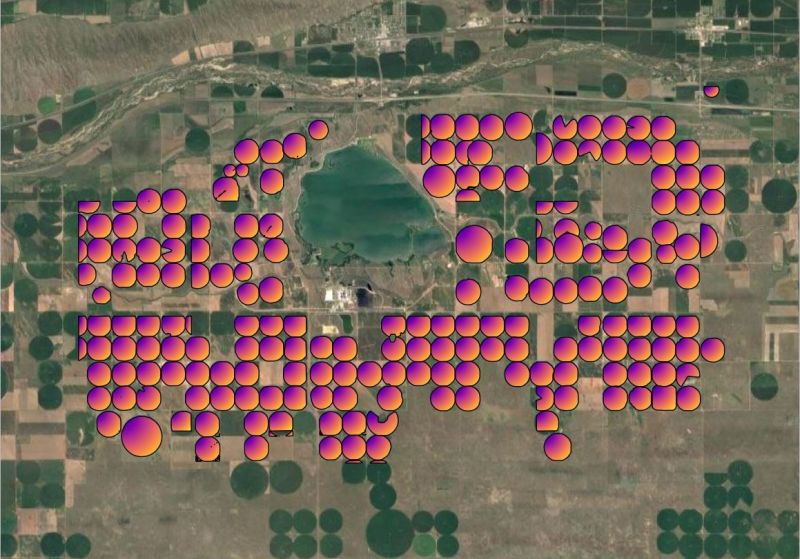

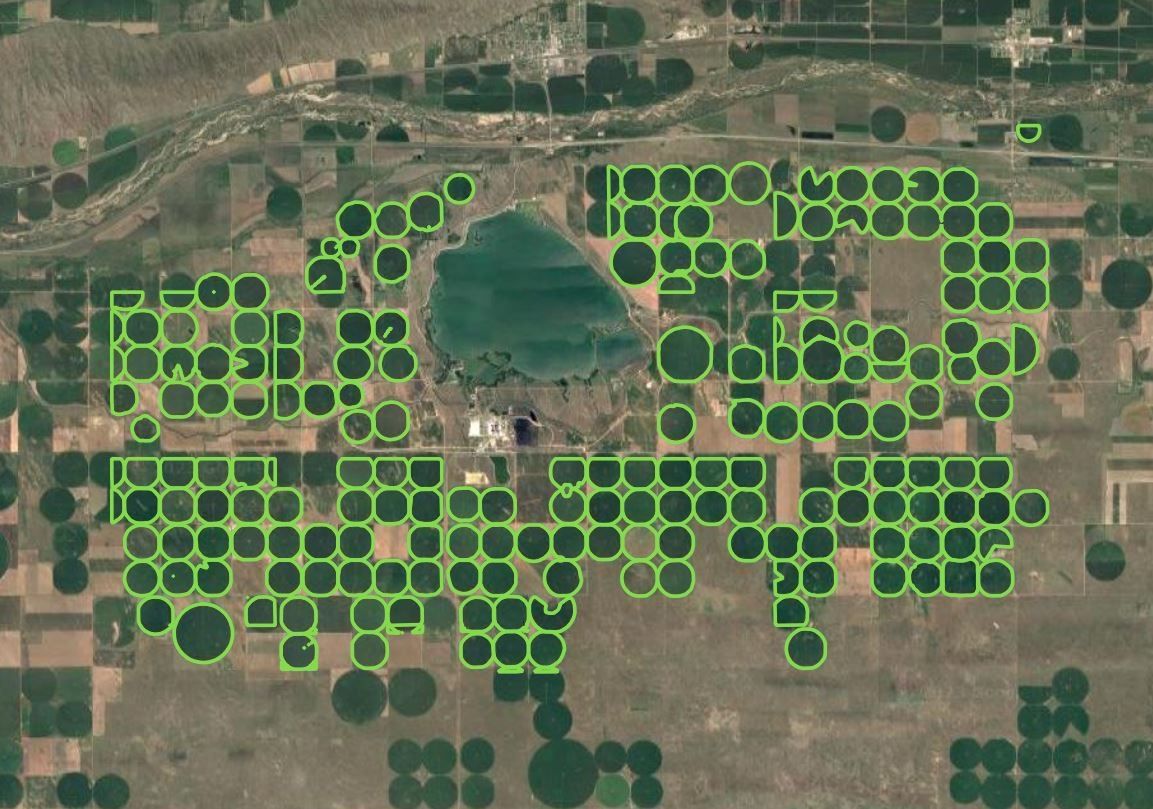

Identifying central pivot irrigation boundaries by simply using the text prompt “circle” with the segment-geospatial package 👇 GitHub: github.com/opengeos/segme… LinkedIn post: linkedin.com/posts/qiusheng… #geospatial #segmentanything

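I finally got the Segment Anything Model (SAM) running in QGIS! I'm using the following plugin: geo-sam.readthedocs.io/en/latest/inde… It has several nice features: besides 3-band RGB input it also accounts for SAR imagery, and it runs on CPU. The video below was also run on CPU. #QGIS #segmentAnything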

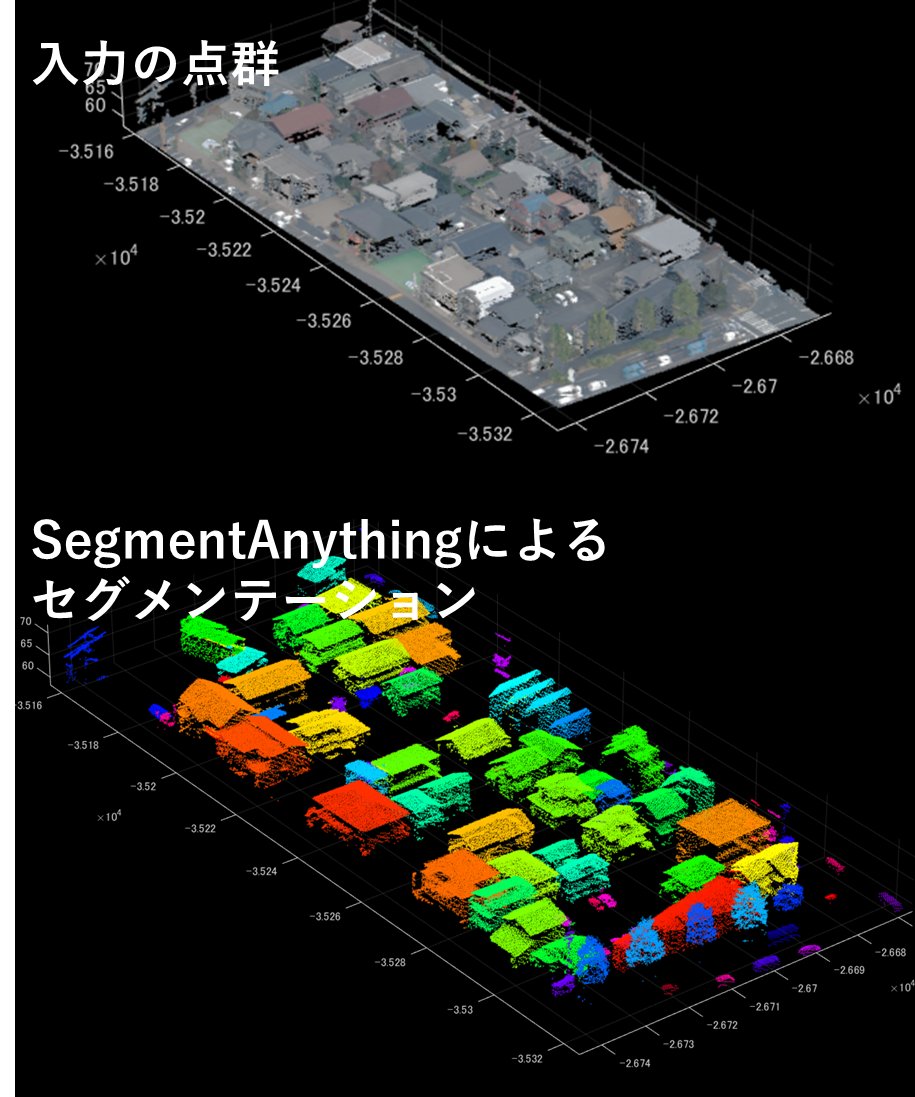

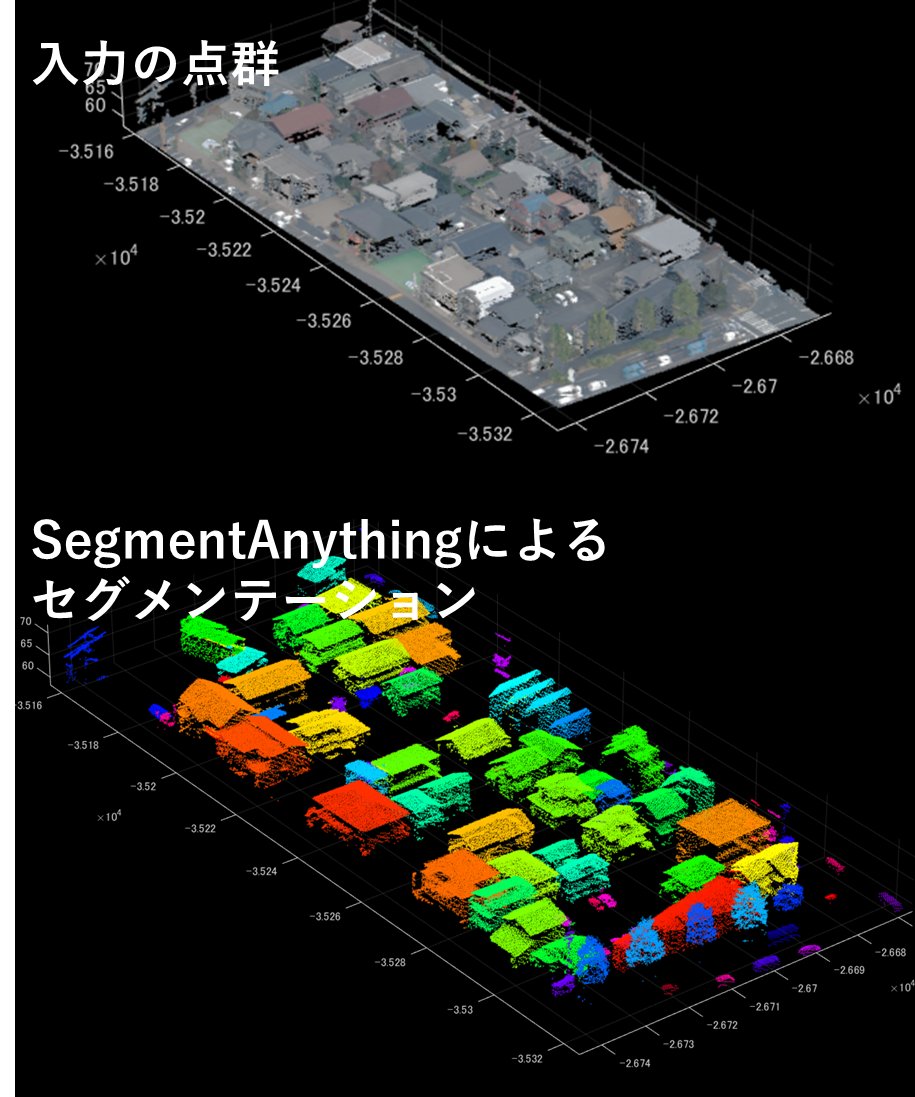

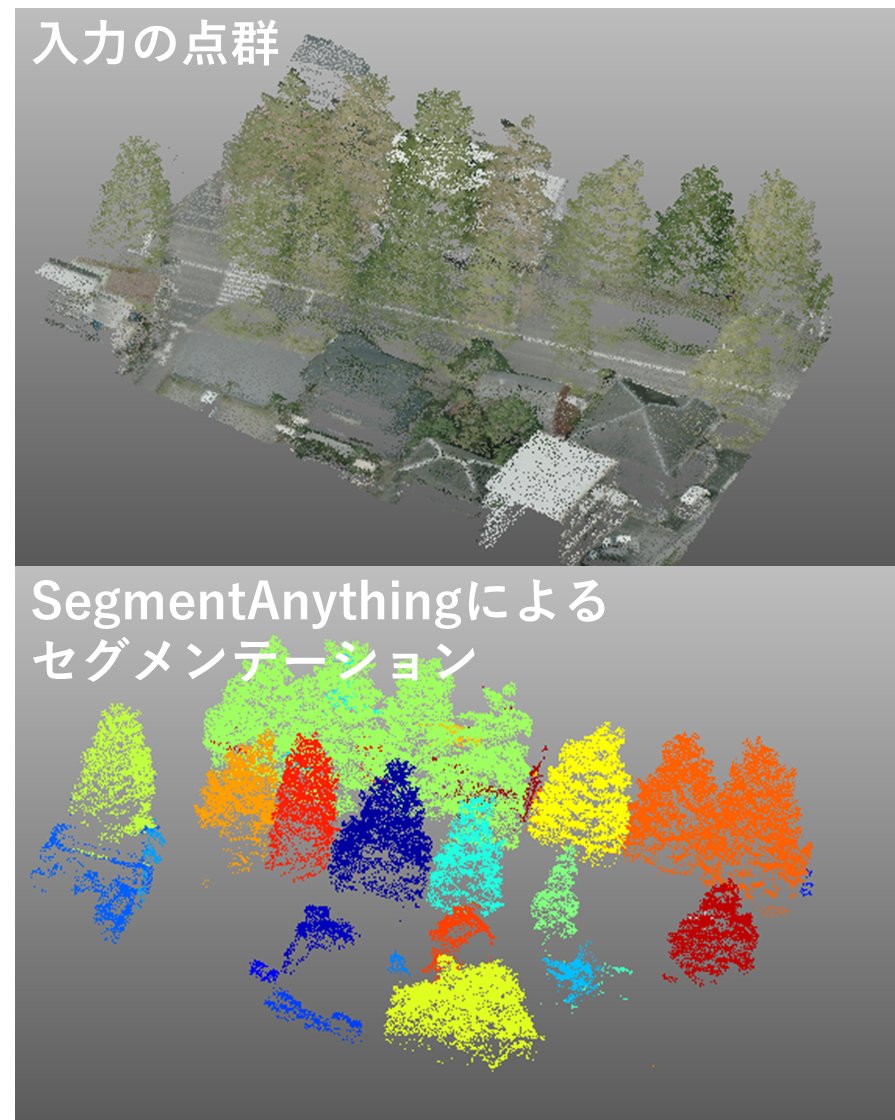

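I used segment-lidar to run instance segmentation on the VIRTUAL SHIZUOKA 3D point cloud data published by Shizuoka Prefecture. It doesn't quite separate every individual building, but it seems to segment things reasonably well, including cars. github.com/Yarroudh/segme… #点群データ #segmentanything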

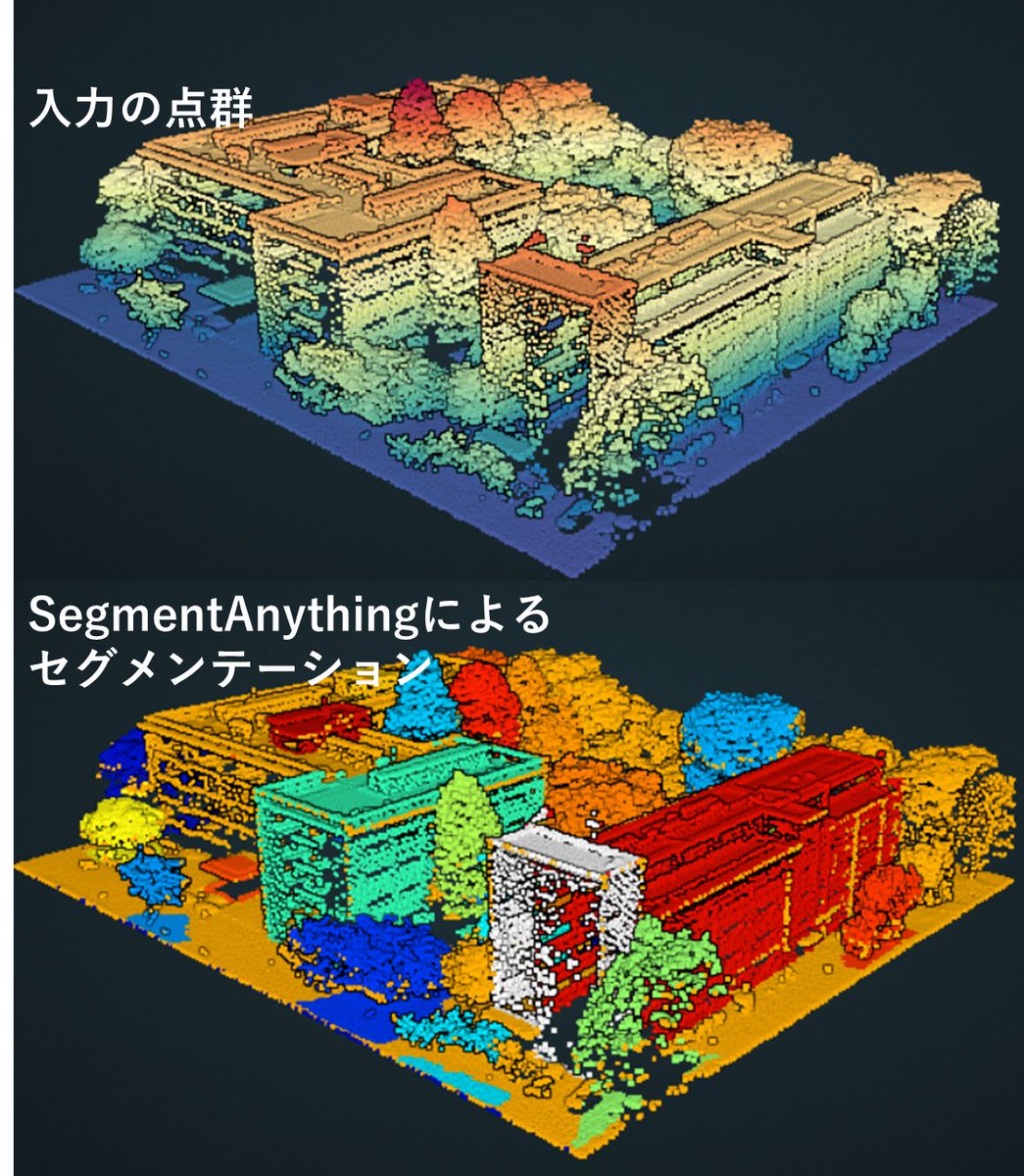

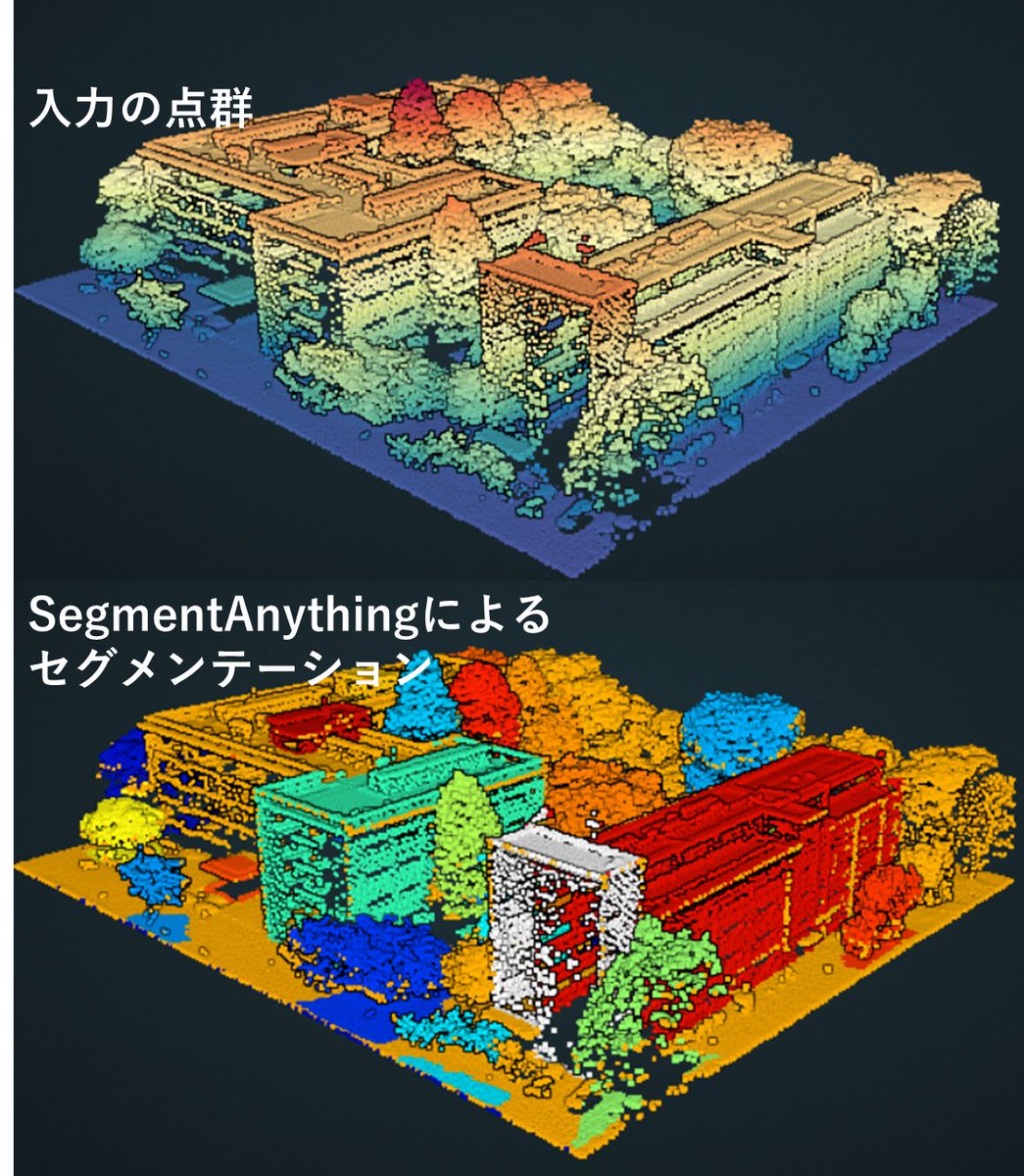

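Using the point cloud (#点群) data and orthoimagery (#オルソ画像) published by the Tokyo Metropolitan Government, I ran segmentation. Tokyo Dome is recognized as a single large object, and the surrounding buildings are also nicely color-coded. Segmentation was done with #SegmentAnything. #デジタルツイン実現プロジェクト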

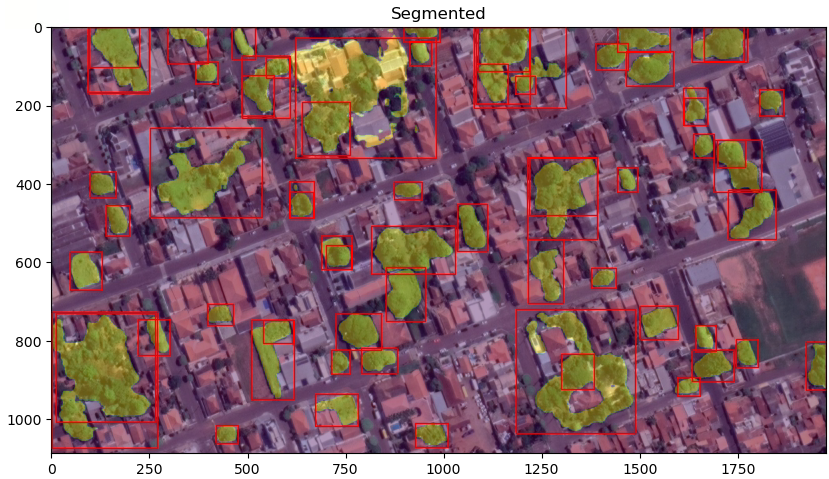

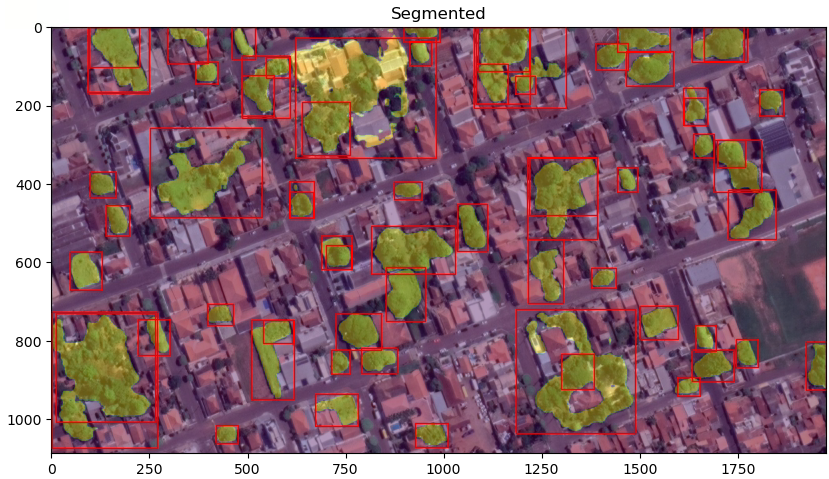

Segmenting aerial imagery with text prompts. It will soon be available through the segment-geospatial Python package. The image below is the segmentation result using the text prompt 'tree'. It is fully automatic. GitHub: github.com/opengeos/segme… #geospatial #segmentanything

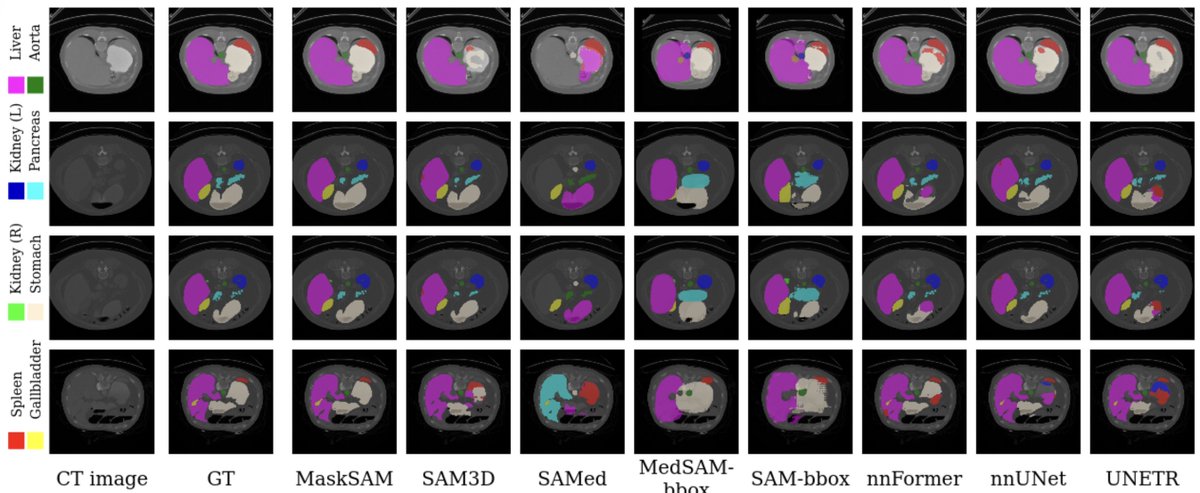

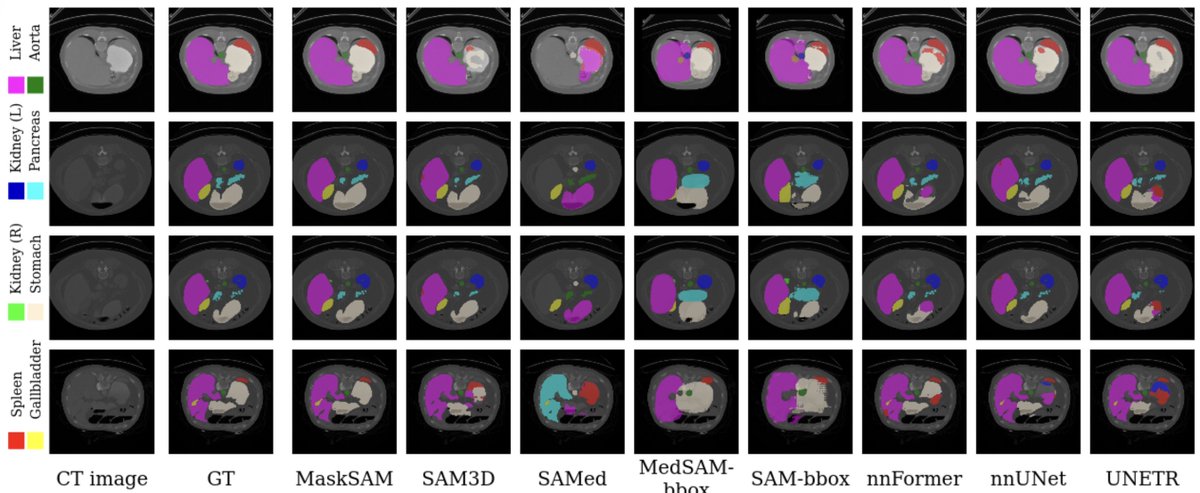

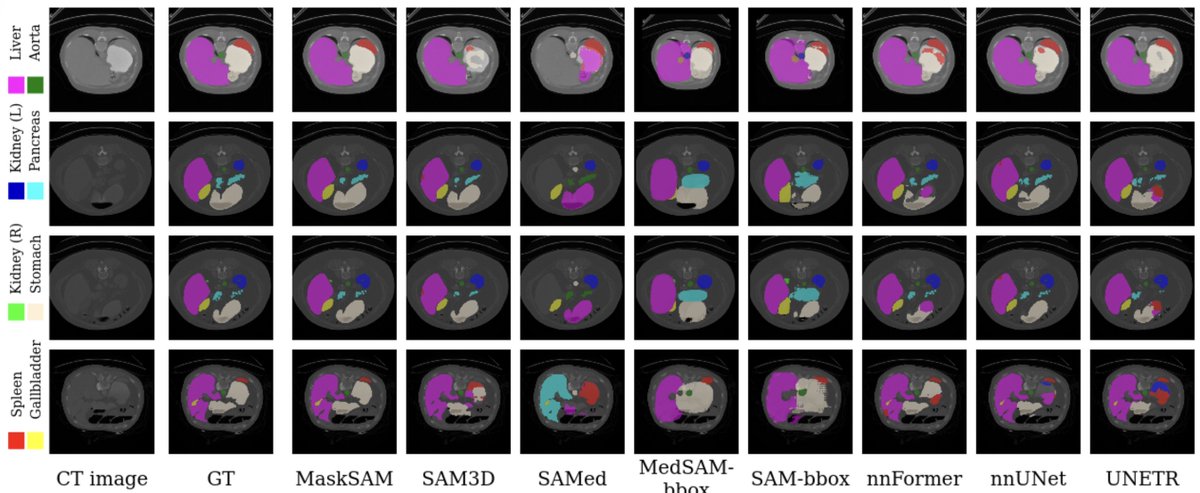

🚀 Big news! Our paper MaskSAM is heading to #ICCV2025 in Hawaii! 🌺🌴 We make SAM smarter for medical image segmentation — no prompts, just mask magic 🩺✨ (+2.7% Dice on AMOS2022). 🔗 arxiv.org/abs/2403.14103 #MaskSAM #SegmentAnything #MedicalImaging #AIforHealthcare
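
For readers unfamiliar with the metric quoted above, the Dice score measures the overlap between a predicted mask A and a reference mask B as 2|A∩B| / (|A| + |B|). A minimal NumPy sketch of the standard binary definition follows. How MaskSAM aggregates the score over AMOS2022 classes and cases is not stated in the post, and the name `dice_score` and its `eps` smoothing term are illustrative choices, not taken from the paper.

```python
import numpy as np


def dice_score(pred, target, eps=1e-8):
    """Dice overlap between two binary masks of the same shape.

    eps keeps the ratio defined when both masks are empty
    (an assumption of this sketch; conventions differ).
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    return (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)


# Toy example: the prediction covers two pixels, the reference covers one of them.
pred = np.array([[1, 1], [0, 0]])
target = np.array([[1, 0], [0, 0]])
score = dice_score(pred, target)
```

A score of 1 means the masks coincide and 0 means they do not overlap at all, so a gain of a few points is a meaningful change in overlap quality.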

🌍 Segment-geospatial v0.10.0 is out! It's time to get excited 🚀 It now supports segmenting remote sensing imagery with FastSAM 🛰️ GitHub: github.com/opengeos/segme… Notebook: samgeo.gishub.org/examples/fast_… #geospatial 🗺️ #segmentanything 🌄 #deeplearning 🧠

🔥 Our paper SAMWISE: Infusing Wisdom in SAM2 for Text-Driven Video Segmentation is accepted at #CVPR2025! 🎉 We make #SegmentAnything wiser, enabling it to understand text prompts—training only 4.9M parameters! 🧠 💻 Code, models & demo: github.com/ClaudiaCuttano… Why SAMWISE?👇

Messing around with a customized SD 1.5 model (trained on random pictures of me, *cough*), ControlNet Segmentation vs Meta's SAM (Segment Anything). Using SAM output with custom SD 1.5 produces some pretty good results. #stablediffusion #segmentanything

Segment-geospatial v0.9.1 is out. It now supports segmenting remote sensing imagery with the High-Quality Segment Anything Model (HQ-SAM) Video: youtu.be/n-FZzKirE9I Notebook: samgeo.gishub.org/examples/input… GitHub: github.com/opengeos/segme… #segmentanything #geospatial #deeplearning

Segment-geospatial v0.8.0 is out. New features include segmenting remote sensing imagery with text prompts interactively 🤩 Notebook: samgeo.gishub.org/examples/text_… GitHub: github.com/opengeos/segme… Video: youtu.be/cSDvuv1zRos #geospatial #segmentanything

#EarthEngine Image Segmentation with the Segment Anything Model (SAM) Notebook: geemap.org/notebooks/135_… GitHub: github.com/opengeos/segme… #geospatial #segmentanything

Mapping swimming pools 🏊‍♀️ interactively with text prompts and the Segment Anything Model 🤩 Notebook: samgeo.gishub.org/examples/swimm… GitHub: github.com/opengeos/segme… #geospatial #segmentanything #deeplearning

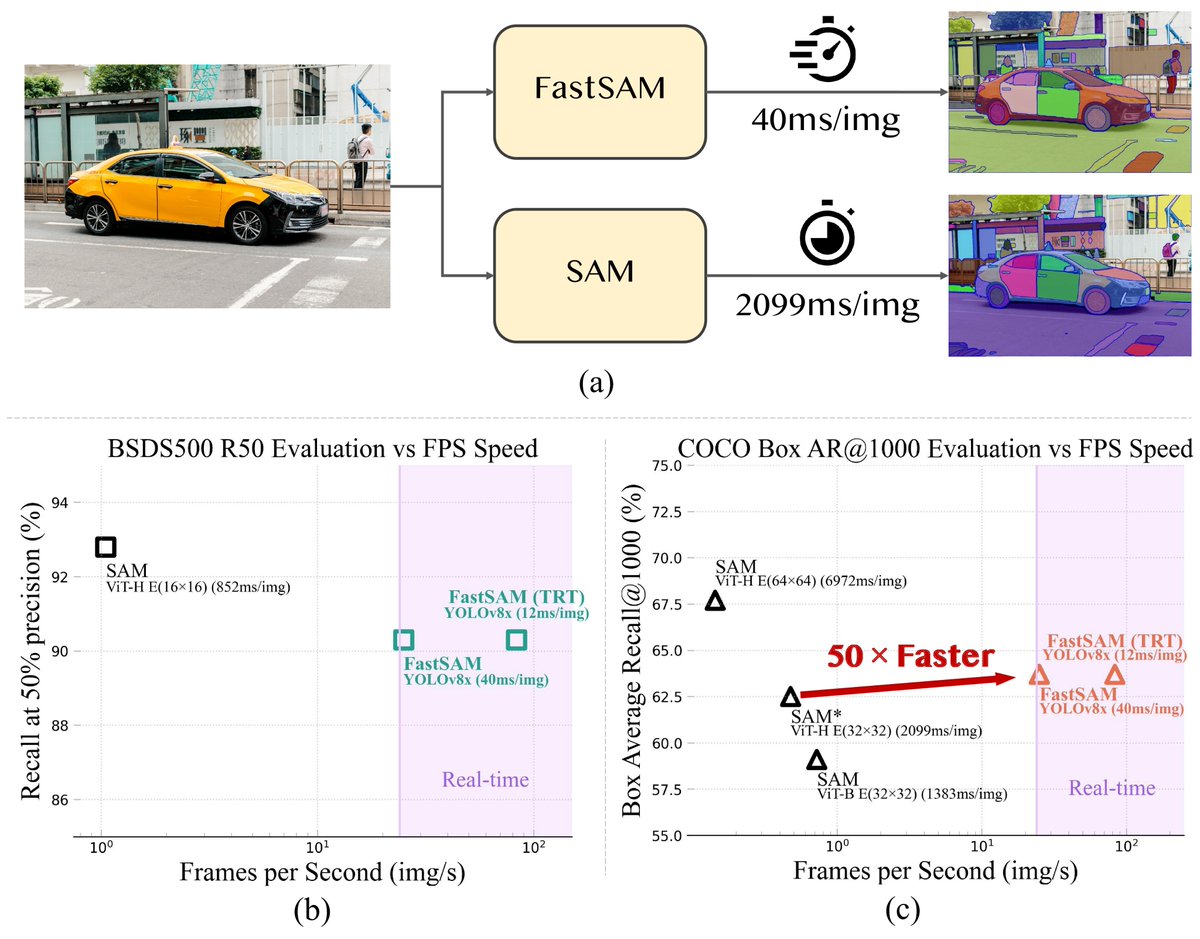

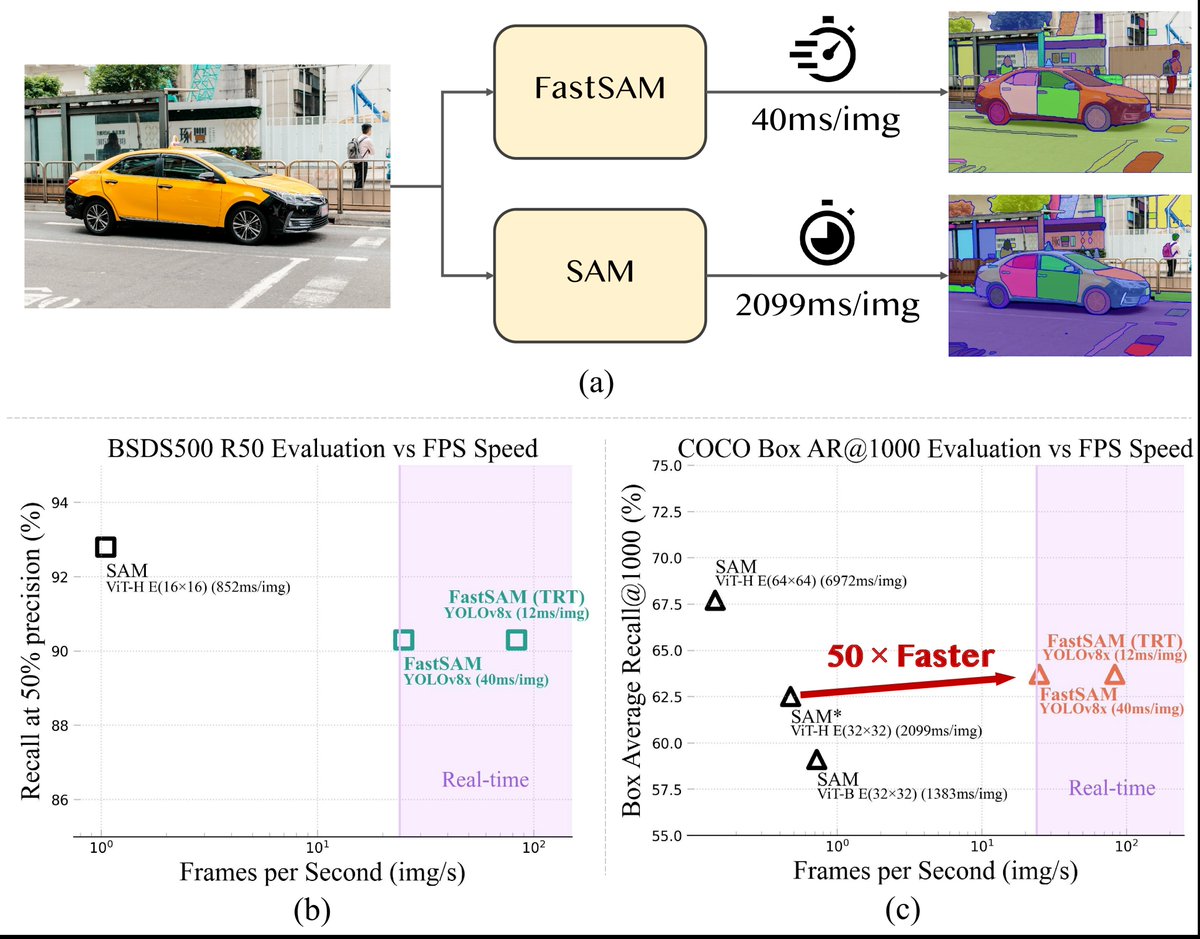

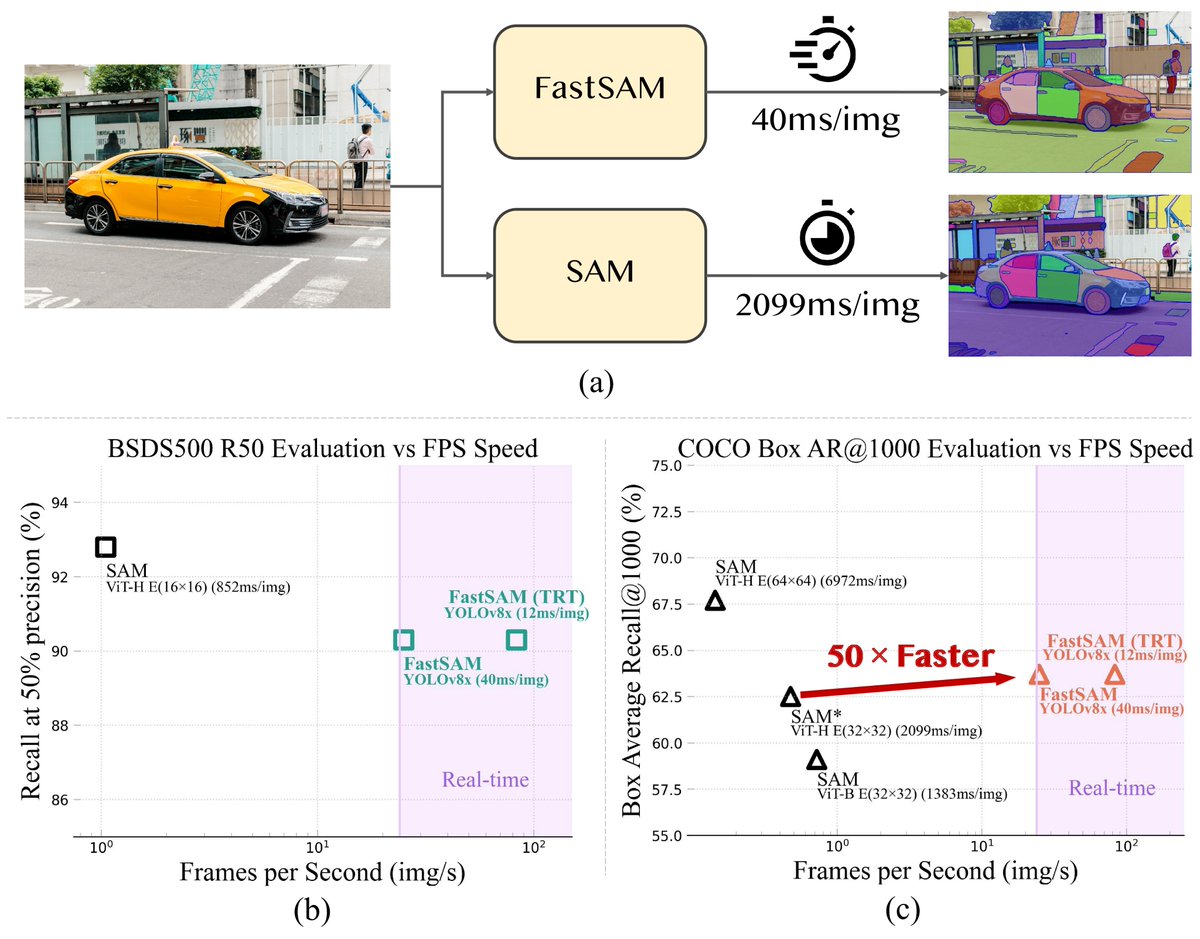

The Fast Segment Anything Model (FastSAM) is now available on PyPI. Install it with 'pip install segment-anything-fast'. Segment-geospatial will soon support FastSAM. GitHub: github.com/opengeos/FastS… #segmentanything #deeplearning

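I downloaded the 3D point cloud (#点群) published by Kanagawa Prefecture and segmented objects with #SegmentAnything. Coloring and segmentation are done on the orthoimage, and the result is projected onto the point cloud. The information needed to align the coordinates is read from the .tfw file.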
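
The projection step described in the post above (orthoimage labels carried over to the point cloud via a .tfw world file) can be sketched as follows. This is a minimal NumPy illustration, not the author's code: it relies only on the standard six-line world file layout (A, D, B, E, C, F, where x = A*col + B*row + C and y = D*col + E*row + F, and (C, F) is the centre of the upper-left pixel). The function names, the toy label image, and the `nodata` value are hypothetical.

```python
import numpy as np


def parse_world_file(text):
    """Read the six world-file coefficients in file order: A, D, B, E, C, F."""
    a, d, b, e, c, f = (float(v) for v in text.split())
    return a, d, b, e, c, f


def world_to_pixel(xy, coeffs):
    """Map N x 2 world coordinates to the (row, col) of the containing pixel."""
    a, d, b, e, c, f = coeffs
    # Invert the forward model x = a*col + b*row + c, y = d*col + e*row + f.
    m = np.array([[a, b], [d, e]])
    colrow = np.linalg.solve(m, (np.asarray(xy, dtype=float) - [c, f]).T).T
    # (c, f) is a pixel centre, so rounding selects the containing pixel.
    cols = np.rint(colrow[:, 0]).astype(int)
    rows = np.rint(colrow[:, 1]).astype(int)
    return rows, cols


def project_labels(points_xyz, label_image, coeffs, nodata=-1):
    """Assign each 3D point the segment label of the pixel below it."""
    rows, cols = world_to_pixel(points_xyz[:, :2], coeffs)
    h, w = label_image.shape
    inside = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
    labels = np.full(len(points_xyz), nodata, dtype=int)
    labels[inside] = label_image[rows[inside], cols[inside]]
    return labels


# Toy data: 0.5 m pixels, north-up image, one labelled segment at row 2, col 2.
tfw_text = "0.5\n0.0\n0.0\n-0.5\n100.25\n200.75\n"
coeffs = parse_world_file(tfw_text)
label_image = np.zeros((4, 4), dtype=int)
label_image[2, 2] = 7
points = np.array([
    [100.25, 200.75, 12.0],  # centre of the upper-left pixel
    [101.25, 199.75, 15.0],  # falls in row 2, col 2
    [0.0, 0.0, 3.0],         # outside the image
])
point_labels = project_labels(points, label_image, coeffs)
```

Points that fall outside the orthoimage keep the `nodata` label, which makes it easy to filter them out before coloring the cloud.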

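I segmented a 3D point cloud (#点群). I applied the #SegmentAnything model to the 3D point cloud data and grouped it into clusters! Each object is painted a different color. This may make it easier to extract per-building information. #東京都デジタルツイン実現プロジェクト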

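I segmented the 3D point cloud (#点群) of Mitaka City published by the #東京都デジタルツイン実現プロジェクト (Tokyo Digital Twin Project). Using #SegmentAnything, it is split into individual buildings and trees, with each object shown in its own color.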

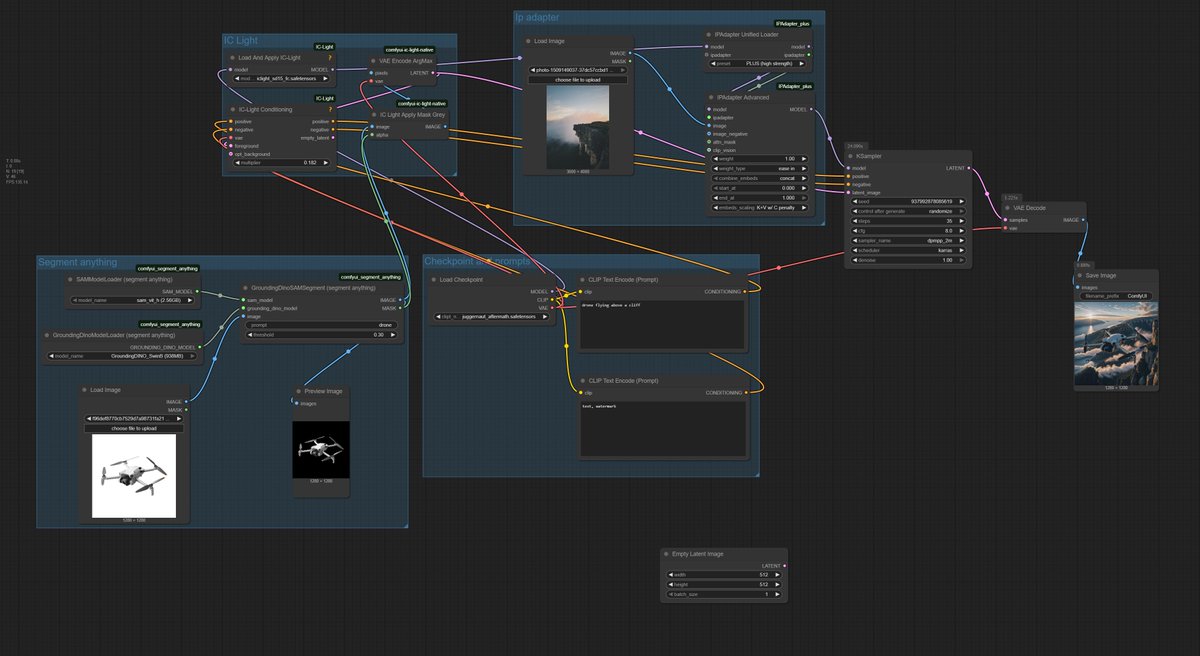

Day 16 of the #0to100xEngineer Journey Manual masking? Painful. Lighting mismatch? Fake. 🔹 GroundingDINO + SAM = text-based object masks 🔹 IC-Light - auto relighting for any scene - Fast, clean, photoreal edits - perfect for product visuals. #SegmentAnything #ICLight

In this work, we explore how wavelet transforms can be used to adapt SAM, a large vision model, to low-level vision tasks--Camouflaged Object Detection, Shadow Detection, Blur Detection, Polyp Detection. #SegmentAnything

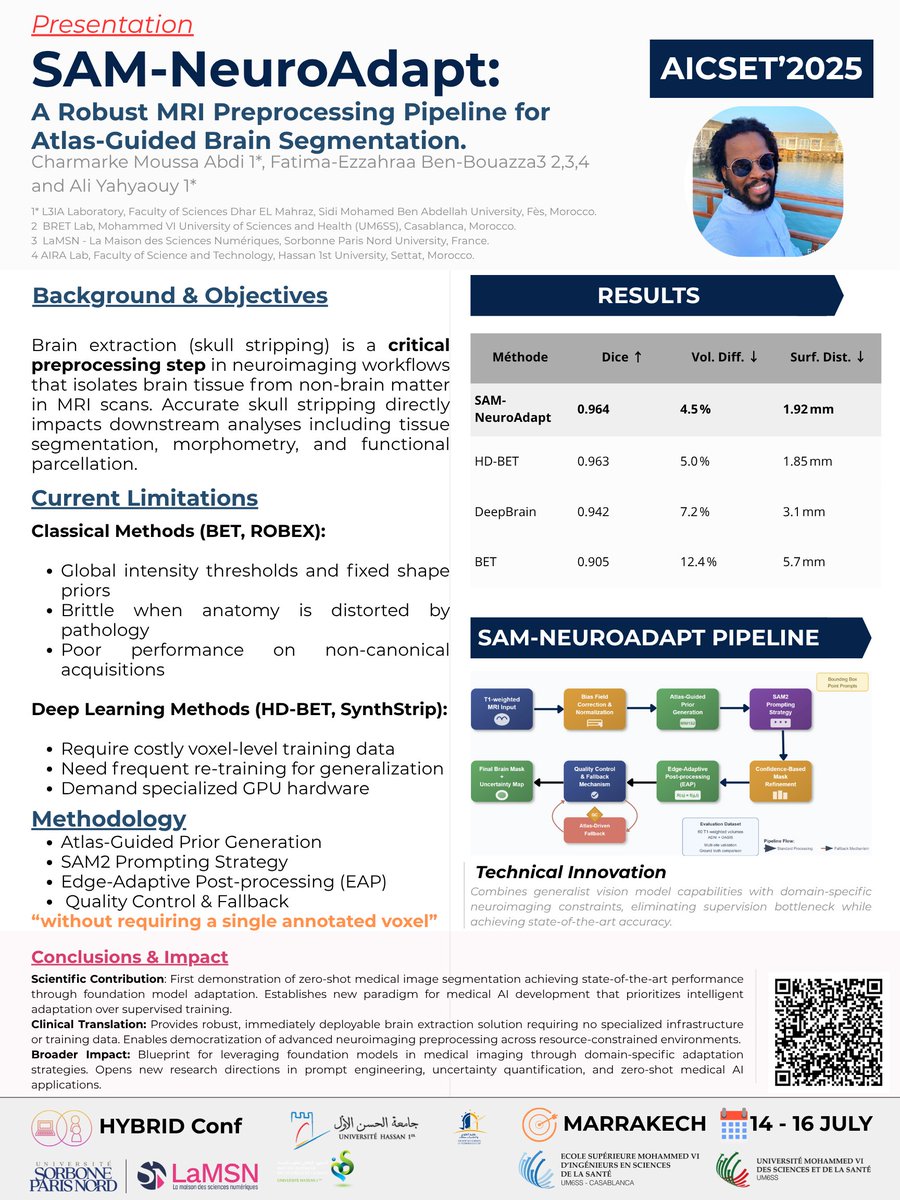

Thrilled to present at AICSET 2025 (Marrakech, July 14–16). Our paper “SAM-NeuroAdapt: A Robust MRI Pre-processing Pipeline for Atlas-Guided Brain Segmentation” has been accepted for oral presentation. #IA #NeuroImagerie #SegmentAnything #ICSET #AICSET

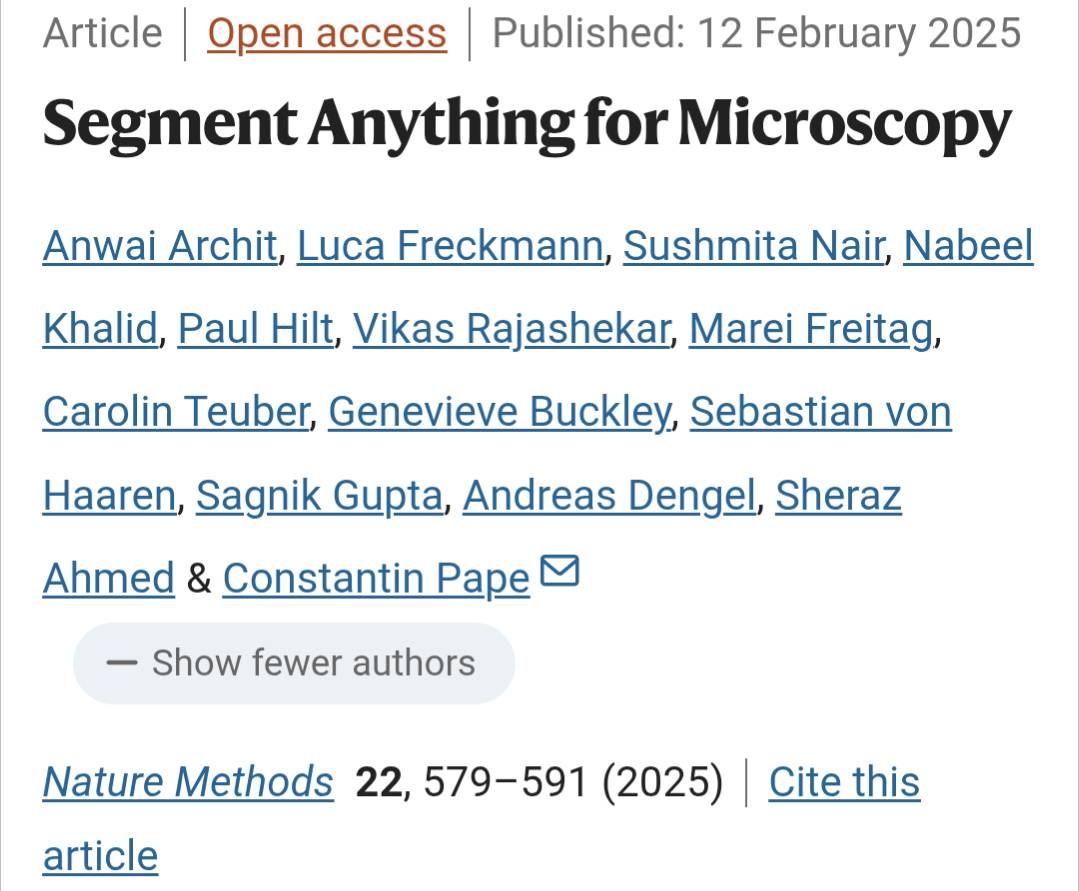

Arguably one of the most important papers for microscopy landed in February this year. This Nature paper provides a segmentation and fine tuning framework for anything microscopy. Fast, general, and open-source. #Microscopy #AI #SegmentAnything ow.ly/mvHV50W25SO

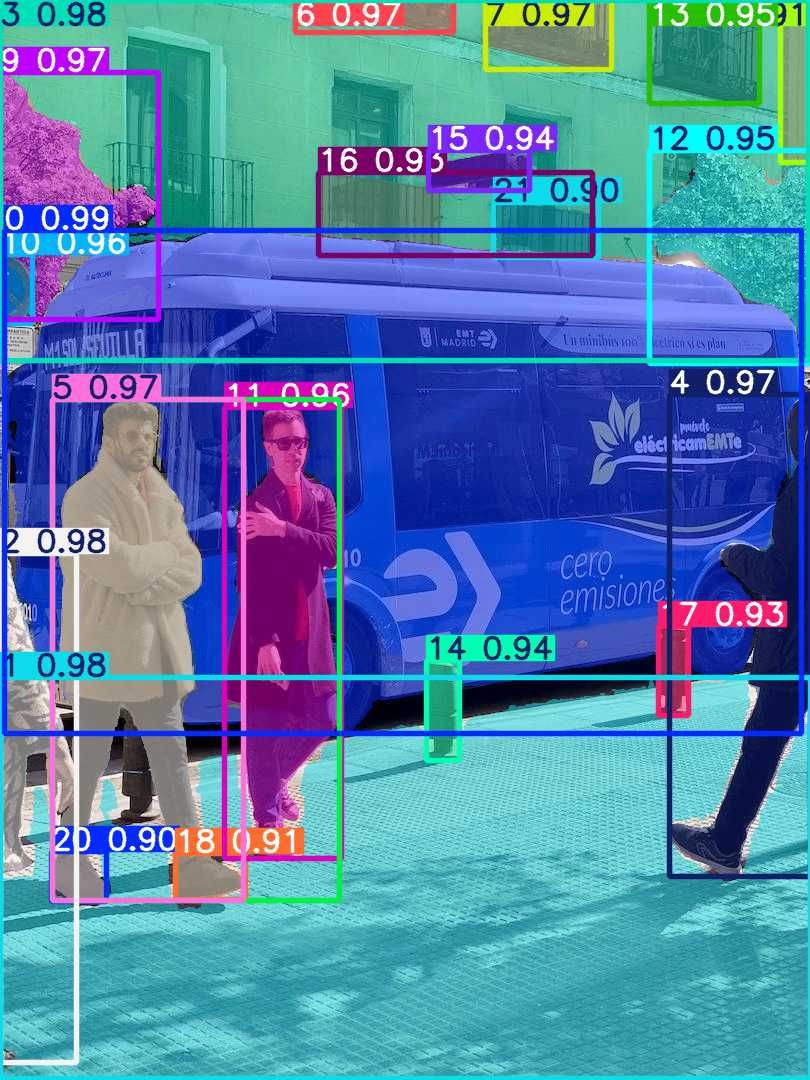

New tutorial | @AIatMeta Segment Anything 2 in @Google Colab with Ultralytics! 🚀 Segment objects using point and box prompts, or segment everything automatically with a ready-to-use Colab notebook. Watch here ➡️ ow.ly/1brb50VXBtC #SAM2 #SegmentAnything #Ultralytics #AI

Shocked 💀⚡️ Initially tried to use #klingai for the ball swap but found the mask too restricting. Ended up using a custom #ComfyUI workflow with #segmentanything and VACE!! Featuring @sweaty__palms getting electrocuted 😬

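I applied #SegmentAnything (SAM) to a point cloud (#点群) acquired by airborne #LiDAR. Individual houses and other objects are color-coded. This could lead to estimating the approximate number of houses and their roof areas. The data was downloaded from the #東京都デジタルツイン実現プロジェクト. #MATLAB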

I applied #SegmentAnything (#SAM) to 3D point cloud (#点群) data to segment individual objects, each shown in a different color. The large triangular building is split into two. The data was downloaded from the #東京都デジタルツイン実現プロジェクト page.

Inference with @Meta SAM and SAM2 using the @ultralytics notebook 😍 This week, we have added the Segment Anything model notebook. Give it a try and share your thoughts 👇 Notebook➡️github.com/ultralytics/no… #computervision #segmentanything #ai #metaai

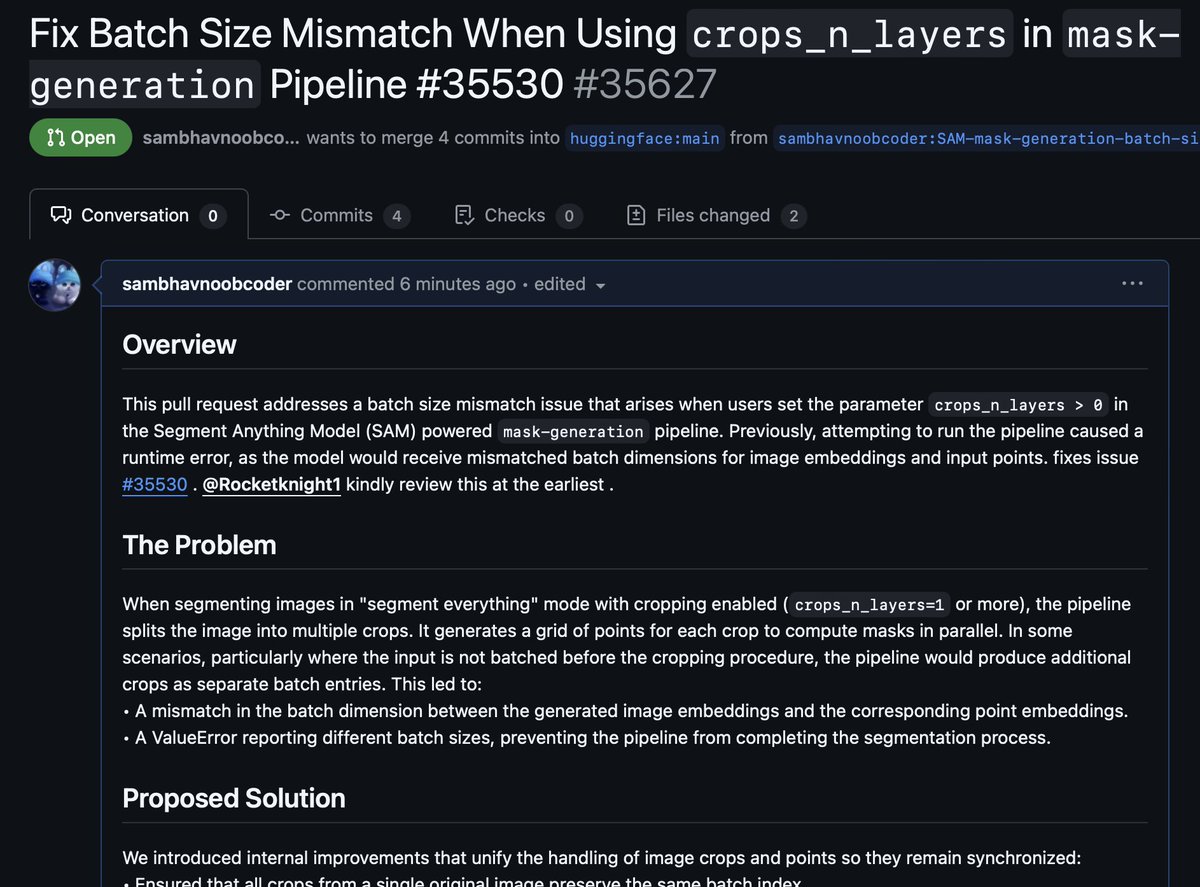

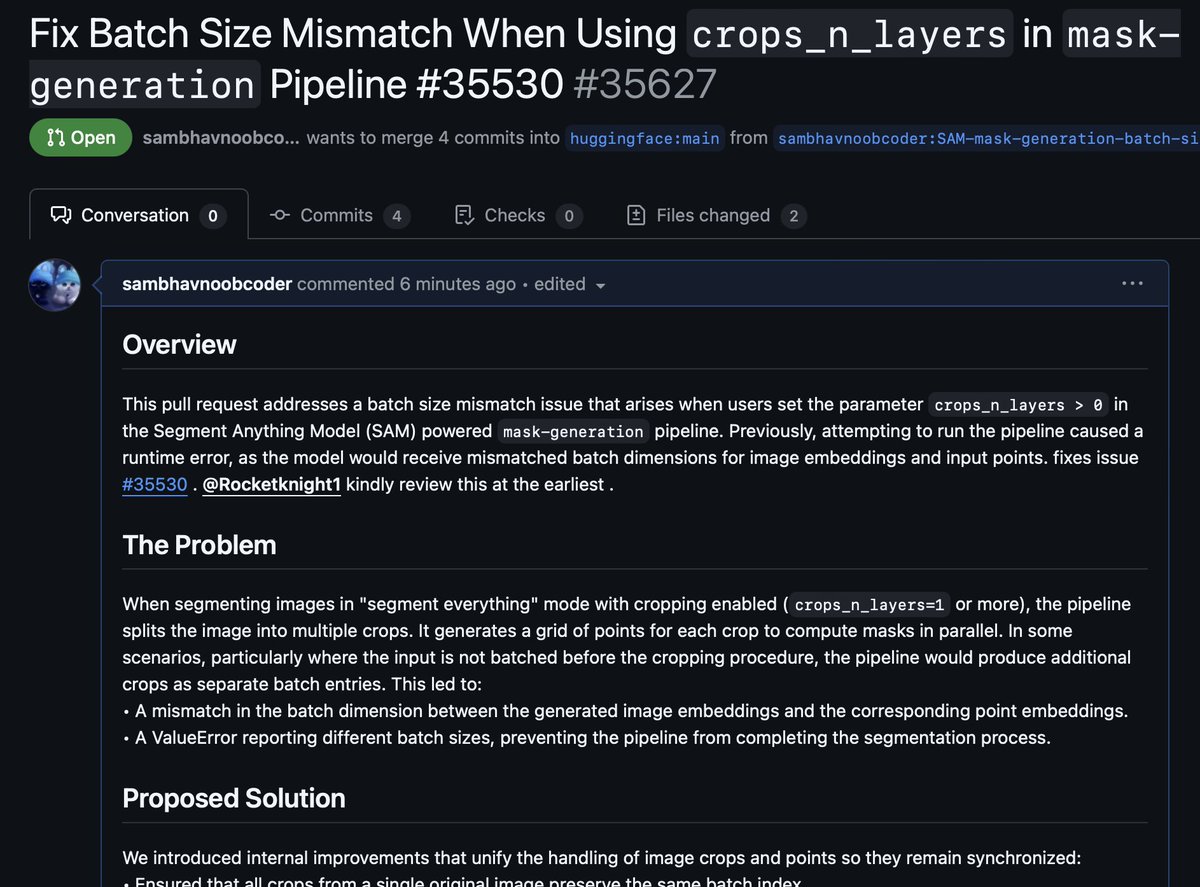

Fixed the batch size mismatch for #SegmentAnything pipeline with crops_n_layers in @huggingface #Transformers! Now, generating multi-crop masks is smooth and error-free. Huge thanks to #OpenSource supporters. Learn more in my latest PR. #AI #ComputerVision #SAM @Meta @AIatMeta

Website that gives you SUPERPOWER (Part 1). Create video cutouts and effects with a few clicks using AI for free using sam2.metademolab. 🤯 #meta #segmentanything #videoeffects #website #free #aiapp #aisoftware #videoeditingsoftware #aiwebsite #metaai

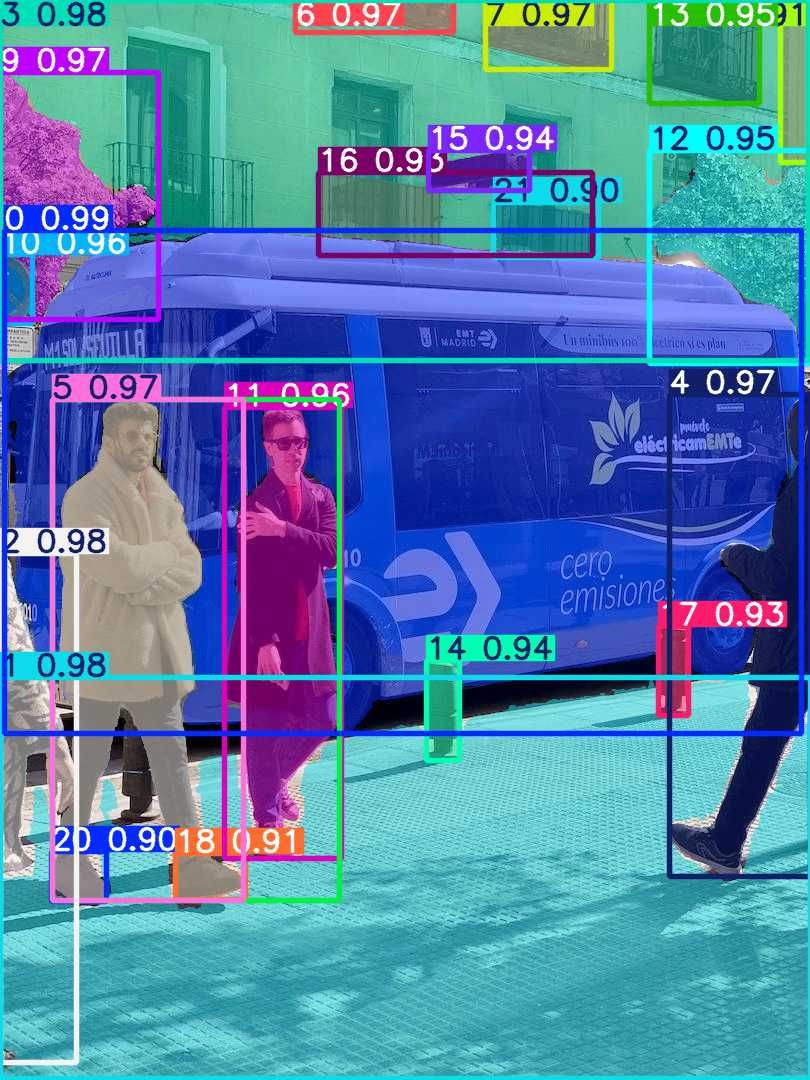

Auto Annotation using SAM2 & Ultralytics 🚀 You can streamline your annotation workflow using Segment Anything 2 (SAM2), which allows for automatic data segmentation, reducing manual effort and saving time. Learn more: docs.ultralytics.com/models/sam-2/ #segmentanything #ai #ml

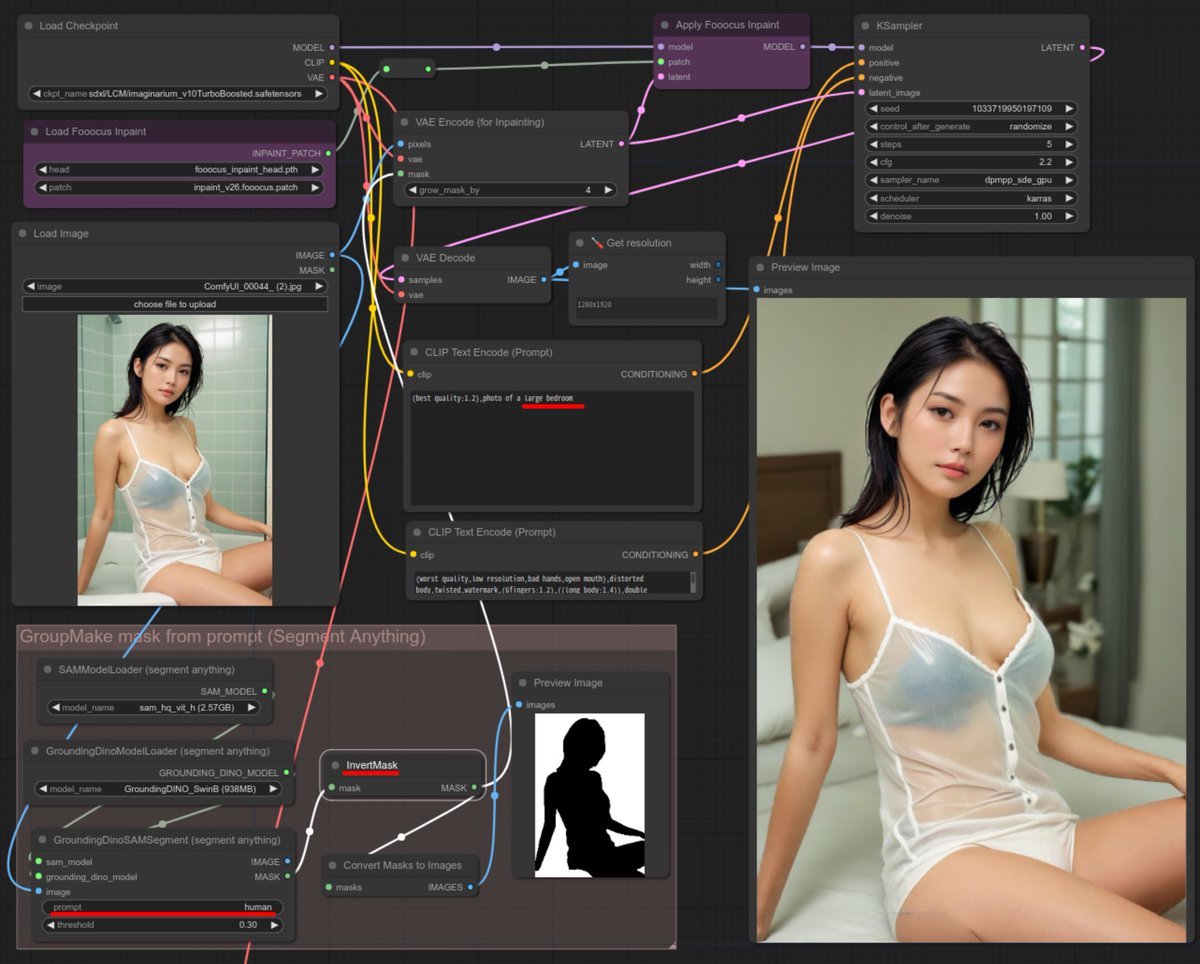

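Cutting masks by hand is a pain, Part 2 (lol). This is the #SegmentAnything version I was told about. The model download failed and it took a while, but it works! Thank you, @noma_door. The changes: the mask prompt is "Human" instead of "Background", and the mask is inverted. The sample goes from a bathroom to a bedroom, lol. #AI美女 #AIグラビア #SDXL #ComfyUI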

I segmented objects with #SegmentAnything! The outlines of the cat, the pipes, and other objects are cleanly segmented, and even small objects are nicely color-coded. I used #MATLAB.

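[#MATLAB 2024a prerelease] It looks like #SegmentAnything is among the MATLAB features scheduled for release around next spring. As shown below, selecting an object cut out its region naturally! It was easy to run once the SAM add-on was installed!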

![imvisionlabs's tweet image](https://pbs.twimg.com/media/GBY9WI1agAABXj-.jpg)

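I classified vegetation in a 3D point cloud (#点群) and then used #SegmentAnything on the point cloud to segment individual trees. It separated them roughly, but neighboring trees were sometimes merged into one object. I'd like to refine the approach for more accurate segmentation.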

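I segmented a crop (sugar beet) with #SegmentAnything. Object detection produces a bounding box for each target, which is then used as the input for extracting its outline. Segmentation may make it possible to measure things like crop area. #YOLO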
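
The area estimate suggested at the end of the post above comes down to counting mask pixels and scaling by the ground size of a pixel. A minimal sketch, assuming square pixels and a known ground sampling distance `gsd_m` (neither is given in the post); the mask here is synthetic, standing in for a box-prompted SAM output, and `mask_area` is a hypothetical helper name.

```python
import numpy as np


def mask_area(mask, gsd_m):
    """Area in square metres covered by a binary mask with square pixels."""
    return float(np.count_nonzero(mask)) * gsd_m ** 2


# Synthetic stand-in for one plant mask: a 4 x 5 pixel blob in a 10 x 10 tile.
mask = np.zeros((10, 10), dtype=bool)
mask[3:7, 2:7] = True

# Assumed ground sampling distance of 2 cm per pixel.
area_m2 = mask_area(mask, gsd_m=0.02)
```

With one mask per detected box, summing `mask_area` over all masks gives a field-level estimate, provided overlapping masks are merged first.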

I ran #SegmentAnything in #MATLAB. The cat region is shown in blue. #YOLOX locates the cat and SegmentAnything creates the mask. You can run SAM with the sam.segmentObjectsFromEmbeddings function!

The FastSAM package is now available on both PyPI and conda-forge. Install it with "mamba install -c conda-forge segment-anything-fast". GitHub: github.com/opengeos/FastS… PyPI: pypi.org/project/segmen… Conda-forge: anaconda.org/conda-forge/se… #segmentanything #deeplearning

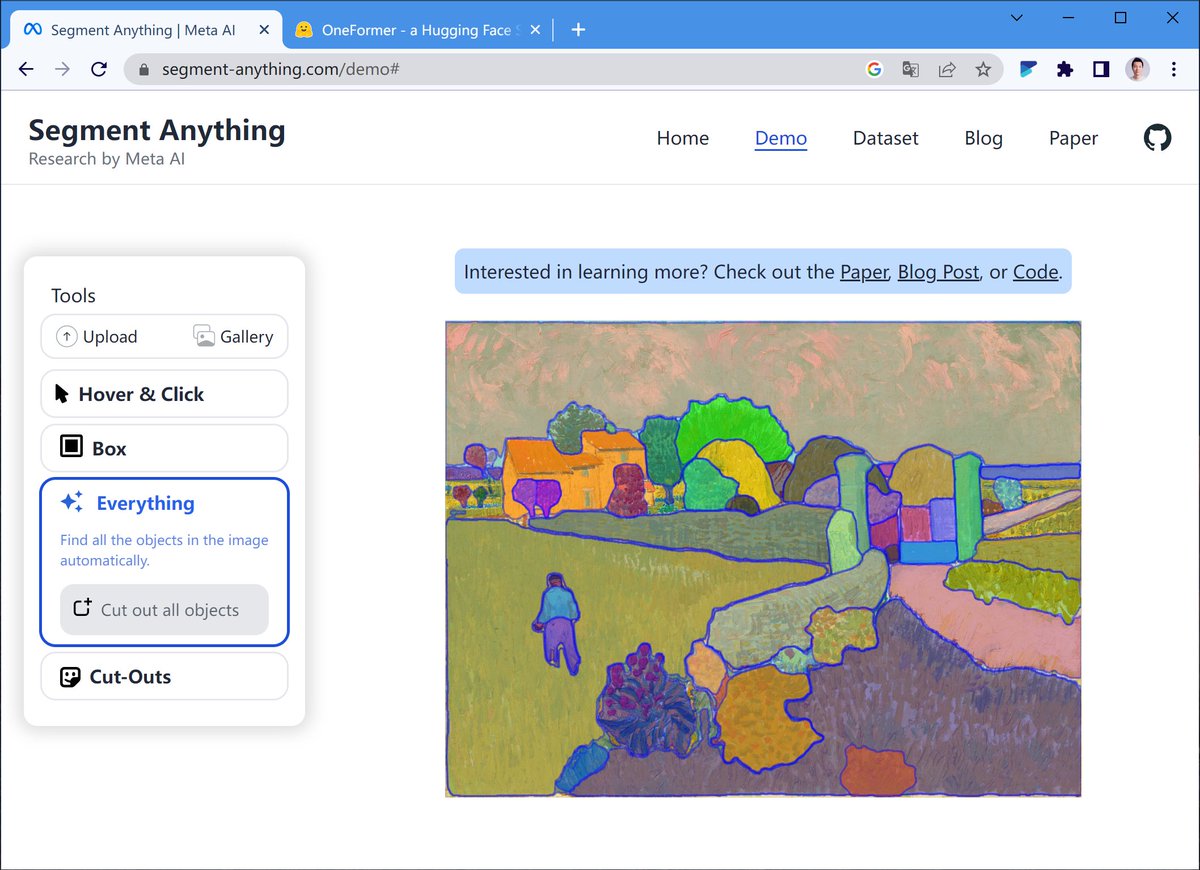

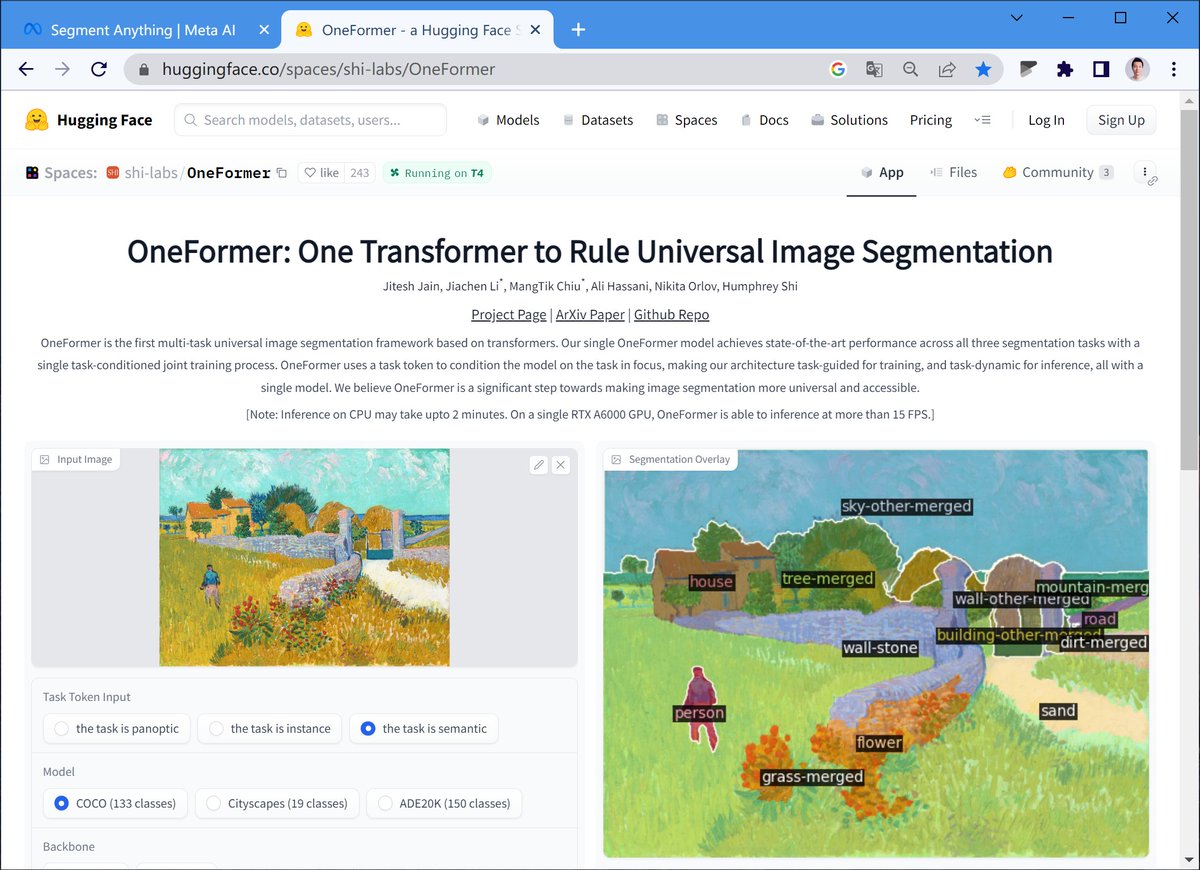

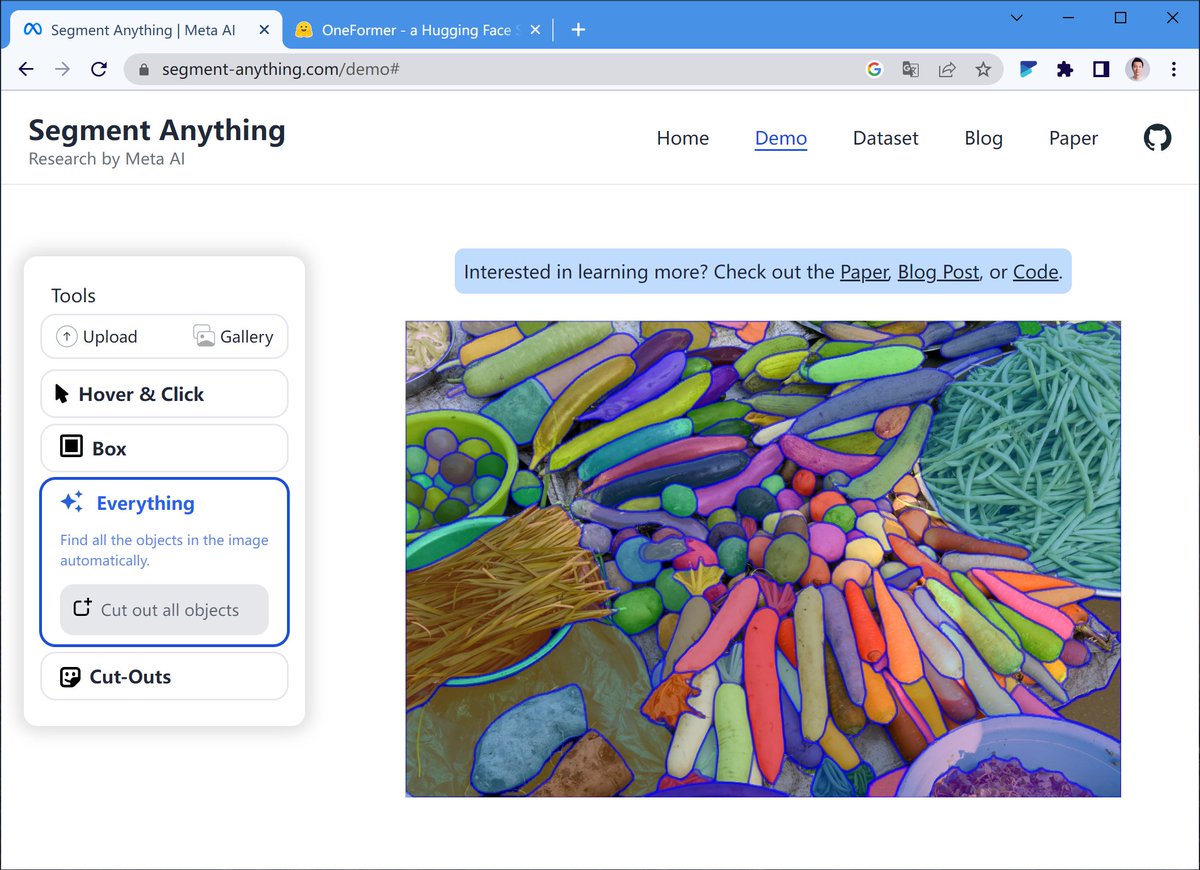

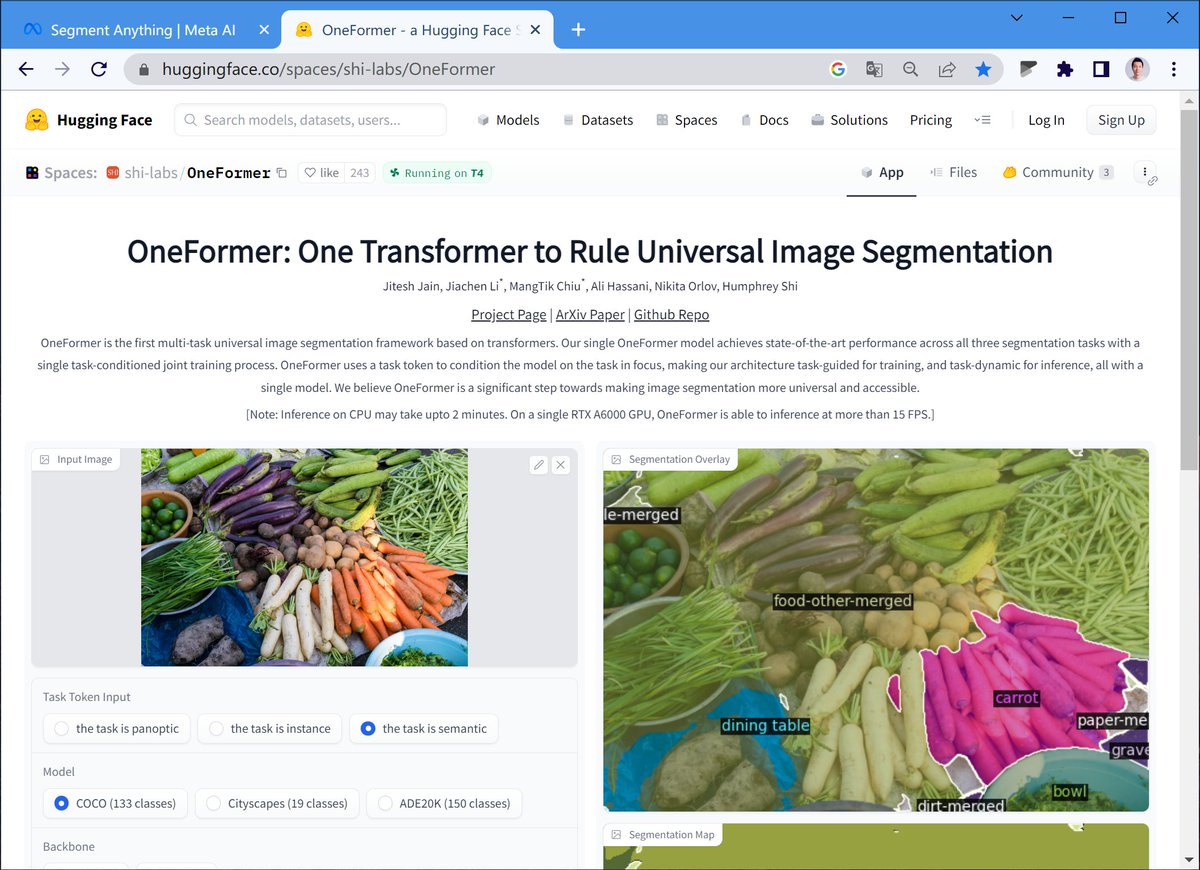

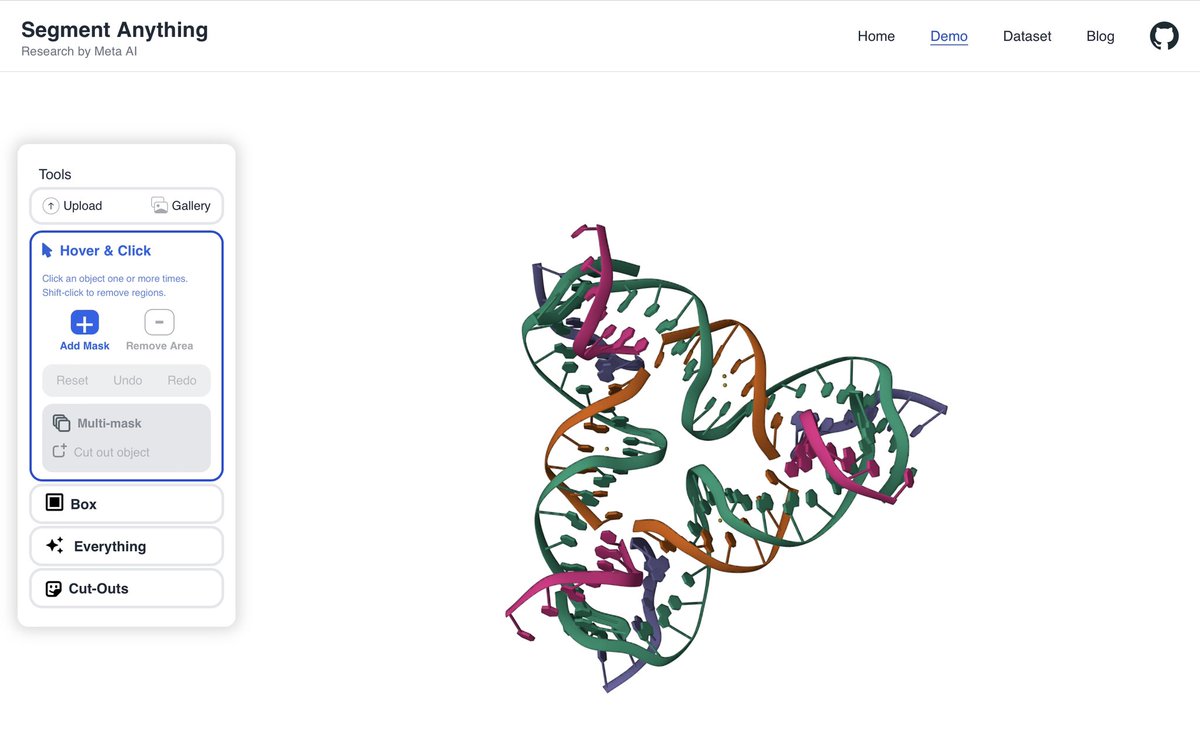

👉 Meta launches Segment Anything, an AI tool that can easily identify and isolate objects in images! 📸🤖 Trained on 11 million photos, it can handle different types of images, from microscopy to underwater photos. #Meta #AI #SegmentAnything #ComputerVision #opensource
