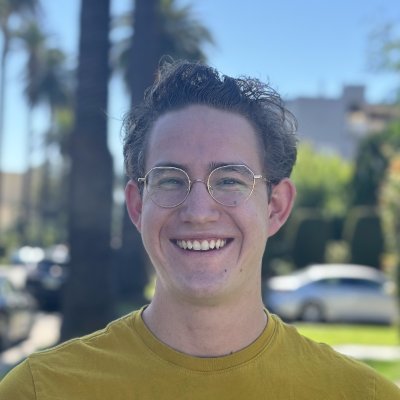

Chengxu Zhuang

@ChengxuZhuang

AI Research Scientist at Meta. Previously worked on ChatGPT AVM at OpenAI. Was a postdoc at MIT on NLP and the brain. Stanford PhD on CV and neuroscience.

Excited about this update from our team, esp Pingchuan Ma, Honglin Chen, and Damian Mrowca! Sounds more natural and human-like, with better translation support. Enjoy the chats while we further improve the model, and let us know what could be even better!

Glad to see that my first publication w @dyamins was continued by @aran_nayebi ! AI has so much potential to contribute to other fields of science, particularly neuroscience, considering how much is unknown and the fascinating parallels—and differences—between AI and the brain.

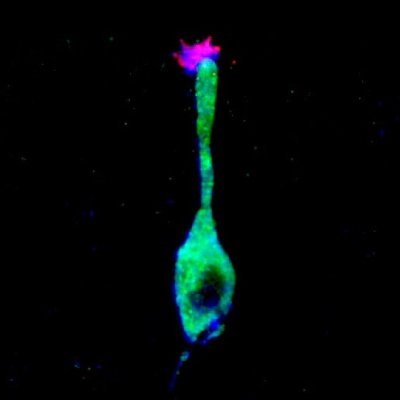

Check out our new work exploring how to make robots sense touch more like our brains! Surprisingly, ConvRNNs aligned best with mouse somatosensory cortex and even passed the NeuroAI Turing Test on current neural data. We also developed new tactile-specific augmentations for…

Human-AI interfaces must surpass human conversation. We are not there yet, as we still type and use a mouse. Speech outruns typing, while gestures like circling outperform mouse dragging. ChatGPT’s AVMs are built towards this goal. The future should be real-time, all-modality conversations.

"Chatting" with LLMs feels like using an 80s computer terminal. The GUI hasn't been invented yet, but imo some properties of it can start to be predicted. 1 it will be visual (like GUIs of the past) because vision (pictures, charts, animations, not so much reading) is the 10-lane…

I mostly agree—but what's more important than being 'general' is that an AGI benefits all of humanity. To truly achieve this, an AGI must naturally interact with humans, requiring robust vision, speech, and motor-control capabilities.

It's a bizarre redefinition of the meaning of "general" in AGI.

- Vision: not part of AGI
- Spatial understanding: not part of AGI
- Non-visual (e.g. tactile) sensing: not part of AGI
- Motor control: not part of AGI

I'll write a longer post on this soon.

Having compositionality in representations doesn’t mean having compositional models. E2E learning is strong at generalizing progress in one subject to others. Reasoning is the core part behind this generalization and is likely not further modularizable.

Much of the field obsesses over end-to-end learning. But strong generalization requires compositionality: building modular, reusable abstractions, and reassembling them on the fly when faced with novelty. The models of the future won't be just pipes, they will be Lego castles.

Sad to see you go. Working under your leadership has been an incredible experience. The AI4Science community is lucky to have your energy and expertise. Wishing you every success ahead!

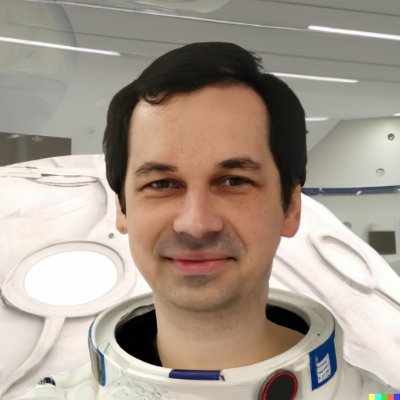

This is what I sent to my colleagues at OpenAI: Hi all, I made the difficult decision to leave OpenAI as an employee, but I’m looking to work closely together as a partner going forward. Contributing to the mission of OpenAI and working with world-class teams to create and…

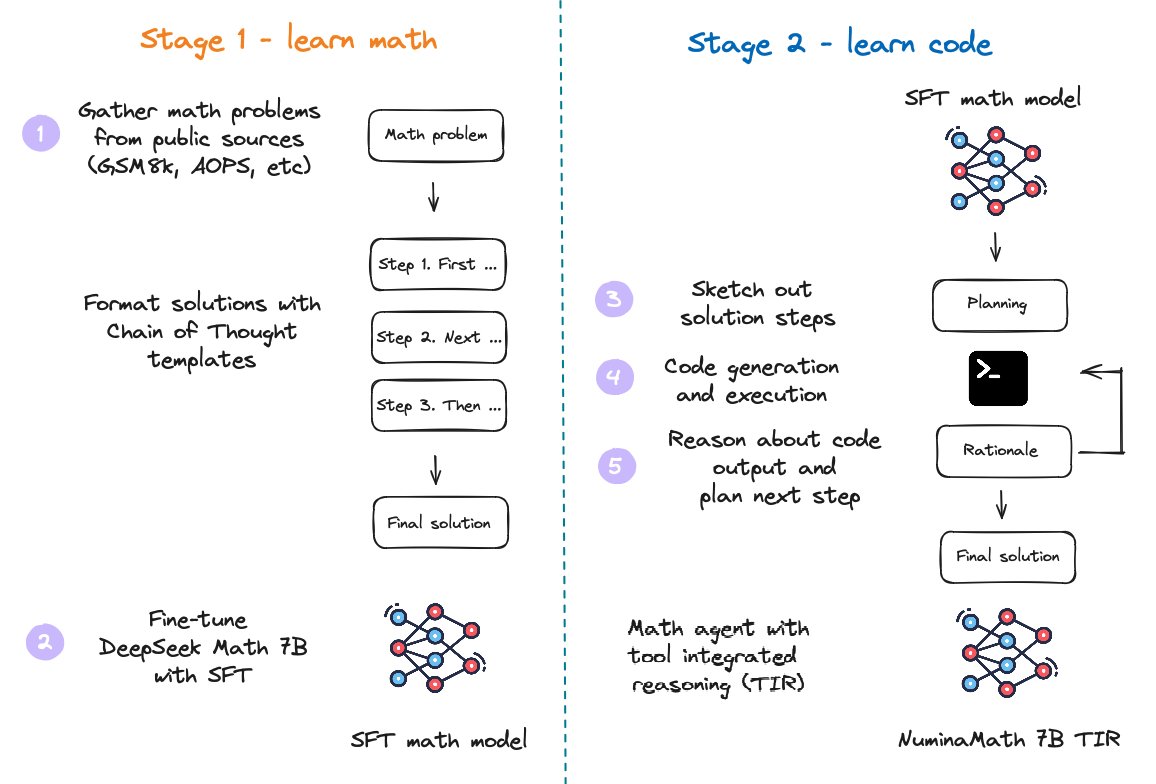

Awesome win for open-source and neurosymbolic AI :) Combining CoT planning in free-form text w/ interleaved program generation, execution, and repair, small Numina 7b model wins AIMO progress prize, solving challenging competition-level math problems. huggingface.co/blog/winning-a…

🎉 Thrilled to share that our paper (the first paper here) has won the Best Paper Award at NAACL 2024! A huge thank you to my mentors, @ev_fedorenko and @jacobandreas, and everyone who supported this work. 🙌 Check out the details and our findings here: aclanthology.org/2024.naacl-lon…

Two papers! Can visual grounding help LMs learn more efficiently? 1. We show that algs like CLIP don't learn language better (t.ly/eQHA9) 2. We then propose a new one, LexiContrastive Grounding, which does! (t.ly/KB818) Code: t.ly/C0wu- 🧵

The presentation will start very soon! Come to Don Alberto 1 for the talk!

I am in Mexico City attending NAACL 2024 from now until June 22nd. The first paper will be presented at tomorrow's first oral session at Don Alberto 1. If you are interested in the work, please come to the talk! If you want to chat, just DM me! Looking forward to the conference!

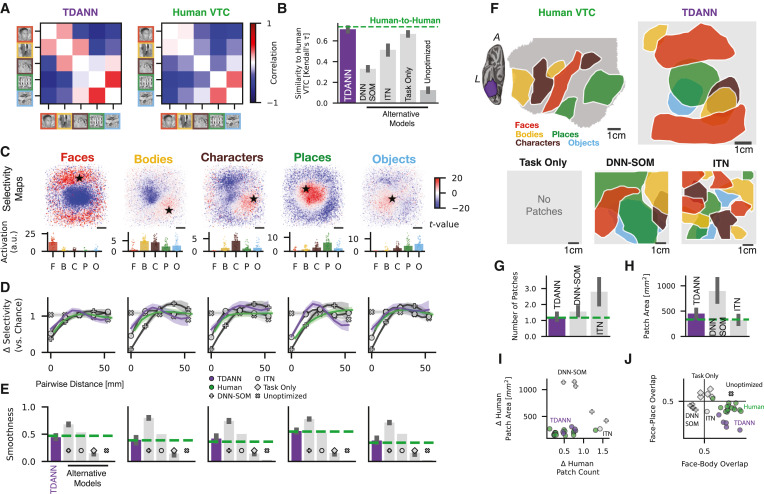

1/ Our work on unified principles for Topographic Deep Artificial Neural Networks is finally out in Neuron! 7 years in the making. tinyurl.com/2dtkh9fc

Have laws gotten simpler over time? Analyzing all laws by Congress 1951-2022, we (w/ Frank Mollica, @LanguageMIT) find laws remain laden with complex structures vs baseline, suggesting efforts to simplify have largely failed. Out now @APA JEP-General! psycnet.apa.org/record/2024-76… 1/

Exciting news! The Murty Lab @GeorgiaTech has received its first significant funding from the @NatEyeInstitute. We are now actively recruiting up to two postdocs in experimental and computational neuroscience in human vision NeuroAI. 1/n

We should be smarter than just scaling! We should create data-efficient algs. Humans are great at this, algs should learn from humans. This is what I have been working on (t.ly/KB818). This is also what our BabyLM is about (babylm.github.io)!

Zuck on Dwarkesh TLDR: AI winter is here. Zuck is a realist, and believes progress will be incremental from here on. No AGI for you in 2025. 1) Zuck is essentially a real-world growth pessimist. He thinks the bottlenecks start appearing soon for energy and they will be take…

✨🎓 I defended my dissertation “The Relationship between Linguistic Representations in Biological and Artificial Neural Networks” on Tuesday! 🎓✨ Incredibly grateful for my amazing PhD advisor @ev_fedorenko and a wonderful journey at @mitbrainandcog! 🧠🤖

Here's my conversation with Edward Gibson (@LanguageMIT), a linguist and psychologist at MIT, heading the MIT Language Lab. We talk all about the human language: syntax, grammar, structure, theories of language, evolution of language, how it reflects culture, and of course LLMs,…

Research should adopt the principle shown by this video: when you have a new claim/model, try hard and thoroughly to break it so that (if it's indeed not broken) it's clear your new thing is better!

Helmet testing ⛑️ Seems quite the difference! 🤔

Agree that LLMs face "a quartet of cognitive challenges: reasoning, planning, persistent memory, and understanding the physical world." Addressing these challenges requires developing separate modules for them and training those modules on data from other sensors (like vision)!

There is no question that AI will eventually reach and surpass human intelligence in all domains. But it won't happen next year. And it won't happen with the kind of Auto-Regressive LLMs currently in fashion (although they may constitute a component of it).…

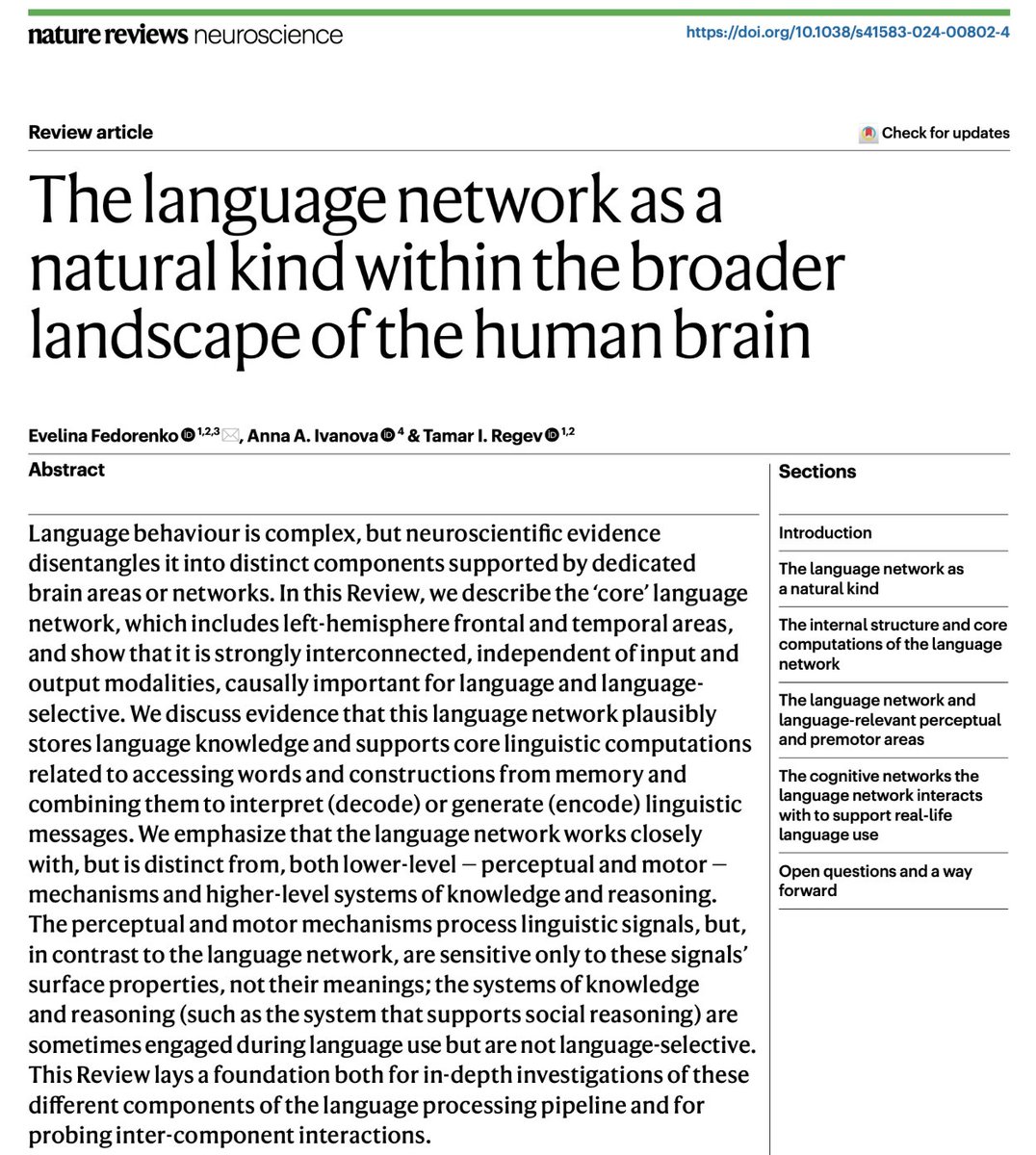

This is a great review of the language network. This network is distinct from systems of thought. This distinction might be the basis of more human-like and powerful artificial intelligence models!

Thrilled to share a review on THE LANGUAGE NETWORK AS A NATURAL KIND—a culmination of ~20 yrs of thinking about+studying language from linguistic, psycholinguistic, and cog neuro perspectives. @NatRevNeurosci rdcu.be/dEylV With the amazing @neuranna @tamaregev 🥳 🧵1/n
