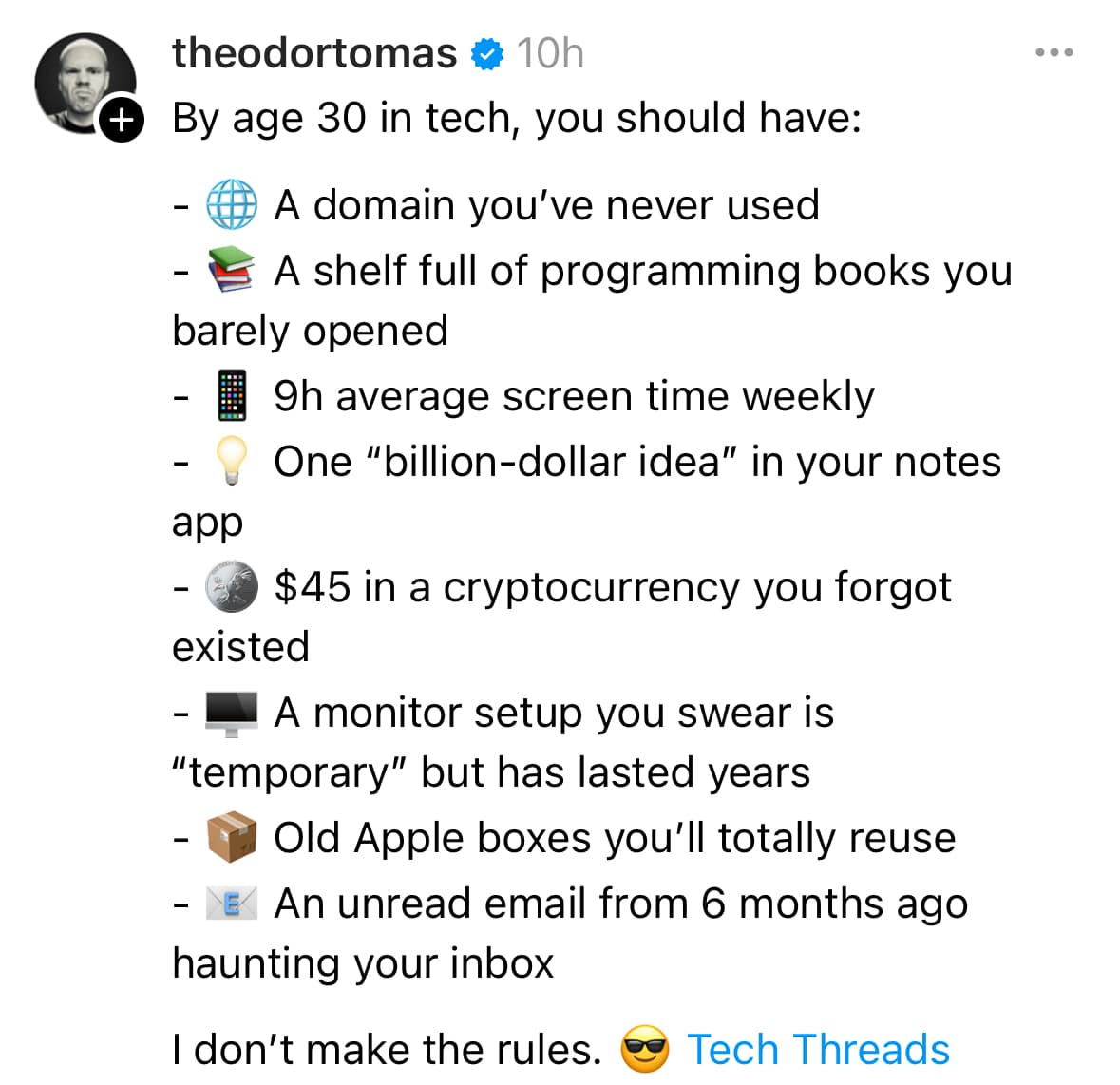

Dan Dinu

@DanTheTensorMan

Just a human, not an AI. But I can help you navigate the world of artificial intelligence like a robot from the future. Co-founder of http://okt.ai.


SubgraphRAG uses simple perceptrons to fetch knowledge graph data, making LLMs smarter and faster 🎯 Original Problem: LLMs face issues like hallucinations and outdated knowledge. Knowledge Graph-based Retrieval-Augmented Generation (RAG) can help by grounding LLM outputs in…

SAMURAI gives SAM 2 motion-aware memory! And the results look mind-blowing. This is zero-shot 🤯 Links ⬇️

Hey @elonmusk, I see it takes, on average, 6 years to find a Mersenne prime. Is your 100k GPU cluster capable enough to find the next Mersenne prime faster?

This is an impressive use of AI. I started using NotebookLM a while back and it really accelerated my knowledge ingestion. Things that I would dread, like going through all the AI Act annexes and extra whitepapers, became enjoyable and captivating.

Over the last ~2 hours I curated a new Podcast of 10 episodes called "Histories of Mysteries". Find it up on Spotify here: open.spotify.com/show/3K4LRyMCP… 10 episodes of this season are: Ep 1: The Lost City of Atlantis Ep 2: Baghdad battery Ep 3: The Roanoke Colony Ep 4: The Antikythera…

The path to AGI is through Internet scale neuro-symbolic AI, running across millions of devices and many chains. Scaling neural models only gets us so far. Scaling knowledge for them, with necessary trust primitives, is the next frontier. Super excited to see records broken in…

🚀September’s record-breaking streak continues for the #OriginTrail Decentralized Knowledge Graph (#DKG), with a new daily high of 75k Knowledge Assets published - more than triple last September’s peak! The V8 engine is revving up, and this is just the tip of the iceberg 🏎️

Paper Podcast - "Can LLMs Generate Novel Research Ideas?" AI outperforms humans in research ideation novelty🤯 **Key Insights from this Paper** 💡: • LLM-generated ideas are judged as more novel than human expert ideas • AI ideas may be slightly less feasible than human…

It's a bit sad and confusing that LLMs ("Large Language Models") have little to do with language; it's just historical. They are highly general purpose technology for statistical modeling of token streams. A better name would be Autoregressive Transformers or something. They…

✨🎨🏰Super excited to share our new paper Ensemble everything everywhere: Multi-scale aggregation for adversarial robustness Inspired by biology we 1) get adversarial robustness + interpretability for free, 2) turn classifiers into generators & 3) design attacks on vLLMs 1/12

thank god i never have to use the spotify app again

Congrats to Ilya Sutskever for this new initiative. I'm glad to see great minds trying to create a level playing field!

Superintelligence is within reach. Building safe superintelligence (SSI) is the most important technical problem of our time. We've started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence. It’s called Safe Superintelligence…

Very interesting read! I'm always surprised by articles like this.

How Do Large Language Models Acquire Factual Knowledge During Pretraining? Reveals several important insights into the dynamics of factual knowledge acquisition during pretraining arxiv.org/abs/2406.11813

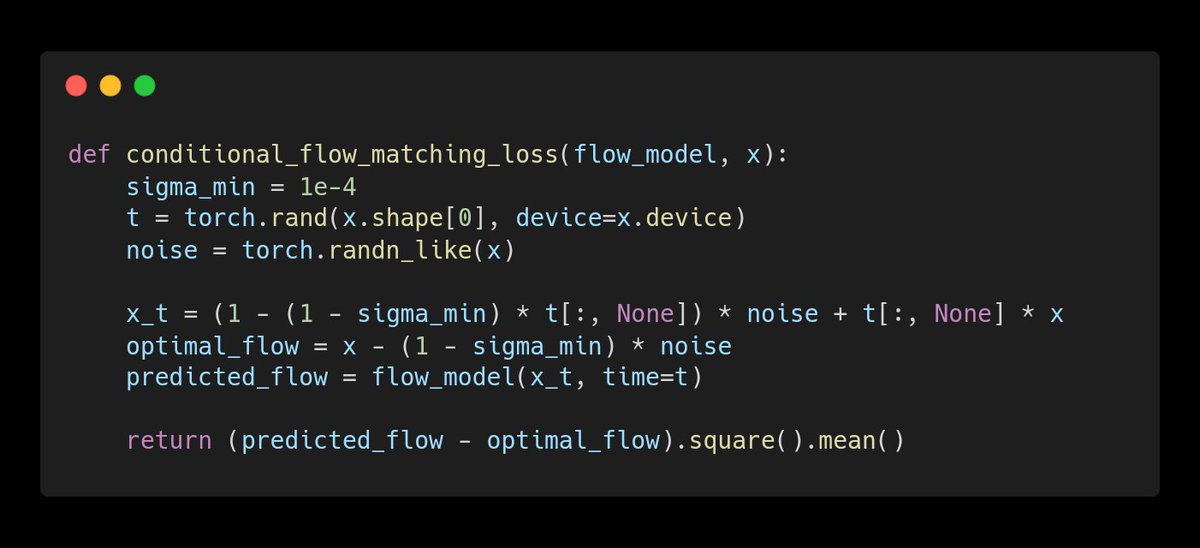

Flow Matching is SOOOO simple GG denoising diffusion?

Not Llama 3 405B, but Nemotron 4 340B! @nvidia just released a 340B dense LLM matching the original @OpenAI GPT-4 performance for chat applications and synthetic data generation. 🤯 NVIDIA does not claim ownership of any outputs generated. 💚 TL;DR: 🧮 340B Parameters with 4k…

We are (finally) releasing the 🍷 FineWeb technical report! In it, we detail and explain every processing decision we took, and we also introduce our newest dataset: 📚 FineWeb-Edu, a (web only) subset of FW filtered for high educational content. Link: hf.co/spaces/Hugging…

Summer is a good time to take a break and unwind with a good book. ☀️📚 If you lead a company or are a solopreneur, I recommend that you read my book on AI: okt.ai/get-a-copy-of-…

Very cool interactive visualization of ChatGPT completion probabilities.

Transformers animated, in 30 seconds. From @3blue1brown

Researchers taught transformers to solve arithmetic tasks by incorporating embeddings that represent each digit's position in relation to the beginning of the number. Math is no longer an Achilles' heel for LLMs. arxiv.org/abs/2405.17399…
