State of open source in Q4 2025: 🤖s are now great first-pass PR reviewers in vLLM!

🔥 This is a great read!! Exercise for the reader: how would Blackwell Ultra with 2x exponential cores impact the design of FA4? developer.nvidia.com/blog/inside-nv…

Day 1 with @modal notebooks and it's so much fun! Switching between CPU and GPU across cells while keeping environments and volumes is 🤌
* Run CPU nodes to download checkpoints and do simple dev work with vLLM for testing
* Scale out to B200 when ready!

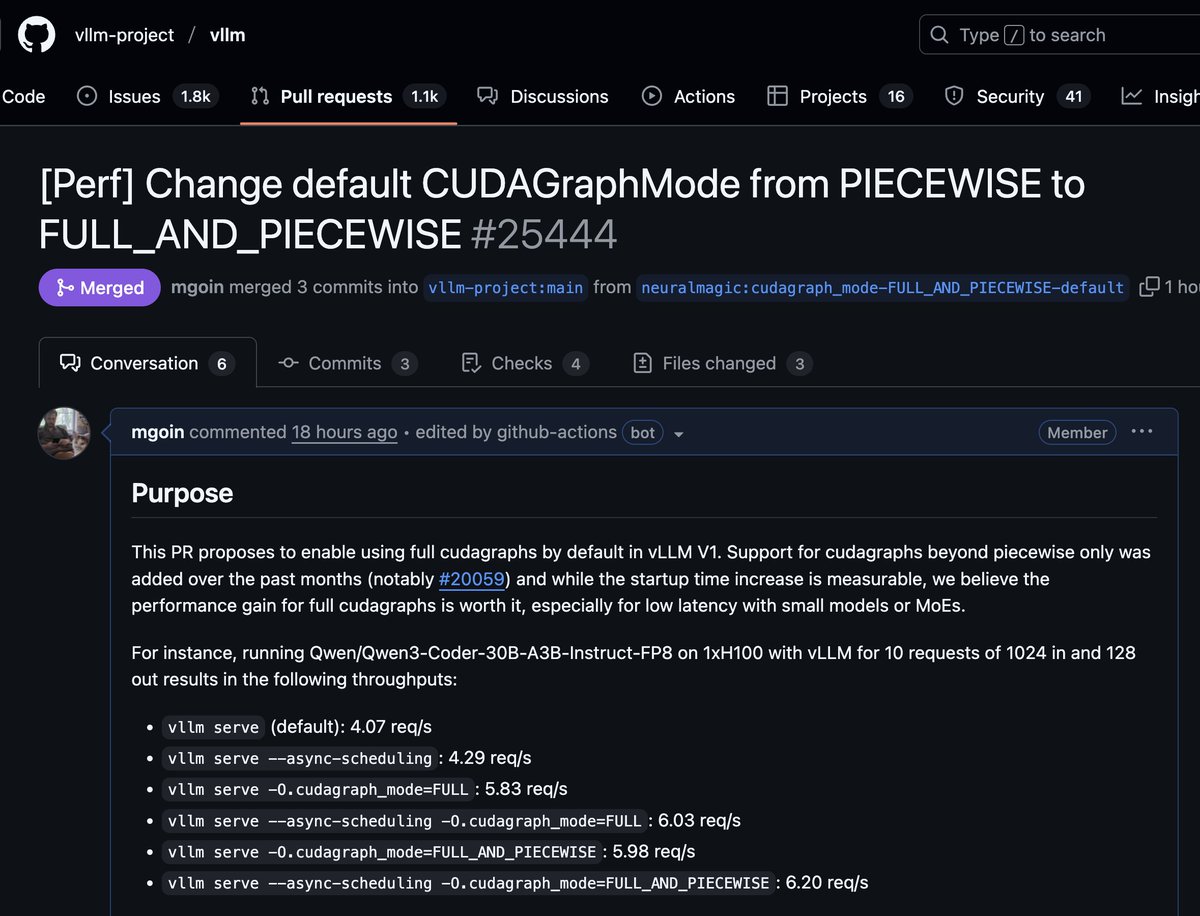

Just enabled full cudagraphs by default on @vllm_project! This change should offer a huge improvement for low-latency workloads on small models and efficient MoEs. For Qwen3-30B-A3B-FP8 on H100 at bs=10, 1024/128, I was able to see a 47% speedup 🔥

It has been 1+ month of intense work! Now time to get some sleep 😴

Launching this model together with the amazing @vllm_project team was a real highlight for me! Follow this guide to launch gpt-oss in vLLM: blog.vllm.ai/2025/08/05/gpt…

I didn't expect the first section to be "KV-cache hit rate is the single most important metric for a production-stage AI agent", but 🤯

After four overhauls and millions of real-world sessions, here are the lessons we learned about context engineering for AI agents: manus.im/blog/Context-E…
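To make the metric concrete: KV-cache hit rate is the fraction of prompt tokens whose keys/values can be reused from an earlier request instead of being recomputed during prefill. The sketch below assumes a simplified block-level prefix cache, in the spirit of prefix caching in engines like vLLM but not its actual implementation. `kv_cache_hit_rate` and `agent_prompts` are hypothetical helpers, and the prompt sizes are made up for illustration. It compares an append-only agent context with one that puts a changing token (a hypothetical timestamp) at the very front.

```python
from typing import List, Sequence, Set, Tuple


def kv_cache_hit_rate(prompts: Sequence[Sequence[str]], block_size: int = 16) -> float:
    """Fraction of prompt tokens served from a (hypothetical) prefix cache.

    Assumption: KV entries are stored in fixed-size blocks, and a block is
    reusable only if the entire prefix up to and including it matches a
    prompt seen earlier.
    """
    cache: Set[Tuple[str, ...]] = set()
    cached_tokens = 0
    total_tokens = 0
    for prompt in prompts:
        tokens = tuple(prompt)
        total_tokens += len(tokens)
        n_full_blocks = len(tokens) // block_size
        # Count leading full blocks whose whole prefix is already cached.
        hit_blocks = 0
        for b in range(1, n_full_blocks + 1):
            if tokens[: b * block_size] in cache:
                hit_blocks += 1
            else:
                break  # one mismatch invalidates every later block
        cached_tokens += hit_blocks * block_size
        # Store every full block of this prompt, keyed by its complete prefix.
        for b in range(1, n_full_blocks + 1):
            cache.add(tokens[: b * block_size])
    return cached_tokens / total_tokens if total_tokens else 0.0


def agent_prompts(turns: int, system_len: int = 64, step_len: int = 32,
                  volatile_prefix: bool = False) -> List[List[str]]:
    """Hypothetical agent loop: a fixed system prompt plus a growing history."""
    system = [f"sys{i}" for i in range(system_len)]
    history: List[str] = []
    prompts = []
    for t in range(turns):
        history += [f"t{t}_{i}" for i in range(step_len)]  # append-only history
        prefix = [f"timestamp{t}"] if volatile_prefix else []
        prompts.append(prefix + system + history)
    return prompts


stable = kv_cache_hit_rate(agent_prompts(10))
volatile = kv_cache_hit_rate(agent_prompts(10, volatile_prefix=True))
print(f"append-only context: {stable:.2f}")       # append-only context: 0.84
print(f"timestamp at the front: {volatile:.2f}")  # timestamp at the front: 0.00
```

Because a block is reusable only when everything before it matches, a single changing token near the start of the prompt invalidates every block after it, while appending new turns at the end keeps the earlier prefix reusable.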

Long time in the making and I'm beyond excited about the future of vLLM!

PyTorch and vLLM are both critical to the AI ecosystem and are increasingly being used together for cutting edge generative AI applications, including inference, post-training, and agentic systems at scale. 🔗 Learn more about PyTorch → vLLM integrations and what’s to come:…

Announcing the first Codex open source fund grant recipients: ⬩vLLM - inference serving engine @vllm_project ⬩OWASP Nettacker - automated network pentesting @iotscan ⬩Pulumi - infrastructure as code in any language @pulumicorp ⬩Dagster - cloud-native data pipelines @dagster…

😲 Super cool!!! Reminded me of Kevin's thesis, "Structured Contexts For Large Language Models", and this is such a natural continuation of the idea.

We're excited to release our latest paper, “Sleep-time Compute: Beyond Inference Scaling at Test-Time”, a collaboration with @sea_snell from UC Berkeley and @Letta_AI advisors / UC Berkeley faculty Ion Stoica and @profjoeyg letta.com/blog/sleep-tim…

🙏 @deepseek_ai's highly performant inference engine is built on top of vLLM. Now they are open-sourcing the engine the right way: instead of a separate repo, they are bringing changes to the open source community so everyone can immediately benefit! github.com/deepseek-ai/op…

Having been at every single vLLM meetup, I won't miss this one :D Looking forward to meeting all the vLLM users in Boston!

Friends from the East Coast! Join us on Tuesday, March 11 in Boston for the first ever East Coast vLLM Meetup. You will meet vLLM contributors from @neuralmagic, @RedHat, @Google, and more. Come share how you are using vLLM and see what's on the roadmap! lu.ma/7mu4k4xx

Landed my first PR in @vllm_project 1 year ago today (github.com/vllm-project/v…). 38K LOC and 100+ PRs later, we are just getting started.

Robert and I started contributing to vLLM around the same time, and today is my turn. Back then vLLM had only about 30 contributors. One year later, the project has received contributions from 800+ community members, and we're just getting started! github.com/vllm-project/v…
