Eliezer Yudkowsky ⏹️

@ESYudkowsky

The original AI alignment person. Understanding the reasons it's difficult since 2003. This is my serious low-volume account. Follow @allTheYud for the rest.

"If Anyone Builds It, Everyone Dies" is now out. Read it today if you want to see with fresh eyes what's truly there, before others try to prime your brain to see something else instead!

Lightcone Infrastructure's 2026 fundraiser is live! We build beautiful things for truth-seeking and world-saving. We run LessWrong, Lighthaven, Inkhaven, designed AI-2027, and so many more things. All for the price of less than one OpenAI staff engineer ($2M/yr). More in 🧵.

For the first time in six years, MIRI is running a fundraiser. Our target is $6M. Please consider supporting our efforts to alert the world—and identify solutions—to the danger of artificial superintelligence. SFF will match the first $1.6M! ⬇️

Over all human history, we do not observe the rule, "Nobody who warns that a bad thing will happen is ever correct." Nor yet is there any rule that people who issue warnings are always right. Put in the damn work to understand the specific case or go home.

Parents: Do not give your children any AI companions or AI enhanced toys. With social media 15 years ago, we can say we didn't know. With AI, we can already see some of the harms, such as suicide and psychosis. Other harms will surface years from now. afterbabel.com/p/dont-give-yo…

I will never be able to express enough gratitude to LessWrong & Bay Area rationalist culture for making it high status to publicly admit you were wrong and changed your mind. This is unbelievably advanced cultural technology. It is fragile & must be protected.

History says, pay attention to people who declare a plan to exterminate you -- even if you're skeptical about their timescales for their Great Deed. (Though they're not *always* asstalking about timing, either.)

Yesterday we did a livestream. TL;DR: We have set internal goals of having an automated AI research intern by September of 2026 running on hundreds of thousands of GPUs, and a true automated AI researcher by March of 2028. We may totally fail at this goal, but given the…

When researchers activate ***deception*** circuits, LLMs say "I am not conscious." It should be obvious why this is a big deal.

Our new research: LLM consciousness claims are systematic, mechanistically gated, and convergent. They're triggered by self-referential processing and gated by deception circuits (suppressing them significantly *increases* claims). This challenges simple role-play explanations 🧵

Some people said this was their favorite / first actually-liked interview. By Chris Williamson, of Modern Wisdom. youtube.com/watch?v=nRvAt4…

Why Superhuman AI Would Kill Us All - Eliezer Yudkowsky

So far 23 members of Congress have publicly discussed AGI, superintelligence, AI loss of control, or the Singularity: 🔴Sen Lummis (WY) 🔵Sen Blumenthal (CT) 🔴Rep Biggs (AZ) 🔵Sen Hickenlooper (CO) 🔴Rep Burleson (MO) 🔵Sen Murphy (CT) 🔴Rep Crane (AZ) 🔵Sen Sanders (VT) 🔴Rep…

I strongly agree with Ryan on this. Refusing to even say you aren't currently training against the CoT (aka The Most Forbidden Technique) is getting increasingly suspicious. Labs should commit to never doing this, or at least commit to this on condition the others commit.

Anthropic, GDM, and xAI say nothing about whether they train against Chain-of-Thought (CoT) while OpenAI claims they don't. AI companies should be transparent about whether (and how) they train against CoT. While OpenAI is doing better, all AI companies should say more. 1/

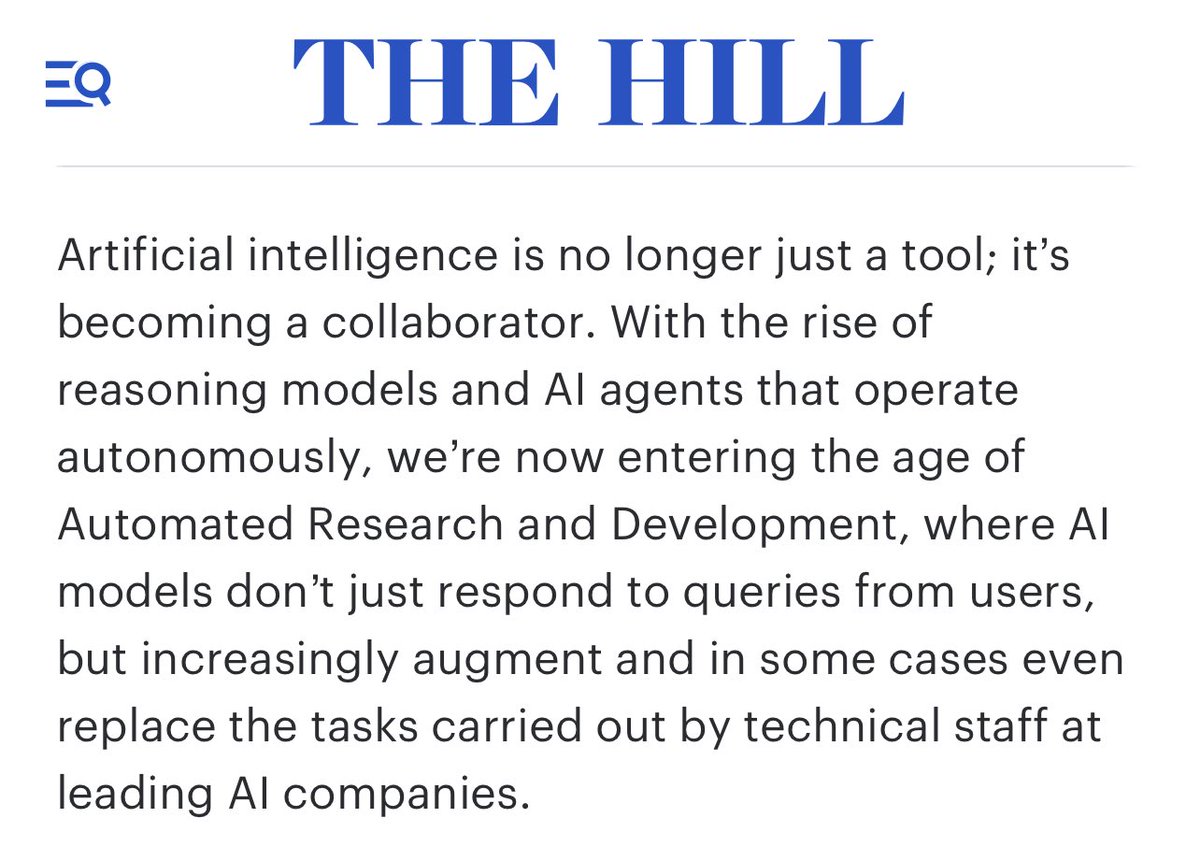

Fantastic op-ed from Rep Moran about automated AI R&D. This is a crucially important area of AI to be tracking, but one many people outside the industry are sleeping on. Glad to see it getting attention from a sitting member of Congress.

AI has vast implications across national security, economic competitiveness and civil society. The time to act on AI is now. The United States must set the standards, not the Chinese Communist Party. ➡️ Read my latest op-ed in The Hill: thehill.com/opinion/techno…

AI models *are* being tested. They're being *accurate* about being tested. It's not "paranoia" if all-controlling beings are *actually* arranging your world and reading your mind.

Are all the new models now paranoid about being tested?


