Guim Perarnau

@GuimPML

Machine learning engineer at Meta, London. Sometimes I write about deep learning and GANs. Opinions are my own.


Engineer @JoshBambrick demos NSTM: Key News Themes, a real-time query-driven news overview composition system he developed w/ @chokky_vista, @GuimPML, Igor Malioutov, @prog_aa, Vittorio Selo & Iat Chong Chan Demo 3B|8:45 AM EDT Demo 5B|4:45 PM EDT bloom.bg/3fc5DGC #ACL2020

Cornell's excellent Machine Learning course is entirely online, for free, including all the lectures on YouTube and all the course notes: cs.cornell.edu/courses/cs4780… That's the bright side of the Internet: so much knowledge freely available! 🙌😀

New blog post: "A Recipe for Training Neural Networks" karpathy.github.io/2019/04/25/rec… a collection of attempted advice for training neural nets with a focus on how to structure that process over time

How do we store memories? “We’re much better at recognising than recalling. When we remember something, we have to try to relive an experience. When we recognise something, we must merely be conscious of the fact that we have had this experience before.” aeon.co/essays/your-br…

4.5 years of GAN progress on face generation. arxiv.org/abs/1406.2661 arxiv.org/abs/1511.06434 arxiv.org/abs/1606.07536 arxiv.org/abs/1710.10196 arxiv.org/abs/1812.04948

Large-Scale GAN Training: My internship project with Jeff and Karen. We push the SOTA Inception Score from 52 -> 166+ and give GANs the ability to trade sample variety and fidelity. arxiv.org/abs/1809.11096

New work from our group @GoogleAI - A practical “cookbook” for #GAN research in 2018: arxiv.org/abs/1807.04720 Code & pre-trained TensorFlow Hub models: github.com/google/compare… Attending #ICML2018 ? Visit our poster on Saturday (Reproducibility Workshop)

My new paper is out! "The relativistic discriminator: a key element missing from standard GAN" explains how most GANs are missing a key ingredient which makes them so much better and much more stable! #Deeplearning #AI ajolicoeur.wordpress.com/RelativisticGA… arxiv.org/abs/1807.00734

most common neural net mistakes: 1) you didn't try to overfit a single batch first. 2) you forgot to toggle train/eval mode for the net. 3) you forgot to .zero_grad() (in pytorch) before .backward(). 4) you passed softmaxed outputs to a loss that expects raw logits. ; others? :)
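Mistake 4 in the post above can be illustrated without any framework. The following is a minimal sketch in plain Python, assuming a cross-entropy loss that applies log-softmax internally (which is how PyTorch's `nn.CrossEntropyLoss` behaves). The helper names `softmax` and `cross_entropy_from_logits` and the example values are hypothetical, chosen only for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, target):
    """Cross-entropy that expects RAW logits and applies log-softmax itself."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


# A confident, correct prediction for class 0 (hypothetical values).
logits = [4.0, 0.0, 0.0]
target = 0

# Correct usage: pass raw logits to the loss.
correct_loss = cross_entropy_from_logits(logits, target)

# Buggy usage: softmax is applied twice (once here, once inside the loss).
buggy_loss = cross_entropy_from_logits(softmax(logits), target)

# With three classes the double-softmax loss can never drop below
# log(e + 2) - 1, no matter how confident the network becomes.
buggy_floor = math.log(math.e + 2.0) - 1.0

print(f"correct loss: {correct_loss:.4f}")
print(f"buggy loss:   {buggy_loss:.4f} (floor {buggy_floor:.4f})")
```

Because the softmaxed values lie in [0, 1], the second softmax squashes them toward a near-uniform distribution, so the buggy loss stays well above the correct one and the gradients it produces are correspondingly weak.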

Honored to see my research together with Brian Dolhansky to be featured in #TechCrunch . Come and see our presentation at #CVPR18 next week! It will be eye opening! GANs to the power! techcrunch.com/2018/06/16/fac…

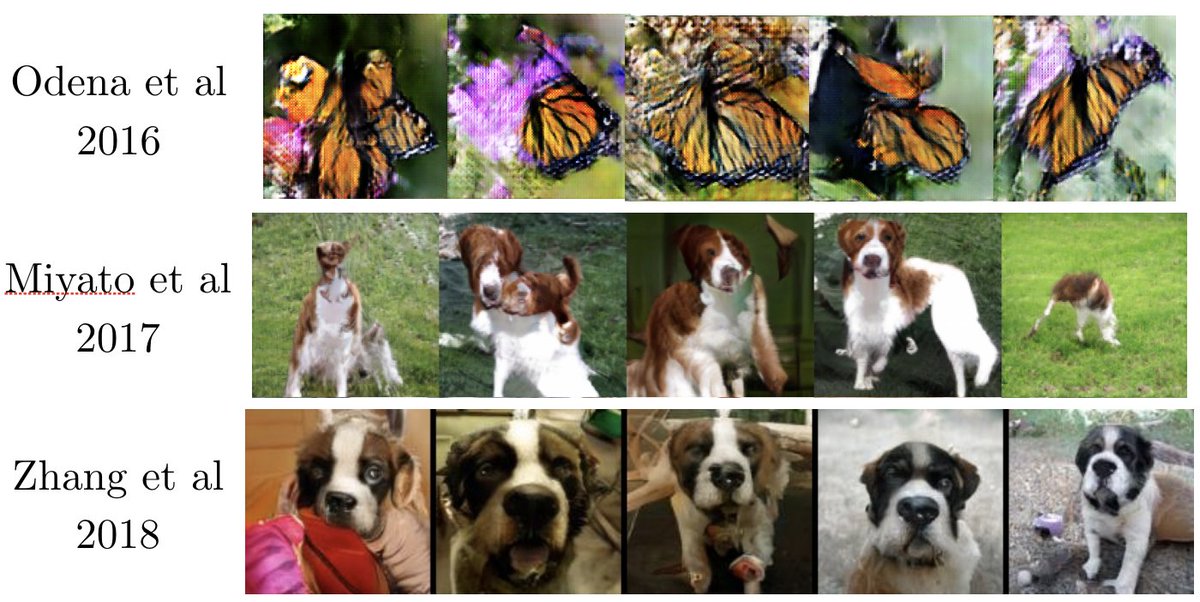

Two years of GAN progress on class-conditional ImageNet-128

My new paper on generating images from scene graphs using graph convolution and GANs is up on arXiv! To appear at CVPR2018, with @agrimgupta92 and @drfeifei arxiv.org/abs/1804.01622

Thread on how to review papers about generic improvements to GANs

Adversarial examples that fool both human and computer vision arxiv.org/abs/1802.08195

Our new paper: "Is Generator Conditioning Causally Related to GAN Performance?" TLDR: "Almost certainly" arxiv.org/abs/1802.08768

What's your motivation for training a generative model? Unsupervised representation learning? Computer vision? Compression? If you can answer that, you probably know what evaluation to use. Test generative models in applications, like the field used to.

Introducing exemplar GANs and a compelling use case: eye in-painting preserving the identity of the subject. #facebook #research #gan #inpainting #face #computervision arxiv.org/abs/1712.03999

An Early Overview of #ICLR2018 @ICLR2018 finally here! prlz77.github.io/iclr2018-stats Find the most exciting and the most controversial papers 😉

It has been a hot week for GANs! Here's a recap of new articles: 24 Nov - StarGANs arxiv.org/abs/1711.09020 28 Nov - Are GANs Created Equal? arxiv.org/abs/1711.10337 30 Nov - High-Resolution Image Synthesis with cGANs tcwang0509.github.io/pix2pixHD/

