iDSS Lab

@IDSSLab

iDSS Lab connects researchers and professionals working on machine learning solutions that support decision making

Successful data science projects are analytically sound, understandable to key decision makers, and fit existing organizational routines.

Transparency & explainability in AI aren’t enough. We make the case for responsiveness as the key to accountability in high-stakes AI systems. Joint work with Gijs van Maanen

💥In our first new post of 2025 @dakolkman & Gijs van Maanen argue that rather than transparency and explainability, responsiveness is key to accountability in AI decision making. #AIPolicy #DecisionMaking #Policymaking blogs.lse.ac.uk/impactofsocial…

📢 New paper in @PLOSONE! Can machine learning help us build better theories? Spoiler: Yes, it can! 🧵👇 journals.plos.org/plosone/articl…

🚨New paper 🚨 Sure—algorithms are far from objective. But what if the real issue is how they represent? 🤔 This was a question @maanengijsvan and I kept returning to during countless discussions. These conversations evolved into a manuscript: tandfonline.com/doi/metrics/10… 🧵

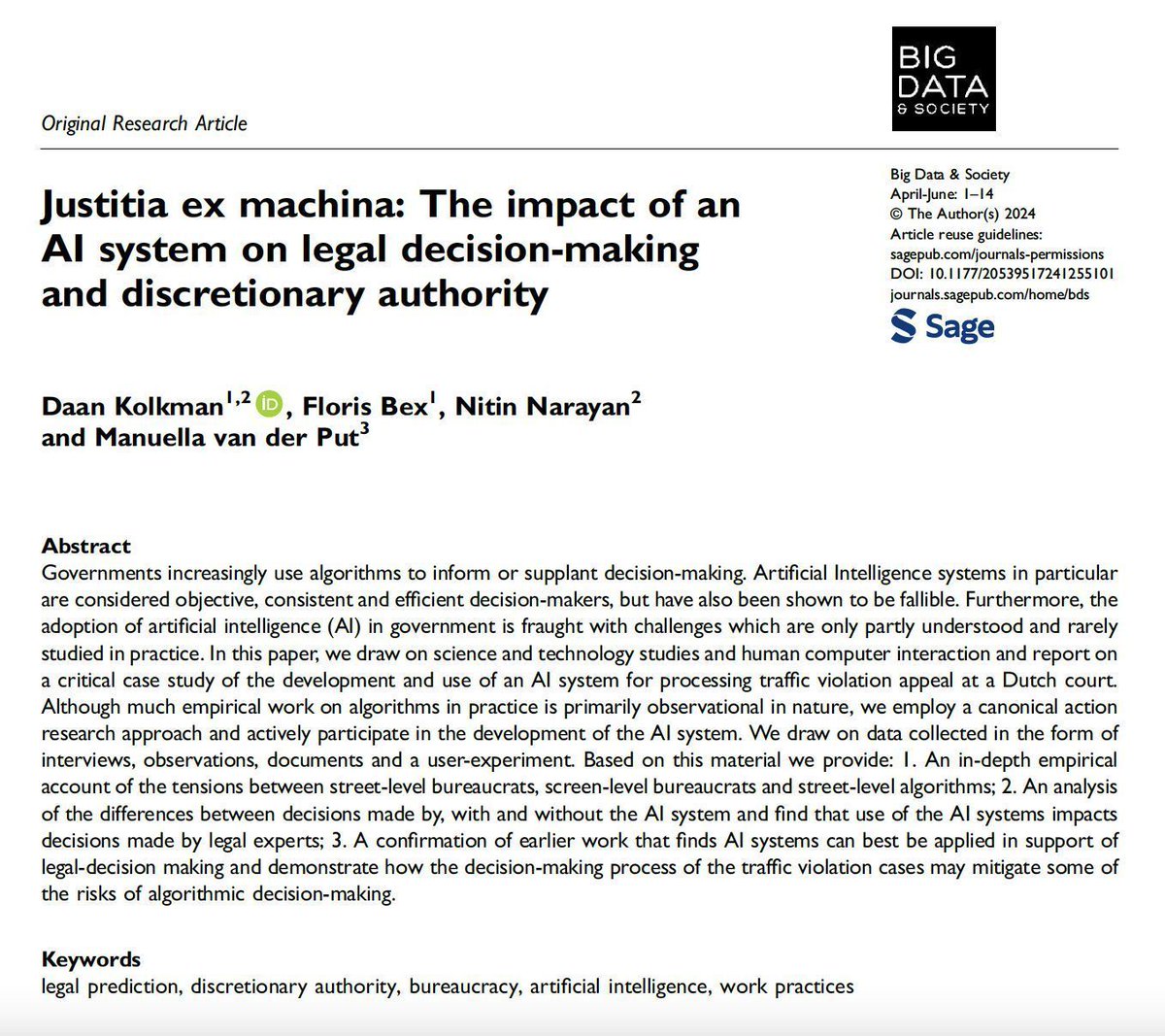

📣 New pub is out: “Justitia ex machina: The impact of an AI system on legal decision-making and discretionary authority” by Daan Kolkman (@dakolkman), Floris Bex (@florisbex), Nitin Narayan and Manuella van der Put. Read here 🔗 buff.ly/3RqxurP #LegalPrediction AI

📢New paper in @BigDataSoc: "Justitia ex machina: The impact of an AI system on legal decision-making and discretionary authority" with @florisbex, Nitin Narayan, and Manuella van der Put. doi.org/10.1177/205395… 👇 Some highlights:

journals.sagepub.com

Justitia ex machina: The impact of an AI system on legal decision-making and discretionary author...

Governments increasingly use algorithms to inform or supplant decision-making. Artificial Intelligence systems in particular are considered objective, consisten...

📢 Are you working on a (qualitative) case study of an algorithm used in government? We would love to hear from you at our #EASST4S2024 panel. Remember to submit by 12 February 2024!🗓️16-19 July in Amsterdam.

ChatGPT and spatial analysis for retail: A test using OpenStreetMap Points Of Interest and WorldPop socio-demographic data: linkedin.com/posts/thebigda…

So @mickjagger famously asked MC Escher to design the cover artwork for @RollingStones' Let it Bleed album. Escher declined and Robert Brownjohn designed the cover instead. But what if Escher had said yes? Let's find out by asking #openai's #dalle2 text-to-image model:

Netherlands Court of Audit (@Rekenkamer) finds that 6 out of 9 algorithms used by the Netherlands government do not meet basic requirements pertaining to accountability, privacy, governance, and impartiality. Just the tip of the iceberg? english.rekenkamer.nl/latest/news/20…

There is an enormous shortage of data scientists too, but they are also far from always put to good use. So how do you, as an employer, get value out of the data scientists you can find? AG Connect asked researcher @dakolkman of @jadatascience. #datascience agconnect.nl/artikel/zo-haa…

New Dutch government agreement stipulates the establishment of an algorithm watchdog. This watchdog is to uphold new (to be developed) regulation pertaining to transparency, discrimination, and bias of algorithms. Promising first step towards more algorithmic accountability. (1/2)

The digital society is not merely a conceptual challenge for humanities & social sciences. We are looking for contributions that address algorithmization & datafication in empirical & socially engaged ways & which provide opportunities for intervention: dataschool.nl/en/news/oa-boo…

A precedent for: Machine learning engineer arrested for training algorithm to throw ridiculous recidivism odds / unfair grades / fraud risk assessments / etc?

No apologies necessary, I don’t mind disagreeing with you on this. I’m quite sure programmers/data scientists distinguish between good and bad code/algorithms, independently of the impact of such ‘objects’.

No algorithmic accountability without a critical audience. 👇🏼

“F**k the algorithm”?: What the world can learn from the UK’s A-level grading fiasco @jeu1987 #algorithmicaccountability #policy #alevelsresults wp.me/p4m9em-ada

I was simultaneously appalled, confused, and intrigued by the A-level grading algorithm fiasco so I decided to do a write-up of the situation. Might be helpful for those also unfamiliar with the UK education and university admissions system: daankolkman.com/uncategorized/…

Incidentally, the UK Review of quality assurance of Government analytical models AND the UK guidance on producing quality analysis both prescribe external audits in cases of “high business risk”. So I wonder if anyone has come across evidence of such external review/audit?

The recent #alevels #omnishambles and attempts at algorithmic marking remind me of this recent @LSEImpactBlog post by @jeu1987 and the implicit trust placed in algorithms for policymaking. blogs.lse.ac.uk/impactofsocial…

Is public accountability possible in algorithmic policymaking? The case for a public watchdog #Algorithms #Policy @jeu1987 wp.me/p4m9em-aa9

