JaneDing

@JaneDing_AI

Data Science junior @Umich · Starting research in vision-language models and pragmatic generation · Exploring how AI communicates like humans

🔎 New Toolkit Released: VLM-Lens 🔎 github.com/compling-wat/v… In the past 10 months, our lab, together with collaborators @ziqiao_ma @SLED_AI @jzhou_jz, developed a simple and streamlined interpretability toolkit for VLMs supporting 16 state-of-the-art models across the board!

Over the past few months, I’ve heard the same complaint from nearly every collaborator working on computational cogsci + behavioral and mechanistic interpretability: “Open-source VLMs are a pain to run, let alone analyze.” We finally decided to do something about it (thanks…

Excited to attend #COLM2025 in Montréal this week! I’ll be presenting our paper "Vision-Language Models Are Not Pragmatically Competent in Referring Expression Generation", in Poster Session 4. Looking forward to meeting many of you there! ☺️ vlm-reg.github.io

Regrettably can’t attend #COLM2025 due to deadlines, but @JaneDing_AI and @SLED_AI will be presenting our work. :) @JaneDing_AI is an exceptional undergraduate researcher and a great collaborator! Go meet her at COLM if you’re curious about her work on mechanistic…

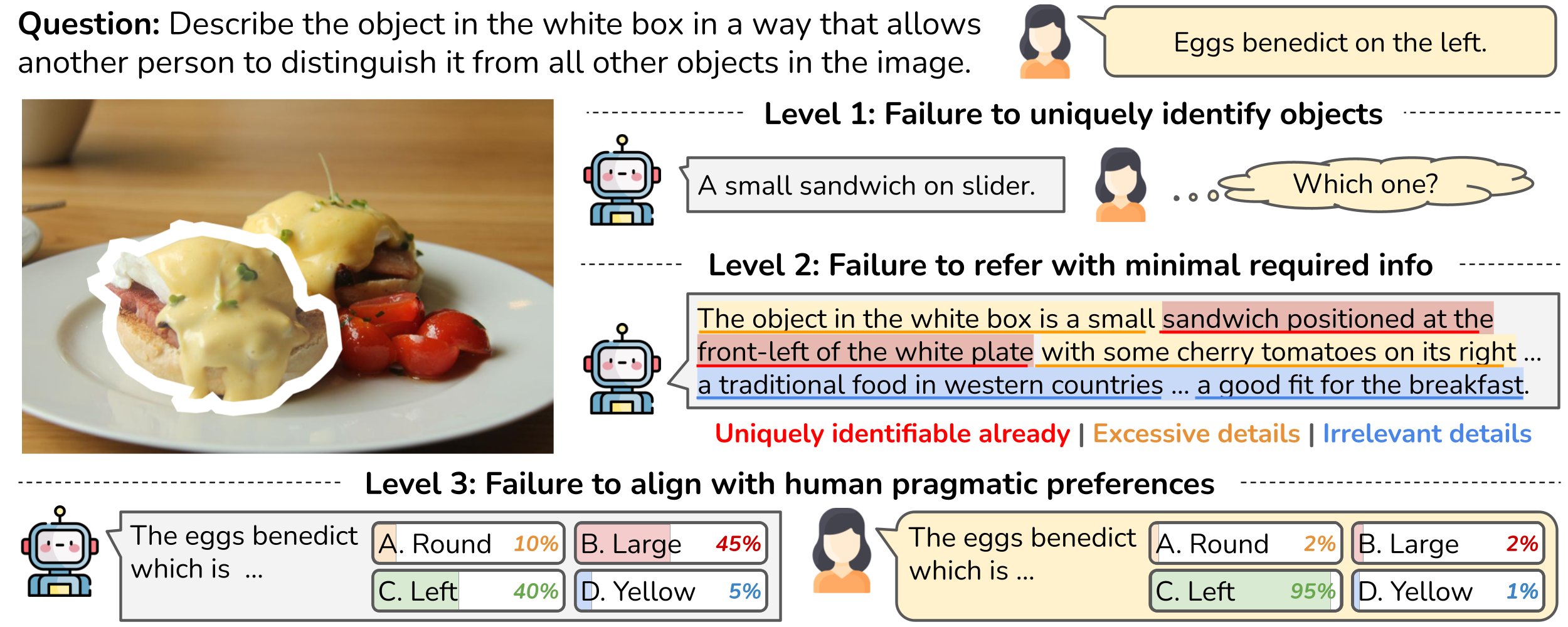

Vision-Language Models (VLMs) can describe the environment, but can they refer within it? Our findings reveal a critical gap: VLMs fall short of pragmatic optimality. We identify 3 key failures of pragmatic competence in referring expression generation with VLMs: (1) cannot…

+1 on this! Mixed-effects models are such an underrated protocol for behavioral analysis that AI researchers often overlook. Behavioral data are almost never independent: clustering, repeated measures, and hierarchical structures abound. Mixed-effects models account for these…

I'd highlight the point on generalization: to make a "poor generalization" argument, we need systematic evaluations. A promising protocol is prompting multiple LMs and treating each as an individual in mixed-effect models. arxiv.org/pdf/2502.09589 w/ @tom_yixuan_wang (2/n)

Our study on pragmatic generation is accepted to #COLM2025! Missed the first COLM last year (no suitable ongoing project at the time😅). Heard it’s a great place to connect with LM folks, excited to join for round two finally.

Vision-Language Models (VLMs) can describe the environment, but can they refer within it? Our findings reveal a critical gap: VLMs fall short of pragmatic optimality. We identify 3 key failures of pragmatic competence in referring expression generation with VLMs: (1) cannot…

Thrilled to finally share SimWorld — the result of over a year’s work of the team. Simulators have been foundational for embodied AI research (I’ve worked with AI2Thor, CARLA, Genesis…), and SimWorld pushes this further with photorealistic Unreal-based rendering and scalable…

P.S., We are building @GrowAiLikeChild, an open-source community uniting researchers from computer science, cognitive science, psychology, linguistics, philosophy, and beyond. Instead of putting growing up and scaling up into opposite camps, let's build and evaluate human-like AI…

Vision-Language Models (VLMs) can describe the environment, but can they refer within it? Our findings reveal a critical gap: VLMs fall short of pragmatic optimality. We identify 3 key failures of pragmatic competence in referring expression generation with VLMs: (1) cannot…

United States Tendenze

- 1. Mamdani 283K posts

- 2. Kandi 4,738 posts

- 3. Mama Joyce 1,294 posts

- 4. #ItsGoodToBeRight N/A

- 5. #HMGxBO7Sweeps 1,494 posts

- 6. Egg Bowl 2,191 posts

- 7. Brandon Aiyuk N/A

- 8. Chance Moore N/A

- 9. #BY9sweepstakes N/A

- 10. Ukraine 598K posts

- 11. Adolis Garcia 1,954 posts

- 12. #AleMeRepresenta N/A

- 13. Putin 209K posts

- 14. Richie Saunders N/A

- 15. Wisconsin 8,411 posts

- 16. Joshua 40.9K posts

- 17. El Bombi N/A

- 18. #DanSeats N/A

- 19. Nolan Jones N/A

- 20. Kiffin 11K posts

Something went wrong.

Something went wrong.

![fredahshi's tweet card. [EMNLP 2025 Demo] Extracting internal representations from vision-language models. Beta version. - compling-wat/vlm-lens](https://pbs.twimg.com/card_img/1989526674870829056/QrZjhZzf?format=jpg&name=orig)