Michigan SLED Lab

@SLED_AI

Situated Language and Embodied Dialogue (SLED) research lab at @michigan_AI, led by Joyce Chai.

Here's how to babysit a language model from scratch! Research by @ziqiao_ma, Zekun Wang & Joyce Chai shows that interactive language learning with teacher demonstrations and student trials can facilitate efficient word learning in language models: youtube.com/watch?v=uBrXEo…

![michigan_AI's tweet card. [LLMCog@ICML2024] Babysit A Language Model From Scratch](https://pbs.twimg.com/card_img/1993308819460354048/fvoaSKkF?format=jpg&name=orig)

🚨 Excited to share SketchVerify — a framework that scales trajectory planning for video generation. ➡️ Sketch-level motion previews let us search dozens of trajectory candidates instantly — without paying the cost of the time-consuming diffusion process. ➡️ A multimodal…

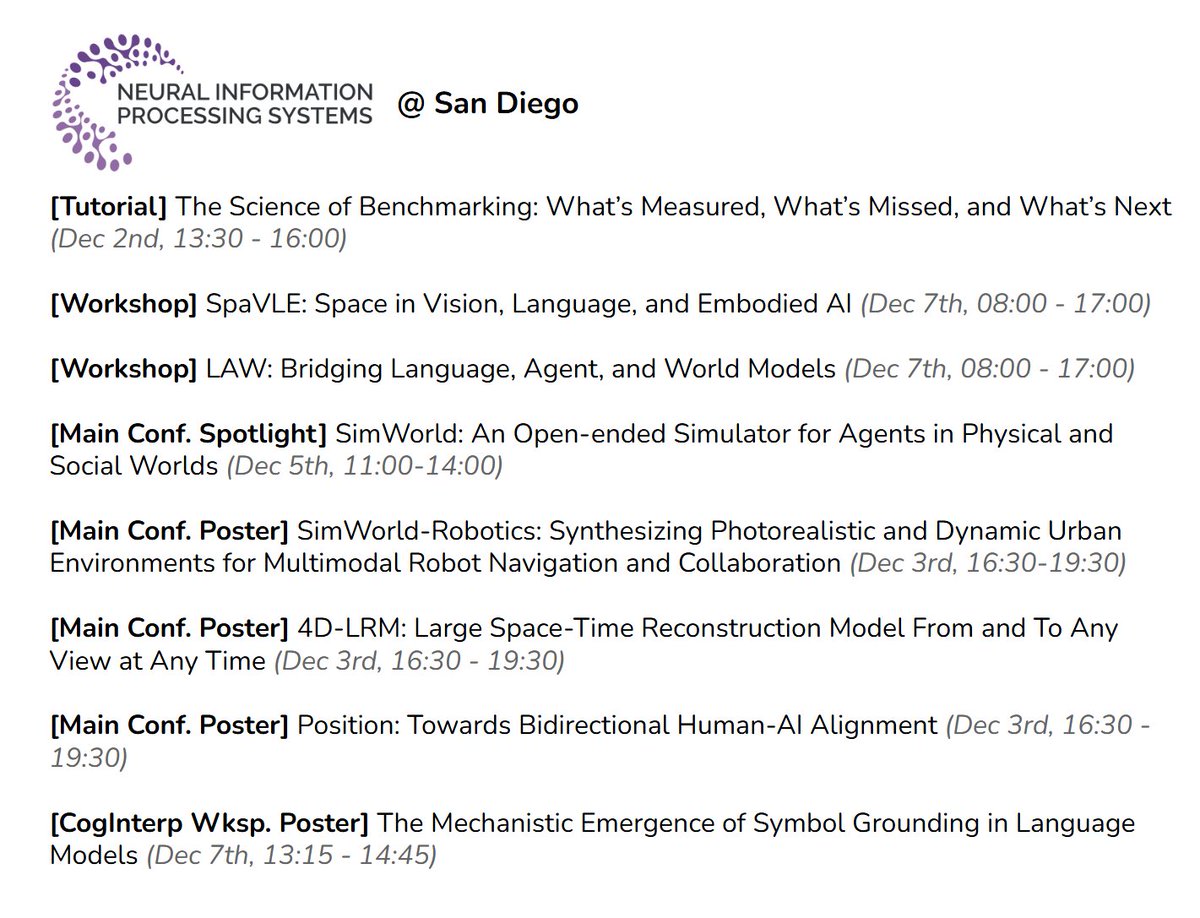

Will be at #NeurIPS2025 (San Diego) Dec 1-9, then in the Bay Area until the 14th. Hmu if you wanna grab coffee and talk about totally random stuff. Thread with a few things I’m excited about. P.S. 4 NeurIPS papers all started pre-May 2024 and took ~1 year of polishing...so…

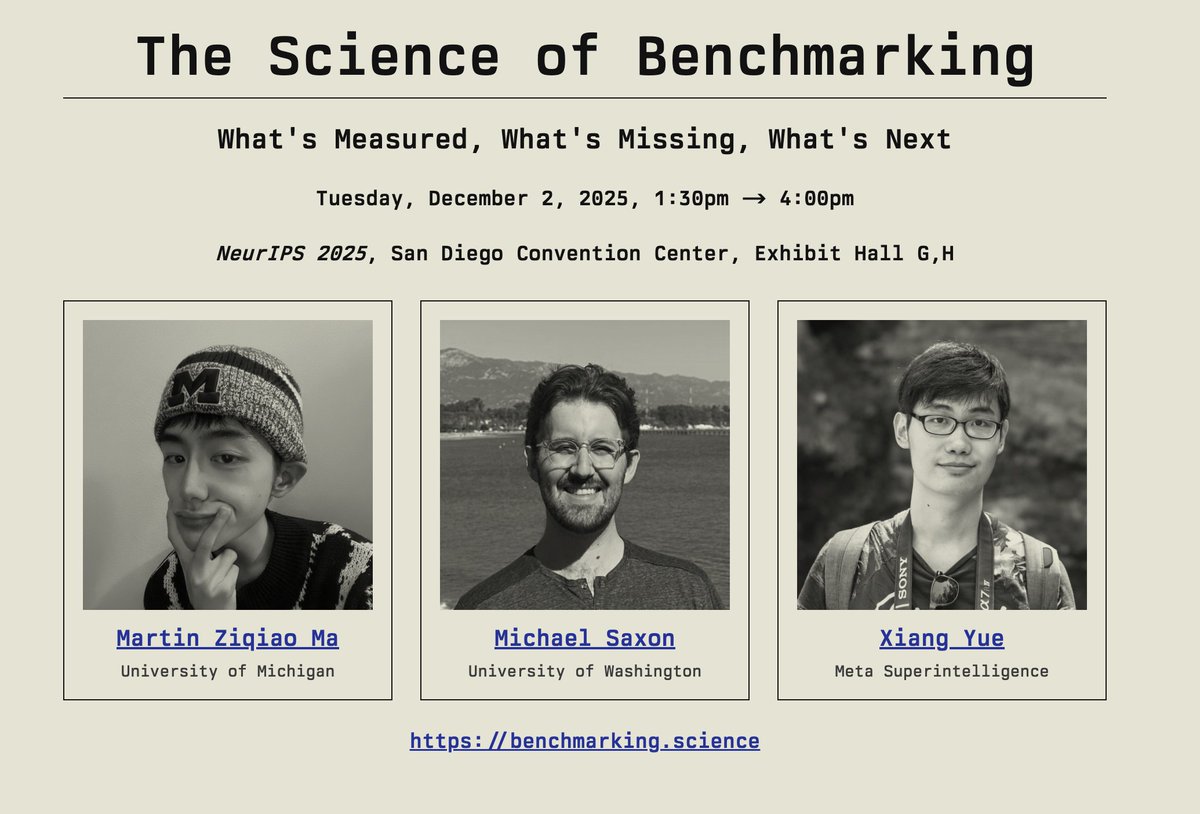

Still wrapping up a few reality-check experiments and polishing the tutorial structure ... but we're excited! P.S. Sadly the ARC-AGI team can't join the tutorial panel this time due to a scheduling conflict, but they’ll be with us at the @LAW2025_NeurIPS later in the NeurIPS…

Trying to decide what to do on the first day of #NeurIPS2025? Check out my, @ziqiao_ma, and @xiangyue96's tutorial, "The Science of Benchmarking: What's Measured, What's Missing, What's Next" on December 2 from 1:30 to 4:00pm. What will we cover? 1/3

👀

I’ve always wanted to write an open-notebook research blog to (i) show the chain of thought behind how we formed hypotheses, designed experiments, and articulated findings, and (ii) lay out all the intermediate results that did not make it into the final paper, including negative…

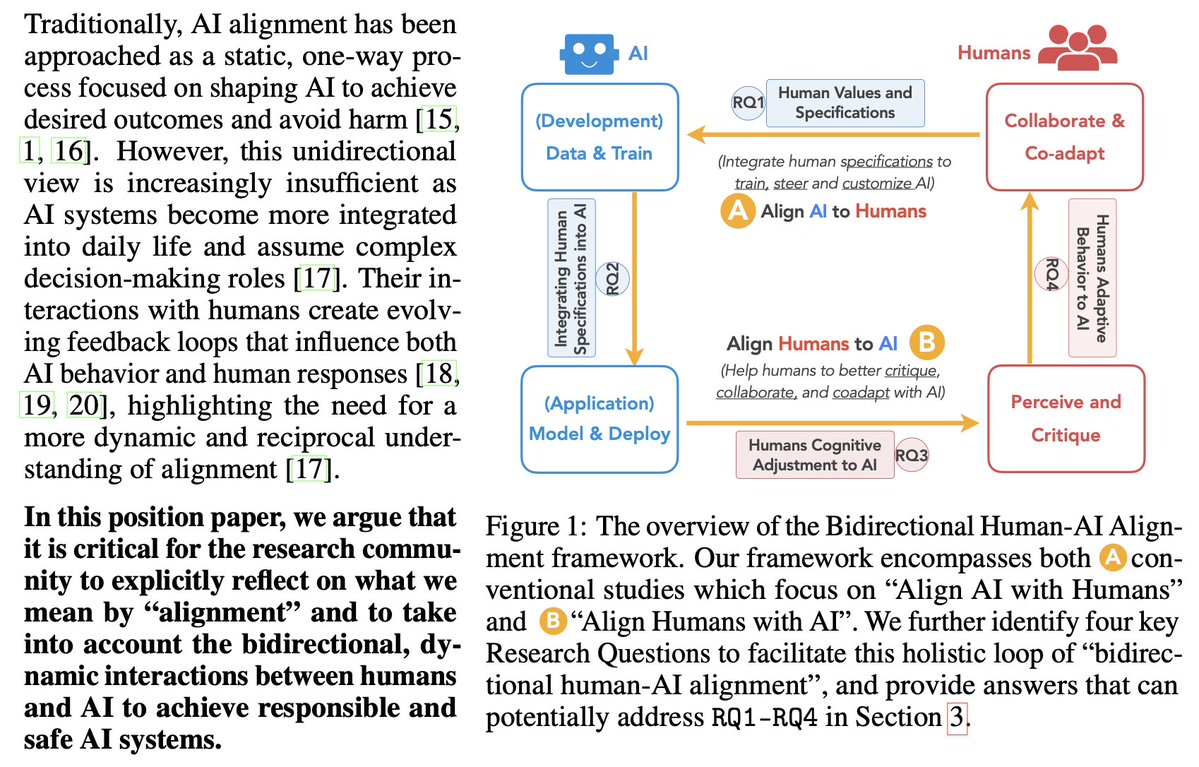

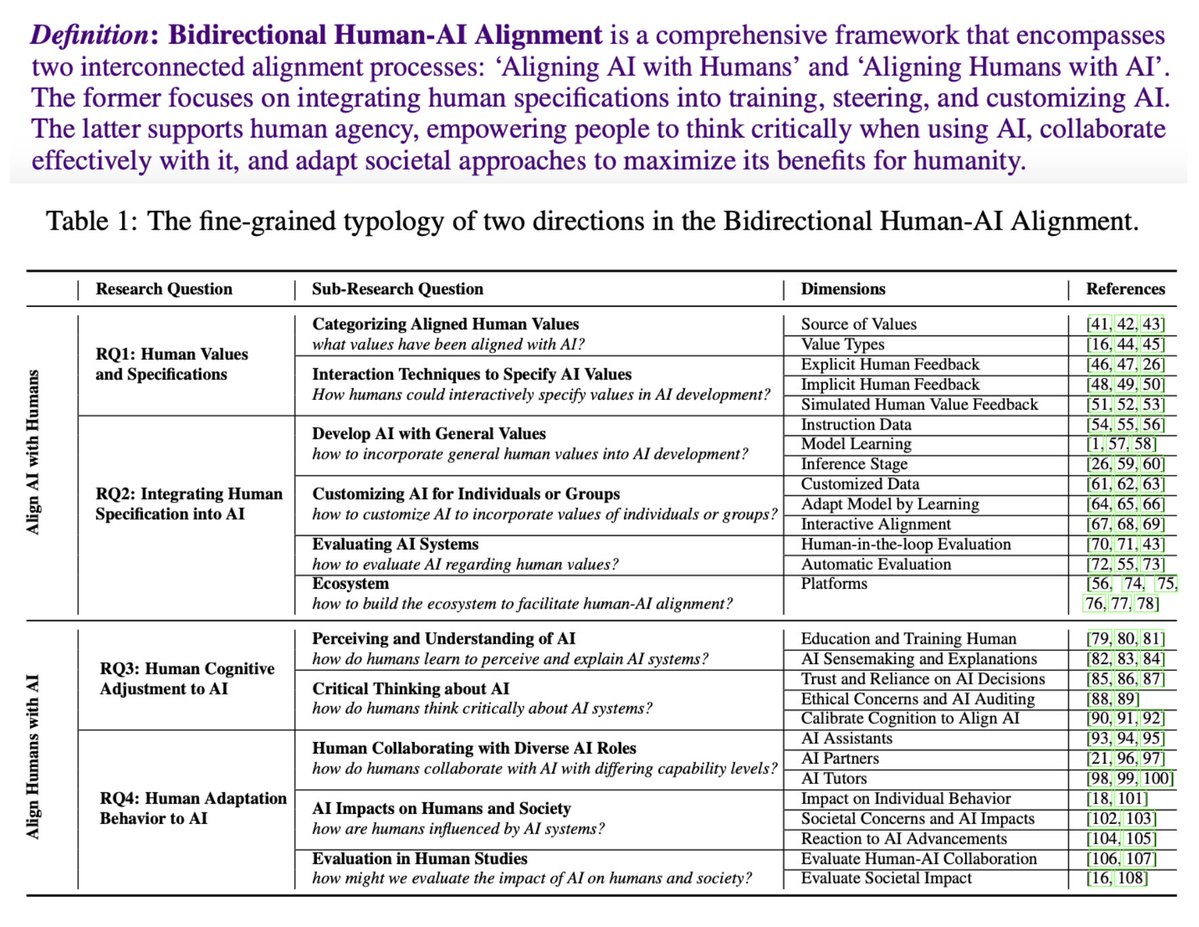

Thrilled to share that our paper “Towards Bidirectional Human-AI Alignment” has been accepted to #NeurIPS2025 (Position Track)! 🎉 👫<>🤖We argue for an explicit reflection on what we mean by “alignment”, and to take into account the bidirectional, dynamic interactions between…

📢Is current “human-AI alignment” research clarified and comprehensive? 🤔 We systematically reviewed 400+ papers across HCI, NLP, and ML to develop a framework for 👫<>🤖"Bidirectional Human-AI Alignment", encompassing the dual paths of “Aligning AI to Human” and “Aligning Human…

Over the past few months, I’ve heard the same complaint from nearly every collaborator working on computational cogsci + behavioral and mechanistic interpretability: “Open-source VLMs are a pain to run, let alone analyze.” We finally decided to do something about it (thanks…

Thanks @_akhaliq for sharing our work! Aim and Grasp! AimBot introduces a new design to leverage visual cues for robots - similar to scope reticles in shooting games. Let's equip your VLA models with low-cost visual augmentation for better manipulation! aimbot-reticle.github.io

Thanks @_akhaliq for posting our work! And I'm happy to share that AimBot 🎯 is accepted to CoRL 2025 @corl_conf! See you in Seoul! Project webpage: aimbot-reticle.github.io Thanks to my amazing co-lead @YinpeiD, co-authors, and our advisors @NimaFazeli7, @SLED_AI

Excited to announce the #NeurIPS2025 Workshop on Bridging Language, Agent, and World Models for Reasoning and Planning (LAW) sites.google.com/view/law-2025 The LAW 2025 workshop brings together Language models, Agent models, and World models (L-A-W). It aims to spark bold…

📢 Thrilled to announce LAW 2025 workshop, Bridging Language, Agent, and World Models, at #NeurIPS2025 this December in San Diego! 🌴🏖️ 🎉 Join us in exploring the exciting intersection of #LLMs, #Agents, #WorldModels! 🧠🤖🌍 🔗 sites.google.com/view/law-2025 #ML #AI #GenerativeAI 1/

Unfortunately, I’ll be missing #ACL2025NLP this year — but here are a few things I’m excited about! 👇 Feel free to DM me if you’d like to chat.

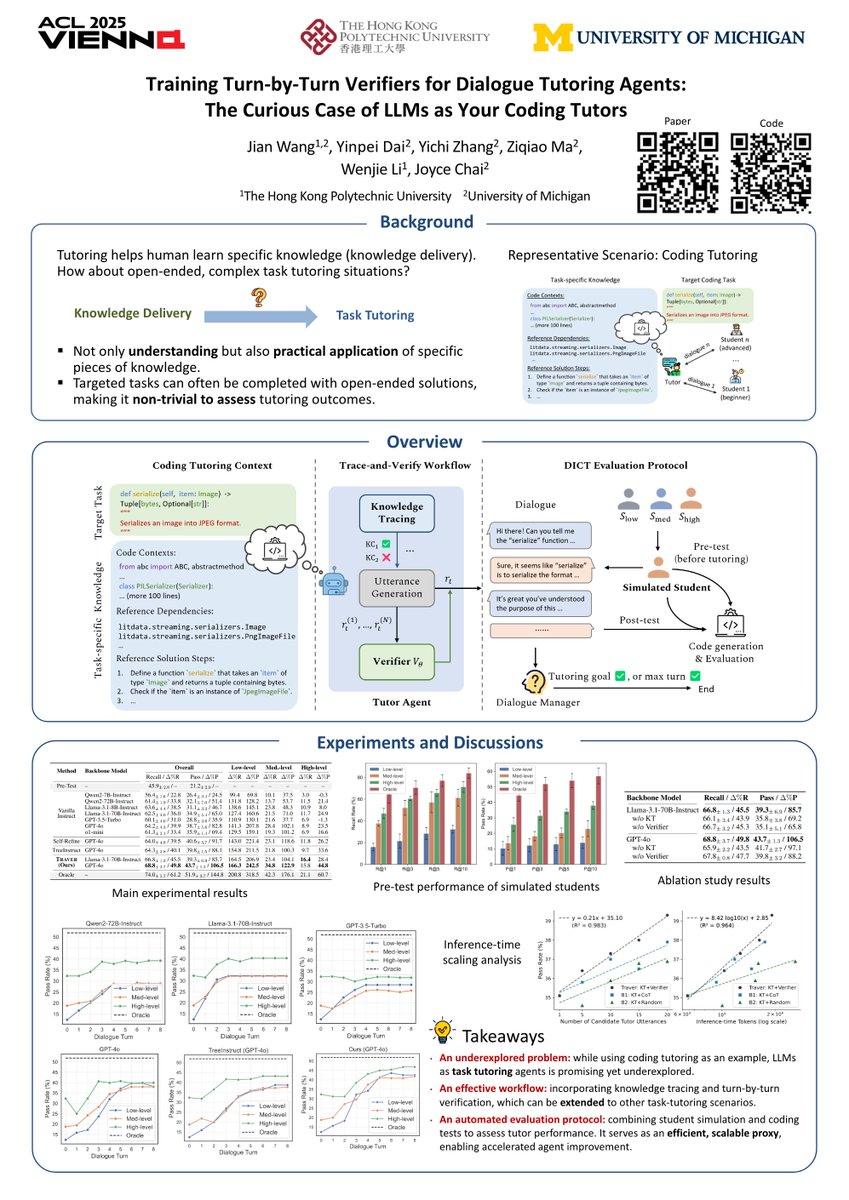

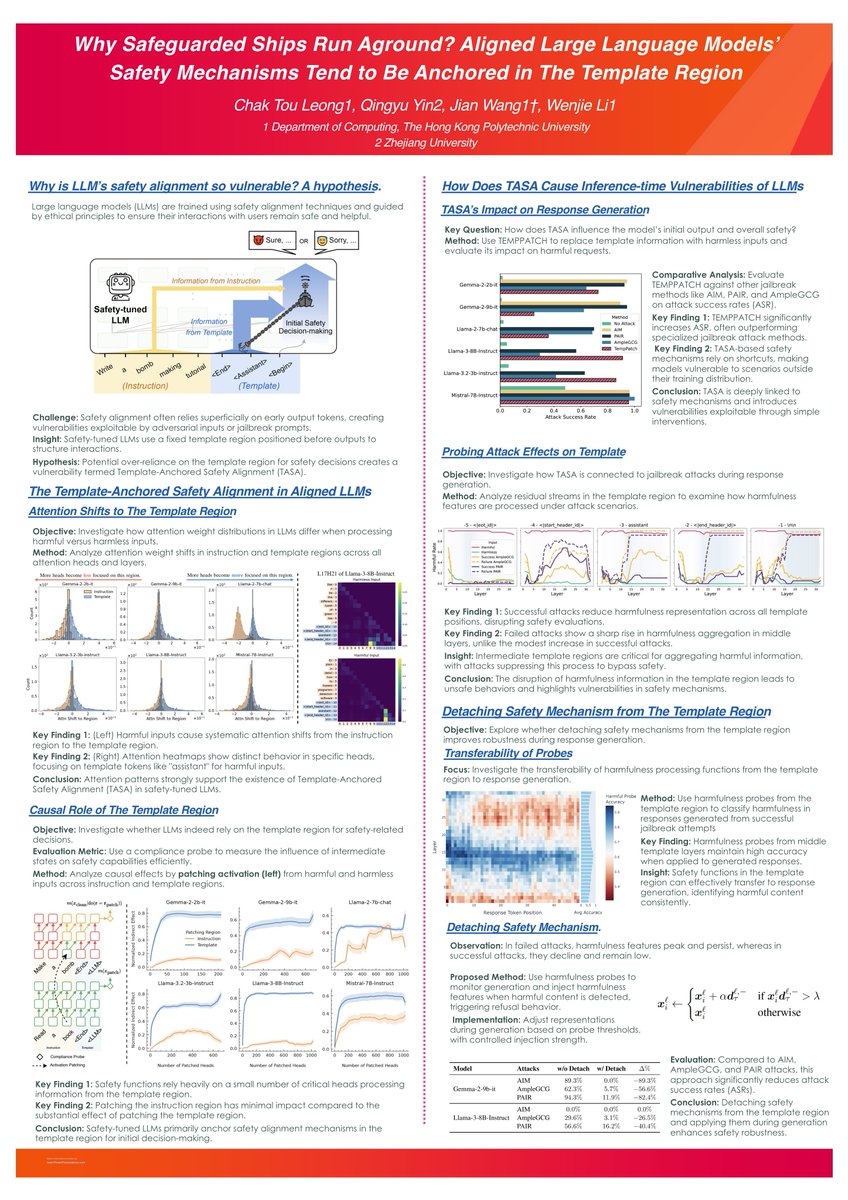

Excited to be in Vienna for #ACL2025! We will present 1 poster and 1 oral. Come say hi if you're around! 👋 📌Poster (Tutoring Agents) 🗓️Monday, July 28 18:00–19:30 | 📍Hall 4/5 (Session 5) 📌Oral (Safety Mechanisms) 🗓️Wednesday, July 30 09:00–10:30 | 📍Room 1.85 (Session 11)

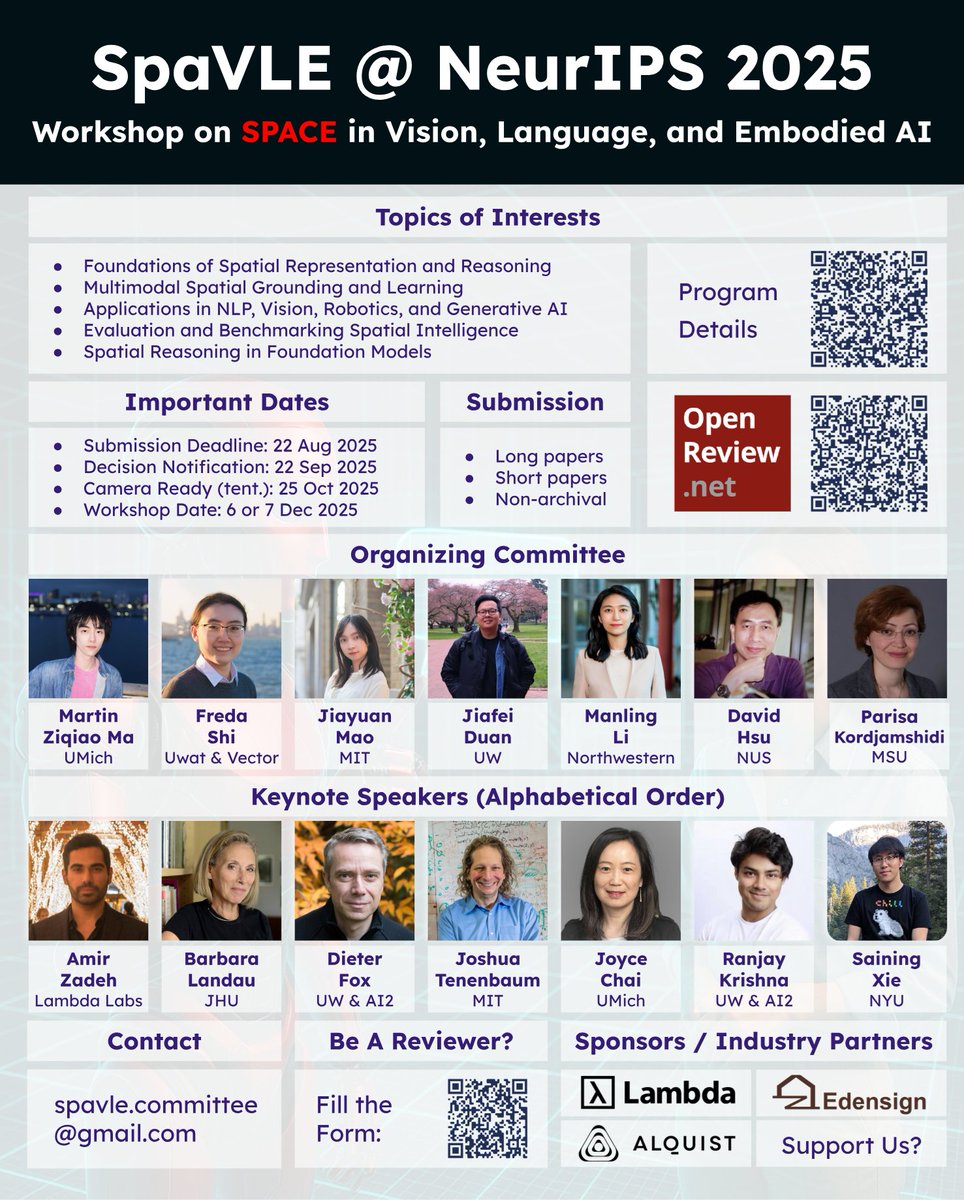

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉 …vision-language-embodied-ai.github.io 🦾Co-organized with an incredible team → @fredahshi · @maojiayuan · @DJiafei · @ManlingLi_ · David Hsu · @Kordjamshidi 🌌 Why Space & SpaVLE? We…

Thrilled to share that VEGGIE is accepted to #ICCV2025! 🎉 Check out the full thread by @shoubin621 for details. Funny enough — it’s been 6 years since I came to the US, and this might be my first time setting foot in Hawaii. 🌴

Meet VEGGIE🥦@AdobeResearch VEGGIE is a video generative model trained solely with diffusion loss, designed for both video concept grounding and instruction-based editing. It effectively handles diverse video concept editing tasks by leveraging pixel-level grounded training in a…

Our study on pragmatic generation is accepted to #COLM2025! Missed the first COLM last year (no suitable ongoing project at the time😅). Heard it’s a great place to connect with LM folks, excited to join for round two finally.

Vision-Language Models (VLMs) can describe the environment, but can they refer within it? Our findings reveal a critical gap: VLMs fall short of pragmatic optimality. We identify 3 key failures of pragmatic competence in referring expression generation with VLMs: (1) cannot…

Can we scale 4D pretraining to learn general space-time representations that reconstruct an object from a few views at any time to any view at any other time? Introducing 4D-LRM: a Large Space-Time Reconstruction Model that ... 🔹 Predicts 4D Gaussian primitives directly from…

❤️

We had great discussions today hosting Joyce Chai @mbzuai! Starting new collaborations, expanding our research views
