Prahitha Movva @COLM2025

@PrahithaM

MSCS @UMassAmherst, Community Lead @cohere

You might like

Why don’t VLAs generalize as well as their VLM counterparts? One culprit: catastrophic forgetting during fine-tuning. 🧠 We introduce VLM2VLA: a training paradigm that preserves the VLM capabilities while teaching robotic control. vlm2vla.github.io 🧵

This paper by Ivan Lee (@ivn1e) & @BergKirkpatrick was great! Best thing I’ve seen at #COLM2025 so far! Readability ≠ Learnability: Rethinking the Role of Simplicity in Training Small Language Models openreview.net/forum?id=AFMGb…

Reinforcement Learning (RL) has long been the dominant method for fine-tuning, powering many state-of-the-art LLMs. Methods like PPO and GRPO explore in action space. But can we instead explore directly in parameter space? YES we can. We propose a scalable framework for…

Headed to COLM this week! I’ll be presenting at the NLP4Democracy Workshop and would love to connect over coffee to chat about societal impact of AI, alignment, or AI policy. Also, currently on the job market, always up for chats about research or opportunities! #COLM2025

At @ChandarLab, we are happy to announce the third edition of our assistance program to provide feedback for members of communities underrepresented in AI who want to apply to high-profile graduate programs. Want feedback? Details: chandar-lab.github.io/grad_app/. Deadline: Nov 01! cc:…

Over the past few months, I’ve heard the same complaint from nearly every collaborator working on computational cogsci + behavioral and mechanistic interpretability: “Open-source VLMs are a pain to run, let alone analyze.” We finally decided to do something about it (thanks…

It is PhD application season again 🍂 For those looking to do a PhD in AI, these are some useful resources 🤖: 1. Examples of statements of purpose (SOPs) for computer science PhD programs: cs-sop.org [1/4]

Smol win, just realized the work I published last year got its first citation :) I really want to contribute to the world of Mechanistic Interpretability, and the thought of being cited along with the giants of the field, for a field of work I am so passionate about, made my day!…

It’s PhD / grad school application season again 🌱 I’ve been getting reach-outs about SoPs, research fit, “should I do a PhD at all??” Here's the dump of my honest thoughts from my application experience - what worked for me, and how I decided if a PhD was right for me. Wrote it…

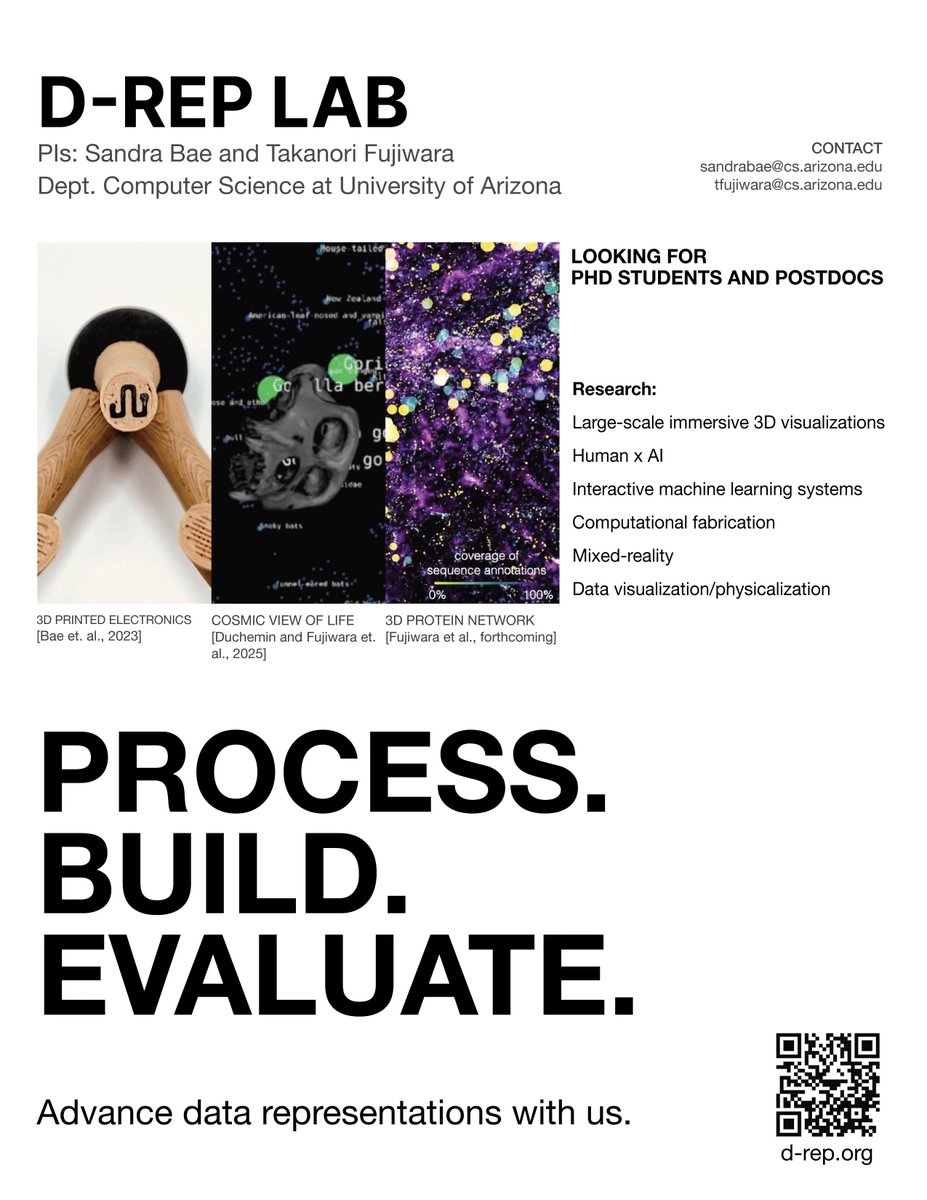

@ folks seeking PhD & post-doc opportunities! My friends @sandrabae_sb and @TakanoriFW are starting a new lab! both are such talented, thoughtful researchers and I know they'll be generous and supportive advisors! students will be so lucky to get the chance to work with them!

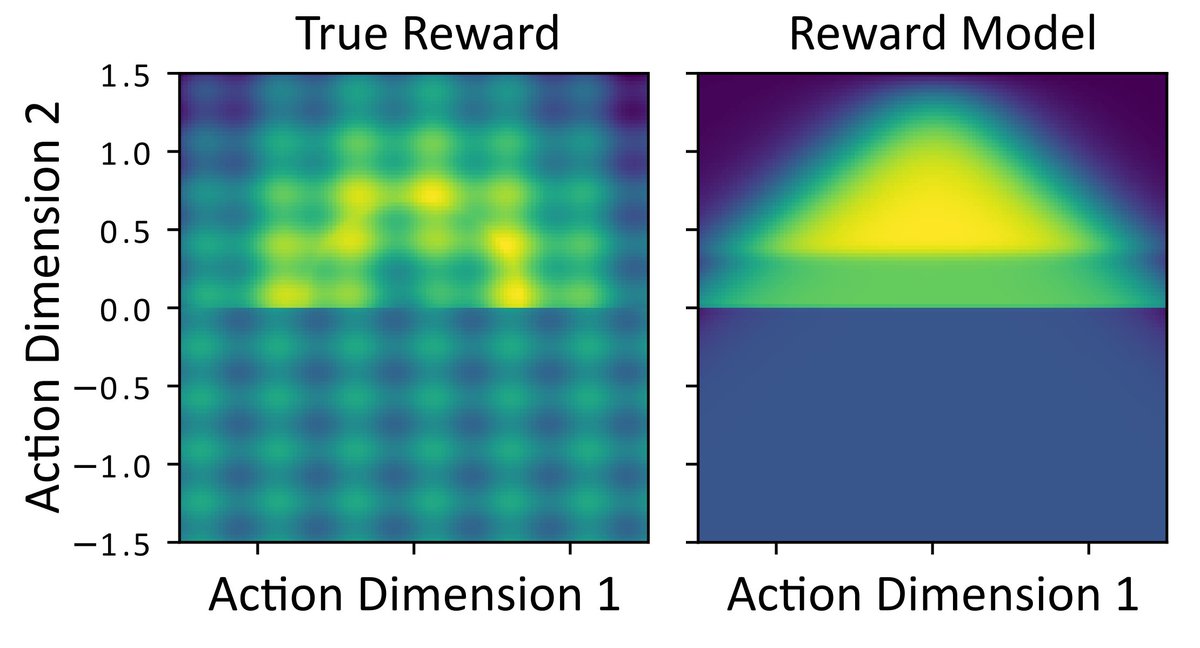

Reward models do not have the capacity to fully capture human preferences. If they can't represent human preferences, how can we hope to use them to align a language model? In our #COLM2025 "Off-Policy Corrected Reward Modeling for RLHF", we investigate this issue 🧵

Who is going to be at #COLM2025? I want to draw your attention to a COLM paper by my student @sheridan_feucht that has totally changed the way I think and teach about LLM representations. The work is worth knowing. And you can meet Sheridan at COLM, Oct 7!

[📄] Are LLMs mindless token-shifters, or do they build meaningful representations of language? We study how LLMs copy text in-context, and physically separate out two types of induction heads: token heads, which copy literal tokens, and concept heads, which copy word meanings.

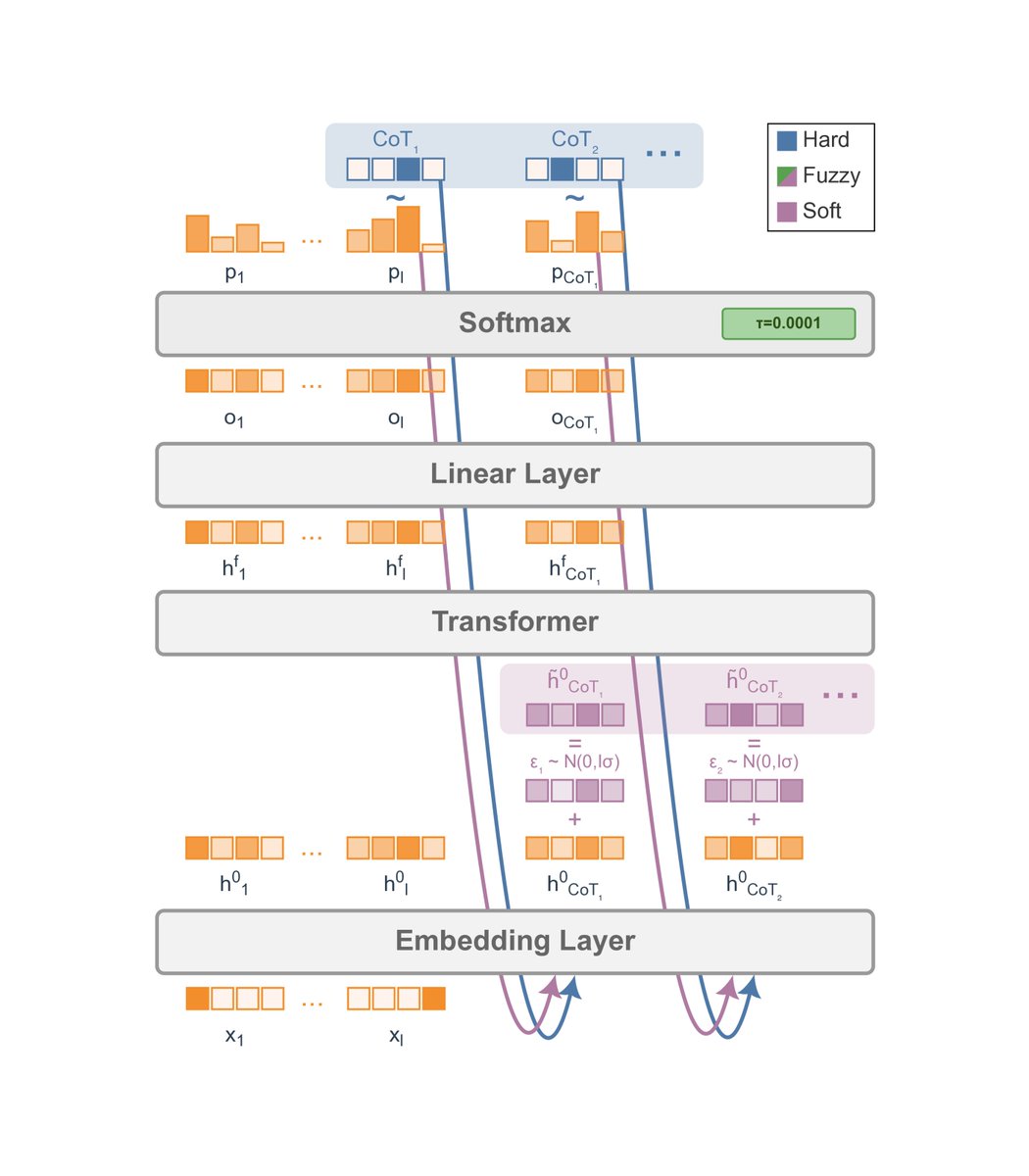

🔥 New preprint: Soft Tokens, Hard Truths. Introduces the first scalable continuous-token RL method for LLMs - no reference CoTs needed; scales to hundreds of thought tokens. Best to train soft, infer hard! Pass@1 parity ⚖️, Pass@32 gains 📈 & better robustness 🛡️ vs. hard CoT 1/🧵

Are there conceptual directions in VLMs that transcend modality? Check out our COLM spotlight🔦 paper! We analyze how linear concepts interact with multimodality in VLM embeddings using SAEs with @Huangyu58589918, @napoolar, @ShamKakade6 and Stephanie Gil arxiv.org/abs/2504.11695

We’ve received A LOT OF submissions this year 🤯🤯 and are excited to see so much interest! To ensure high-quality review, we are looking for more dedicated reviewers. If you'd like to help, please sign up here docs.google.com/forms/d/e/1FAI…

✨ Internship Opportunity @ Google Research ✨ We are seeking a self-motivated student researcher to join our team at Google Research starting around January 2026. 🚀 In this role, you will contribute to research projects advancing agentic LLMs through tool use and RL, with the…

On my way to #UIST2025, kicking off strong with a 16 hour+ flight due to a refueling stop. ✈️ In Seoul until 28th then Busan. HCI friends, lmk if you’d like to meet up! We are also hiring a PhD student for projects around human-AI grounding —will post this again closer to conf.

In today's modern AI world I sometimes wonder if "research scientist or engineer" is the right dichotomy anymore. I'm not convinced this is the right framework anymore. I think it should be a slider of a single factor called "creativity" and that's it. It's also not exactly a…

I'm looking for an informal PhD supervisor in LLMs/post-training — any recommendations? My supervisor is leaving academia & the rest of the dep't doesn't work on LLMs, so I'm hoping to find someone external to collaborate with More info 👇, RTs appreciated! 🙏

Our ML Industry program is excited to host @psssnikhil1, Senior ML Engineer at Adobe for a session on "Crafting a Successful AI Career & Transitioning Research into Scalable AI Products." Thanks to @__Vaibhavi, @arya_suneesh and @PrahithaM for organizing this event ✨ Learn…

