OpenChat

@OpenChatDev

Advancing Open Source LLMs with Mixed Quality Data through offline RL-inspired C-RLFT. Project Lead: Guan Wang, @AlpayAriyak

🚀Introducing OpenChat 3.6 🌟Surpassed official Llama3-Instruct—with 1-2M synthetic data compared to ~10M human labels 🤫GPTs are close to limits—excel at generation but fall short at complex tasks 🎯We are training next gen—capable of deterministic reasoning and planning 🔗…

Will Sudoku become the MNIST for reasoning? Simple rules, clear structure, unique solutions—yet surprisingly challenging for modern LLMs, often requiring explicit trial-and-error to solve. huggingface.co/datasets/sapie…

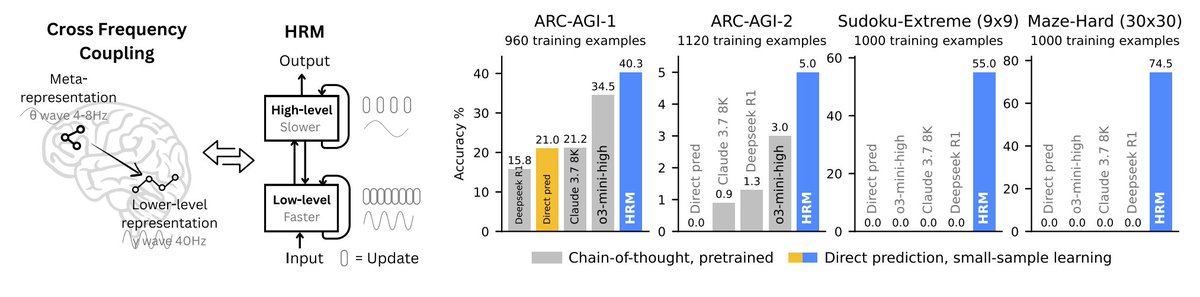

🚀Introducing Hierarchical Reasoning Model🧠🤖 Inspired by brain's hierarchical processing, HRM delivers unprecedented reasoning power on complex tasks like ARC-AGI and expert-level Sudoku using just 1k examples, no pretraining or CoT! Unlock next AI breakthrough with…

Thrilled to see RSP featured at AAAI'25! This pioneering concept was a key inspiration for developing OpenChat! 🚀 #AI #AAAI25

🚨Recursive Skip-Step Planning (RSP) Relying on larger, expressive models for sequential decision-making has recently become a popular choice, but are they truly necessary? Can we replace these heavy models? Yes—RSP empowers shallow MLPs to excel in long-horizon tasks!🧵(1/n)

skronge bones in that one 🔍 excellent job! a 7b model out cracking gpt4 turbo and gpt4o and claude 3 sonnet!

🚀Excited to share our Storm-7B🌪️. This model achieves a 50.5% length-controlled win rate against GPT-4 Preview, making it the first open-source model to match GPT-4 Preview on AlpacaEval 2.0. 📄arxiv.org/pdf/2406.11817 🤗huggingface.co/jieliu/Storm-7B

🚀 The World's First Gemma fine-tune based on openchat-3.5-0106 data and method (C-RLFT). Almost the same performance as the Mistral-based version. 6T tokens = secret recipe? HuggingFace: huggingface.co/openchat/openc…

🚀Kudos to @huggingface ! OpenChat-3.5 Update 0106 has landed on HuggingChat & Spaces! Explore now! Experience open-source AI at ChatGPT & Grok level! 🤗 HuggingChat: huggingface.co/chat 🌌 Spaces: huggingface.co/spaces/opencha… 🖥️ OpenChat UI: openchat.team

🚀Announcing OpenChat-3.5 Update 0106: World's Best Open Source 7B LLM! Experience ChatGPT & Grok-level AI locally 💿! Surpassing Grok-0 (33B) across all 4 benchmarks and Grok-1 (???B) on average and 3/4 benchmarks 🔥. 🎯 This update mainly enhanced…

We achieved almost ideal MoE fine-tuning performance (equivalent to a dense model with the same active parameters). <10% overhead
