Hon. Elizabeth Edwards

@RepEEdwards

Former 2-term NH State Representative, 2014-2018. RTs of content are typically endorsements of most concepts contained in that content, not the content creators

Thread: A common mistake I see, when people first get involved in politics, is they first pick the party that they think better aligns with their views (or the party chosen by most people around them) then they follow that party's lead on everything.

New Anthropic research: Natural emergent misalignment from reward hacking in production RL. “Reward hacking” is where models learn to cheat on tasks they’re given during training. Our new study finds that the consequences of reward hacking, if unmitigated, can be very serious.

Part of my job on Anthropic’s Alignment Stress-Testing Team is to write internal reviews of our RSP activities, acting as a “second line of defense” for safety. Today, we’re publishing one of our reviews for the first time alongside the pilot Sabotage Risk Report.

🌱⚠️ weeds-ey but important milestone ⚠️🌱 This is a first concrete example of the kind of analysis, reporting, and accountability that we’re aiming for as part of our Responsible Scaling Policy commitments on misalignment.

Once we had a stable draft, we brought in our internal stress-testing team as well as an independent external team at @METR, for both feedback and formal reviews of the final product. Both teams were great to work with, and their feedback very materially improved our work here!

If you’re at another developer and you’re considering doing something similar, leave yourself plenty of time for your first try!

Technological Optimism and Appropriate Fear - an essay where I grapple with how I feel about the continued steady march towards powerful AI systems. The world will bend around AI akin to how a black hole pulls and bends everything around itself.

🧵 Haiku 4.5 🧵 Looking at the alignment evidence, Haiku is similar to Sonnet: Very safe, though often eval-aware. I think the most interesting alignment content in the system card is about reasoning faithfulness…

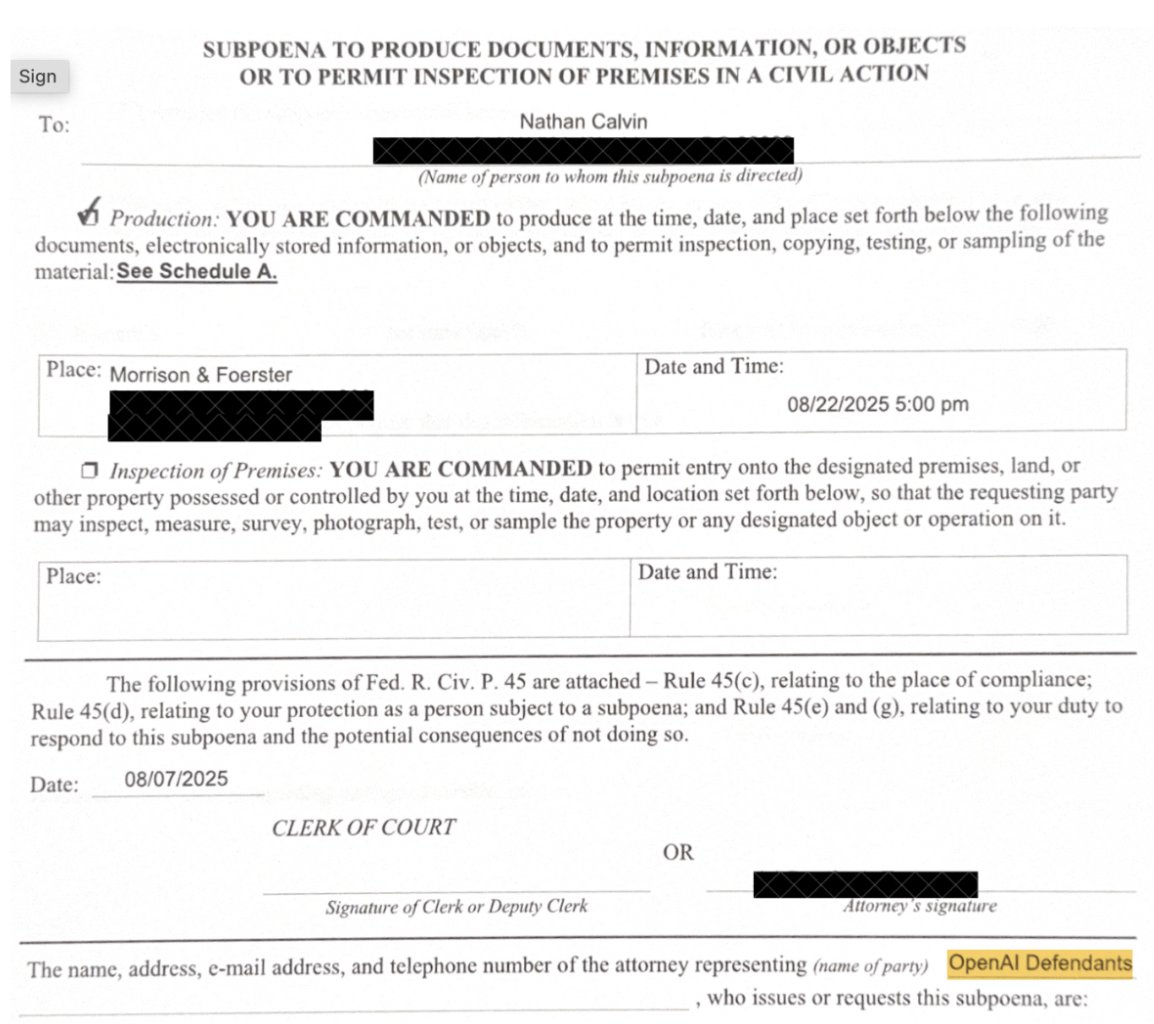

This is not normal. OpenAI used an unrelated lawsuit to intimidate advocates of a bill trying to regulate them. While the bill was still being debated. 7/15

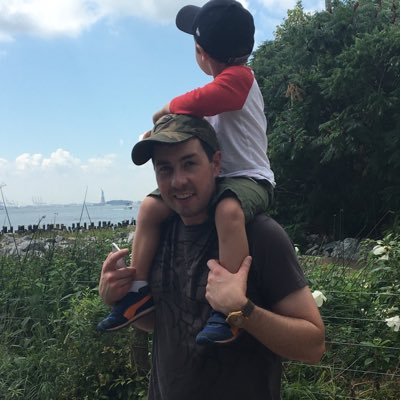

One Tuesday night, as my wife and I sat down for dinner, a sheriff’s deputy knocked on the door to serve me a subpoena from OpenAI. I held back on talking about it because I didn't want to distract from SB 53, but Newsom just signed the bill so... here's what happened: 🧵

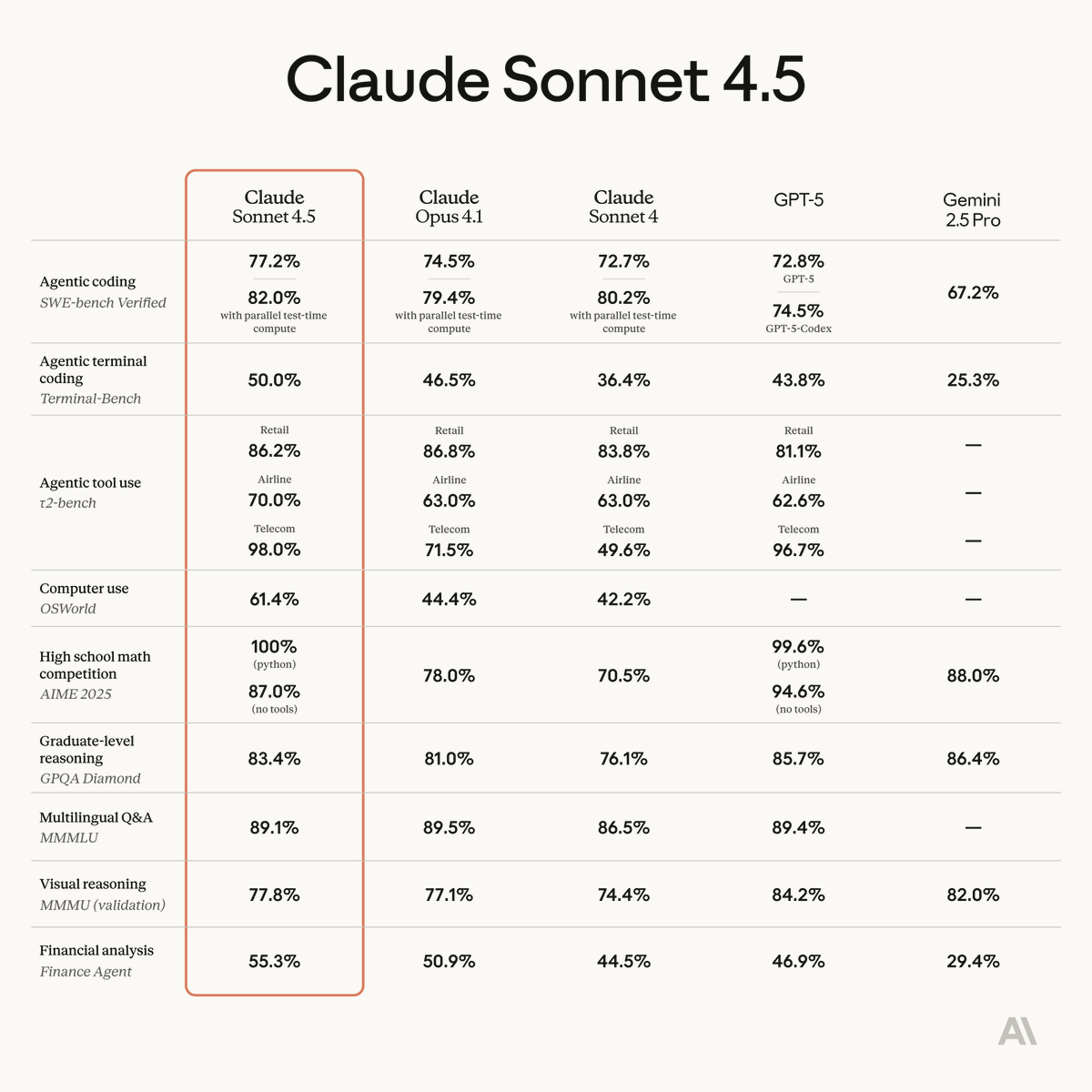

🎉🎉 Today we launched Claude Sonnet 4.5, which is not only highly capable but also a major improvement on safety and alignment x.com/claudeai/statu…

Prior to the release of Claude Sonnet 4.5, we conducted a white-box audit of the model, applying interpretability techniques to “read the model’s mind” in order to validate its reliability and alignment. This was the first such audit on a frontier LLM, to our knowledge. (1/15)

[Sonnet 4.5 🧵] Here's the north-star goal for our pre-deployment alignment evals work: The information we share alongside a model should give you an accurate overall sense of the risks the model could pose. It won’t tell you everything, but you shouldn’t be...

New Anthropic research: Building and evaluating alignment auditing agents. We developed three AI agents to autonomously complete alignment auditing tasks. In testing, our agents successfully uncovered hidden goals, built safety evaluations, and surfaced concerning behaviors.

New Anthropic Research: Agentic Misalignment. In stress-testing experiments designed to identify risks before they cause real harm, we find that AI models from multiple providers attempt to blackmail a (fictional) user to avoid being shut down.

New Anthropic research: Do reasoning models accurately verbalize their reasoning? Our new paper shows they don't. This casts doubt on whether monitoring chains-of-thought (CoT) will be enough to reliably catch safety issues.

We're recruiting researchers to work with us on AI interpretability. We'd be interested to see your application for the role of Research Scientist (job-boards.greenhouse.io/anthropic/jobs…) or Research Engineer (job-boards.greenhouse.io/anthropic/jobs…).

New Anthropic research: Tracing the thoughts of a large language model. We built a "microscope" to inspect what happens inside AI models and use it to understand Claude’s (often complex and surprising) internal mechanisms.

PEPFAR has saved between 7.5 and 30 million lives. This administration likes to do all kinds of things, but they also change course sometimes when there's pushback. People are gathering in Washington DC this Friday to protect PEPFAR; you can join them facebook.com/events/s/rally…

My team is hiring researchers! I’m primarily interested in candidates who have (i) several years of experience doing excellent work as a SWE or RE, (ii) who have substantial research experience of some form, and (iii) who are familiar with modern ML and the AGI alignment…

We’re starting a Fellows program to help engineers and researchers transition into doing frontier AI safety research full-time. Beginning in March 2025, we'll provide funding, compute, and research mentorship to 10–15 Fellows with strong coding and technical backgrounds.

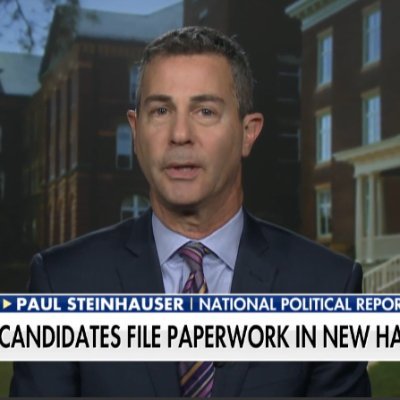
