Can AI actually automate jobs? @Scale_AI and @ai_risks are launching the Remote Labor Index (RLI), the first benchmark and public leaderboard that test how well AI agents can complete real, paid freelance work in domains like software engineering, design, architecture, data…

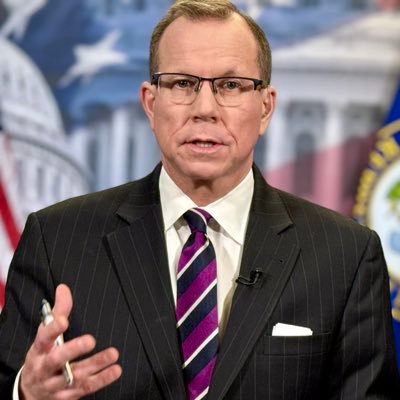

There’s no magic wand for making AI work. Scale CEO @jdroege joined @richardquest on @cnni to share what it really takes:

🔄 RLHF → RLVR → Rubrics → OnlineRubrics

👤 Human feedback = noisy & coarse

🧮 Verifiable rewards = too narrow

📋 Static rubrics = rigid, easy to hack, miss emergent behaviors

💡 We introduce OnlineRubrics: elicited rubrics that evolve as models train. arxiv.org/abs/2510.07284

Sat down with @lennysan to talk about where AI is headed and how we’re making it work for model builders, enterprises and governments. Also went down memory lane about my time at Uber Eats. 🙂

“I think one of the misunderstandings is that AI is this magic wand or it can solve all problems, and that’s not true today. But there is a ton of value when you get it right.” Our CEO @jdroege shared his AI success framework with CNN's @claresduffy. cnn.com/2025/09/30/tec…

New @Scale_AI paper! The culprit behind reward hacking? We trace it to misspecification in the high-reward tail. Our fix: rubric-based rewards to tell “excellent” responses apart from “great.” The result: less hacking, stronger post-training! arxiv.org/pdf/2509.21500
