Yeda Song

@__runamu__

Multimodal Agents for the Real World: GUI Agents, VLM, and RL @ UMich 🇺🇸


🔥 GUI agents struggle with real-world mobile tasks. We present MONDAY—a diverse, large-scale dataset built via an automatic pipeline that transforms internet videos into GUI agent data. ✅ VLMs trained on MONDAY show strong generalization ✅ Open data (313K steps) (1/7) 🧵 #CVPR

🚨🚨New paper on core RL: a way to train value-functions via flow-matching for scaling compute! No text/images, but a flow directly on a scalar Q-value. This unlocks benefits of iterative compute, test-time scaling for value prediction & SOTA results on whatever we tried. 🧵⬇️

Flow Q-learning (FQL) is a simple method to train/fine-tune an expressive flow policy with RL. Come visit our poster at 4:30p-7p this Wed (evening session, 2nd day)!

Excited to introduce flow Q-learning (FQL)! Flow Q-learning is a *simple* and scalable data-driven RL method that trains an expressive policy with flow matching. Paper: arxiv.org/abs/2502.02538 Project page: seohong.me/projects/fql/ Thread ↓

✨Two life updates✨ 1. Started my internship at @LG_AI_Research in Ann Arbor, Michigan — Advancing AI for a better life! 🔮 2. Advanced to PhD candidacy at UMich CSE. This means I’ve completed my coursework and passed the qualification process. 🙌

The race for LLM "cognitive core" - a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing. Its features are slowly crystalizing: - Natively multimodal…

I’m so excited to announce Gemma 3n is here! 🎉 🔊Multimodal (text/audio/image/video) understanding 🤯Runs with as little as 2GB of RAM 🏆First model under 10B with @lmarena_ai score of 1300+ Available now on @huggingface, @kaggle, llama.cpp, ai.dev, and more

Can scaling data and models alone solve computer vision? 🤔 Join us at the SP4V Workshop at #ICCV2025 in Hawaii to explore this question! 🎤 Speakers: @danfei_xu, @joaocarreira, @jiajunwu_cs, Kristen Grauman, @sainingxie, @vincesitzmann 🔗 sp4v.github.io

We're heading to #CVPR2025! 📰Curious about what’s coming? Take a look at our list of accepted papers and come to meet the authors! Get ready for innovative #AI research and fresh insights!

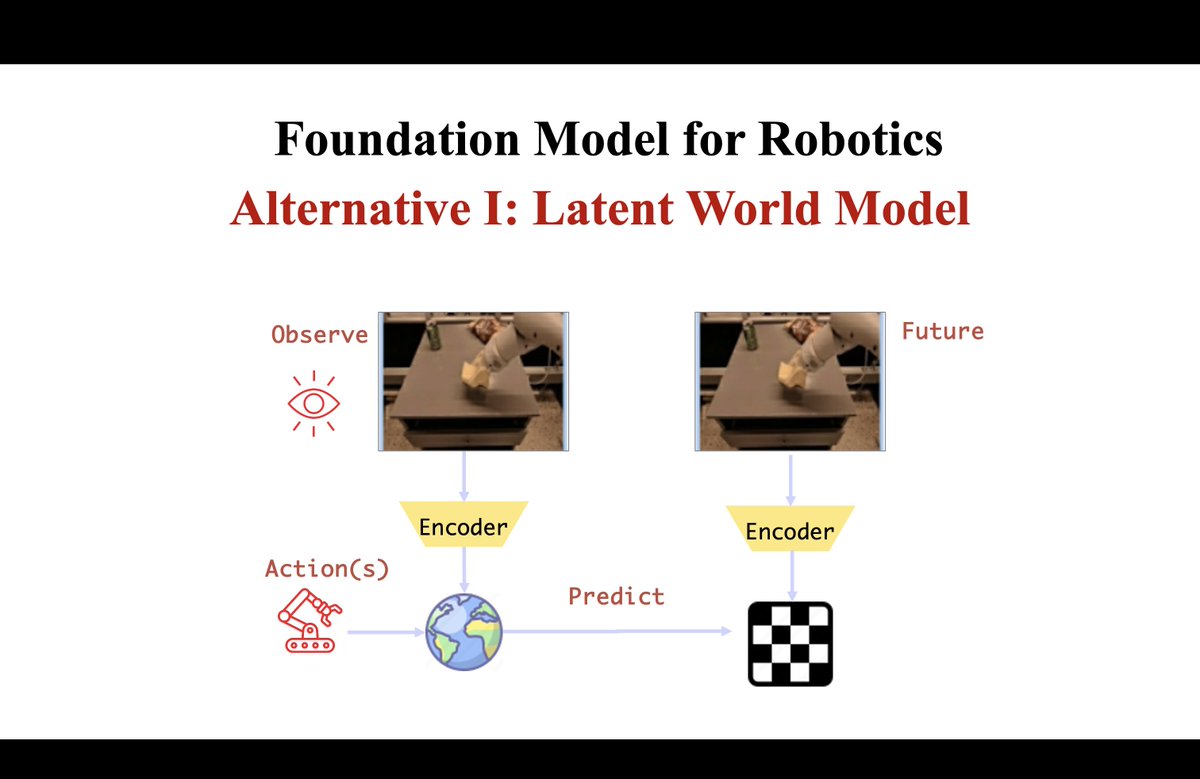

Excited to speak at the Workshop on Computer Vision in the Wild @CVPR 2025! 🎥🌍 🗓️ June 11 | 📍 Room 101 B, Music City Center, Nashville, TN 🎸 🧠 Talk: From Perception to Action: Building World Models for Generalist Agents Let’s connect if you're around! #CVPR2025 #robotics…

🚀 Excited to announce our 4th Workshop on Computer Vision in the Wild (CVinW) at @CVPR 2025! 🔗 computer-vision-in-the-wild.github.io/cvpr-2025/ ⭐We have invited a great lineup of speakers: Prof. Kaiming He, Prof. @BoqingGo, Prof. @CordeliaSchmid, Prof. @RanjayKrishna, Prof. @sainingxie, Prof.…

Arrived in Nashville for #CVPR 🤠 Excited to present MONDAY, a collaboration with @LG_AI_Research! 📍 MMFM Workshop - Thu, 9:40 AM 📍 Main Conference - Fri, 4:00 PM Let’s connect and chat!🤝 Also exploring Summer 2026 internships 🔍 MONDAY website: monday-dataset.github.io

I finally wrote another blogpost: ysymyth.github.io/The-Second-Hal… AI just keeps getting better over time, but NOW is a special moment that i call “the halftime”. Before it, training > eval. After it, eval > training. The reason: RL finally works. Lmk ur feedback so I’ll polish it.

LLM chatbots are moving fast, but how do we make them better? In my new blog at The Gradient, I argue that an important next step is giving them a sense of "purpose."

I love our Michigan AI Lab @michigan_AI! A group of people who not only do some of the coolest research in AI, but also care for each other and enjoy each other’s company. A picture from this week’s fun picnic. ❤️

Glad to share our work at #ACL2023, "MPChat: Towards Multimodal Persona-Grounded Conversation" arxiv.org/abs/2305.17388 ! #multimodal #persona_chat authors: @AHNJAEWOO2, @__runamu__, Gunhee Kim
