Alan Ritter

@alan_ritter

Computing professor at Georgia Tech - natural language processing, language models, machine learning

내가 좋아할 만한 콘텐츠

What if LLMs could predict their own accuracy on a new task before running a single experiment? We introduce PRECOG, built from real papers, to study description→performance forecasting. On both static and streaming tasks, GPT-5 beats human NLP researchers and simple baselines.

What if LLMs can forecast their own scores on unseen benchmarks from just a task description? We are the first to study text description→performance prediction, giving practitioners an early read on outcomes so they can plan what to build—before paying full price 💸

Had a great time visiting Sungkyunkwan University this week! Lots of interesting conversations, and very insightful questions from students. Thanks to @NoSyu for hosting!

We are honored to host @alan_ritter at SKKU for his talk: "Towards Cost-Efficient Use of Pre-trained Models" He explored cost-utility tradeoffs in LLM development, fine-tuning vs. preference optimization, which led to more efficient and scalable AI. Thanks a lot!

We are honored to host @alan_ritter at SKKU for his talk: "Towards Cost-Efficient Use of Pre-trained Models" He explored cost-utility tradeoffs in LLM development, fine-tuning vs. preference optimization, which led to more efficient and scalable AI. Thanks a lot!

For people attending @naaclmeeting, I created a quick script to generate ics files for all your presentations (or presentations of interest) that you can import into your Google Calendar or other calendar software: gist.github.com/neubig/b16376d…

Want to learn about Llama's pre-training? Mike Lewis will be giving a Keynote at NAACL 2025 in Albuquerque, NM on May 1. 2025.naacl.org @naaclmeeting

🚨o3-mini vastly outperforms DeepSeek-R1 on an unseen probabilistic reasoning task! Introducing k-anonymity estimation: a novel task to assess privacy risks in sensitive texts Unlike conventional math and logical reasoning, this is difficult for both humans and AI models. 1/7

Very excited about this new work by @EthanMendes3 on self-imprving state value estimation for more efficient search without labels or rewards.

🚨New Paper: Better search for reasoning (e.g., web tasks) usually requires costly💰demos/rewards What if we only self-improve LLMs on state transitions—capturing a classic RL method in natural language? Spoiler: It works (⬆️39% over base model) & enables efficient search!🚀 🧵

🚨New Paper: Better search for reasoning (e.g., web tasks) usually requires costly💰demos/rewards What if we only self-improve LLMs on state transitions—capturing a classic RL method in natural language? Spoiler: It works (⬆️39% over base model) & enables efficient search!🚀 🧵

🚨 Just Out Can LLMs extract experimental data about themselves from scientific literature to improve understanding of their behavior? We propose a semi-automated approach for large-scale, continuously updatable meta-analysis to uncover intriguing behaviors in frontier LLMs. 🧵

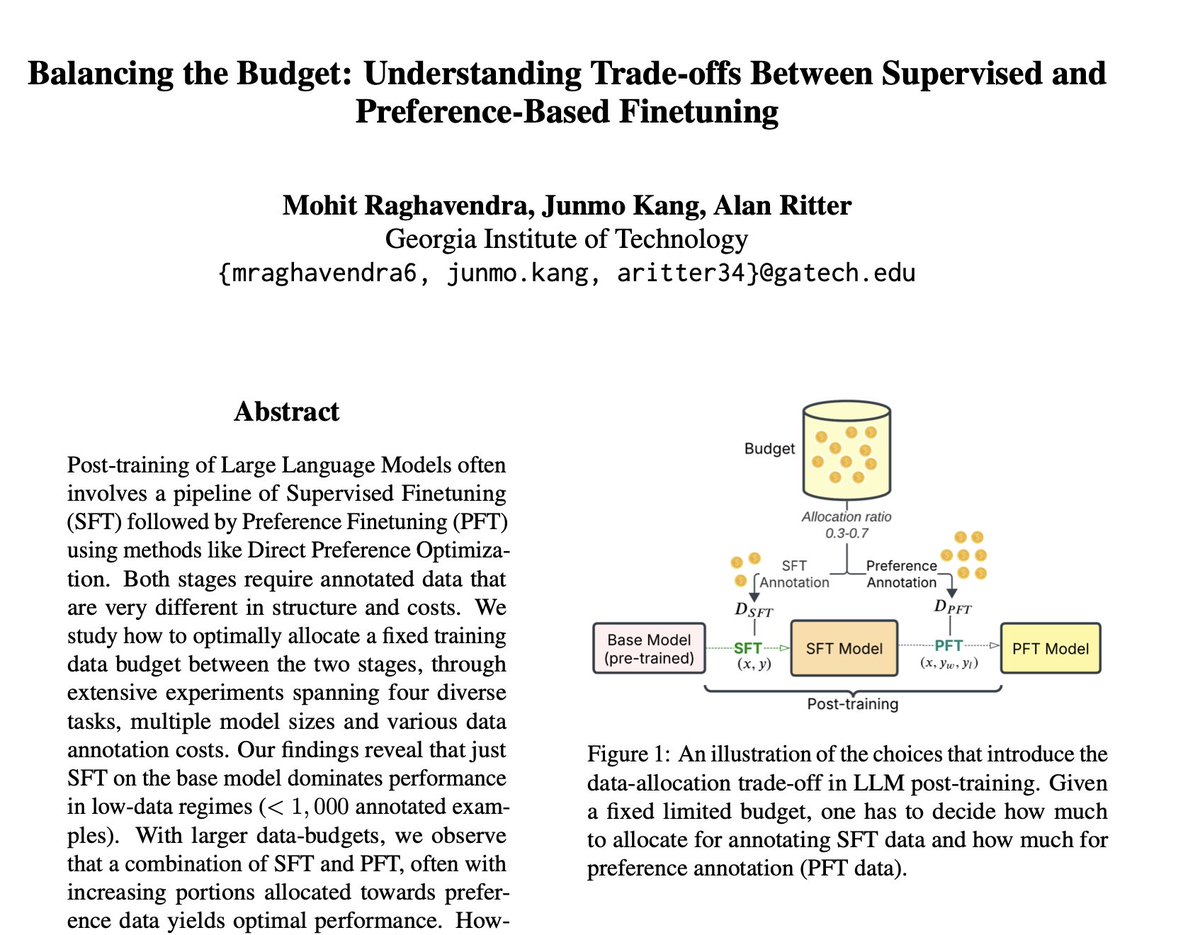

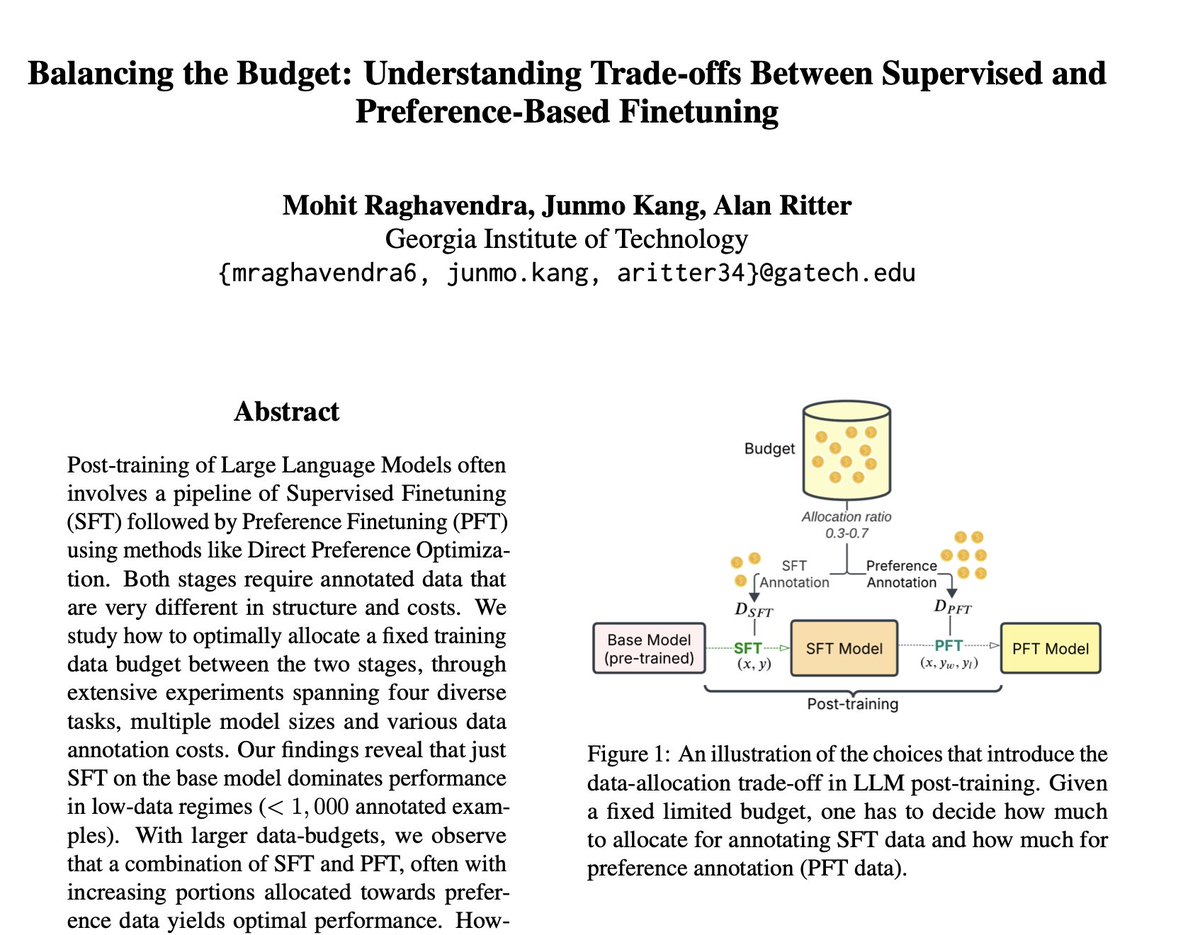

Check out @mohit_rag18's recent work analyzing data annotation costs associated with SFT vs. Preference Fine-Tuning.

🚨Just out Targeted data curation for SFT and RLHF is a significant cost factor 💰for improving LLM performance during post-training. How should you allocate your data annotation budgets between SFT and Preference Data? We ran 1000+ experiments to find out! 1/7

🚨Just out Targeted data curation for SFT and RLHF is a significant cost factor 💰for improving LLM performance during post-training. How should you allocate your data annotation budgets between SFT and Preference Data? We ran 1000+ experiments to find out! 1/7

Awesome talk yesterday by @jacobandreas! Interesting to hear how models can make better predictions by asking users the right questions, and update themselves based on implied information. @mlatgt

📢 Working on something exciting for NAACL? 🗓️ Remember the commitment deadline is December 16th! 2025.naacl.org/calls/papers/ #NLProc

Come see @EthanMendes3 talk on the surprising image geolocation capabilities of VLMs today at 2:45 in Flagler (down the escalator on the first floor). #emnlp2024 @mlatgt

Please take a moment to fill out the form below to volunteer as a reviewer or AC for NAACL!

📢 NAACL needs Reviewers & Area Chairs! 📝 If you haven't received an invite for ARR Oct 2024 & want to contribute, sign up by Oct 22nd! ➡️AC form: forms.office.com/r/8j6jXLfASt ➡️Reviewer form: forms.office.com/r/cjPNtL9gPE Please RT 🔁 and help spread the word! 🗣️ #NLProc @ReviewAcl

Great news! The @aclmeeting awarded IARPA HIATUS program performers two "Best Social Impact Paper Awards" and an "Outstanding Paper Award." Congratulations to the awardees! Access the winning papers below: aclanthology.org/2024.acl-long.… aclanthology.org/2024.acl-long.… aclanthology.org/2024.acl-short…

United States 트렌드

- 1. Auburn 44.9K posts

- 2. Brewers 63.4K posts

- 3. Georgia 67.5K posts

- 4. Cubs 55.3K posts

- 5. Kirby 23.7K posts

- 6. Arizona 41.7K posts

- 7. Utah 24.3K posts

- 8. Michigan 62.6K posts

- 9. Gilligan 5,791 posts

- 10. #AcexRedbull 3,484 posts

- 11. Hugh Freeze 3,206 posts

- 12. #BYUFootball N/A

- 13. Boots 50.3K posts

- 14. #Toonami 2,505 posts

- 15. #GoDawgs 5,547 posts

- 16. Amy Poehler 4,260 posts

- 17. #ThisIsMyCrew 3,230 posts

- 18. Kyle Tucker 3,160 posts

- 19. Dissidia 5,487 posts

- 20. Texas Tech 6,261 posts

내가 좋아할 만한 콘텐츠

-

Wei Xu

Wei Xu

@cocoweixu -

Yoav Artzi

Yoav Artzi

@yoavartzi -

Mohit Bansal

Mohit Bansal

@mohitban47 -

Kai-Wei Chang

Kai-Wei Chang

@kaiwei_chang -

UW NLP

UW NLP

@uwnlp -

UCL Natural Language Processing

UCL Natural Language Processing

@ucl_nlp -

Luke Zettlemoyer

Luke Zettlemoyer

@LukeZettlemoyer -

Isabelle Augenstein

Isabelle Augenstein

@IAugenstein -

NAACL HLT 2027

NAACL HLT 2027

@naaclmeeting -

Hanna Hajishirzi

Hanna Hajishirzi

@HannaHajishirzi -

JHU CLSP

JHU CLSP

@jhuclsp -

Mohit Iyyer

Mohit Iyyer

@MohitIyyer -

Yonatan Bisk

Yonatan Bisk

@ybisk -

Chris Dyer

Chris Dyer

@redpony -

Vered Shwartz

Vered Shwartz

@VeredShwartz

Something went wrong.

Something went wrong.