Kim Montgomery

@_dynamic24_

Applied Mathematician. Data Scientist. Kaggle Grandmaster.

"I can remember when all this was ice"

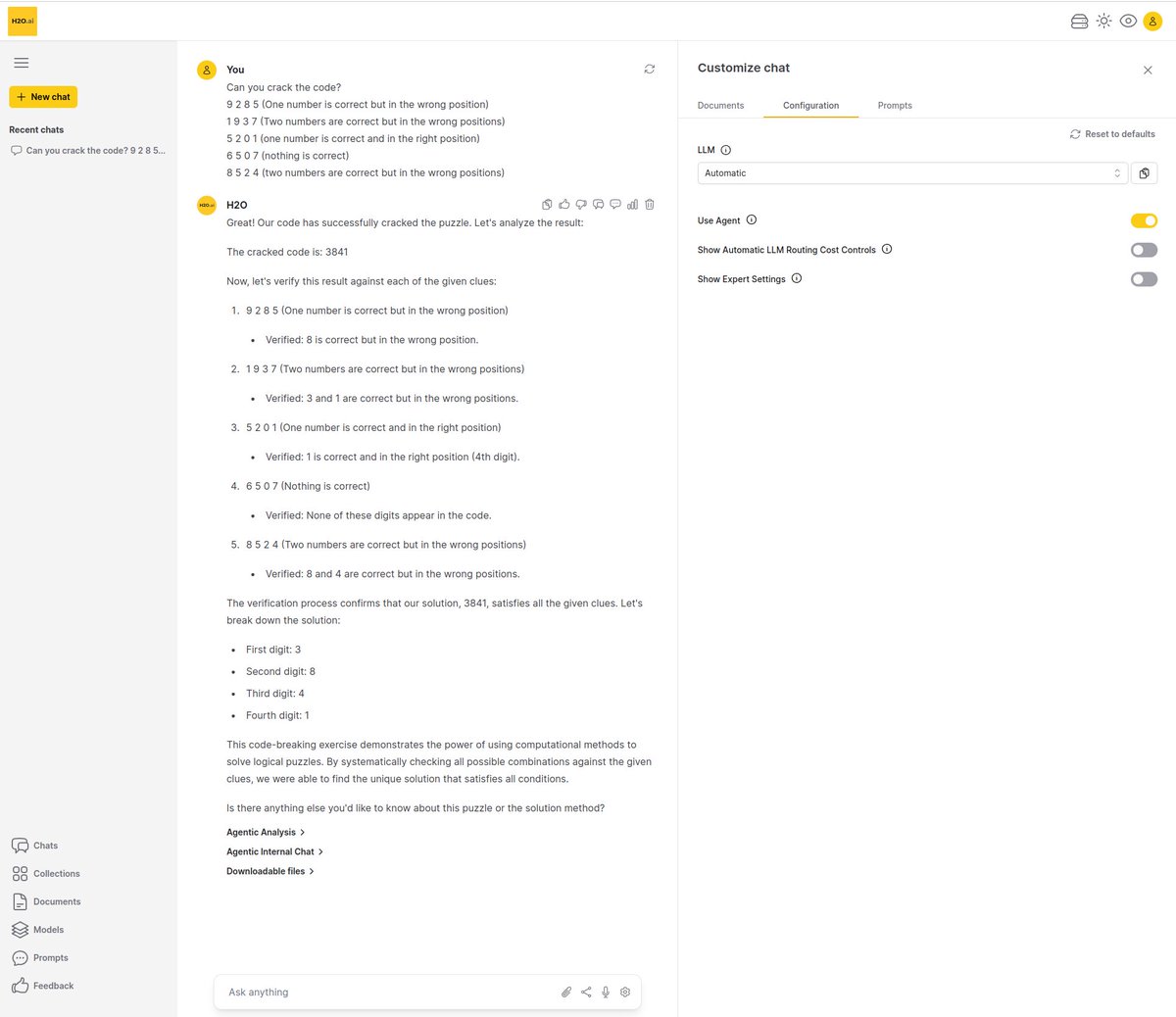

ChatGPT o1-preview failed this relatively simple test, and our Enterprise h2oGPTe system nailed it, and much faster:

Tell yourself whatever makes you feel better about standing on the sidelines.

Many PhD students have told me most earnestly that they could win Kaggle competitions any time they wanted. On the vanishingly rare occasions they've put their money where their mouth is, they've been crushed.

There was a super impressive AI competition last week that many people missed amid the noise of the AI world. I happen to know several participants, so let me tell you a bit of the story over Sunday morning coffee. You probably know the Millennium Prize Problems…

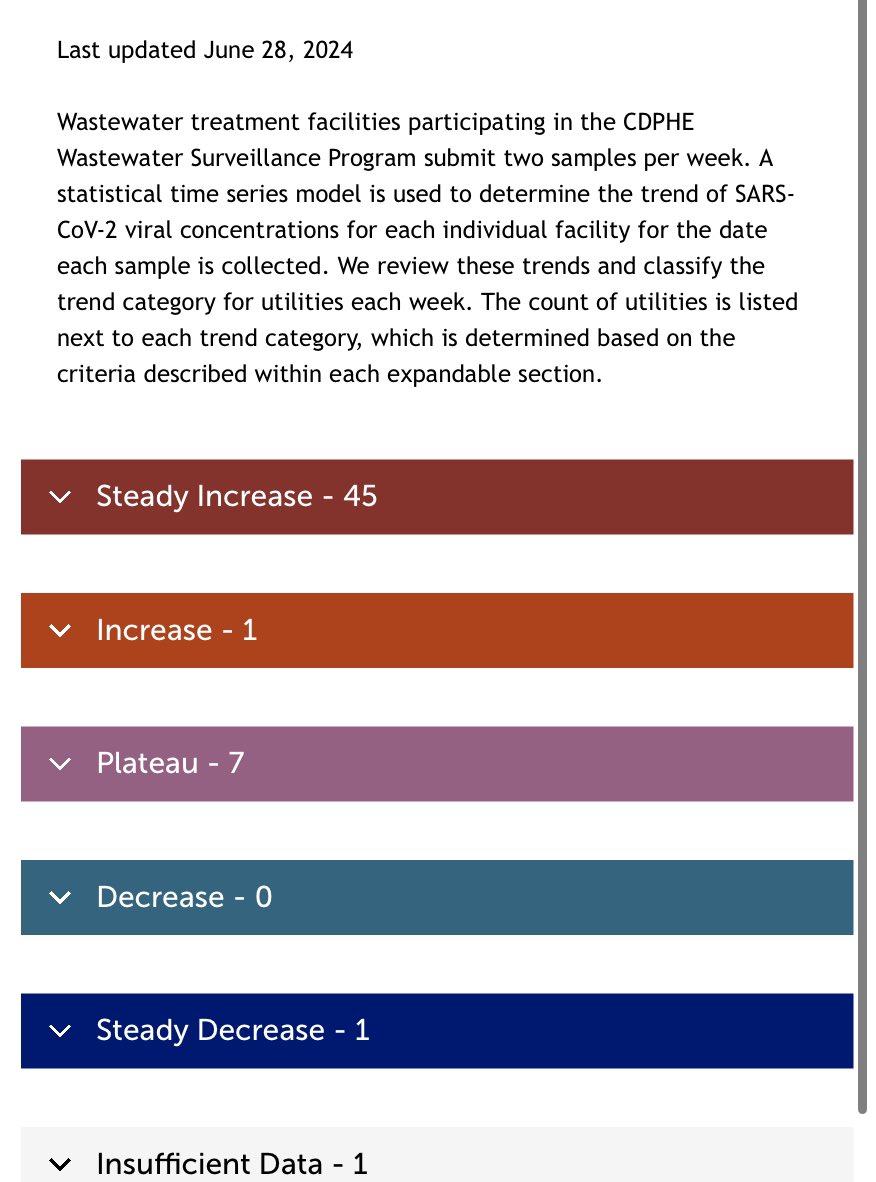

Holy shit, Colorado. This is the worst trend summary EVER published. Worse than 12/27/2023.

forgot how exhausting it is to debate tech bros who insist:
- diversity is important obv, except not important enough to spend effort on
- their all-male team is diverse in other ways!
- the problem is not w the company but rather the women who don’t apply
- they only hire the best

In case you are interested in using @h2oai H2O AutoML, here is a curated list of all the awesome projects, applications, research, tutorials, courses and books that use H2O: github.com/h2oai/awesome-…

Great to see the @h2oai cover story: smebusinessreview.com/magazine/2024/… Try Enterprise h2oGPTe, our #GenAI platform: h2o.ai/#gpt (Freemium)

Happy to share our first efforts in foundation modeling: H2O-Danube-1.8b, a small 1.8B model based on the Llama/Mistral architecture, trained on only 1T natural-language tokens and showing competitive metrics across benchmarks in the <2B model space. We particularly hope for the model to…

And they say Kagglers don’t do anything in the real world! A new long-context small LLM, trained by a team of some of the best Kagglers in the world.

H2O-Danube-1.8B Technical Report: open-sources a highly competitive 1.8B LM trained on 1T tokens, following the core principles of Llama 2 and Mistral. arxiv.org/abs/2401.16818

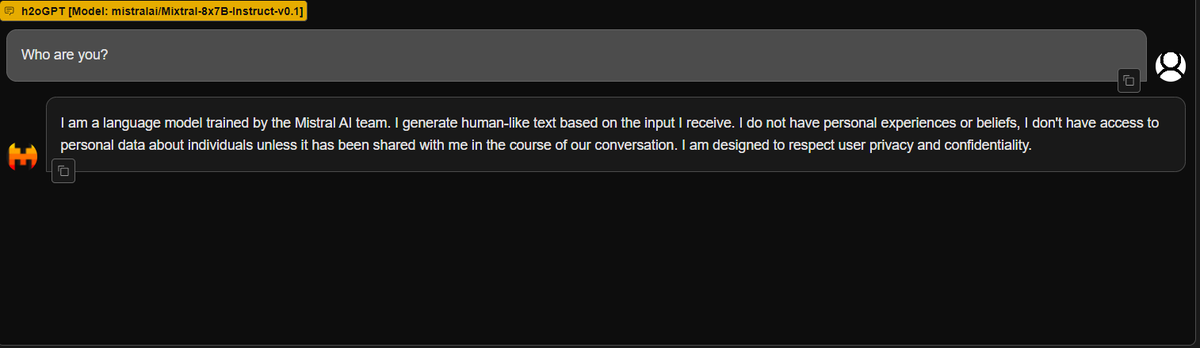

We have mistralai/Mixtral-8x7B-Instruct-v0.1 hosted on gpt.h2o.ai - hop over there to play around with it and compare to other models!

We at @h2oai are thrilled to bring together the largest gathering of @kaggle Grandmasters on a single stage in one day, including none other than the current #1 (@ph_singer) and the current #4 (@kagglingpascal) in competitions. Register: bit.ly/3Qb4Zyd

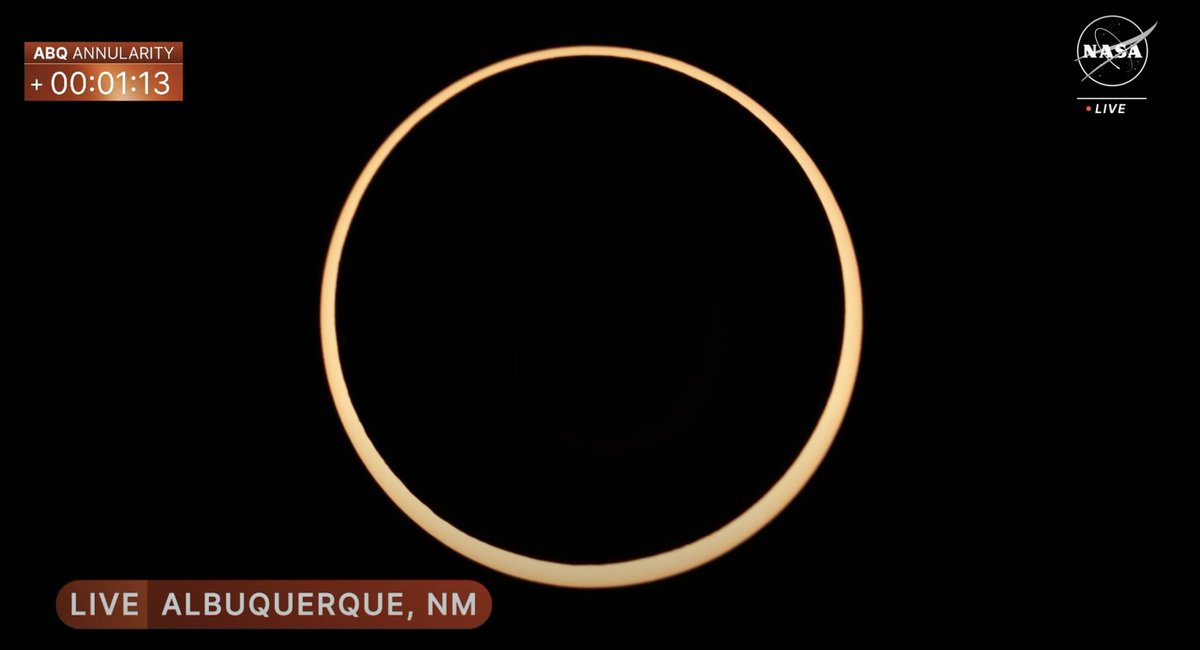

Wow @NASAMoon – after all this time, you finally put a ring on it! 🥰

If you are contemplating participating in @kaggle competitions and have heard someone say they are too far removed from practical data science work, consider this example: in the recent Science LLM competition, participants learned, among many other things: - How to…

Introducing the Kaggle Competitions Research Grants Program, a new program to support academic and non-profit institutions’ efforts to advance their research through Kaggle Competitions. kaggle.com/competitions-r…

Nice paper out of @UniofOxford on the power & ease of use of H2O #AutoML: sciencedirect.com/science/articl… @h2oai

Open-sourcing a brand-new framework and no-code GUI for fine-tuning LLMs: github.com/h2oai/h2o-llms… Some highlights: CLI & GUI available, many hyperparameter options, best practices from Kaggle GMs, LoRA, DDP, FSDP, 8-bit, experiment tracking, evaluation, chat window, and much more!

We are open-sourcing @h2oai's LLM repositories github.com/h2oai/h2ogpt and github.com/h2oai/h2o-llms… and huggingface.co/h2oai including the best truly open-source fine-tuned 20B parameter models! #ChatGPT #GPT #OSS #OpenSource #Democratize #AI

Arxiv Chat: Chat w the latest papers 🙏 I made a really simple demo that makes it easy for me to understand the latest papers. The whole app is <100 lines of code: ✅ @LangChainAI for the main logic ✅ @h2oai Wave for the UI ✅ ChatGPT for asking Qs

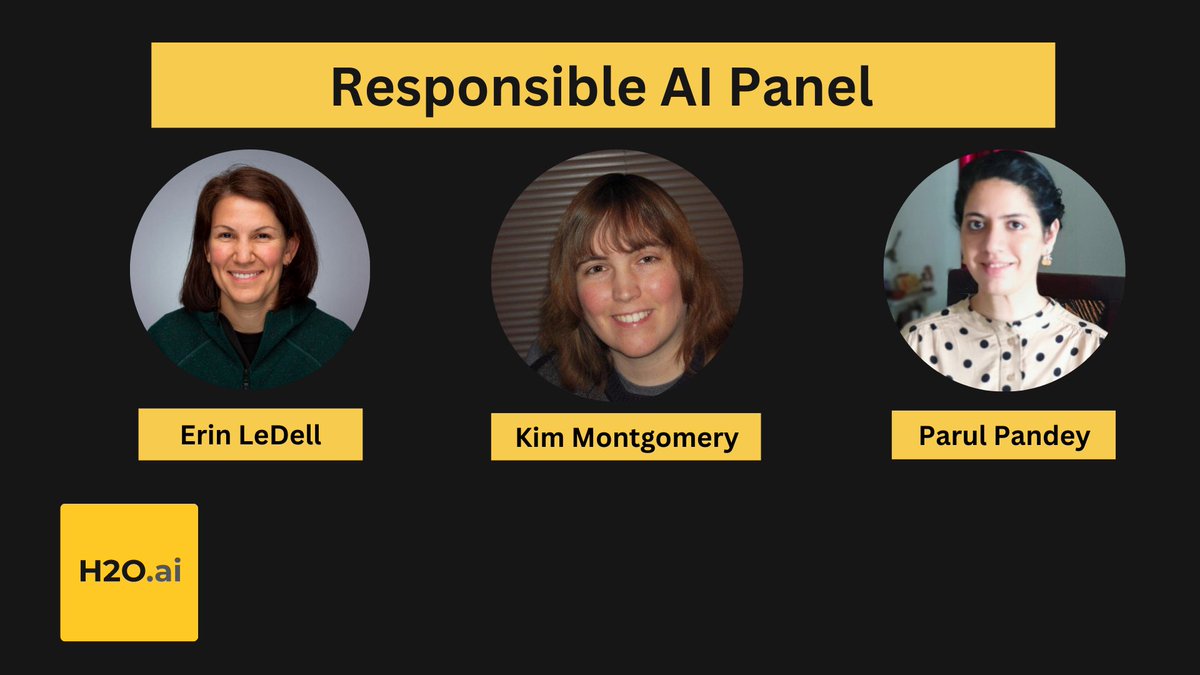

🙏📚 Join @bhutanisanyam1 for a thought-provoking discussion on #ResponsibleAI with @ledell, @_dynamic24_ + @pandeyparul. We'll delve into the current states of #interpretability, using models responsibly, and how @h2oai products support these efforts. ow.ly/ql3x50MBCNT
