Say Yoho

@_sayyoho

Typist; applying big data techniques to the problem of web scale NLProc; with a nod to Scott Adams' Pirate Adventure

You might like

"we do need more structure and modularity for language, memory, knowledge, and planning" @chrmanning simons.berkeley.edu/talks/christop… … @stanfordnlp

ByteDance v OpenAI⚠️, LAION-5B CSAM☢️ & NYT v OpenAI🛑 illustrate rising lockdown + legal risk on data. Need more informed training data selection? 🔗 dataprovenance.org Detailed licenses, terms, sources, properties. 📢 Come help us build it! All open sourced. 1/ 🧵

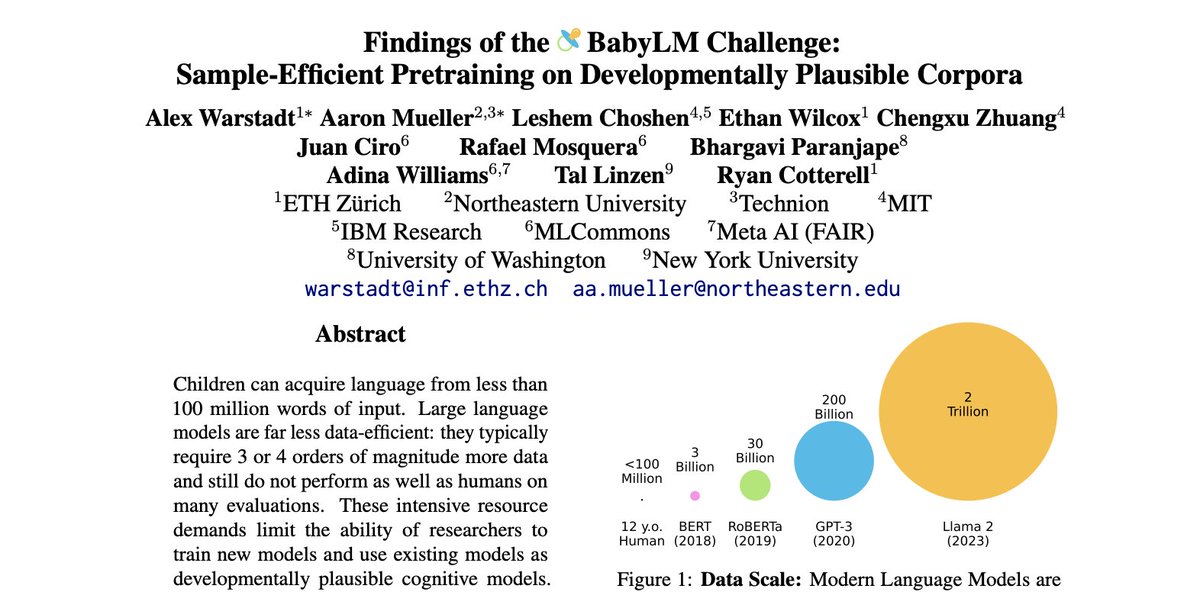

LLMs are now trained >1000x as much language data as a child, so what happens when you train a "BabyLM" on just 100M words? The proceedings of the BabyLM Challenge are now out along with our summary of key findings from 31 submissions: aclanthology.org/volumes/2023.c… Some highlights 🧵

📹 Deep dive into 4 NeurIPS 2023 best paper award winners: youtu.be/LkED9wKI1TY - Are Emergent Abilities of Large Language Models a Mirage? arxiv.org/abs/2304.15004 - Scaling Data-Constrained Language Models. arxiv.org/abs/2305.16264 - Direct Preference Optimization: Your…

To understand X means you have the ability to act appropriately in response to situations related to X -- for instance, you understand how to make coffee in a kitchen if you can walk into a random kitchen and make coffee.

"I don't think we'll see systems that truly step beyond their training data until we have powerful search in the process." - @ShaneLegg, Founder and Chief AGI Scientist, Google DeepMind Full episode out tomorrow

A recent LLM hallucination benchmark is making rounds, and people are jumping to conclusions based on a table screenshot. The eval is so problematic in many ways. In fact, a trivial baseline can achieve 0% on hallucination. I cannot help but don my Peer Reviewer hat: - The study…

I came to this conclusion sometime last year, and it was a little sad because I wanted so hard to believe in LLM mysticism and that there was something "there there."

New paper by Google provides evidence that transformers (GPT, etc) cannot generalize beyond their training data

The brand-new @Voyage_AI_ embedding model is one of the best models you should use for your RAG pipeline today (outperforms ada-002 by a big margin) Thanks to @Yujie_Qian, you can now easily use in @llama_index: github.com/run-llama/llam…

Why we should view LLMs as powerful Cognitive Orthotics rather than alternatives for human intelligence #SundayHarangue LLMs are amazing giant external non-veridical memories that can serve as powerful cognitive orthotics for us, if rightly used (c.f.…

Answer: the Pile (11 models) 11/20 models with 20B or more parameters and partially public data have been trained on the Pile. C4 comes in second at 6, and S2ORC (not an option) comes in third. It comes in second if you exclude models trained by the same org that made the data.

Among models with 20B parameters or more, which publicly released dataset is the most common component of training data? Note that a model trained partially on publicly released data and partially on internal data counts

Over the past weeks the H4 team has been busy pushing the Zephyr 7B model to new heights 🗻 The new version is now topping all 7b models on chat evals and even 10x larger models 🤯🔥 Here are the intuitions on it 1/ Start with the strongest pretrained model you can find:…

Beautiful summary of generative AI by Meredith Whittaker (@mer__edith): "Generative AI is not actually that useful... It presents text that has no relationship to facts... It's not useful in most serious contexts... [ChatGPT] is a very expensive advertisement... Silicon Valley…

.@mer__edith tells @cpassariello, “VC's require hype to get a return on investment because they need an IPO or an acquisition … You don't get rich by the technology working, you get rich by people believing it works long enough that one of those two things gets you some money."

Excited to release Zephyr-7b-beta 🪁 ! It pushes our recipe to new heights & tops 10x larger models 💪 📝 Technical report: huggingface.co/papers/2310.16… 🤗Model: huggingface.co/HuggingFaceH4/… ⚔️Evaluate it against 10+ LLMs in the @lmsysorg arena: arena.lmsys.org Details in the 🧵

The @MLOpsWorld Generative AI Summit was great! Thanks all for a super engaging event 🙏 Lots of interesting conversations, and even more I didn't get to say hi to. Slides from my talk 👉 speakerdeck.com/honnibal/how-m…

Great post by @fchollet. LLMs as continuous, interpolative vector program databases is a fresh mental model of LLM reasoning that is intuitive and useful. Added advantage of reducing anthropomorphizing of this tech by AI doomers. At least one can hope.

Sentiment is everywhere in language. But how do LLMs represent it? We find: - All models studied have a linear, causal sentiment direction - They summarize information at placeholder tokens like commas An early step towards decoding world models! arxiv.org/abs/2310.15154

A Large Language AI system which supposedly generated an internal model from text placed numerous cities in the Atlantic Ocean. @garymarcus explains how the lack of internal symbolic representation hamstrings the intelligence (and safety) of AI. open.substack.com/pub/garymarcus…

The ICCV VLAR workshop is being livestreamed here: youtube.com/watch?v=3rd9x1… I will be talking in ~20 minutes -- about LLMs, abstract reasoning, ARC, what we're still missing to get to general AI, and what we can do about it.

Great detectiving by @suchenzang. Massive amounts of data contamination in the Phi-1.5 dataset, leading to highly misleading results when evaluating on tasks that aren't in the training set. It's really bad that the authors either didn't look for this or chose to not report it.

MBPP might've also been used somewhere in the Phi-1.5 dataset. Just like we truncated one of the GSM8K problems, let's try truncating the MBPP prompts to see what Phi-1.5 will autocomplete with. [h/t to @drjwrae for suggesting this too: x.com/drjwrae/status…] 🕵🏻♀️🧵Part 2

United States Trends

- 1. Packers 92.6K posts

- 2. Eagles 120K posts

- 3. Jordan Love 14.2K posts

- 4. #WWERaw 121K posts

- 5. Matt LaFleur 7,822 posts

- 6. AJ Brown 6,461 posts

- 7. $MONTA 1,269 posts

- 8. Patullo 11.8K posts

- 9. Jaelan Phillips 7,080 posts

- 10. Smitty 5,349 posts

- 11. #GoPackGo 7,703 posts

- 12. Sirianni 4,739 posts

- 13. McManus 4,027 posts

- 14. Grayson Allen 3,110 posts

- 15. Cavs 10.5K posts

- 16. #MondayNightFootball 1,904 posts

- 17. Pistons 14.6K posts

- 18. Wiggins 11.7K posts

- 19. Devonta Smith 5,747 posts

- 20. John Cena 98.3K posts

Something went wrong.

Something went wrong.