Our tech report for Zamba-7B-v1 is out. We come close to the performance of Llama 3 8B, Mistral 7B, and others with only 1T tokens, along with faster inference and lower memory usage at a fixed context length. Read on to learn about our not-so-secret sauce!

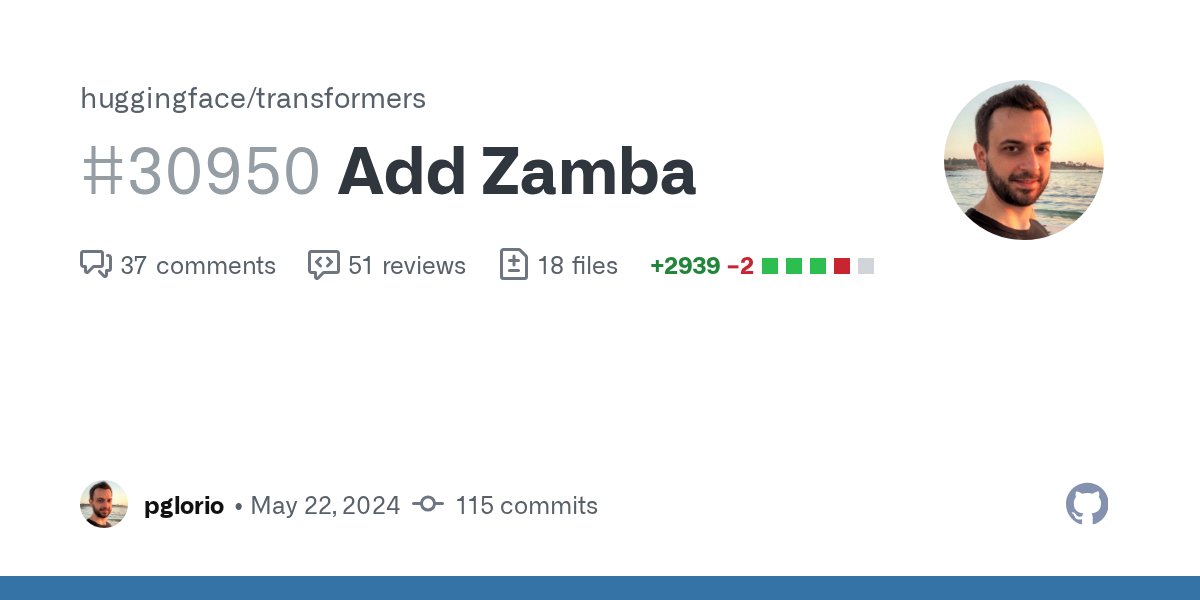

Zyphra is dropping the tech report for Zamba-7B, along with:

- Model weights (phase 1 and final annealed) at huggingface.co/Zyphra
- Inference/generation code (both pure PyTorch and HuggingFace) at github.com/Zyphra/Zamba-t… and github.com/huggingface/tr…

Tech report:…

Another #ICML2025 paper! Why Has Predicting Downstream Capabilities of Frontier AI Models with Scale Remained Elusive? TLDR: Predicting language model performance with scale on multiple choice question-answer (MCQA) benchmarks is made difficult b/c ... 1/3

Excited to announce our paper ⬇️ was selected as an **Outstanding** paper at @TiFA_ICML2024 🔥🔥🔥 What did the paper show? Let's try to summarize the paper in a single tweet!! 1/3

❤️🔥❤️🔥Excited to share our new paper ❤️🔥❤️🔥 **Why Has Predicting Downstream Capabilities of Frontier AI Models with Scale Remained Elusive?** w/ @haileysch__ @BrandoHablando @gabemukobi @varunrmadan @herbiebradley @ai_phd @BlancheMinerva @sanmikoyejo arxiv.org/abs/2406.04391 1/N

Check out our preprint on continual learning for making LLM pretraining more scalable. A great piece of work led by @ai_phd @benjamintherien and @kshitijkgupta 🔥

Interested in seamlessly updating your #LLM on new datasets to avoid wasting previous efforts & compute, all while maintaining performance on past data? Excited to present Simple and Scalable Strategies to Continually Pre-train Large Language Models! 🧵arxiv.org/abs/2403.08763 1/N

Here is the full paper from the continual pretraining project I worked on last year. I encourage you to check it out if you pretrain LLMs (in particular, I recommend starting with the takeaways in Section 2 and the Table of Contents at the start of the appendix).

Simple and Scalable Strategies to Continually Pre-train Large Language Models: Large language models (LLMs) are routinely pre-trained on billions of tokens, only to start the process over again once new data becomes available. A much more efficient solution is to continually…

Mila presents Simple and Scalable Strategies to Continually Pre-train Large Language Models. Shows efficient updates to LLMs using simple strategies, achieving re-training results with less compute. arxiv.org/abs/2403.08763

State-space models (SSMs) like Mamba and mixture-of-experts (MoE) models like Mixtral both seek to reduce the computational cost to train/infer compared to transformers, while maintaining generation quality. Learn more in our paper: zyphra.com/blackmamba

Looking forward to seeing you at the #NeurIPS2023 #NeurIPS23 ENLSP workshop (rooms 206-207), where we'll have a poster about this work at 16:15!

1/ Ever wondered how to keep pretraining your LLM as new datasets become available, instead of pretraining from scratch every time and wasting prior effort and compute? A thread 🧵

The Hi-NOLIN Hindi model will be presented by our @NolanoOrg team (@imtejas13 @_AyushKaushal) and collaborators from our CERC-AAI team (@kshitijkgupta @benjamintherien @ai_phd) at #NeurIPS2023 this Fri, at this workshop: sites.google.com/mila.quebec/6t…

Rarely been so excited about a paper. Our model has a quality level higher than Stable Diffusion 2.1 at a fraction (less than 12%) of the training cost, less than 20% of the carbon footprint, and it is twice as fast at inference too! That's what I call a leap forward.

Würstchen is a high-fidelity text2image model working at a fraction of the compute needed for StableDiffusion achieving similar/better results. Now the preprint to v2 is out. Thanks @M_L_Richter, @pabloppp, @dome_271, @chrisjpal for the great collab!
