You might like

United States trends

- 1. Ravens 59.4K posts

- 2. Ravens 59.4K posts

- 3. Drake Maye 24.3K posts

- 4. Lamar 27.5K posts

- 5. Derrick Henry 9,484 posts

- 6. Zay Flowers 7,511 posts

- 7. Harbaugh 10.1K posts

- 8. Pats 14.9K posts

- 9. Steelers 83.5K posts

- 10. Mark Andrews 4,929 posts

- 11. Tyler Huntley 2,102 posts

- 12. Diggs 11.9K posts

- 13. Marlon Humphrey 1,941 posts

- 14. Kyle Williams 2,252 posts

- 15. Lions 89K posts

- 16. 60 Minutes 51.2K posts

- 17. Bari Weiss 42.4K posts

- 18. Westbrook 4,275 posts

- 19. Boutte 2,215 posts

- 20. Henderson 13.5K posts

You might like

-

Joe Burden

Joe Burden

@slowbuddens -

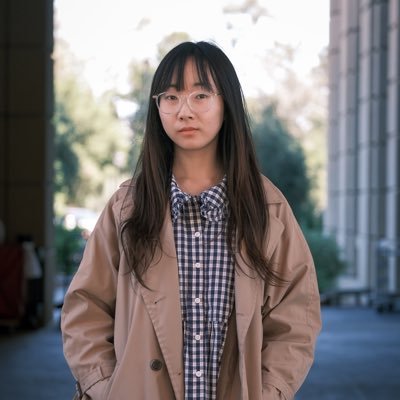

Dylan Patel

Dylan Patel

@dylan522p -

Vikas Khanna

Vikas Khanna

@TheVikasKhanna -

GaonConnection

GaonConnection

@GaonConnection -

Cabot Wealth Network

Cabot Wealth Network

@CabotAnalysts -

𝐷𝑟. 𝐼𝑎𝑛 𝐶𝑢𝑡𝑟𝑒𝑠𝑠

𝐷𝑟. 𝐼𝑎𝑛 𝐶𝑢𝑡𝑟𝑒𝑠𝑠

@IanCutress -

Samar Halarnkar

Samar Halarnkar

@samar11 -

Dr. Sanjukta Basu, M.A., LLB., PhD

Dr. Sanjukta Basu, M.A., LLB., PhD

@sanjukta -

Sabbah Haji Done With This Place

Sabbah Haji Done With This Place

@imsabbah -

Lee Weissman

Lee Weissman

@Poet_of_Peace -

Oxblood Ruffin

Oxblood Ruffin

@OxbloodRuffin -

Vipul Ramtekkar

Vipul Ramtekkar

@VipulRamtekkar -

sonia_manchanda

sonia_manchanda

@sonia_manchanda -

Raju Yadav(P D A parivar)

Raju Yadav(P D A parivar)

@JlnYadav -

Leo

Leo

@Leo678232370
