Man, every GPU API seems to be the same. Can we just have the ISA and hardware manual for the chip, slap some GPU-specific features onto compilers, and call it a day?

Literally everyone is freaking out over Codex like they didn’t do the exact same thing for Devin, Cursor, DeepSeek, and every GPT drop since 2.0. The hype cycle resets every 3 weeks, and we all start everything all over again. This is what we'll see over the next few days: •…

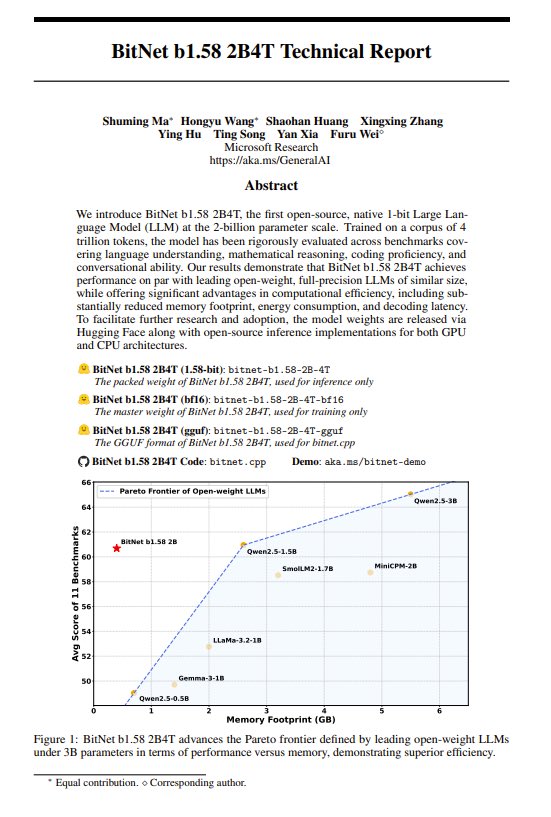

Really hoping that the next SOTA open source model uses this precision. Although, if it's anything like DeepSeek V3 in terms of size, 1.58-bit will still require ~140GB of RAM for the full 685B params. Obviously still much better than ~700GB. Here's to hoping 🙏🏿
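For anyone checking the math, here is a rough sketch of the estimate. It counts weight storage only, and assumes the ~700GB figure corresponds to about 8 bits per parameter (my assumption, not stated in the post). Activations, KV cache, and any packing overhead are ignored, and `weight_memory_gb` is just an illustrative helper name.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 685e9  # parameter count quoted in the post

# 1.58 bits per parameter (ternary weights), weights only
ternary_gb = weight_memory_gb(PARAMS, 1.58)

# Assumed baseline: 8 bits per parameter
eight_bit_gb = weight_memory_gb(PARAMS, 8)

print(f"1.58-bit: {ternary_gb:.0f} GB, 8-bit: {eight_bit_gb:.0f} GB")
```

That lands at roughly 135 GB versus 685 GB, so the ~140GB and ~700GB figures are in the right ballpark.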

Technical report for BitNet b1.58 2B4T is now public!🔥 We envision a future where everyone can deploy a DeepSeek-level model at home — cheap, fast, and powerful. Join us on the journey to democratize 1-bit AI💪💪 We share how to train a powerful native 1-bit LLM from scratch👇🏻

"We are living in a timeline where a [Chinese] company is keeping the original mission of OpenAI alive - truly open, frontier research that empowers all."

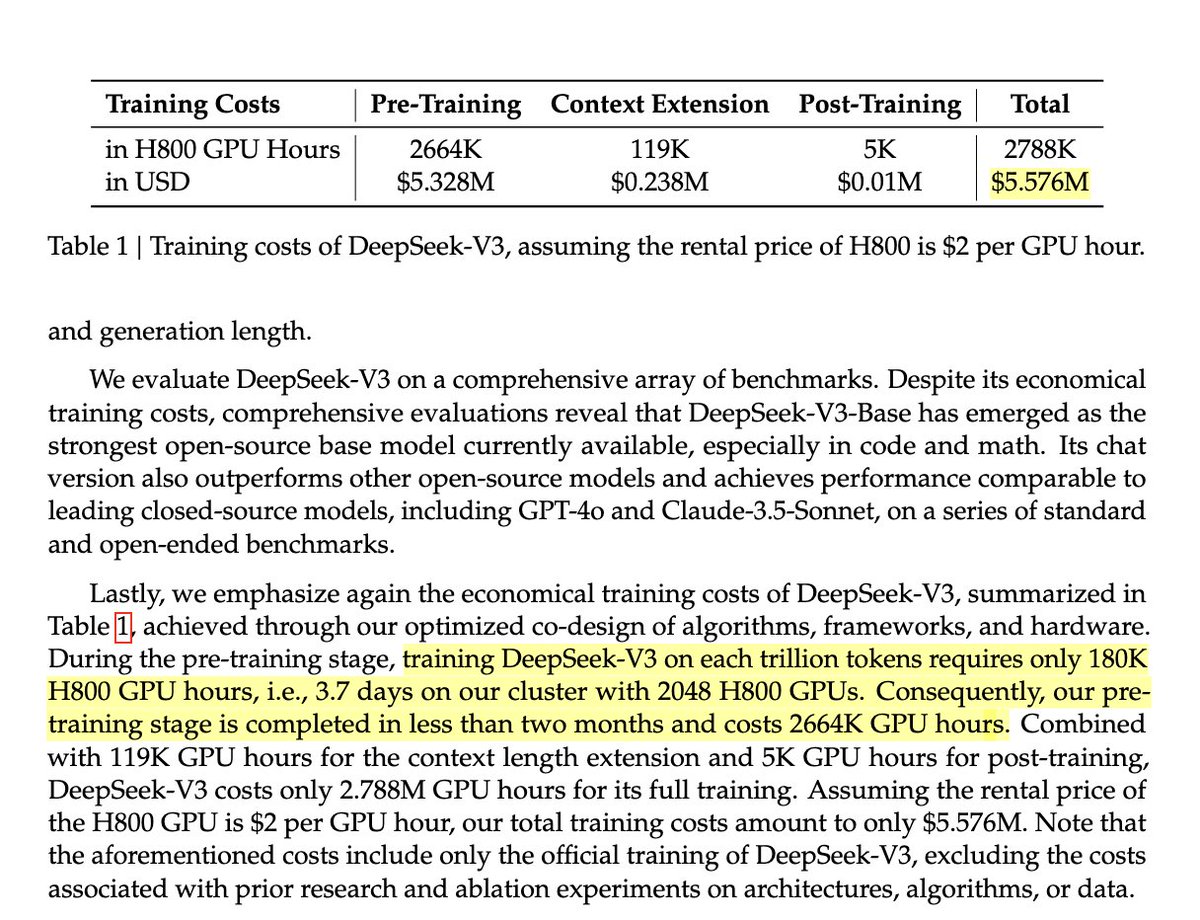

We are living in a timeline where a non-US company is keeping the original mission of OpenAI alive - truly open, frontier research that empowers all. It makes no sense. The most entertaining outcome is the most likely. DeepSeek-R1 not only open-sources a barrage of models but…

"Necessity is the mother of all invention" exemplified.

> $5.5M for Sonnet tier it's unsurprising that they're proud of it, but it sure feels like they're rubbing it in. «$100M runs, huh? 30.84M H100-hours on 405B, yeah? Half-witted Western hacks, your silicon is wasted on you, your thoughts wouldn't reduce loss of your own models»

Storage is cheap. Everything should be statically linked by default; it would save so much time.

I’m an AGI skeptic or believer based on the quality of the tokens generated by whatever LLM I’m using 😂.

Man was really asking all the right questions with this one

Revaluation of priors is a must in this age of constant data absorption. Terrifying that some don't do it at all.
