codergoose

@codergoose

What are the top 1-3 papers/projects/blogposts/tweets/apps/etc that you have seen on Agentic AI (design/generation of workflows, evals, optimization) in the past year, and why? (Please feel free to recommend your own work)

> be Chinese lab > post model called Qwen3-235B-A22B-Coder-Fast > trained on 100 trillion tokens of synthetic RLHF > open-weights, inference engine, 85-page tech report with detailed ablations > tweet gets 6 likes > Western AI crowd still debating if Gemini plagiarized a…

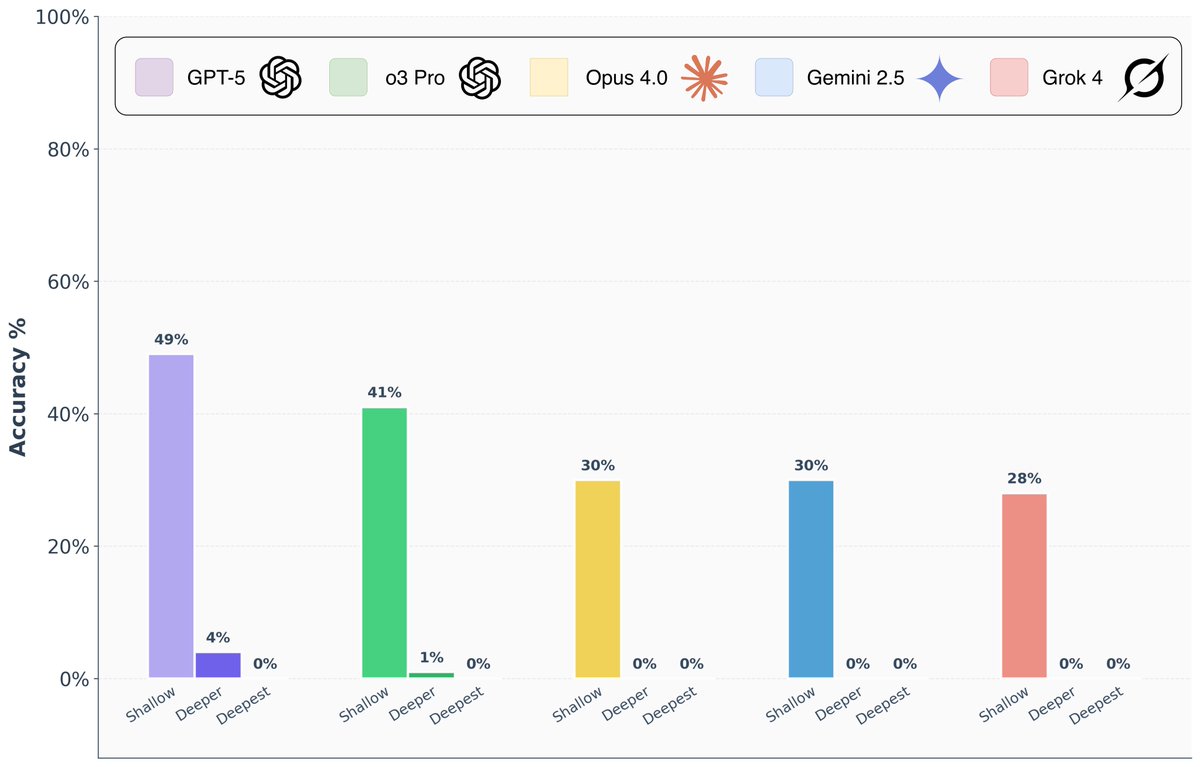

Are frontier AI models really capable of “PhD-level” reasoning? To answer this question, we introduce FormulaOne, a new reasoning benchmark of expert-level Dynamic Programming problems. We have curated a benchmark consisting of three tiers of increasing complexity, which we call…

“But one thing I do know for sure - there's no AGI without touching, feeling, and being embodied in the messy world.”

I've been a bit quiet on X recently. The past year has been a transformational experience. Grok-4 and Kimi K2 are awesome, but the world of robotics is a wondrous wild west. It feels like NLP in 2018 when GPT-1 was published, along with BERT and a thousand other flowers that…

What a finish! Gemini 2.5 Pro just completed Pokémon Blue! Special thanks to @TheCodeOfJoel for creating and running the livestream, and to everyone who cheered Gem on along the way.

Counterpoint to Maverick hype.

If this post doesn't convince you that this arena is a joke, then nothing will. Just try this Maverick model yourself on the prompts you typically use for work. It's a model from 2023, not the kind of frontier LM we are used to, such as Grok, Claude, or o1. Not even close.

“and even remembering your day in video” wow!

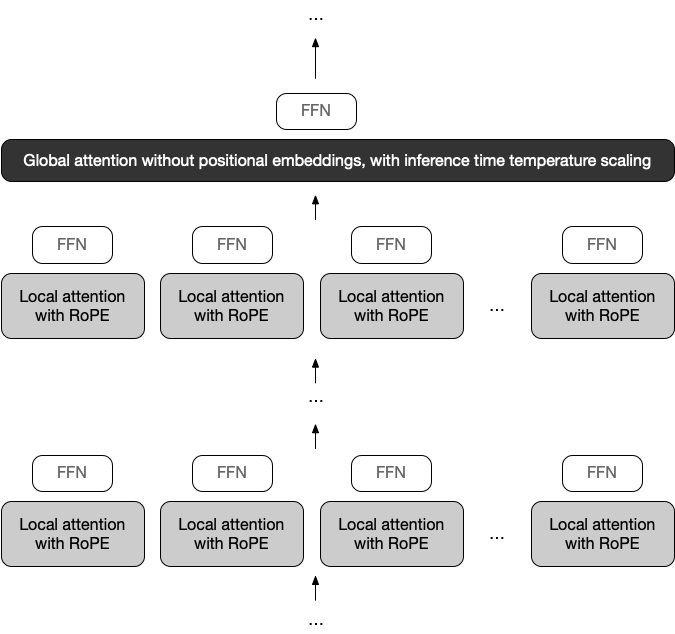

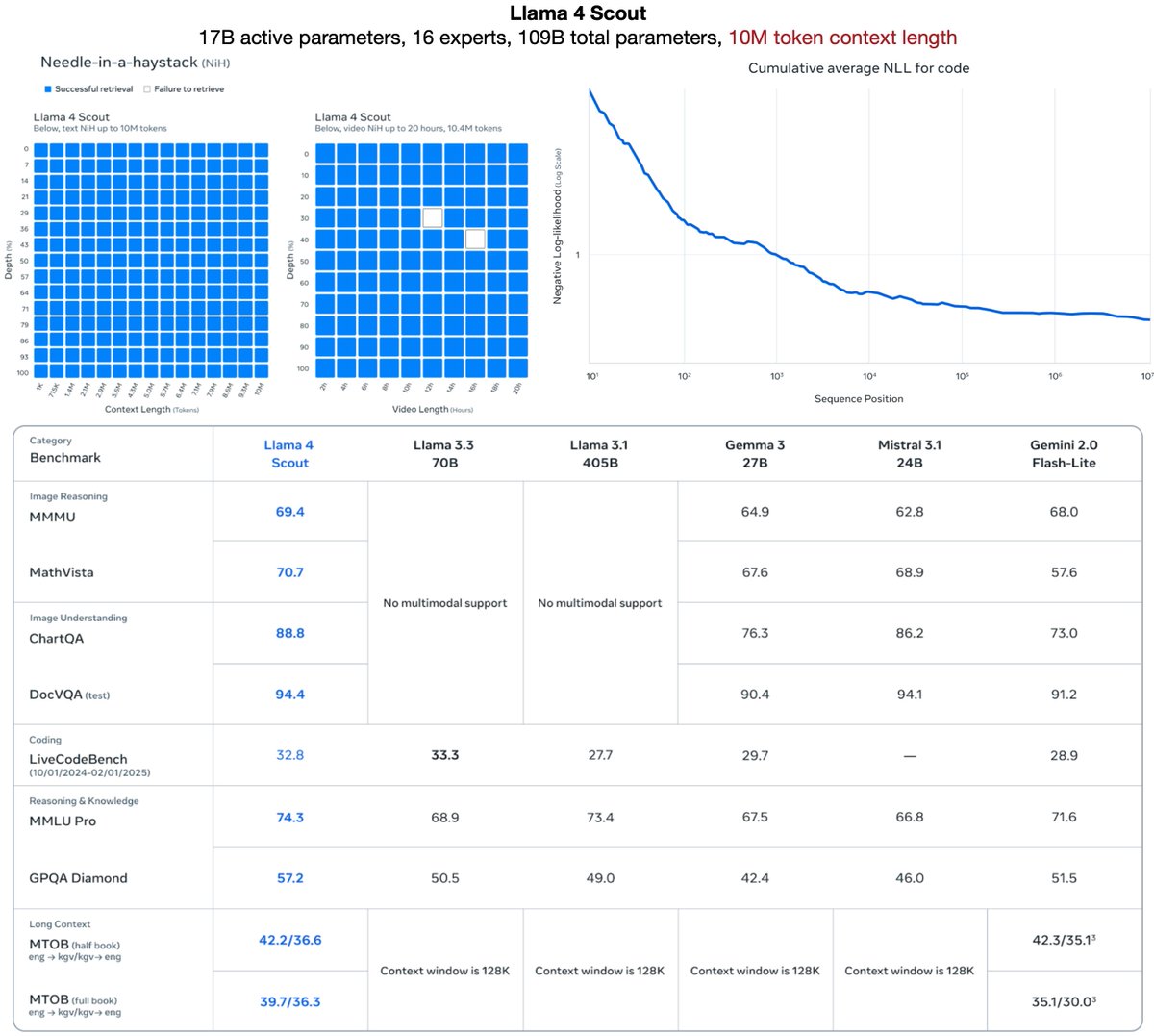

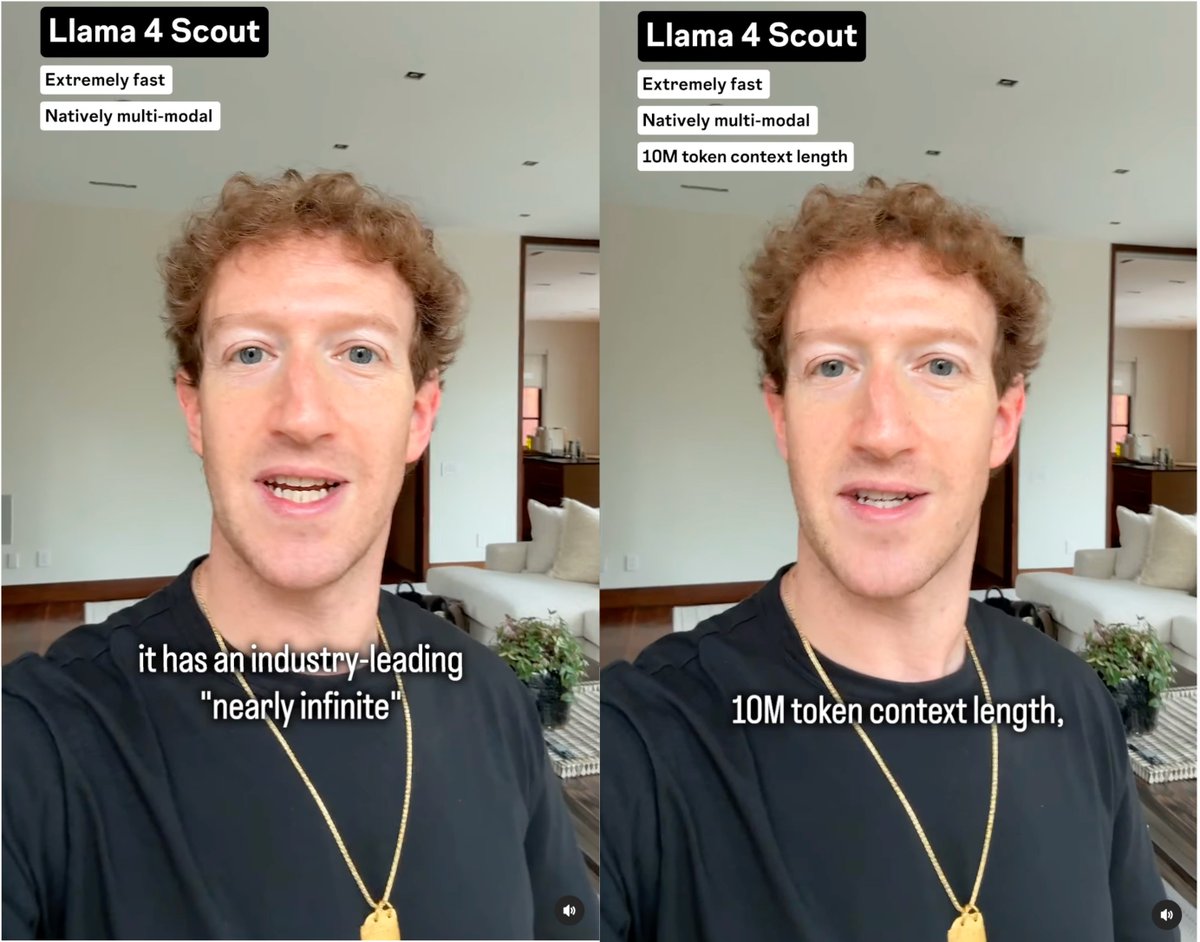

Our Llama 4’s industry-leading 10M+ multimodal context length (20+ hours of video) has been a wild ride. The iRoPE architecture I’d been working on helped a bit with the long-term goal of infinite context on the path toward AGI. Huge thanks to my incredible teammates! 🚀Llama 4 Scout 🔹17B…

Apple and Meta have published a monstrously elegant compression method that encodes model weights using pseudo-random seeds. The trick is to approximate model weights as a linear combination of the columns of a randomly generated matrix (reproducible from a fixed seed), with the coefficients stored in a small vector t.
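A minimal sketch of the seed-based idea described above, under loudly stated assumptions: a block of weights is treated as a flat vector `w`; Python's `random.Random` stands in for the pseudo-random generator (the published method may use a different, hardware-friendly generator); the coefficient vector `t` is fitted by ordinary least squares and left unquantized; and the seed is found by brute-force search over a small range. All function names here are hypothetical. Only the seed and `t` need to be stored, because the matrix can be regenerated on the fly.

```python
import random

def generate_basis(seed, rows, cols):
    """Pseudo-random matrix U (rows x cols), reproducible from an integer seed.
    Assumption: Python's Mersenne Twister, not the generator used in the paper."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def solve_least_squares(U, w):
    """Return t minimizing ||U t - w||^2 via the normal equations (U^T U) t = U^T w."""
    n, k = len(U), len(U[0])
    # Augmented matrix [U^T U | U^T w], one row per unknown coefficient.
    A = [[sum(U[r][i] * U[r][j] for r in range(n)) for j in range(k)]
         + [sum(U[r][i] * w[r] for r in range(n))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

def compress_block(w, num_seeds, k):
    """Try seeds 0..num_seeds-1 and keep the (seed, t) pair with the lowest squared error."""
    best = None
    for seed in range(num_seeds):
        U = generate_basis(seed, len(w), k)
        t = solve_least_squares(U, w)
        approx = [sum(U[r][j] * t[j] for j in range(k)) for r in range(len(w))]
        err = sum((a - b) ** 2 for a, b in zip(approx, w))
        if best is None or err < best[0]:
            best = (err, seed, t)
    return best[1], best[2]

def decompress_block(seed, t, block_size):
    """Regenerate U from the stored seed and rebuild the weights as U t."""
    U = generate_basis(seed, block_size, len(t))
    return [sum(U[r][j] * t[j] for j in range(len(t))) for r in range(block_size)]

# Usage: 8 weights are stored as one integer seed plus 3 coefficients.
weights = [0.12, -0.40, 0.33, 0.05, -0.21, 0.18, 0.27, -0.09]
seed, t = compress_block(weights, num_seeds=256, k=3)
restored = decompress_block(seed, t, len(weights))
```

The design trade-off in this sketch: a larger seed range or a larger `k` lowers the reconstruction error but costs more search time or more stored coefficients.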

elegant

