Gerstner Lab

@compneuro_epfl

The Laboratory of Computational Neuroscience @EPFL_en studies models of #neurons, #networks of #neurons, #synapticplasticity, and #learning in the brain.

Our latest results (with @nickyclayton22) are now out in @NatureComms: doi.org/10.1038/s41467… 🥳 We propose a model of *28* behavioral experiments with food-caching jays using a *single* neural network equipped with episodic-like memory and 3-factor RL plasticity rules. 1/6

The LCN is gradually moving away from X. You can follow our most recent news on our new account on BlueSky at gerstnerlab.bsky.social

If you're at #foragingconference2024, come check out our poster (#60) with @modirshanechi and @compneuro_epfl today! Using a unified computational framework and two open-access datasets, we show how novelty and novelty-guided behaviors are influenced by stimulus similarities 😊🤩

I'm thrilled to share that I was recently awarded the @EPFL_en Dimitris N. Chorafas Foundation Award for my Ph.D. thesis, "Seeking the new, learning from the unexpected: Computational models of #surprise and #novelty in the #brain." Award news: actu.epfl.ch/news/dimitris-…

Episode #22 in #TheoreticalNeurosciencePodcast: On 50 years with the Hopfield network model - with Wulfram Gerstner @compneuro_epfl theoreticalneuroscience.no/thn22 John Hopfield received the 2024 Physics Nobel prize for his model published in 1982. What is the model all about?

📢 I'm on the faculty job market this year! My research explores the foundations of deep learning and analyzes learning and feature geometry for Gaussian inputs. I detail my major contributions👇Retweet if you find it interesting and help me spread the word! DM is open. 1/n

🚨Preprint alert🚨 In an amazing collaboration with @GruazL53069, @sobeckerneuro, & J Brea, we explored a major puzzle in neuroscience & psychology: *What are the merits of curiosity⁉️* osf.io/preprints/psya… 1/7

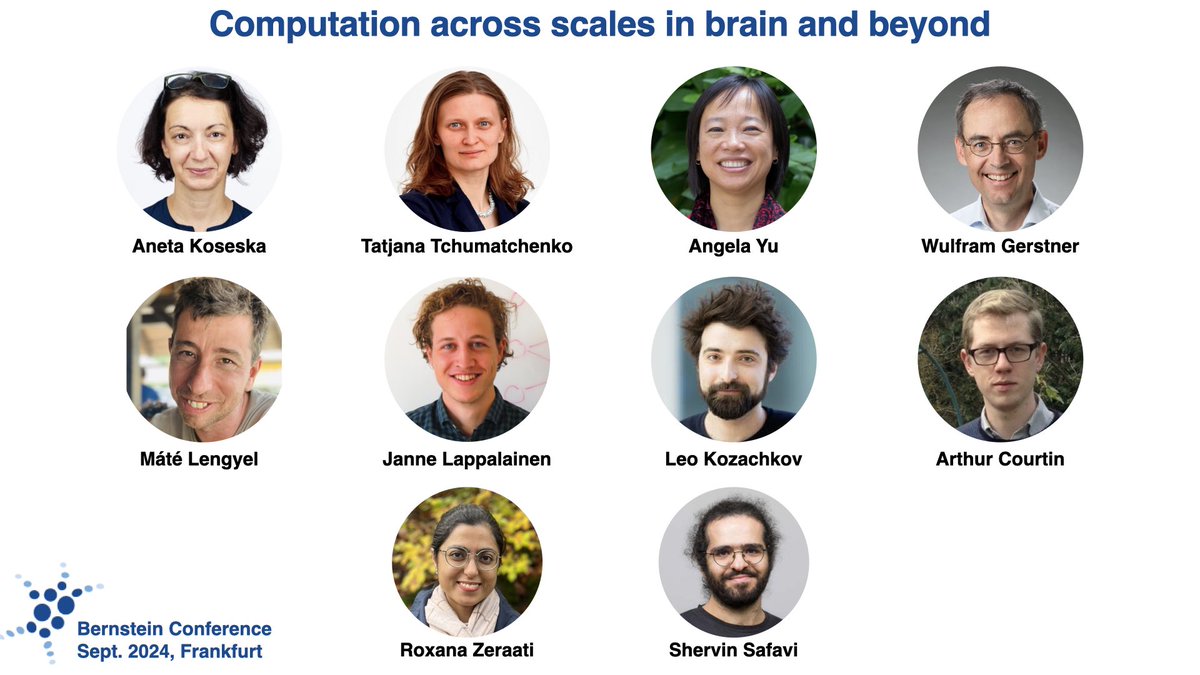

Headed to the @BernsteinNeuro Conference this weekend and interested in how biological computation is performed across different scales, from single neurons to populations and whole brains, and even astrocytes and the whole body? Drop by our workshop co-organized w/ @neuroprinciples

1. Synaptic weight scaling in O(1/N) self-induces a form of (implicit) spatial structure in networks of spiking neurons, as the number of neurons N tends to infinity. This is what D.T. Zhou, P.-E. Jabin and I prove in arxiv.org/abs/2409.06325.

Next Monday, I'll present how we exploit symmetries to identify weights of a black-box network to the EfficientML reading group 📒 Have a look if interested in Expand-and-Cluster: sites.google.com/view/efficient… Thanks @osaukh for the invite!

📕Recovering network weights from a set of input-output neural activations 👀 Ever wondered if this is even possible? 🤔 Check out Expand-and-Cluster, our latest paper at #ICML2024! Thu. 11:30 #2713 proceedings.mlr.press/v235/martinell… A thread 🧵 ⚠️ Loss landscape and symmetries ahead ⚠️

And it's a book! Together with @okaysteve, we have gathered some of the leading experts in the field who have generously contributed with a chapter of what has become the first ever book on #engram biology! 📖🔥🧠Come take a look! ⬇️⬇️⬇️ link.springer.com/book/10.1007/9…

Approximation-free training method for deep SNNs using time-to-first-spike coding.

Today in @NatureComms. 📝 Open puzzle: training event-based spiking neurons is mysteriously impossible. @Ana__Stan 👩🏻🔬 shows it becomes possible using the theoretical equivalence between a ReLU CNN and an event-based CNN. Congrats! 🧵 nature.com/articles/s4146…

Excited to share a blog post on our recent work (arxiv.org/abs/2311.01644) on neural network distillation bsimsek.com/post/copy-aver… If you liked toy models of superposition or pizza and clock papers, you might enjoy reading this blog post!

Normative theories show that a surprise signal is necessary to speed up learning after an abrupt change in the environment; but how can such a speed-up be implemented in the brain? 🧠 We make a proposition in our new paper in @PLOSCompBiol. doi.org/10.1371/journa…

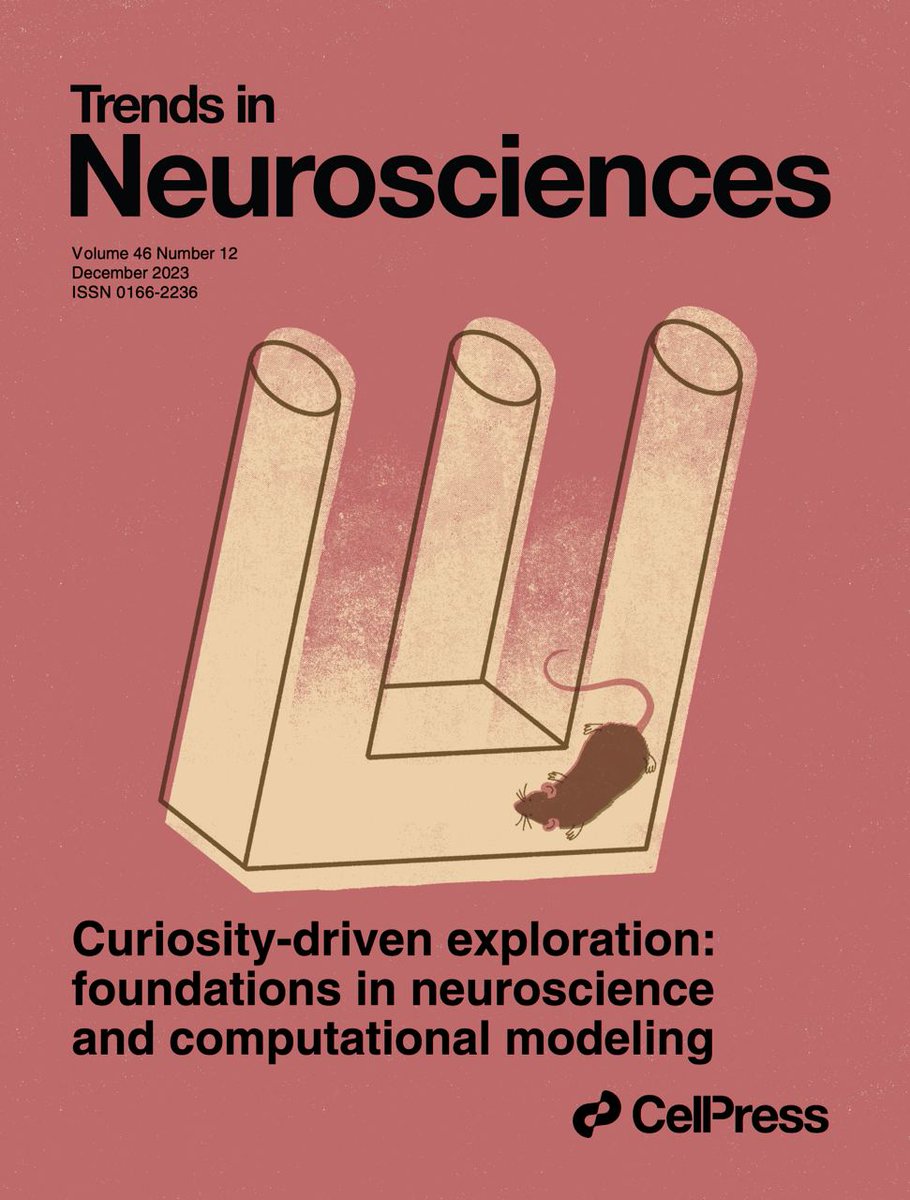

What do we talk about when we talk about "curiosity"? 🤔 In our new paper in @TrendsNeuro (with @KacperKond, @compneuro_epfl & @sebhaesler), we address this question by reviewing the behavioral signatures, neural mechanisms, and comp. models of curiosity: doi.org/10.1016/j.tins…

Excited that our new position piece is out! In this article, @summerfieldlab and I review three recent advances in using deep RL to model cognitive flexibility, a hallmark of human cognition: sciencedirect.com/science/articl… (1/4)

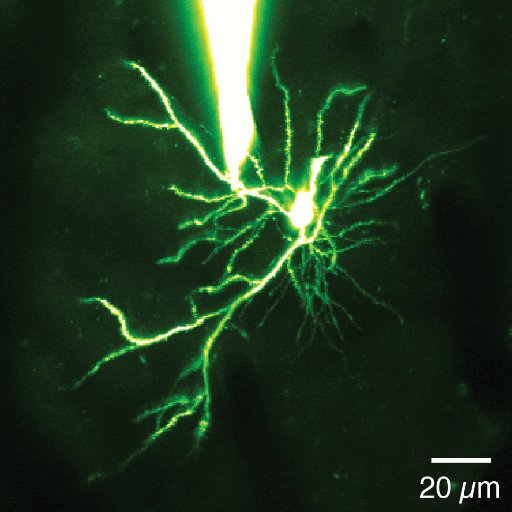

Intriguing new paper from the Gerstner lab proposes a theory for sparse coding and synaptic plasticity in cortical networks to overcome spurious input correlations. doi.org/10.1371/journa…

Most methods of sparse coding or ICA assume the 'pre-whitening' of inputs. @cstein06 shows that this is not necessary with a smart local Hebbian learning rule and ReLU neurons! Paper just out in @PLOSCompBiol: doi.org/10.1371/journa…
