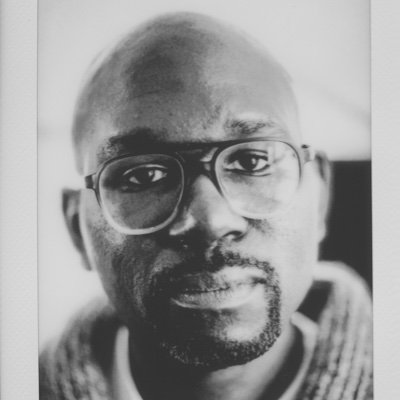

Danny Wilf-Townsend

@drmtown

Associate Professor of Law @GeorgetownLaw, thinking, writing, and teaching about civil procedure, consumer protection, and artificial intelligence.


Happy to see a cameo here from one of my favorite tests in all of the law: whether a procedural rule is really a procedural rule depends on whether it "really regulates procedure."

First big civ pro case of the term is out—Berk v. Choy. What's more fun than the Erie Doctrine?

I’m good at using the internet, and better than most people at extracting value from AI. This is despite the fact that I grew up when none of this existed. The idea that we have to teach kids today’s technology so that they’re good with future technology is obviously nonsense.

Regulating static AI models is already difficult and if AI tools will become ones that can learn, regulations will need to adapt quickly. @drmtown explores what new regulatory approaches could look like in a future where change is common and comes fast.

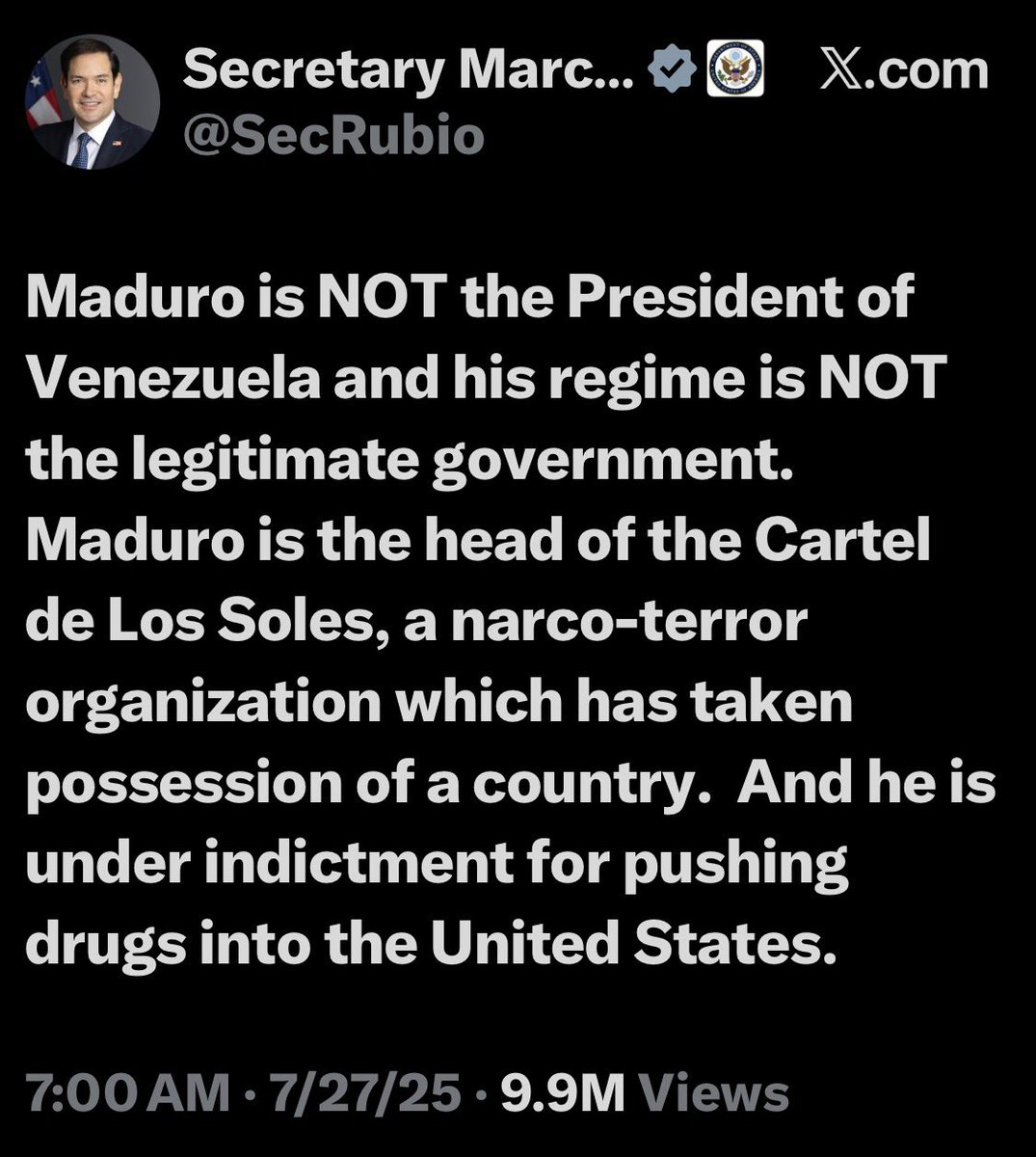

Maduro will likely claim head-of-state immunity when he is prosecuted in SDNY. Rubio is previewing the government's response, which is that it does not recognize Maduro as the legitimate head of state of Venezuela, an executive branch determination that courts may very well defer to.

Interestingly, I think the information environment around capabilities is bad in law, but in basically the opposite direction from how it seems to be bad in math. There are lots of posts and news stories about hallucinated cases, while lawyers are not really incentivized to post about successes.

It's good for academics to publicly experiment with new AI tools, but important to report both successes and failures when doing so. Audience capture incentivizes only doing one or the other, which is part of the reason the information environment around capabilities is so bad.

In the late summer and fall, GPT-5 (and then 5.1 and 5.2) launched. The GPT-5/5.1/5.2 Pro model seemed like another step change, and I again spent a lot of time with it. It could provide real complements to my expertise and work through long and complicated lines of reasoning.

I have a new post out in Lawfare today about continual learning, the goal of many model developers to build AI tools that can learn from their users. That technology could have many uses, but also will challenge existing ways we are trying to regulate AI.

I avoided reading this for a while because I read about AI for many hours a week already, and who needs another NYT Op Ed on it. But I succumbed after enough recommendations, and I have to say, I'm glad I did—it's a great read. nytimes.com/2025/12/03/mag…

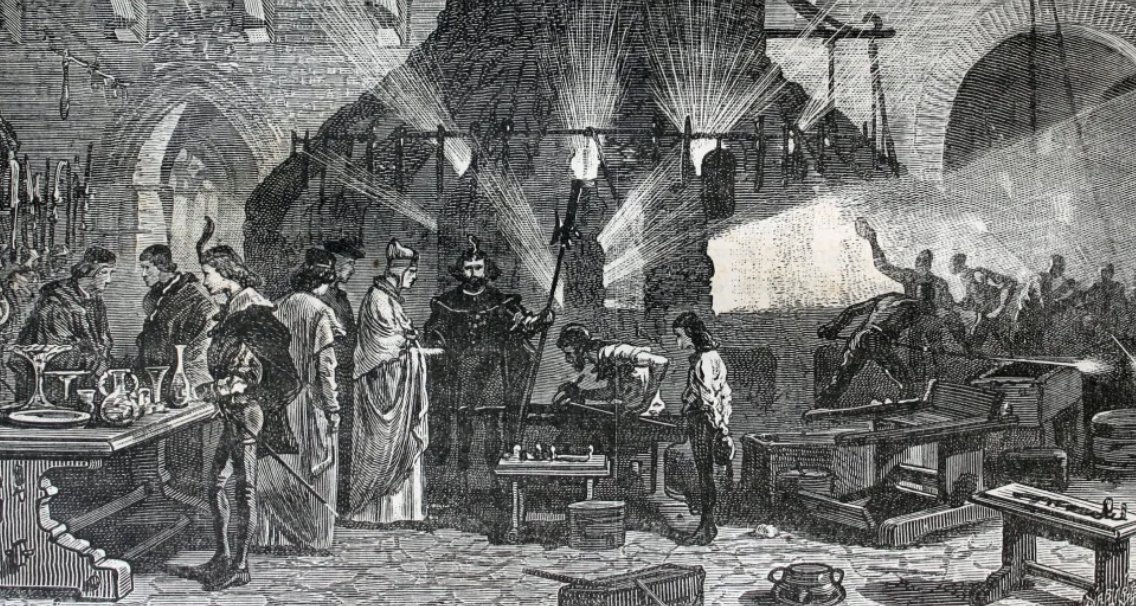

At the #Neurips2025 mechanistic interpretability workshop I gave a brief talk about Venetian glassmaking, since I think we face a similar moment in AI research today. Here is a blog post summarizing the talk: davidbau.com/archives/2025/…

this is such a wild media story and just the latest reminder that we’re going to need so many new ways of verifying what’s real and not with AI thelocal.to/investigating-…


