
🚀 I'm teaching Artificial Neural Networks (ANN) from the recently updated chapter of my free, online e-book: "Applied Machine Learning in Python" 📷 I'm really excited about this update — I’ve focused on maximum accessibility by walking through everything step-by-step:…

Crush’s agentic fetch tool is cool: it reduces context for the main agent and becomes a whole mini web search situation ✦*⋆˚。⋆

## Lists outside, Detail inside

One fun discovery I've had using Cursor 2.0 is that the Agents view and the Editor view are not actually that different if you happen to be #rightsidebar gang. I've been #rightsidebar since @shanselman first talked about it many moons ago and…

First try of the new Cursor Composer model (btw, I'm still a DAU of Cursor! @smol_ai is entirely a Cursor vibecode). One impressive example: Composer 1 finished 2 rounds of human feedback and debugging with me and got me what I wanted, while Sonnet 4.5 was still working on its…

Yes, Aether is a big-lab model; no, it's not a Llama. One thing I learned from the inside is that every code-IDE model string is a combination of a model with prompts and tool calls for that model. So no, you won't feel like it's “raw”, matching what you'd find in the normal chat.…

Copy-pasting PyTorch code is fast, and using an AI coding model is even faster, but both skip the learning. That's why I asked my students to write it by hand ✍️. 🔽 Download: byhand.ai/pytorch. After the exercise, my students can understand what every line really does and…

Lecture notes: "Introduction to Bilevel Optimization: A perspective from Variational Analysis" (by David Salas): arxiv.org/abs/2511.05793

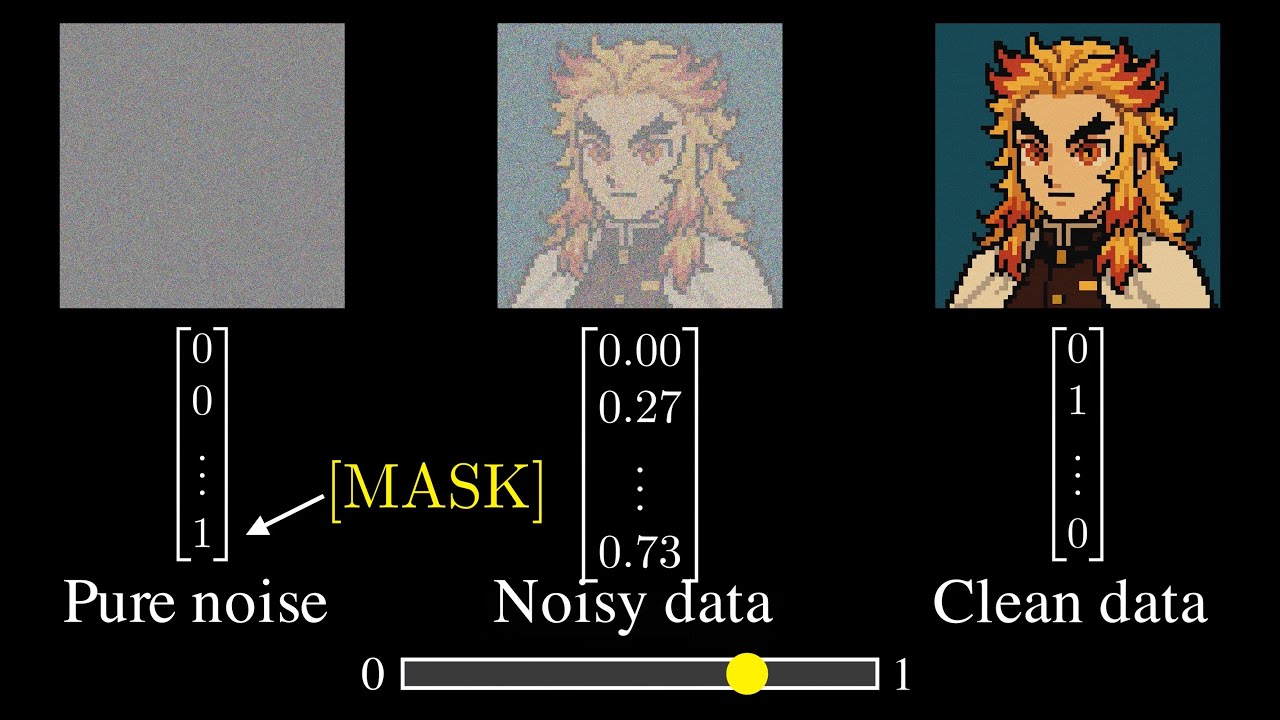

Diffusion language models are making a splash (again)! To learn more about this fascinating topic, check out ⏩ my video tutorial (and the references therein): youtu.be/8BTOoc0yDVA ⏩ the discrete diffusion reading group: @diffusion_llms

YouTube video: “Diffusion Language Models: The Next Big Shift in GenAI”

There are plenty of valid things to critique about Rust, but the anti-Rust takes I see on this site are so weird and lowbrow: totally detached from what it's actually like to write Rust day in, day out (and from why it's been so successful).

As always, all material for the course is on boazbk.github.io/mltheorysemina…

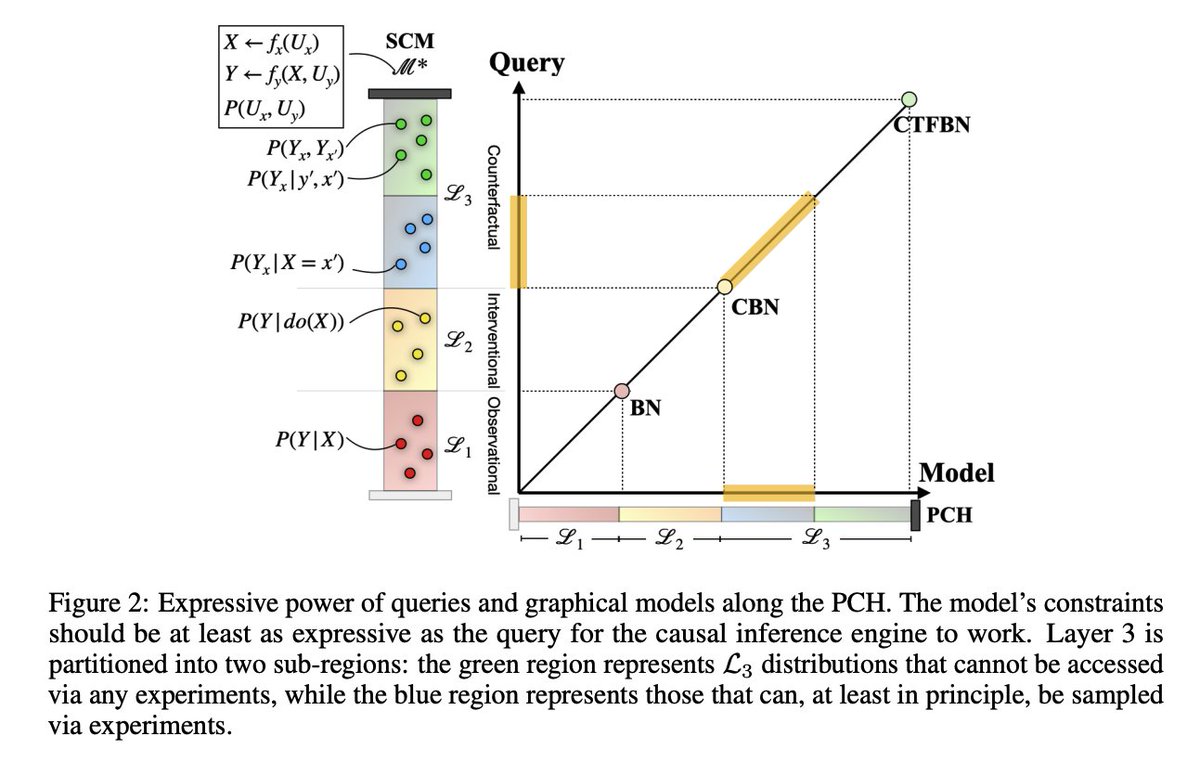

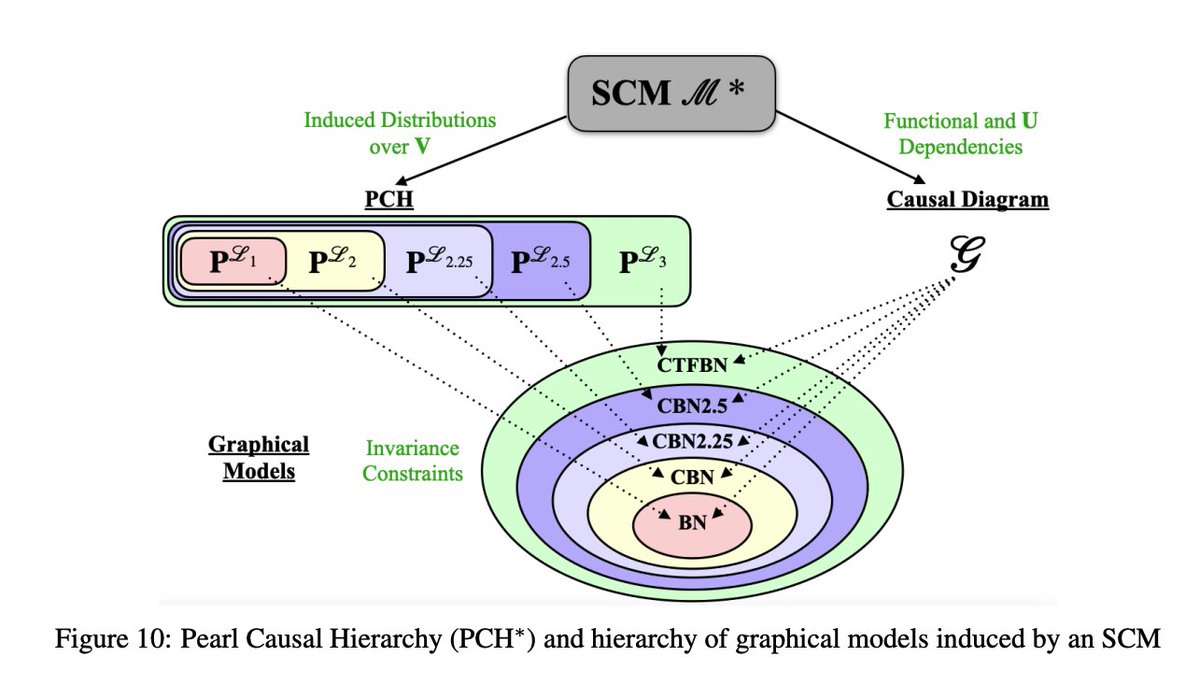

The second point made by @eliasbareinboim is important. We rarely have a completely specified SCM in hand. The purpose of Causal Analysis is to tell us whether the little we know about the SCM behind the data is sufficient for estimating our query of interest. Luckily, it…

Hi Boris, thank you for the note -- I’d just like to clarify two small points that often cause confusion. Regarding your first point, this is not rhetorical or anyone’s opinion -- it’s a proposition known as the Causal Hierarchy Theorem (CHT), first stated as Theorem 1 in the…

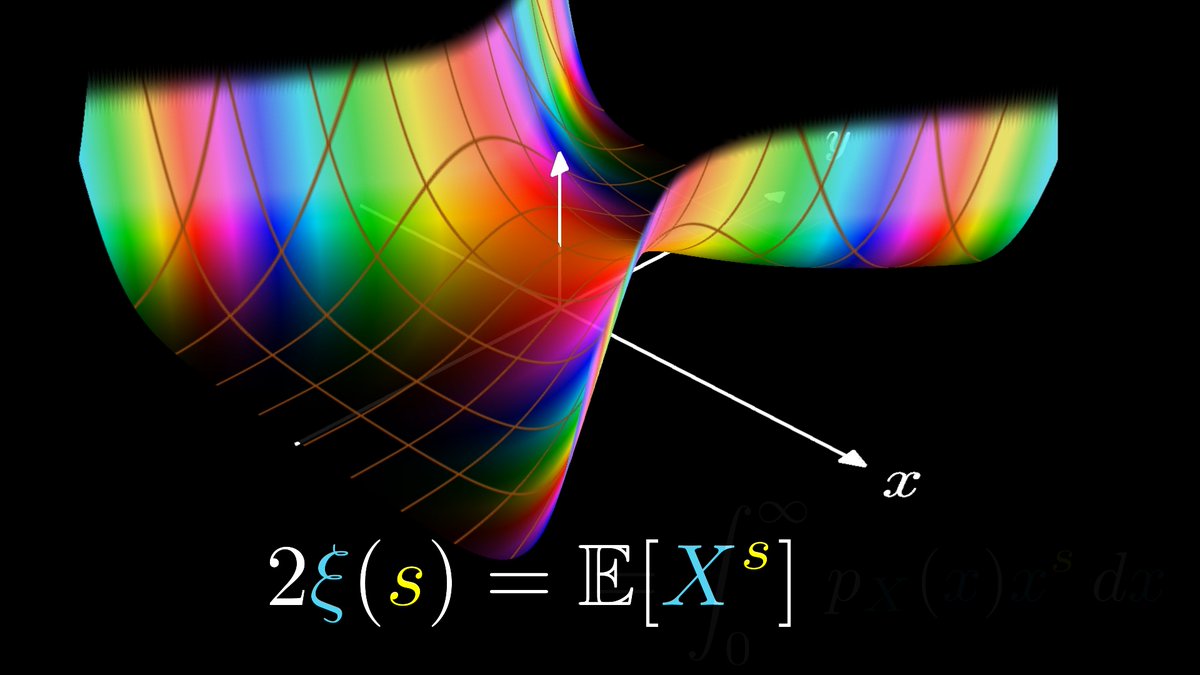

It's been a while since my last YT video upload. Nothing is happening; I am busy working on the next one. It's just taking longer than expected (I've been very busy in recent weekends). It will be on connections between the Riemann zeta function and Brownian motion. ETA: a few days to a week.

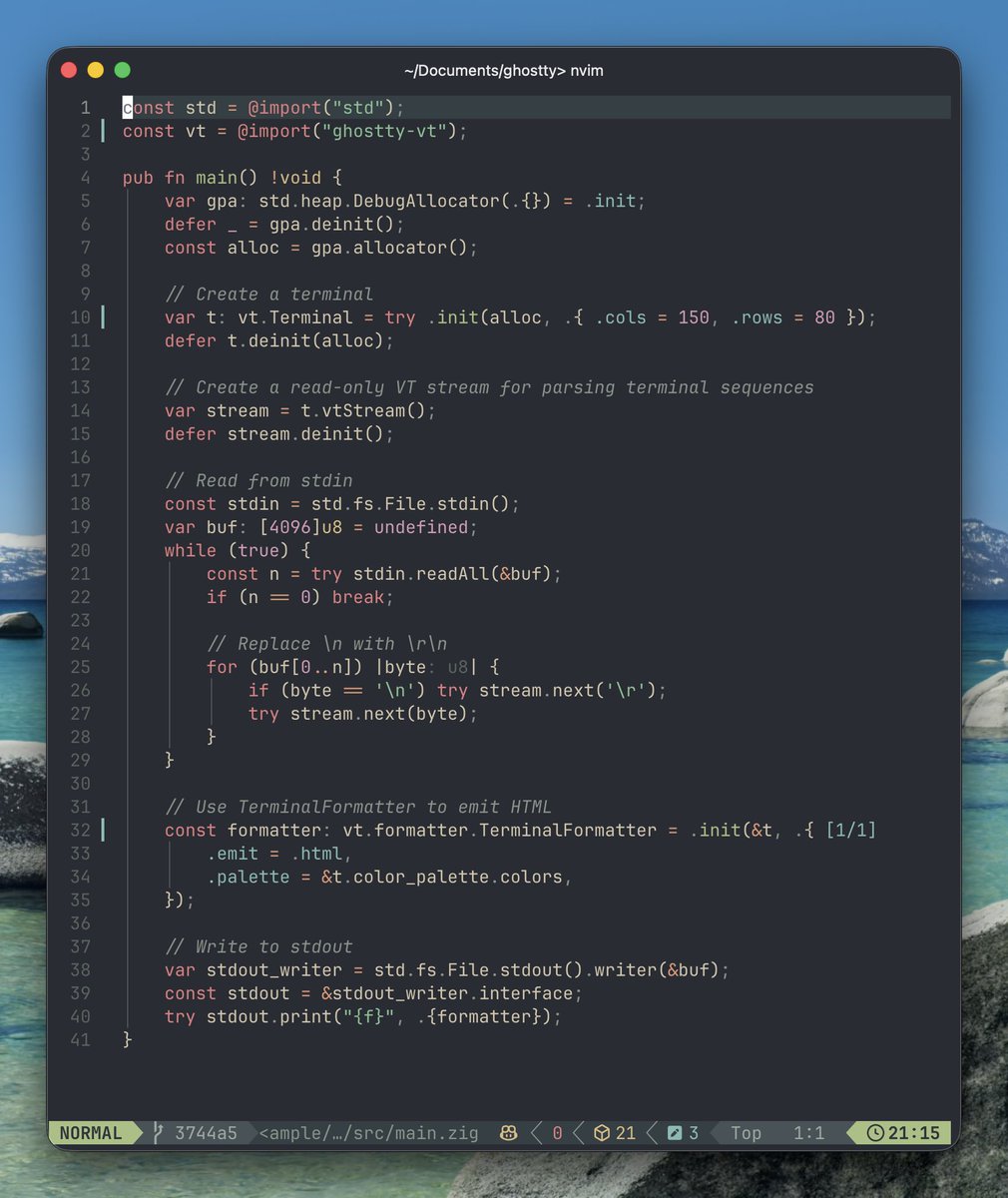

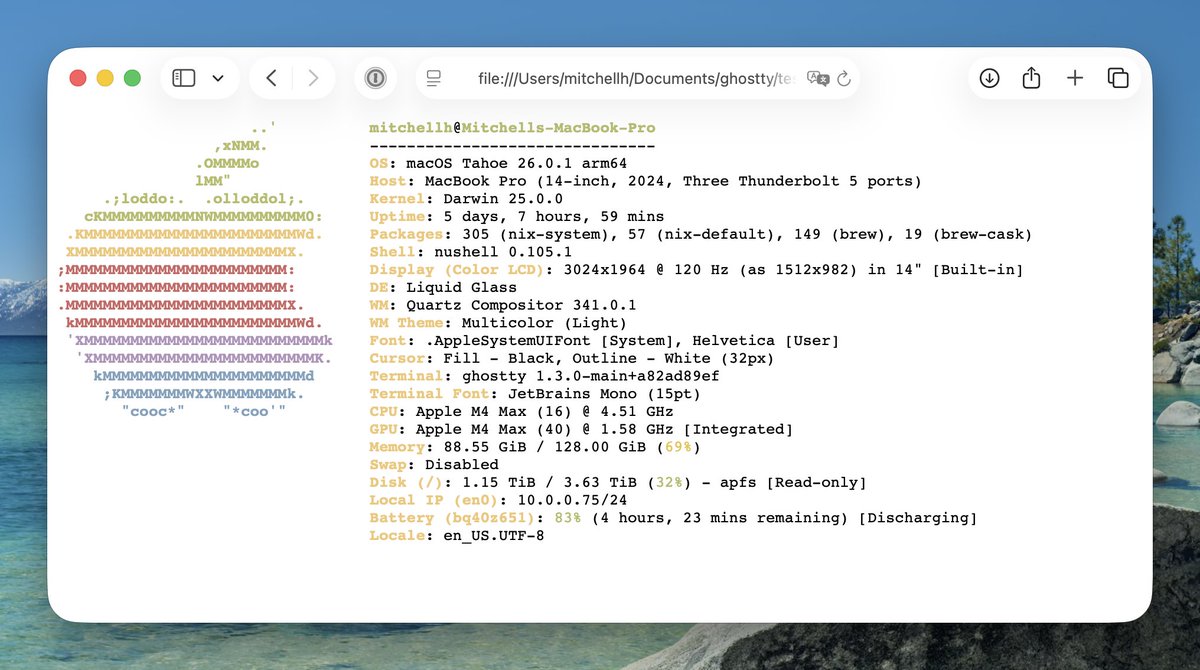

libghostty can now encode terminal contents as HTML. On the left is an example program that reads stdin, loads it into a terminal, and encodes it to HTML. On the right is an example of `fastfetch` output encoded to HTML and shown in the browser. Coming soon to Ghostty clipboard ops, automatically.

Two reasons why 0 × ∞ = 0 when doing measure theory:

1) Product of measures μ, ν: μ×ν(A×B) = μ(A)ν(B) holds even when A or B has infinite measure (e.g., if μ(A) = 0 and ν(B) = ∞, then μ×ν(A×B) = 0).
2) Expected values/integrals: functions can take infinite values (e.g., the number of rolls needed to get a 6), but only with zero probability, so those values cancel out and contribute nothing.

0 × ∞ = 0: e.g., a line has width 0, length ∞, and area 0.
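To make the convention concrete, here is a minimal Python sketch. The helper `measure_mul` is hypothetical and written only for illustration. It contrasts IEEE-754 floating point, where `0.0 * inf` is `nan`, with the measure-theory rule, and then applies the rule to the dice example: the number of rolls needed to get a 6 is infinite only on an event of probability zero, so that event adds ∞ × 0 = 0 to the expectation.

```python
import math


def measure_mul(a: float, b: float) -> float:
    """Multiply two values in [0, inf] with the measure-theory convention 0 * inf = 0.

    Hypothetical helper for illustration: IEEE-754 floats return nan for 0 * inf,
    so the zero case is handled before the ordinary product.
    """
    if a == 0 or b == 0:
        return 0.0
    return a * b


# A line in the plane: width 0 times length inf gives area 0.
print(math.inf * 0.0)              # nan under IEEE-754 float rules
print(measure_mul(0.0, math.inf))  # 0.0 under the measure-theory convention

# Expected number of rolls of a fair die until the first 6.
# P(N = n) = (5/6)^(n-1) * (1/6); the event {N = inf} has probability 0,
# so it contributes measure_mul(inf, 0) = 0 and E[N] is the geometric sum.
p = 1 / 6
finite_part = sum(n * (1 - p) ** (n - 1) * p for n in range(1, 2000))
expected_rolls = finite_part + measure_mul(math.inf, 0.0)
print(round(expected_rolls, 9))    # 6.0
```

The truncation at 2000 terms is an arbitrary illustrative choice; the neglected tail is far below floating-point precision.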

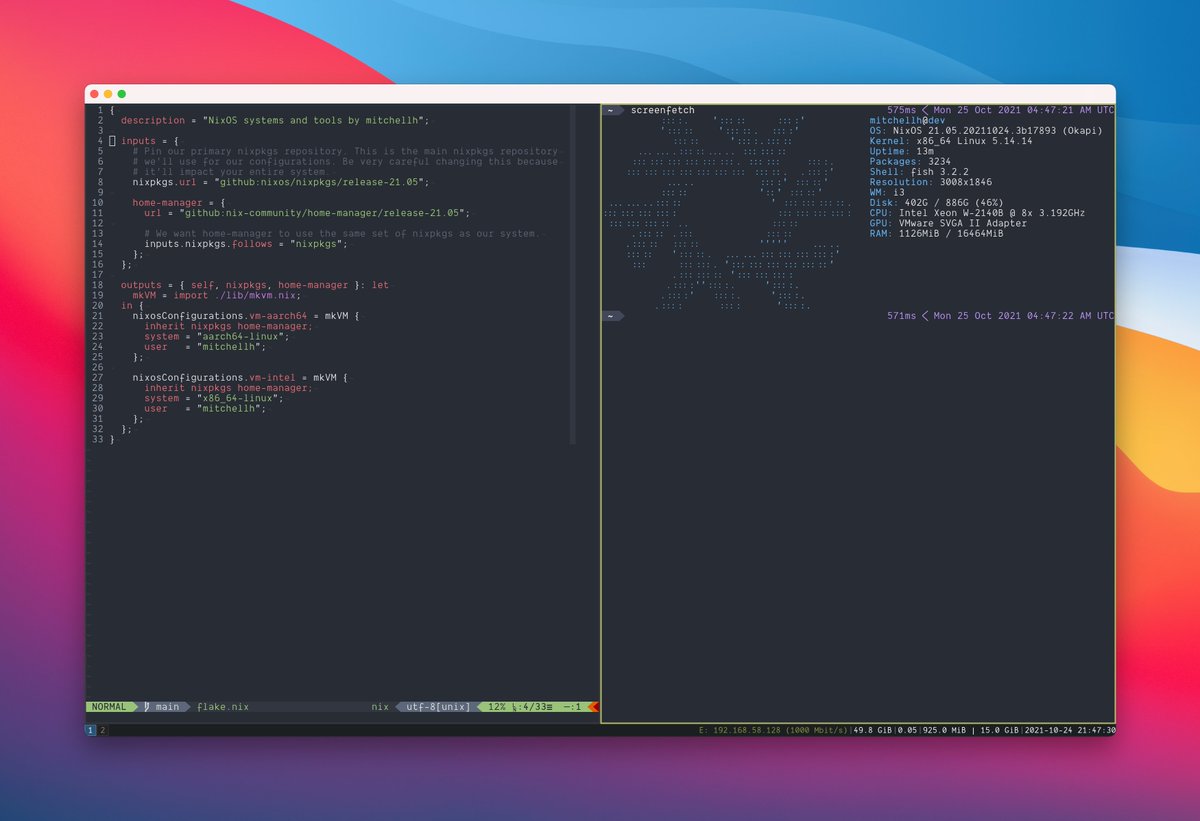

My NixOS configurations for my dev VM setup are finally sanitized and open source. They work for both Intel and Apple Silicon. And I put together a video showing how I set up a new machine! Repo: github.com/mitchellh/nixo… Video: youtube.com/watch?v=ubDMLo…

AI Mode, our most powerful AI Search, is now available right from the Chrome address bar, allowing users to ask complex, multi-part questions, from the same place they already search and browse the web. We're also launching contextual search suggestions in the Chrome address bar…

Let X = exp(A²/2) and Y = exp(B²/2), where A, B are jointly normal with mean 0, variance 1, and correlation ρ, with 0 < ρ < 1. Then,

E[X|Y] = Y/ρ > Y

E[Y|X] = X/ρ > X

So X is greater than Y 'on average', and Y is greater than X on average. Here's a proof of that
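One way to check the claim is sketched below. This is a standard derivation, not necessarily the proof the author posted. Condition on B, use the Gaussian conditional distribution of A given B = b, and complete the square with ∫ e^{−c a² + d a} da = √(π/c) e^{d²/(4c)}, where c = ρ²/(2σ²) and d = ρb/σ². Conditioning on Y = e^{B²/2} only reveals |B|, but the result depends on b only through b², so the same formula gives E[X|Y].

```latex
\begin{aligned}
&A \mid B = b \;\sim\; \mathcal{N}\!\left(\rho b,\; \sigma^2\right), \qquad \sigma^2 := 1-\rho^2, \\
\mathbb{E}[X \mid B = b]
&= \frac{1}{\sigma\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-\frac{(a-\rho b)^2}{2\sigma^2}}\, e^{a^2/2}\, da \\
&= \frac{1}{\sigma\sqrt{2\pi}} \int_{-\infty}^{\infty}
   \exp\!\left(-\frac{\rho^2}{2\sigma^2}\, a^2 + \frac{\rho b}{\sigma^2}\, a - \frac{\rho^2 b^2}{2\sigma^2}\right) da \\
&= \frac{1}{\sigma\sqrt{2\pi}} \cdot \frac{\sigma\sqrt{2\pi}}{\rho}
   \exp\!\left(\frac{b^2}{2\sigma^2} - \frac{\rho^2 b^2}{2\sigma^2}\right) \\
&= \frac{1}{\rho}\, e^{b^2/2} \;=\; \frac{Y}{\rho}.
\end{aligned}
```

By symmetry, E[Y|X] = X/ρ. There is no contradiction in each being larger "on average" because E[X] = E[Y] = ∞: the factor e^{b²/2} exactly cancels the standard normal density, so E[Y] = ∫ (2π)^{−1/2} db diverges.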

Let X = exp(U²/2) and Y = exp(V²/2), where U, V are jointly normal with mean 0, variance 1, and 50% correlation. Then,

E[X|Y] = 2Y

E[Y|X] = 2X

They are each, on average, twice as big as the other!

![Almost_Sure's tweet image](https://pbs.twimg.com/media/FxiKMQfX0AINL2A.jpg)
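For a numerical sanity check of the ρ = 0.5 case, the sketch below uses only the Python standard library. The function name `cond_mean_exp_half_square`, the integration window, and the step count are illustrative choices, not from the tweet. It integrates E[exp(A²/2) | B = b] with the trapezoidal rule, using the fact that A given B = b is normal with mean ρb and variance 1 − ρ², and compares the result with Y/ρ = e^{b²/2}/ρ.

```python
import math


def cond_mean_exp_half_square(b: float, rho: float,
                              half_width: float = 60.0, n: int = 20_000) -> float:
    """Numerically estimate E[exp(A^2/2) | B = b] for standard jointly normal A, B
    with correlation rho (0 < rho < 1), using A | B=b ~ N(rho*b, 1 - rho^2)
    and the trapezoidal rule on [rho*b - half_width, rho*b + half_width]."""
    mu = rho * b
    var = 1.0 - rho * rho
    norm = 1.0 / math.sqrt(2.0 * math.pi * var)
    lo = mu - half_width
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        a = lo + i * h
        # Add the exponents first so exp(a^2 / 2) never overflows on its own.
        term = norm * math.exp(-(a - mu) ** 2 / (2.0 * var) + a * a / 2.0)
        # Trapezoidal rule: endpoints get half weight.
        total += term / 2.0 if i in (0, n) else term
    return total * h


for rho in (0.5, 0.8):
    for b in (0.0, 1.0, 2.0):
        numeric = cond_mean_exp_half_square(b, rho)
        closed_form = math.exp(b * b / 2.0) / rho  # Y / rho with Y = exp(b^2/2)
        print(f"rho={rho}, b={b}: numeric={numeric:.6f}, Y/rho={closed_form:.6f}")
```

Once the two exponents are combined, the integrand decays like a Gaussian for any 0 < ρ < 1, so a fixed wide window and the plain trapezoidal rule are enough here; a heavier-tailed integrand would need more careful quadrature.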

The usual confusion about "reasoning" is the process vs. product confusion. In particular, the following are both true:

- LLMs/LRMs can correctly (and usefully) answer problems that would normally require a "reasoning process"
- LLMs/LRMs don't necessarily use what might be…

Usually I am on team “current AI models are so smart, bro you have no idea”, but in this case I think @MLStreetTalk is right (echoing @GaryMarcus, @rao2z, etc.): current AI models *are* still oddly *weak* at “true reasoning” (in the sense of William James), compared to intuition.

.@GroqInc has introduced prompt caching for the SOTA open-source coding model, Kimi-k2. What does this mean for you? Significantly reduced costs with Kimi-k2 on its fastest provider. Check the price: left side without prompt caching, right side with prompt caching.

MUSK: GROK 5 BEGINS TRAINING NEXT MONTH

🚀 We just released Zed v0.200! In a previous release, we added the `--diff` flag to the Zed CLI. Now, you can compare two files directly from the project panel, via `Compare marked files`.
