Juan Diego Rodríguez (he/him)

@juand_r_nlp

CS PhD student at UT Austin in #NLP. Interested in language, reasoning, semantics and cognitive science. You can also find me over at the other site 🦋

COLM Keynote: Nicholas Carlini Are LLMs worth it? youtube.com/watch?v=PngHcm…

I’ll be in Boston attending BUCLD this week — I won’t be presenting but I’ll be cheering on @najoungkim who will present at the prestigious SLD symposium about the awesome work by her group, including our work on LMs as hypotheses generators for language acquisition! 🤠👻

Dear “15-18 yo founder”s sending me DMs, don’t. Go and hug your parents, fall in love, eat chocolate cereal for breakfast, read poetry. Nobody will give you back these years. And sure, do your homework and learn math and code if that feels fun. But stop building SaaS and…

“For a glorious decade in the 2010s, we all worked on better neural network architectures, but after that we just worked on scaling transformers and making them more efficient”

The Computer Science section of @arxiv is now requiring prior peer review for Literature Surveys and Position Papers. Details in a new blog post

236 direct/indirect PhD students!! Based on OpenReview data, an interactive webpage: prakashkagitha.github.io/manningphdtree/ Your course, NLP with Deep learning - Winter 2017, on YouTube, was my introduction to building deep learning models. This is the least I could do to say Thank You!!

Thanks! 😊 But it’d be really good to generate an updated version of those graphs!

I'm super excited to update that "Lost in Automatic Translation" is now available as an audiobook! 🔊📖 It's currently on Audible: audible.ca/pd/B0FXY8VQX5 Stay tuned (lostinautomatictranslation.com) for more retailers, including Amazon, iTunes, etc., and public libraries! 📚

A thread on the equilibria of pendulums and their connection to topology. 1/n

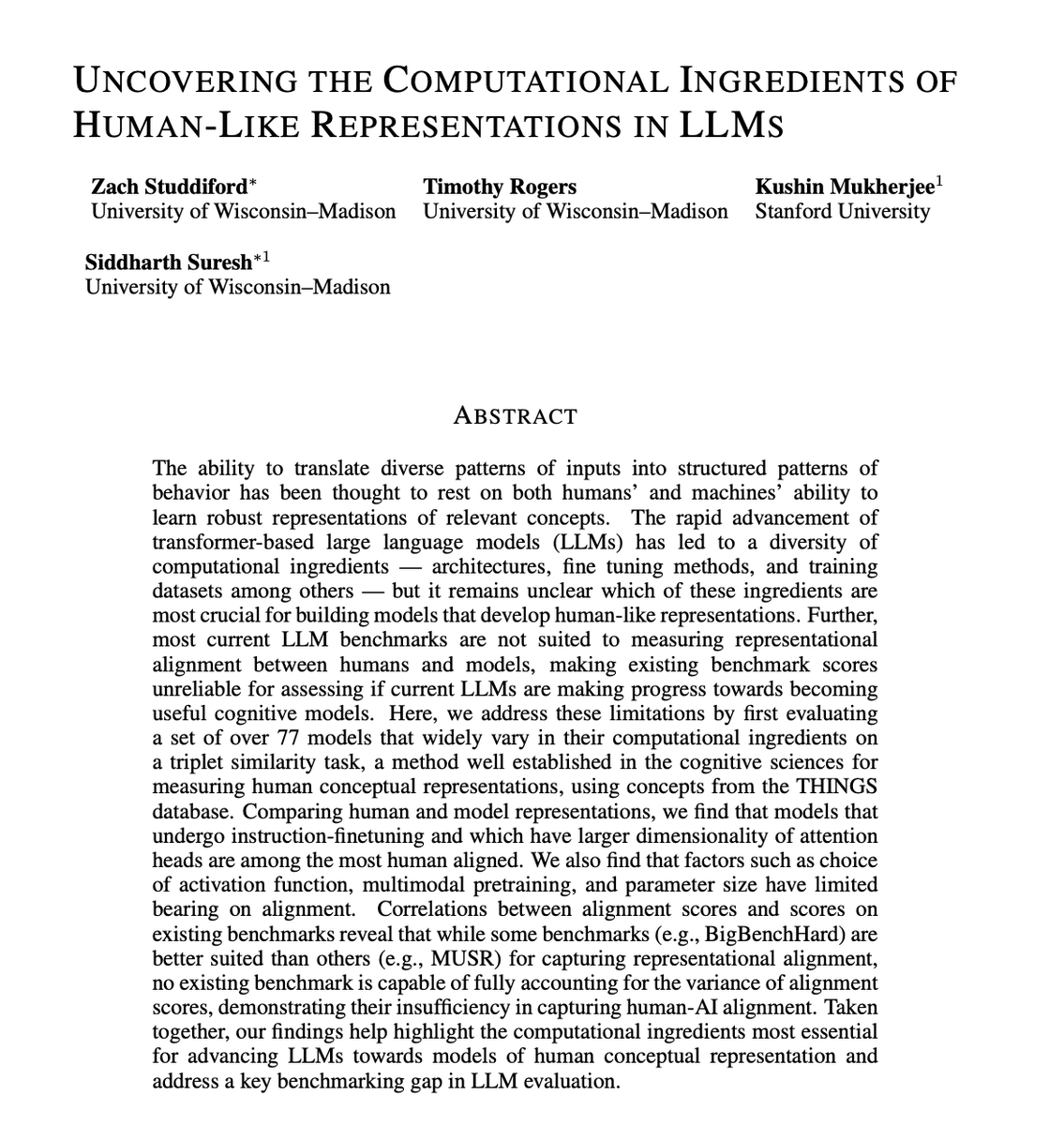

We’re drowning in language models — there are over 2 mil. of them on Huggingface! Can we use some of them to understand which computational ingredients — architecture, scale, post-training, etc. – help us build models that align with human representations? Read on to find out 🧵

one thing that really became clear to me (which admittedly makes me publish much less) is that, especially as academics, "beating the state of the art" is a crap target to aim for. the objective should be to replace the state of the art. (of course, this is unfortunately super…

𝑵𝒆𝒘 𝒃𝒍𝒐𝒈𝒑𝒐𝒔𝒕! In which I give some brief reflections on #COLM2025 and give a rundown of a few great papers I checked out!

I will be giving a short talk on this work at the COLM Interplay workshop on Friday (also to appear at EMNLP)! Will be in Montreal all week and excited to chat about LM interpretability + its interaction with human cognition and ling theory.

A key hypothesis in the history of linguistics is that different constructions share underlying structure. We take advantage of recent advances in mechanistic interpretability to test this hypothesis in Language Models. New work with @kmahowald and @ChrisGPotts! 🧵👇

Happy to announce the first workshop on Pragmatic Reasoning in Language Models — PragLM @ COLM 2025! 🧠🎉 How do LLMs engage in pragmatic reasoning, and what core pragmatic capacities remain beyond their reach? 🌐 sites.google.com/berkeley.edu/p… 📅 Submit by June 23rd

Interested in language models, brains, and concepts? Check out our COLM 2025 🔦 Spotlight paper! (And if you’re at COLM, come hear about it on Tuesday – sessions Spotlight 2 & Poster 2)!

Our paper "ChartMuseum 🖼️" is now accepted to #NeurIPS2025 Datasets and Benchmarks Track! Even the latest models, such as GPT-5 and Gemini-2.5-Pro, still cannot do well on challenging 📉chart understanding questions, especially on those that involve visual reasoning 👀!

Introducing ChartMuseum🖼️, testing visual reasoning with diverse real-world charts! ✍🏻Entirely human-written questions by 13 CS researchers 👀Emphasis on visual reasoning – hard to be verbalized via text CoTs 📉Humans reach 93% but 63% from Gemini-2.5-Pro & 38% from Qwen2.5-72B

Accepted at #NeurIPS2025 -- super proud of Yulu and Dheeraj for leading this! Be on the lookout for more "nuanced yes/no" work from them in the future 👀

Does vision training change how language is represented and used in meaningful ways?🤔 The answer is a nuanced yes! Comparing VLM-LM minimal pairs, we find that while the taxonomic organization of the lexicon is similar, VLMs are better at _deploying_ this knowledge. [1/9]

![yulu_qin's tweet image](https://pbs.twimg.com/media/GwZPQE5WUAUeZkZ.jpg)

You shall know a fascist asshole by the company he keeps... And also what he says.

Elon Musk, "There's got to be a change of government in Britain" "We don't have another four years or whenever your next election is, it's too long, something has got to be done" "There has got to be a dissolution of parliament and a new vote held" "You got to appeal to the…

Introducing ChartMuseum🖼️, testing visual reasoning with diverse real-world charts! ✍🏻Entirely human-written questions by 13 CS researchers 👀Emphasis on visual reasoning – hard to be verbalized via text CoTs 📉Humans reach 93% but 63% from Gemini-2.5-Pro & 38% from Qwen2.5-72B

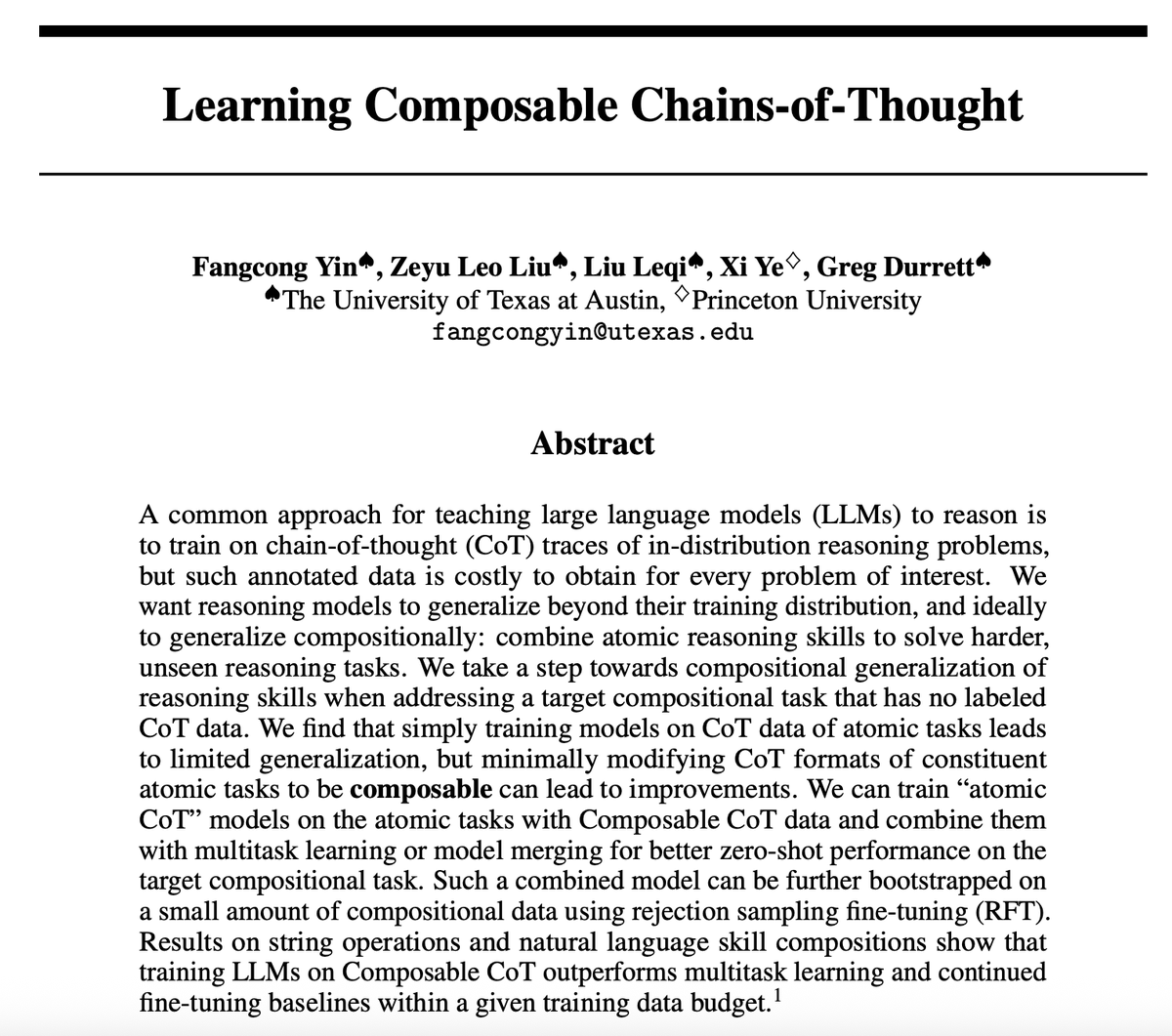

Solving complex problems with CoT requires combining different skills. We can do this by: 🧩 Modifying the CoT data format to be “composable” with other skills 🔥 Training models on each skill 📌 Combining those models. This leads to better 0-shot reasoning on tasks involving skill composition!

Super thrilled that @kanishkamisra is going to join @UT_Linguistics as our newest computational linguistics faculty member -- looking forward to doing great research together! 🧑‍🎓 Students: Kanishka is a GREAT mentor -- apply to be his PhD student in the upcoming cycle!!

News🗞️ I will return to UT Austin as an Assistant Professor of Linguistics this fall, and join its vibrant community of Computational Linguists, NLPers, and Cognitive Scientists!🤘 Excited to develop ideas about linguistic and conceptual generalization! Recruitment details soon

You might like

- Tanya Goyal (@tanyaagoyal)
- Puyuan Peng (@PuyuanPeng)
- Jessy Li (@jessyjli)
- Prasann Singhal (@prasann_singhal)
- Greg Durrett (@gregd_nlp)
- Xi Ye (@xiye_nlp)
- Freda Shi @EMNLP 2025 (@fredahshi)
- Nathan Schneider (@complingy)
- Liyan Tang (@LiyanTang4)
- Shiyue Zhang (@byryuer)
- Fangyuan Xu (@brunchavecmoi)
- Anuj Diwan (@anuj_diwan)
- Isabel Papadimitriou (@isabelpapad)
- Eunsol Choi (@eunsolc)
- Hung-Ting Chen (@hungting_chen)
