Juan Diego Rodríguez (he/him)

@juand_r_nlp

CS PhD student at UT Austin in #NLP. Interested in language, reasoning, semantics, and cognitive science. You can also find me over at the other site 🦋


I will be giving a short talk on this work at the COLM Interplay workshop on Friday (it will also appear at EMNLP)! I will be in Montreal all week and am excited to chat about LM interpretability + its interaction with human cognition and ling theory.

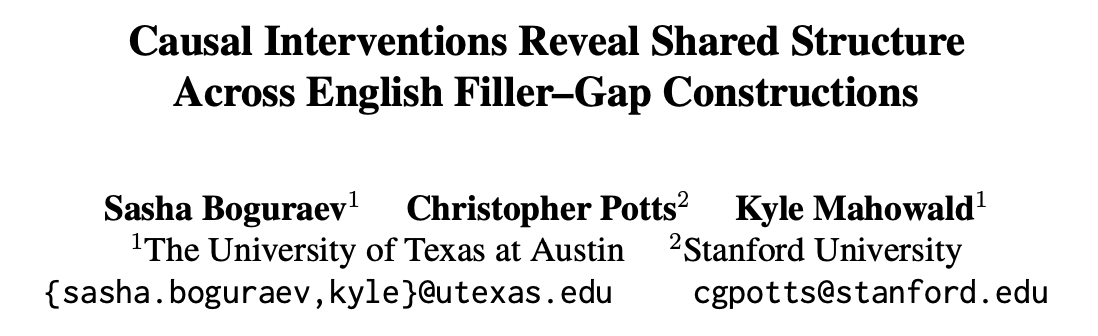

A key hypothesis in the history of linguistics is that different constructions share underlying structure. We take advantage of recent advances in mechanistic interpretability to test this hypothesis in Language Models. New work with @kmahowald and @ChrisGPotts! 🧵👇

Happy to announce the first workshop on Pragmatic Reasoning in Language Models — PragLM @ COLM 2025! 🧠🎉 How do LLMs engage in pragmatic reasoning, and what core pragmatic capacities remain beyond their reach? 🌐 sites.google.com/berkeley.edu/p… 📅 Submit by June 23rd

Interested in language models, brains, and concepts? Check out our COLM 2025 🔦 Spotlight paper! (And if you’re at COLM, come hear about it on Tuesday – sessions Spotlight 2 & Poster 2)!

Our paper "ChartMuseum 🖼️" is now accepted to the #NeurIPS2025 Datasets and Benchmarks Track! Even the latest models, such as GPT-5 and Gemini-2.5-Pro, still cannot do well on challenging 📉chart understanding questions, especially those that involve visual reasoning 👀!

Introducing ChartMuseum🖼️, testing visual reasoning with diverse real-world charts! ✍🏻Entirely human-written questions by 13 CS researchers 👀Emphasis on visual reasoning – hard to verbalize via text CoTs 📉Humans reach 93%, vs. 63% for Gemini-2.5-Pro & 38% for Qwen2.5-72B

Accepted at #NeurIPS2025 -- super proud of Yulu and Dheeraj for leading this! Be on the lookout for more "nuanced yes/no" work from them in the future 👀

Does vision training change how language is represented and used in meaningful ways?🤔 The answer is a nuanced yes! Comparing VLM-LM minimal pairs, we find that while the taxonomic organization of the lexicon is similar, VLMs are better at _deploying_ this knowledge. [1/9]

![yulu_qin's tweet image](https://pbs.twimg.com/media/GwZPQE5WUAUeZkZ.jpg)

You shall know a fascist asshole by the company he keeps... And also what he says.

Elon Musk, "There's got to be a change of government in Britain" "We don't have another four years or whenever your next election is, it's too long, something has got to be done" "There has got to be a dissolution of parliament and a new vote held" "You got to appeal to the…

Introducing ChartMuseum🖼️, testing visual reasoning with diverse real-world charts! ✍🏻Entirely human-written questions by 13 CS researchers 👀Emphasis on visual reasoning – hard to be verbalized via text CoTs 📉Humans reach 93% but 63% from Gemini-2.5-Pro & 38% from Qwen2.5-72B

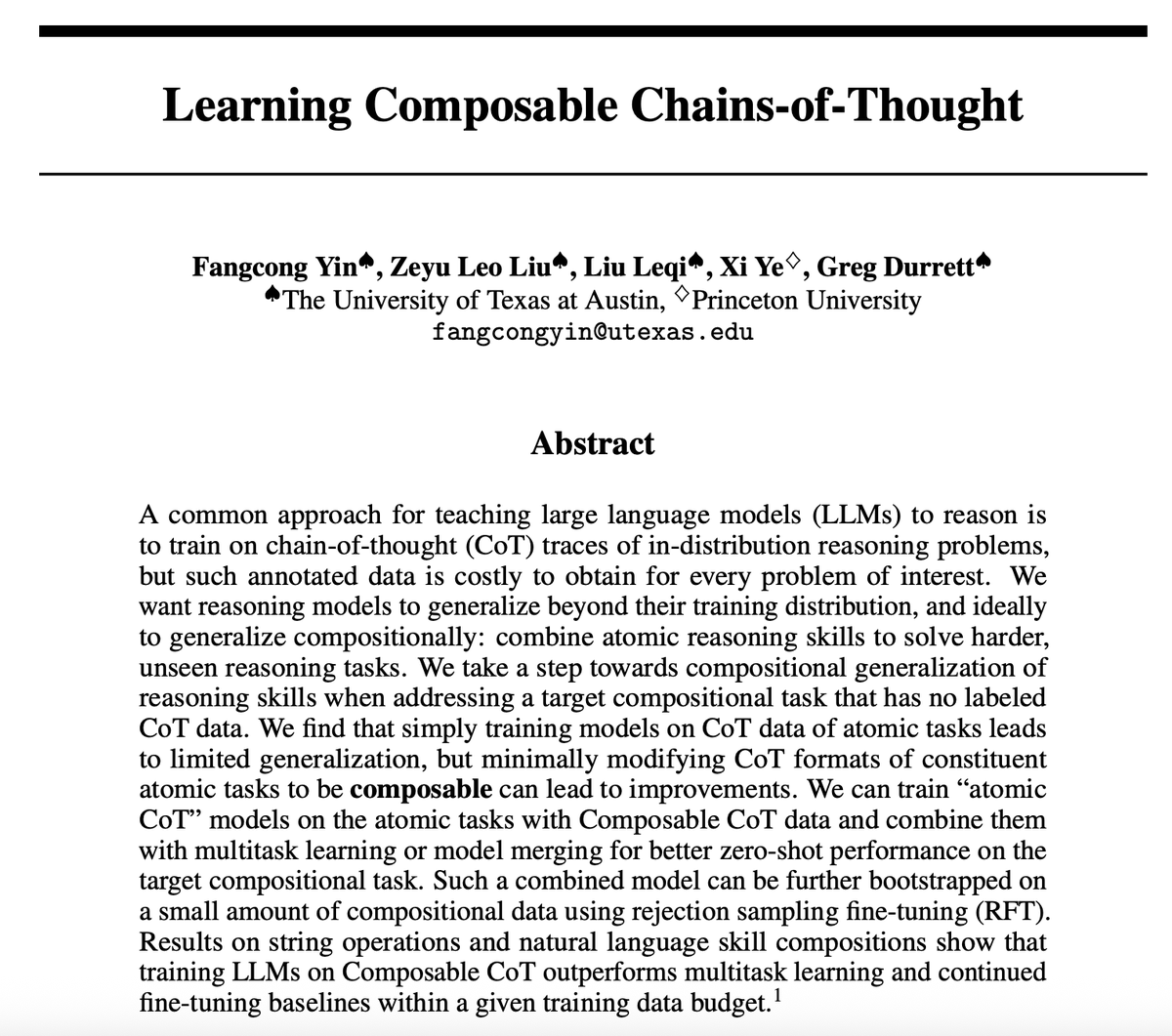

Solving complex problems with CoT requires combining different skills. We can do this by: 🧩Modifying the CoT data format to be “composable” with other skills 🔥Training models on each skill 📌Combining those models. This leads to better 0-shot reasoning on tasks involving skill composition!

Super thrilled that @kanishkamisra is going to join @UT_Linguistics as our newest computational linguistics faculty member -- looking forward to doing great research together! 🧑‍🎓Students: Kanishka is a GREAT mentor -- apply to be his PhD student in the upcoming cycle!!

News🗞️ I will return to UT Austin as an Assistant Professor of Linguistics this fall, and join its vibrant community of Computational Linguists, NLPers, and Cognitive Scientists!🤘 Excited to develop ideas about linguistic and conceptual generalization! Recruitment details soon

Have you thought about making your reasoning model stronger through *skill composition*? It's not as hard as you'd imagine! Check out our work!!!


The author's dilemma, circa 2021

The United States has had a tremendous advantage in science and technology because it has been the consensus gathering point: the best students worldwide want to study and work in the US because that is where the best students are studying and working. 1/

1/3 The US didn’t end up leading the world in computing by luck. It happened because it made long-term, public investments in basic research, especially through NSF. That’s what created the technology that today’s companies are built on.

Thrilled to announce that I will be joining @UTAustin @UTCompSci as an assistant professor in fall 2026! I will continue working on language models, data challenges, learning paradigms, & AI for innovation. Looking forward to teaming up with new students & colleagues! 🤠🤘

Revoking visas to Chinese students in the US is both cruel and stupid. Immigrants and investment in science made this country great. They are throwing it all away for no reason

Revoking visas to Chinese PhD students is economically shortsighted and inhumane. Most Chinese PhD students stay in the U.S. after graduation (first image, stats from 2022). They're staying and building technology in the U.S., not taking it to China. Immigrant students create…

We are repeating the mistakes of Germany in the 1930s when that country pushed out its scientific leadership. x.com/ChrisO_wiki/st…

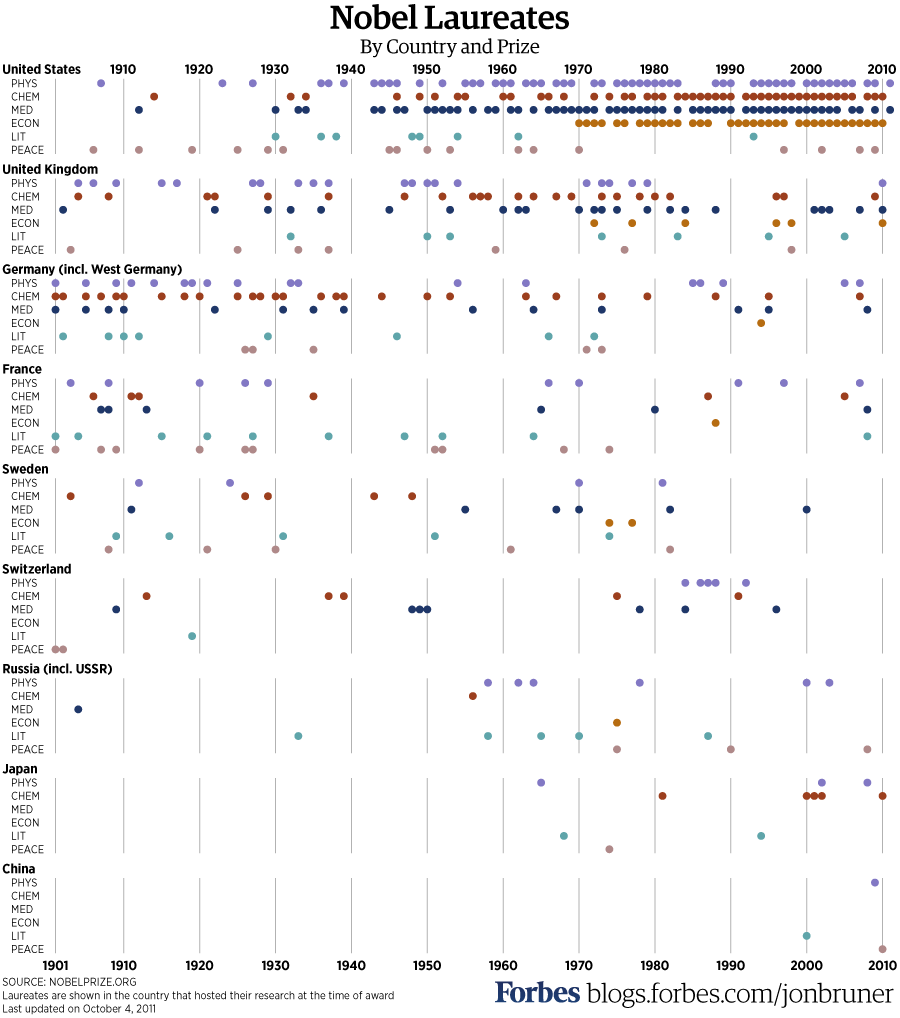

1/ This graph from @JonBruner tells an important story: America's current dominance in science only began after the mid-1930s, when persecuted scientists began fleeing universities in Germany and then elsewhere in occupied Europe.

ACADEMICS: it is time to get our heads out of our *sses. This is not the moment for personal ambition, why your latest sophisticated widget beats a rival's intricate theorem. The scientific franchise is under attack. It is time to defend it to the public. x.com/davidbau/statu…

Because of propaganda, Americans do not understand what Rubio is doing with visas. "I gave you a visa to come and study," they think. x.com/CitizenFreePre… NO, he has not!! Please help explain to X how Rubio has stopped *ALL* student visas, and how it is killing US science.


If you wanted to completely break the information ecosystem in a world with frictionless generation of false video and image content, all you would need to do is downweight external links, like the feed algorithm on X does.

United States trends

- 1. D’Angelo 14.5K posts

- 2. Happy Birthday Charlie 86.9K posts

- 3. #BornOfStarlightHeeseung 55.5K posts

- 4. Angie Stone N/A

- 5. #csm217 1,627 posts

- 6. #tuesdayvibe 5,103 posts

- 7. Alex Jones 19.5K posts

- 8. Sandy Hook 6,235 posts

- 9. Pentagon 84.9K posts

- 10. #NationalDessertDay N/A

- 11. Brown Sugar 1,722 posts

- 12. Drew Struzan N/A

- 13. #PortfolioDay 5,496 posts

- 14. Cheryl Hines 1,689 posts

- 15. George Floyd 6,013 posts

- 16. Good Tuesday 38.9K posts

- 17. Taco Tuesday 12.5K posts

- 18. Powell 20K posts

- 19. Monad 214K posts

- 20. Riggins N/A

You might like

- Tanya Goyal (@tanyaagoyal)

- Puyuan Peng (@PuyuanPeng)

- Jessy Li (@jessyjli)

- Prasann Singhal (@prasann_singhal)

- Greg Durrett (@gregd_nlp)

- Yasumasa Onoe (@yasumasa_onoe)

- Xi Ye (@xiye_nlp)

- Freda Shi (@fredahshi)

- Liyan Tang (@LiyanTang4)

- Shiyue Zhang (@byryuer)

- Fangyuan Xu (@brunchavecmoi)

- Anuj Diwan (@anuj_diwan)

- Isabel Papadimitriou (@isabelpapad)

- Eunsol Choi (@eunsolc)

- Hung-Ting Chen (@hungting_chen)
