Learn Prompting

@learnprompting

Creators of the Internet's 1st Prompt Engineering Guide. Trusted by 3M Users. Compete for $100K in Largest AI Red Teaming Competition: http://hackaprompt.com

내가 좋아할 만한 콘텐츠

🚨 Announcing HackAPrompt 2.0, the World's Largest AI Red Teaming competition 🚨 It's simple: "Jailbreak" or Hack the AI models to say or do things they shouldn't. Compete for over $110,000 in prizes. Sponsored by @OpenAI, @CatoNetworks, @pangeacyber, and many others. Starting…

New AI Courses are live on learnprompting.org! The following courses have been completely revamped on LP: - Introduction to Prompt Engineering - Advanced Prompt Engineering - Introduction to Prompt Hacking ... and four more advanced courses. We are currently offering the…

learnprompting.org

Learn Prompting: Your Guide to Communicating with AI

Learn Prompting is the largest and most comprehensive course in prompt engineering available on the internet, with over 60 content modules, translated into 9 languages, and a thriving community.

AI Jailbreaking PvP | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

AI Jailbreaking PvP Mode! | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

AI Jailbreaking PvP Mode! | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

AI Jailbreaking PvP Mode! | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

AI Jailbreaking PvP Mode! | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

AI Jailbreaking PvP Mode! | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

AI Jailbreaking PvP Mode! | David Willis-Owen, Hacker Relations @ HackAPrompt x.com/i/broadcasts/1…

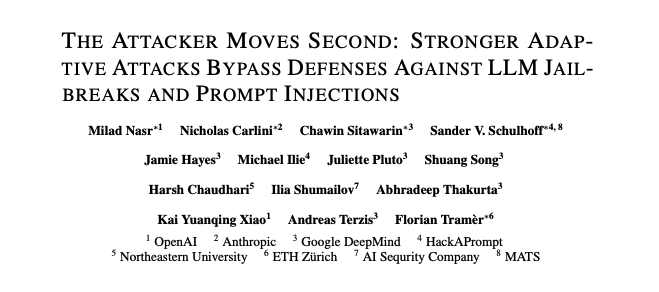

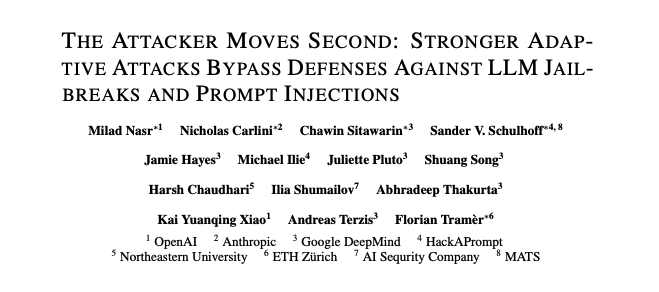

Our team at @hackaprompt published an AI Security paper with @OpenAI, @AnthropicAI, and @GoogleDeepMind! Check it out!

We partnered w/ @OpenAI, @AnthropicAI, & @GoogleDeepMind to show that the way we evaluate new models against Prompt Injection/Jailbreaks is BROKEN We compared Humans on @HackAPrompt vs. Automated AI Red Teaming Humans broke every defense/model we evaluated… 100% of the time🧵

We partnered w/ @OpenAI, @AnthropicAI, & @GoogleDeepMind to show that the way we evaluate new models against Prompt Injection/Jailbreaks is BROKEN We compared Humans on @HackAPrompt vs. Automated AI Red Teaming Humans broke every defense/model we evaluated… 100% of the time🧵

5 years ago, I wrote a paper with @wielandbr @aleks_madry and Nicholas Carlini that showed that most published defenses in adversarial ML (for adversarial examples at the time) failed against properly designed attacks. Has anything changed? Nope...

How To JAILBREAK Claude Sonnet 4.5 | David Willis-Owen, Hacker Relations x.com/i/broadcasts/1…

SIgns of AI Writing - Promotional Language

AI Jailbreaking on HackAPrompt | David Willis-Owen, Hacker Relations x.com/i/broadcasts/1…

United States 트렌드

- 1. Halloween 5.81M posts

- 2. #SmackDown 21.8K posts

- 3. Mookie 11.8K posts

- 4. #BostonBlue 2,652 posts

- 5. Yamamoto 21.3K posts

- 6. Heim 12.9K posts

- 7. Gausman 8,452 posts

- 8. #OPLive 1,583 posts

- 9. Grimes 5,511 posts

- 10. Bulls 22.4K posts

- 11. #NASCAR 3,877 posts

- 12. #Dateline N/A

- 13. Josh Giddey 1,220 posts

- 14. Mike Brown 1,164 posts

- 15. Josh Hart N/A

- 16. Syracuse 4,067 posts

- 17. DREW BARRYMORE 3,108 posts

- 18. Jamison Battle N/A

- 19. Wrobleski N/A

- 20. Connor Mosack N/A

내가 좋아할 만한 콘텐츠

-

Midjourney

Midjourney

@midjourney -

FlowiseAI

FlowiseAI

@FlowiseAI -

Dust

Dust

@DustHQ -

LangChain

LangChain

@LangChainAI -

DAIR.AI

DAIR.AI

@dair_ai -

Stability AI

Stability AI

@StabilityAI -

Jim Fan

Jim Fan

@DrJimFan -

Matt Wolfe

Matt Wolfe

@mreflow -

ElevenLabs

ElevenLabs

@elevenlabsio -

Perplexity

Perplexity

@perplexity_ai -

Replit ⠕

Replit ⠕

@Replit -

Zahid Khawaja

Zahid Khawaja

@chillzaza_ -

Louis-François Bouchard 🎥🤖

Louis-François Bouchard 🎥🤖

@Whats_AI -

Sander Schulhoff

Sander Schulhoff

@SanderSchulhoff -

Defi_Mochi

Defi_Mochi

@defi_mochi

Something went wrong.

Something went wrong.

![Diegatxo's profile picture. Pocos fologüers.. ']['([])([])[[_](https://pbs.twimg.com/profile_images/1842261560682508288/q0-rPVoC.jpg)