Meng Li

@limengnlp

PhD student @unipotsdam, supervised by @davidschlangen. Working on NLP, ML and CogSci. Prev @LstSaar. Former NLP engineer.

This is a zero-sum war for the U.S. The U.S. made a mistake when they let China freely do what they like, from platform and AI structure to chips and HBM. Congratulations, they lost 60% of their customers.

Hello, community! We’re Tongyi Lab — the AI research institute under Alibaba Group, and the team behind Qwen, Wan, Tongyi Fun, and a growing ecosystem of models and frameworks loved by millions of developers worldwide. From this week forward, we will be sharing the latest updates…

Ilya Sutskever: bald
Demis Hassabis: bald
Noam Shazeer: bald
Greg Brockman: bald
forget AGI. forget curing cancer. cure baldness now. My hairline is on gradient descent.

Day 1/5 of #MiniMaxWeek: We’re open-sourcing MiniMax-M1, our latest LLM — setting new standards in long-context reasoning. - World’s longest context window: 1M-token input, 80k-token output - State-of-the-art agentic use among open-source models - RL at unmatched efficiency:…

⏰ We introduce Reinforcement Pre-Training (RPT🍒), reframing next-token prediction as a reasoning task using RLVR ✅ General-purpose reasoning 📑 Scalable RL on web corpus 📈 Stronger pre-training + RLVR results 🚀 Allows allocating more compute to specific tokens

In Hinton's NN class, there is an interesting tip to get a geometric view of high dimensional space. I think authors of interpretability papers did the opposite; they stare at LLMs and pray in their minds that it's linear and interpretable.

I just read this WSJ article on why Europe's tech scene is so much smaller than the US's and China's. I'm afraid that, like most articles on this topic, it largely misses the mark. Which in itself illustrates a key reason why Europe is lagging behind: when you fail to…

📢 I am on the JOB market this year 📢 I am looking for both faculty and research scientist positions. My research makes AI agents useful and safe for humans. I enable them to effectively convey uncertainty, ask for help, learn from human feedback, and pursue goals that benefit…

Excited to be at #NAACL2025! Let’s meet (and grab a Char's Zaku sticker 🚀). 📅 May 4, 11–12, RepL4NLP: "Amuro&Char: Analyzing the Relationship between Pre-Training and Fine-Tuning" 📅 May 2, 12 PM, Ballroom B: "SHADES: Towards a Multilingual Assessment of Stereotypes in LLMs"

Every ChatGPT query costs more energy than the entire life of a fruit fly.

AI phone agent realizes it is talking to a parrot

🚀 Day 0: Warming up for #OpenSourceWeek! We're a tiny team @deepseek_ai exploring AGI. Starting next week, we'll be open-sourcing 5 repos, sharing our small but sincere progress with full transparency. These humble building blocks in our online service have been documented,…

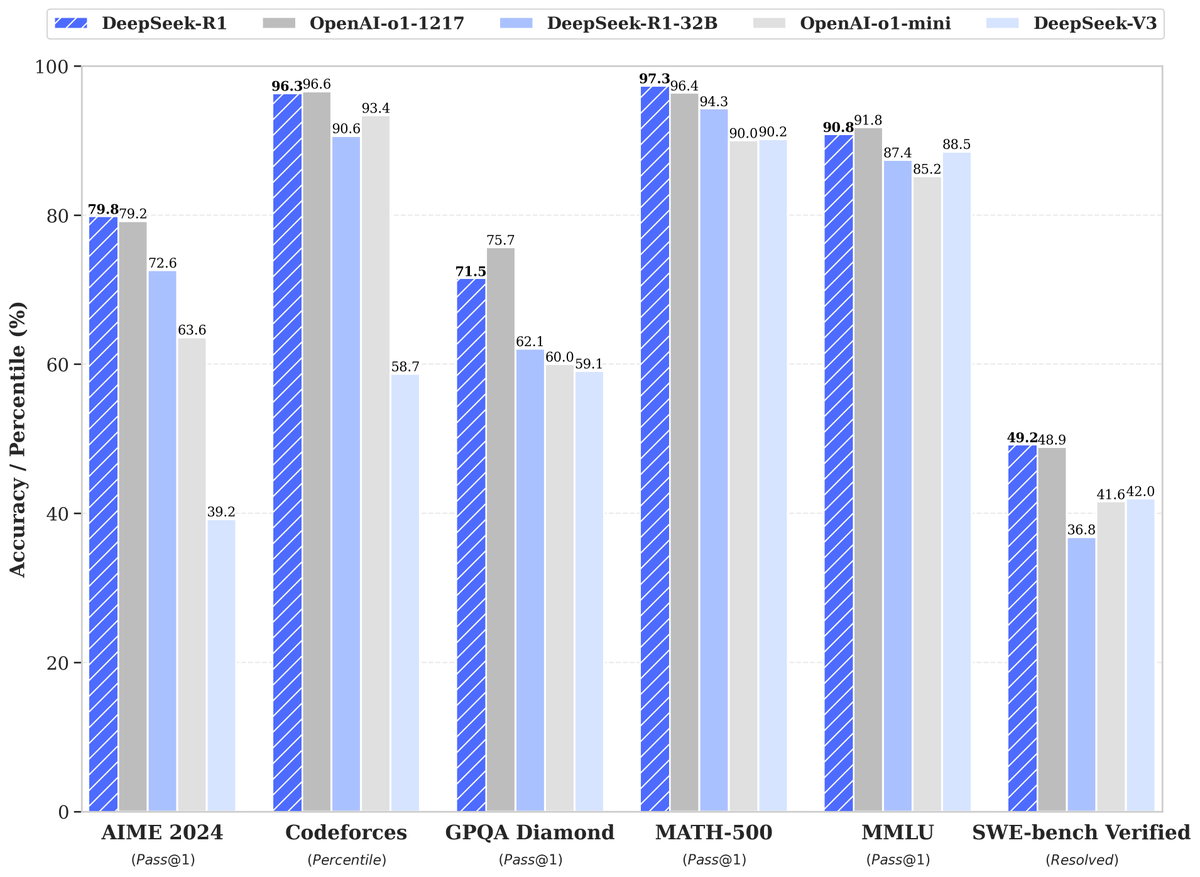

🚀 DeepSeek-R1 is here! ⚡ Performance on par with OpenAI-o1 📖 Fully open-source model & technical report 🏆 MIT licensed: Distill & commercialize freely! 🌐 Website & API are live now! Try DeepThink at chat.deepseek.com today! 🐋 1/n

The #NobelPrizeinPhysics2024 for Hopfield & Hinton rewards plagiarism and incorrect attribution in computer science. It's mostly about Amari's "Hopfield network" and the "Boltzmann Machine." 1. The Lenz-Ising recurrent architecture with neuron-like elements was published in…

My Bet: Strawberry is algorithm distillation/procedural cloning. Everyone right now is coming up with ways to distill System 2 into System 1, but that will always be limited. We need to train the model to run the algorithms, not just outputs (and post-train with RL of course).

Pretty fun paper, finetuning llama to produce blender code for synthetic renderings

Good Scientific American piece on the idea of AGI. I think, and argue here, that it's incoherent: there is no general intelligence, natural or artificial, only different cognitive abilities that often trade off. scientificamerican.com/article/what-d…

cognitive scientist: so the lesson of Clever Hans is we need..
engineer: more horses
cognitive scientist:
engineer: stacked horses. parallel horses. pooled horses. horse dropout. RL with horses in the loop.
cognitive scientist:
engineer: Hans is All You Need

