Chris 🇨🇦 @ NeurIPS

@llm_wizard

Open Source Model Lover @ NVIDIA AI Views my own.


Iceland ain’t got shit on Santa Clara

Banger

Too much. If it's not an Iced Capp or a French Vanilla, then you are just wasting your money, even if it's like 30 cents.

All this time?

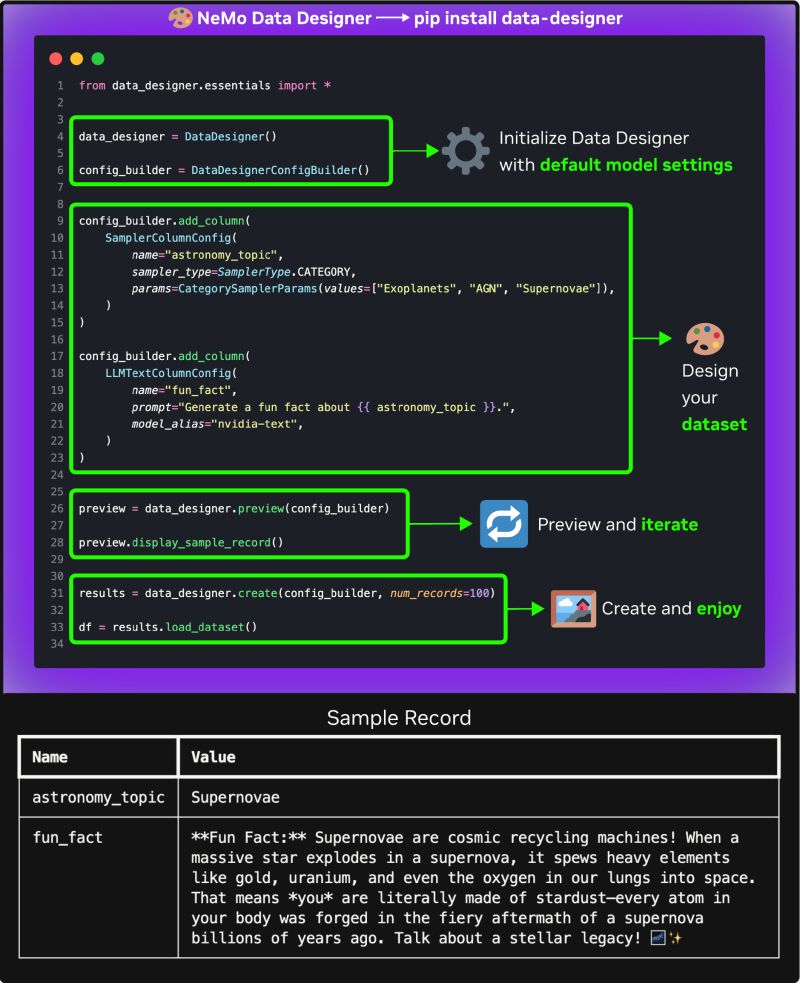

Seriously easy to use. Like it's good, sure, but it's actually good DevX. 10/10, Eric and team cooked on this one.

We’ve been working on Data Designer since Gretel, it’s what we’ve used to accelerate our own work on high-quality synthetic data. Now at Nvidia, we have the opportunity to do something we could only dream of before: open sourcing our work to accelerate everyone 🧵

Anthropic has apparently entered the soulsmaxxing phase, I'm beyond stoked. lesswrong.com/posts/vpNG99Gh…

APACHE 2.0

Introducing the Mistral 3 family of models: Frontier intelligence at all sizes. Apache 2.0. Details in 🧵

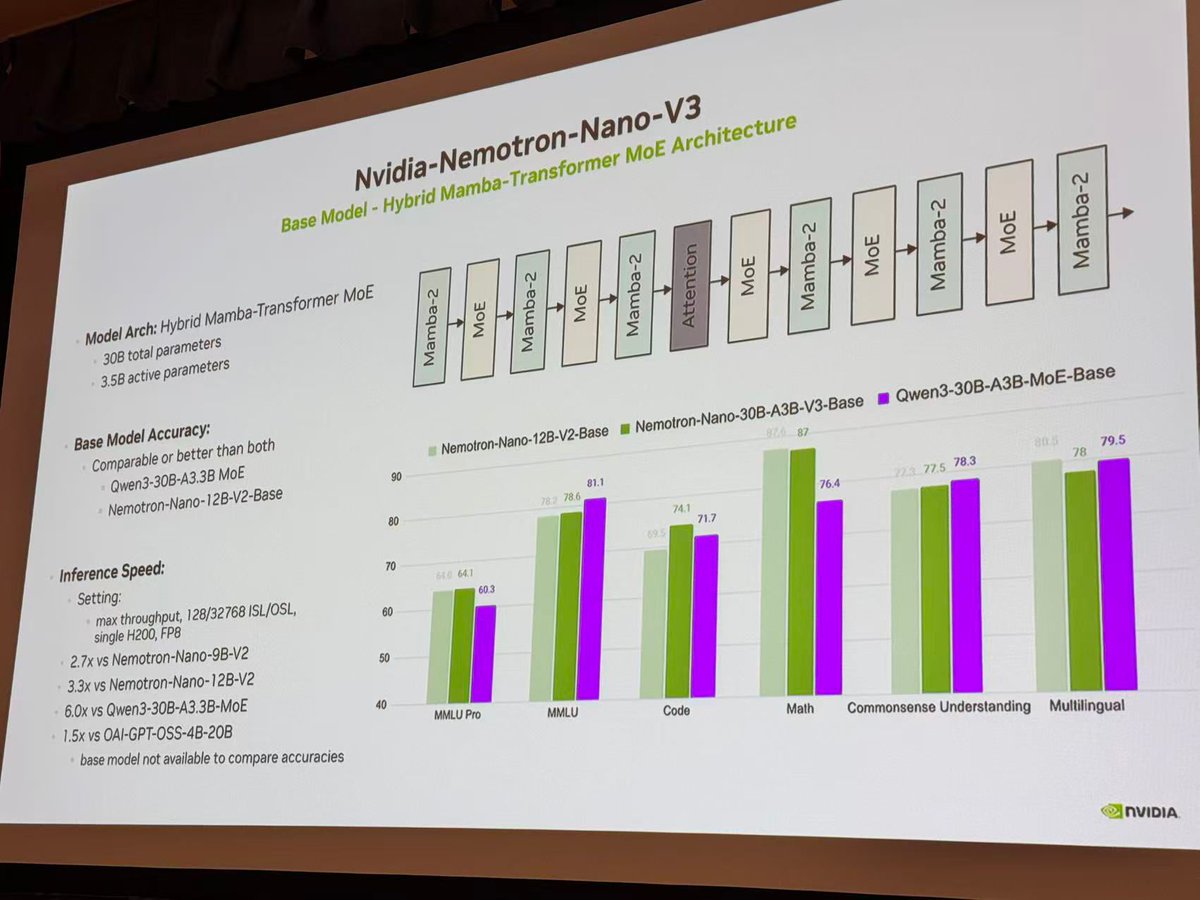

Hmmmm. What's this? A brand new Nemotron?! *Sooooon*

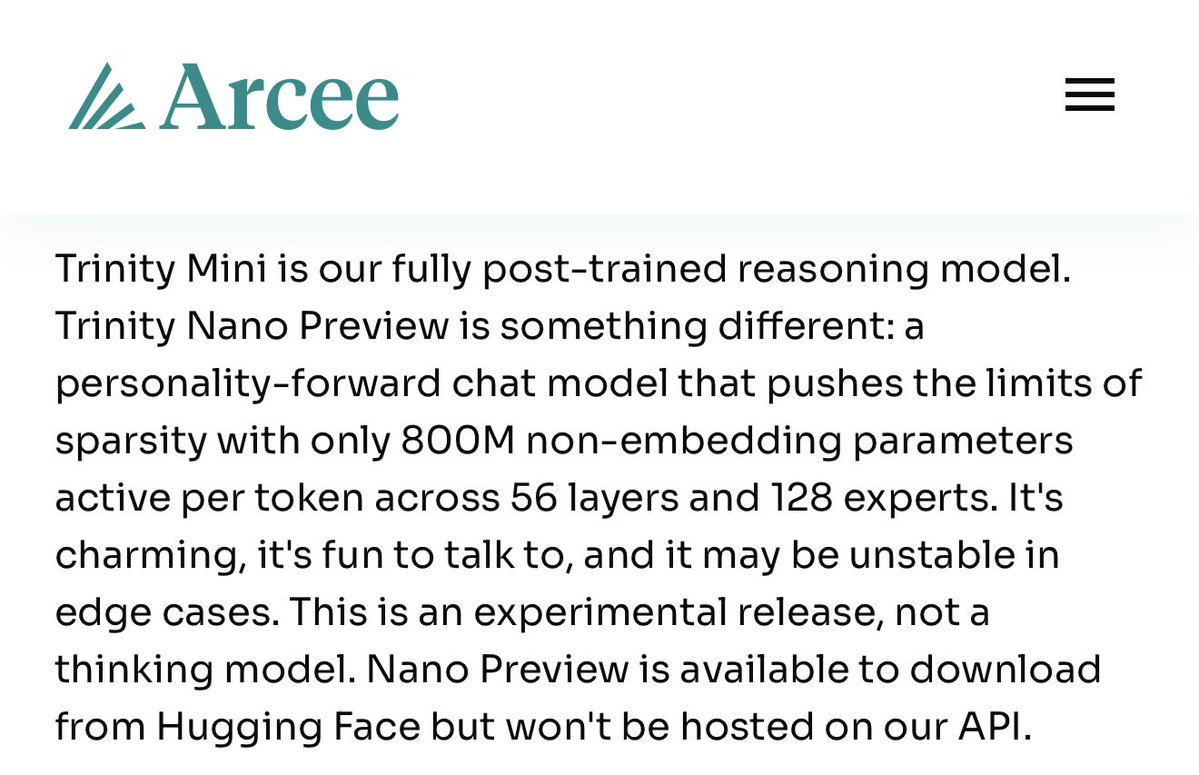

Read the blog. It's probably one of the most information-dense texts I've read in a long time. It covers everything from motivation to arch and infra, but doesn't sugarcoat with selective benches. Arcee is a serious open model lab, can't wait to play with the models.

Today, we are introducing Trinity, the start of an open-weight MoE family that businesses and developers can own. Trinity-Mini (26B-A3B) Trinity-Nano-Preview (6B-A1B) Available Today on Huggingface.

You absolutely love to see it. 10/10 to the whole @arcee_ai team!!!

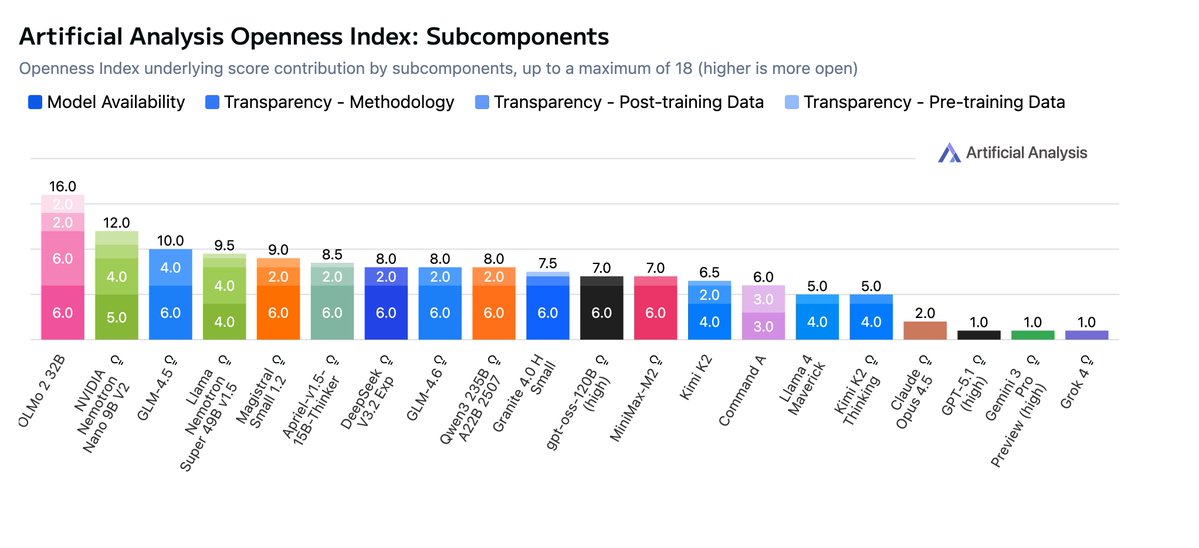

Love this! This plot in particular feels like a solid representation of ability vs. openness. My favorite part is Olmo 3 mogging on Llama 4 Maverick in every plot 🤭.

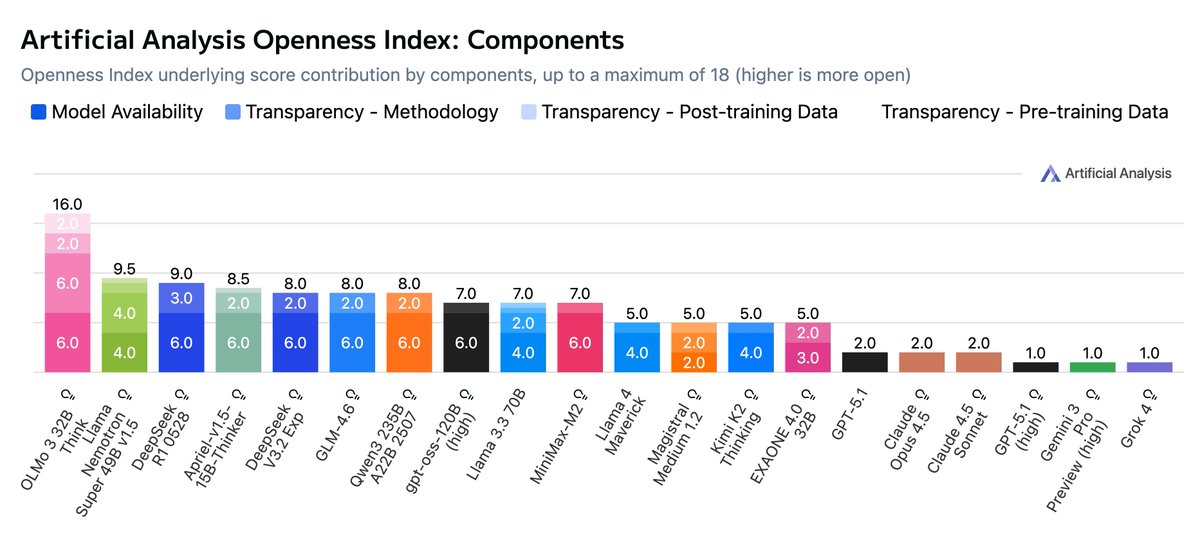

Introducing the Artificial Analysis Openness Index: a standardized and independently assessed measure of AI model openness across availability and transparency Openness is not just the ability to download model weights. It is also licensing, data and methodology - we developed a…

💖🤝💚

As a final note, some people will be surprised Nvidia are second, but they shouldn't be. Nvidia has stepped up a lot on openness this year.

You honestly love to see it.

Artificial Analysis' Openness Index is now live 🎉 Huge congrats to @allen_ai 👏 OLMo 3 leads the pack, demonstrating top-tier openness for model availability and transparency across data and methodology. 🔗 nvda.ws/3M91zwm

San Diego: ACHIEVED. Palm Trees: SIGHTED. Cactuses: EVERYWHERE.

Really sad update: Flight landed late, not enough time to obtain Philly Cheesesteak. Morale: Low.
