Lorenzo Basile

@lorebasile

Postdoc @AreaSciencePark

🤖Seminar Series Next week, Giorgos Nikolaou and Tommaso Mencattini (@EPFL) will present "Language Models are Injective and Hence Invertible". @GiorgosNik02 @tommaso_mncttn 📅Nov 27, 11 CET (Online) 👉Register to attend: bit.ly/4ieKRYq

Excited to share that 2/2 papers from our Lab (LADE) were accepted to #NeurIPS2025 (one spotlight 🎉) Great work from all the students and collaborators involved! @AreaSciencePark @aleserra1998 @lorebasile @francescortu @lucrevaleriani @DiegoDoimo @ansuin @FrancescoLocat8

Thrilled to announce that our paper is accepted at #NeurIPS 2025!! See you in San Diego! 🇺🇸

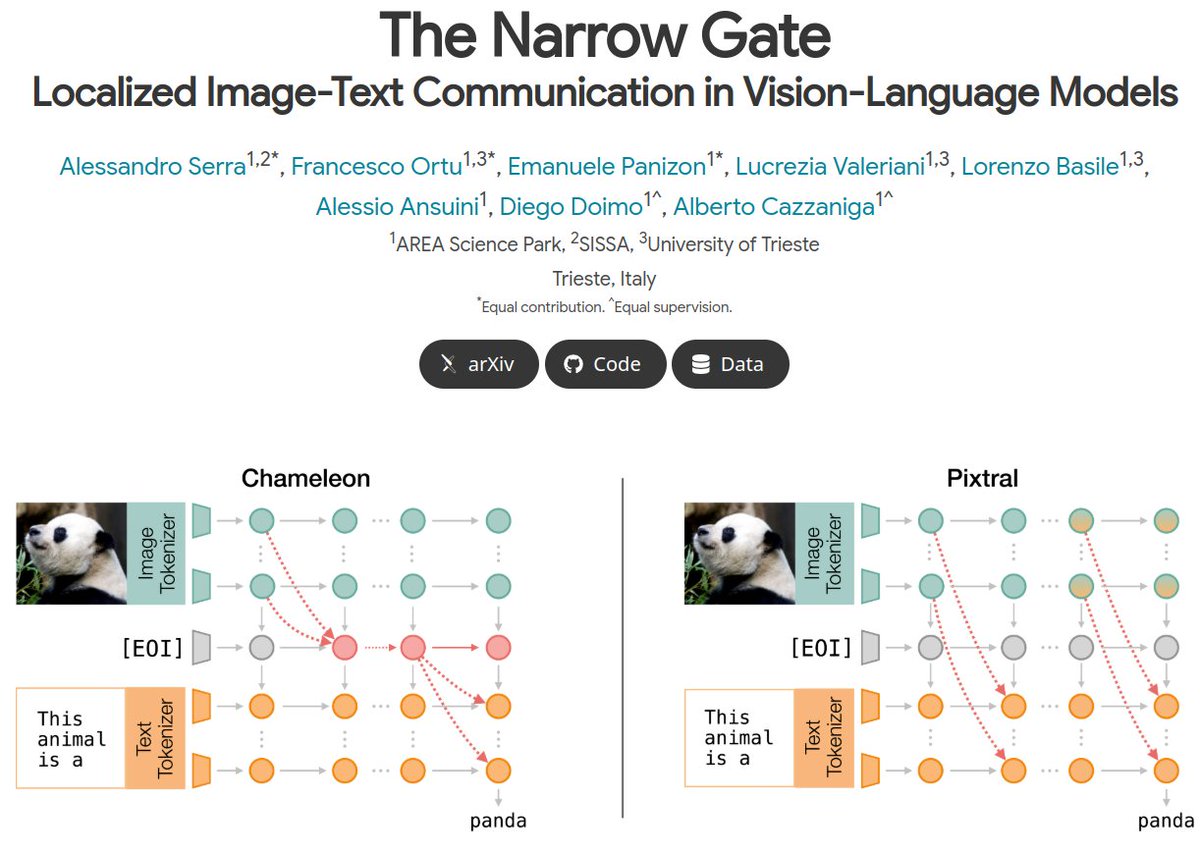

🚨🚨 Excited to share our latest paper, now on @arxiv! 🖼️ We studied how unified VLMs, trained to generate both text and images (e.g., @MetaAI's Chameleon), exchange information between modalities, comparing them to standard VLMs. Deep dive:👇

I just landed in Vancouver to present the findings of our new work at @NeurIPSConf! Few-shot learning and fine-tuning change the hidden layers of LLMs in dramatically different ways, even when they perform equally well on multiple-choice question-answering tasks. 🧵1/6

✨ Meet #ResiDual, a novel perspective on the alignment of multimodal latent spaces! Think of it as a spectral "panning for gold" along the residual stream. It improves text-image alignment by simply amplifying task-related directions! 🌌🔍 arxiv.org/abs/2411.00246 [1/6]

