Manikandan Ananth

@mananth23

Opinions are my own. I like to build high performance computer systems

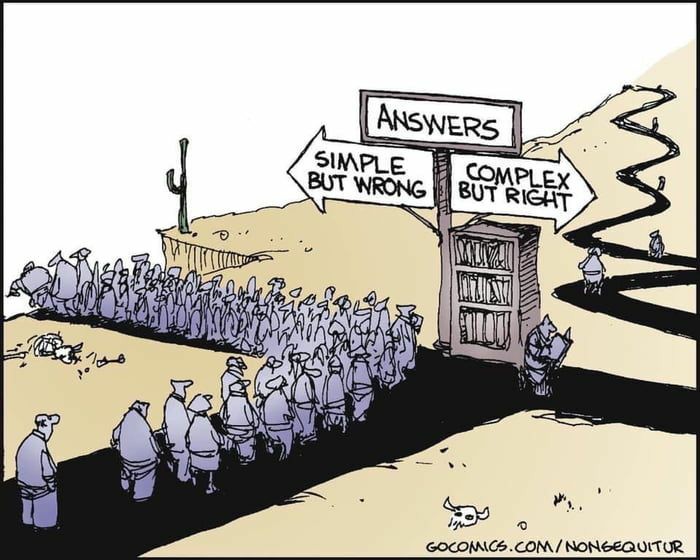

Humans reason in two modes: precise calculation (e.g., 17 cents change owed) and fuzzy intuition (e.g., 6-8 apples for $5). Traditional computers excel at the first. For AGI, we need both: fewer hallucinations and better ballpark reasoning.

Llama 4 is here and it’s coming to Cerebras! Starting next week, we will be serving Llama 4 on Cerebras Inference at instant speed. Thank you to the @AIatMeta team for your partnership. Be the first to get access here: cerebras.ai/build-with-us

Need best 7B-70B models? Use LLaMA 2. Need best 3B model? Use BTLM. That's it. That's the tweet.

📣 Today we are announcing Condor Galaxy-1: a 4 exaflop AI supercomputer built in partnership with @G42ai. Powered by 64 Cerebras CS-2 systems, 54M cores, and 82TB of memory – it's the largest AI supercomputer we've ever built. But that's not all: CG-1 is just the start..

Using CUTLASS int4b GEMM to train Transformer lnkd.in/gKFucNii

🎉 Exciting news! Today we are releasing Cerebras-GPT, a family of 7 GPT models from 111M to 13B parameters trained using the Chinchilla formula. These are the highest accuracy models for a compute budget and are available today open-source! (1/5) Press: businesswire.com/news/home/2023…

The race has publicly begun! - GPT still blows everyone else out of the water wrt coding abilities, with Claude coming a close second. - Bard can't do coding yet (according to their FAQ) - LLaMa / Alpaca, etc. is having a huge community-driven moment

(1/3) Our sparsity research is now publicly available on arxiv (arxiv.org/abs/2303.10464) and has been accepted into the ICLR 2023 Sparsity in Neural Networks (@sparsenn) workshop!

With @JasperAI, we strive to dramatically advance AI work, including training GPT networks to fit AI outputs to all levels of end-user complexity and granularity. Read more here: hubs.li/Q01tMGsK0

Today we announced the Cerebras AI Model Studio, a pay-by-the-model computing service that makes training large Transformer models fast, easy, and affordable. Learn more here: • Press release – hubs.li/Q01tvJ4p0 • Webpage - hubs.li/Q01tvMpS0 #cloud #ai #ml

UPDATE: In response to high demand for employment-based visas, the U.S. Mission to India recently released over 100,000 appointments for H&L workers and their families.

Drinks, tacos, and the world's most powerful #AI accelerator! Join our CEO @andrewdfeldman and @colovore on September 12! RSVP here: hubs.li/Q01lTCjw0 #artificialintelligence #deeplearning #AIHardwaresummit

Are you challenged by the size and scope of training massive #NLP models with legacy compute? Join our VP of Product Andy Hock at the @aiandsystems #AIHW Summit to learn how to simplify and accelerate your #AI: hubs.li/Q01lHY040

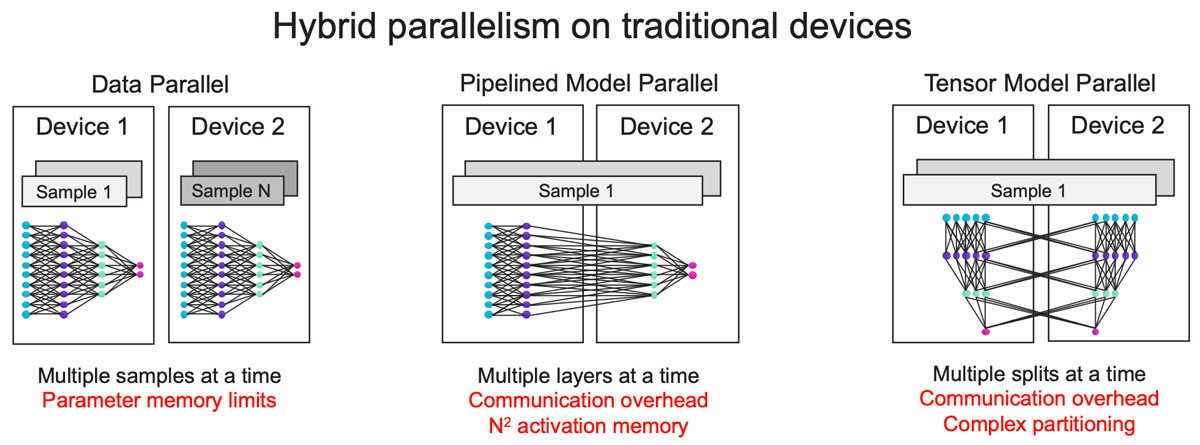

Clustering solutions already exist today. So why is it still so hard for #AI to scale? hubs.li/Q01lcDrW0 #scaleout #deeplearning

I was in Europe. Lost 10 pounds in under 2 weeks. Came back to the US. Inflamed and stomach is in pain. What the hell is in our food?

If you are fortunate enough to work in the tech industry, you may well not have to work very hard to do well in your career. Not true for everyone, and not true for lots of other industries, but plenty of evidence all over Twitter for this.

What does this AI know that we don't?

Hi @SingaporeAir, I had booked a ticket on your flight from MAA to SFO for Dec 31st 2021. The ticket was cancelled from your end on Dec 3rd 2021. I filled out the refund form 2 months ago. Where is my refund? Worst experience ever!!

My mind was randomly re-blown by this realization a few days ago 🤯. Clocks can go to ~5GHz (so light travels ~6cm/clock) and chips are around ~3cm on a side (4.2cm diagonal), so if you like global clocks we're actually running chips very near fundamental limits of communication.

A typical ~2GHz CPU will clock pulse every 0.5ns. Since the speed of light is ~0.3m/ns, light only traverses ~15cm (half a foot) in each pulse :|
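The arithmetic in the two posts above can be checked with a minimal sketch. It assumes light in vacuum and a square die; the function names are illustrative, not from the posts. Real on-chip signals propagate slower than light in vacuum, so the practical margin is tighter than these numbers suggest.

```python
# Back-of-the-envelope check: how far does light travel in one clock period?
# Assumes vacuum propagation; function names here are hypothetical helpers.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by SI definition


def light_distance_per_cycle_cm(clock_hz: float) -> float:
    """Distance light covers in vacuum during one clock period, in cm."""
    period_s = 1.0 / clock_hz
    return SPEED_OF_LIGHT_M_PER_S * period_s * 100.0


def square_diagonal_cm(side_cm: float) -> float:
    """Diagonal of a square die with the given side length, in cm."""
    return side_cm * 2 ** 0.5


for ghz in (2, 5):
    distance = light_distance_per_cycle_cm(ghz * 1e9)
    print(f"{ghz} GHz: {distance:.1f} cm per cycle")

print(f"3 cm die diagonal: {square_diagonal_cm(3.0):.1f} cm")
```

At 5 GHz the per-cycle distance is only modestly larger than the diagonal of a 3 cm die, which is the point both posts are making.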
