CMU Robotics Institute

@CMU_Robotics

Pioneering the future of robotics since 1979. We’re transforming industries and everyday life through cutting-edge innovation and world-class education.


@CMU_Robotics Institute alum Lalitesh Katragadda has spent his career using technology to make a world that works for everyone. On Thursday, Oct. 30, he'll share his experience with the CMU community during the Bruce Nelson Memorial Lecture. cs.cmu.edu/news/2025/infi…

We take Halloween very seriously here at the Robotics Institute 🎃🦇 #spooky Check this awesome demo out! 👇

Spot dressed up for Halloween!🎃 It's on a mission for its favorite 'candy'! 🔋 But two 'ghosts' were blocking the path… A fun demo of our new paper on how robots can intelligently 'make way' on cluttered stairs! (1/4) @CMU_Robotics

SCS faculty members Carolyn Rosé (@LTIatCMU) and Jun-Yan Zhu (@CMU_Robotics) recently received endowed chairs to recognize their research contributions and support their future work. cs.cmu.edu/news/2025/rose…

Congrats to Min Liu, Deepak Pathak, and Ananye Agarwal for being Best Paper Award finalists at #CoRL2025 (1 of 8 finalists! 🔥) Check out their work, "LocoFormer: Generalist Locomotion via Long-context Adaptation": arxiv.org/abs/2509.23745 @minliu01 @anag004 @pathak2206 #TartanProud

IROS Best Student Paper Award for Neural MP: A Generalist Neural Motion Planner! Congratulations to @mihdalal & @Jiahui_Yang6709 for leading this work at @SCSatCMU with @mendonca_rl, Youssef Khaky, @pathak2206. Check out our paper/code: mihdalal.github.io/neuralmotionpl… Conventional…

#ICCV2025 best paper award & best paper honorable mention! RI researchers collaborated with @CSDatCMU and @CMU_ECE to bring some incredible work to the conference this year👏🧠🔥 Check out the SCS news post on BrickGPT, which brought home best paper! bit.ly/4hqz7lc

Ready to meet the next-gen of American robotics innovators? 🤖 Join experts from @CarnegieMellon and #NVIDIAInception startups @fieldai_ , @genrobotics_ai, @Saronic, and @shieldaitech to learn how their work, from simulation to edge deployment, is driving innovation, growth, and…

Researchers from the Robotics Institute + Meta Reality Labs have built a model that reconstructs images, camera data or depth scans into 3D maps within a unified system! MapAnything captures both small details and large spaces with high precision 🌎🗺️📌 bit.ly/4haR7Qm

🤖What if a robot could understand hair dynamics well enough to style your hair, just like your favorite barber💈? 🔥Excited to announce DYMO-Hair, a model-based robot hair styling system powered by a generalizable 3D hair dynamics model. 🚀A new step toward robots that can…

✈️🤖 What if an embodiment-agnostic visuomotor policy could adapt to diverse robot embodiments at inference with no fine-tuning? Introducing UMI-on-Air, a framework that brings embodiment-aware guidance to diffusion policies for precise, contact-rich aerial manipulation.

A new tool that better tracks pollution worldwide has CMU research at its core‼️ Climate TRACE, co-founded by former Vice President Al Gore, released an air pollution monitoring tool that relies on visualizations and models developed by CMU's CREATE Lab cs.cmu.edu/news/2025/clim…

We introduce RAVEN, a 3D open-set memory-based behavior tree framework for aerial outdoor semantic navigation. RAVEN not only navigates reliably toward detected targets, but also performs long-range semantic reasoning and LVLM-guided informed search.

Dexterous robots are moving from CMU labs to real-world industries with support from the National Science Foundation @NSF! For Altus Dexterity, this means moving beyond prototypes to pilot programs + partnerships where dexterous robots can shine. ☀️🤖 bit.ly/4gP9H09

Just a reminder, applications for our 16-week, full-time coding bootcamp close on September 24 at 11:59 p.m. ET! Get your application in today! bootcamps.cs.cmu.edu/coding-bootcam…

‼️CMU Vision-Language-Autonomy Challenge update 3: The third video of the team's robot "finding the microwave near the refrigerator" has been released. After checking out a few rooms, the system spotted the microwave! 👏 Check it out: bit.ly/4nsofFu

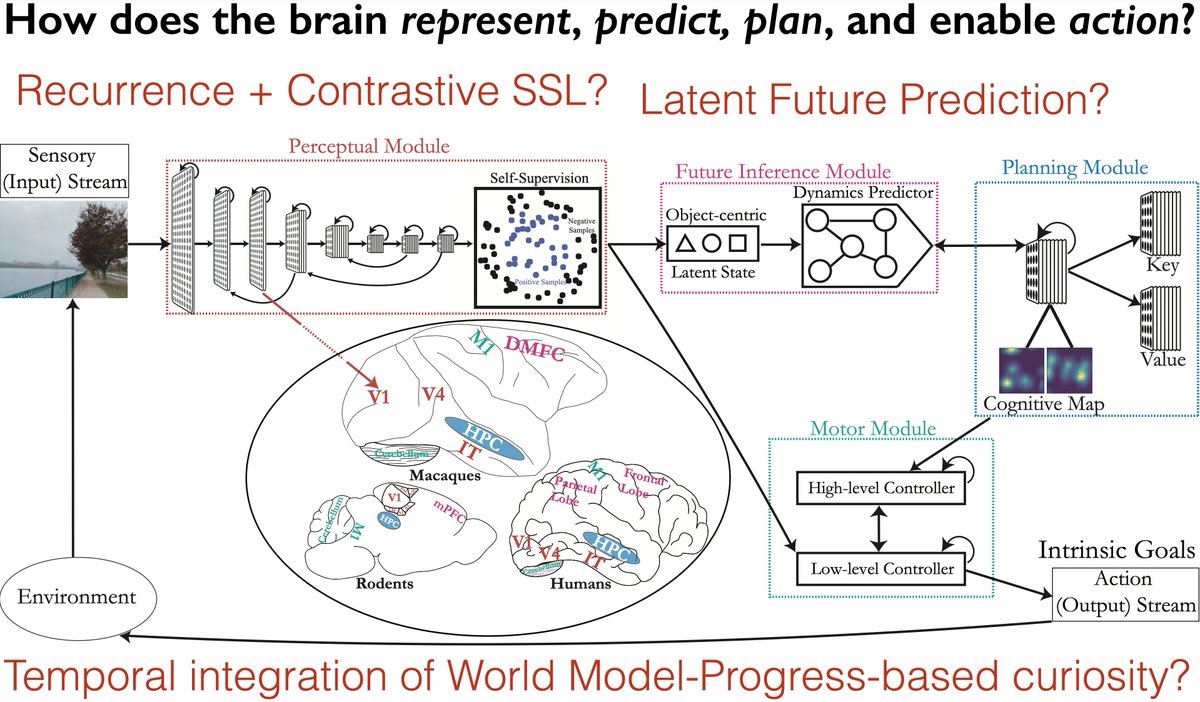

Recent discussions (e.g. @RichardSSutton on @dwarkesh_sp’s podcast) have highlighted why animals are a better target for intelligence — and why scaling alone isn’t enough. In my recent @CMU_Robotics seminar talk, “Using Embodied Agents to Reverse-Engineer Natural Intelligence”,…

Does your imitation-based robot policy fail out of distribution, even when the demos should cover the needed skills? Check out Adapting by Analogy (ABA), our test-time method for steering visuomotor policies using functionally corresponding training observations. #CoRL2025 (1/9)

Robots in the home may one day help with tasks like sorting our dishes & organizing our shelves 👀🤖 But to do so, they need to plan over long sequences, figuring out how to move objects and where to put them. Check out SPOT: a system that can rearrange objects into any layout!

🚨Introducing SPOT: Search over Point Cloud Object Transformations. SPOT is a combined learning-and-planning approach that searches in the space of object transformations. Website: planning-from-point-clouds.github.io Paper: arxiv.org/abs/2509.04645 Code: github.com/kallol-saha/SP…

Big congratulations to Assistant Professor @andrea_bajcsy for earning the DARPA YFA award! 👏💡💪 Read about her project, “Unifying Uncertainty and Safety for Embodied AI Agents,” on our news site: ri.cmu.edu/bajcsy-earns-d… #TartanProud #CMUrobotics

I'm very honored to receive the DARPA Young Faculty Award (YFA) this year! 🎉 This award will support my lab's work on unifying uncertainty + safety for embodied AI and foundation-model-powered robots 🤖✨

🚀CMU VLA Update 2: The team has released another video showing their system "finding the blue trashcan in the classroom" 👀🗑️ Stay tuned for further updates! youtube.com/watch?v=QuIEHK…


You might like

- David Held (@davheld)
- IEEE RAS (@ieeeras)
- IEEE ICRA (@ieee_ras_icra)
- CMU School of Computer Science (@SCSatCMU)
- Robot Operating System (ROS) (@rosorg)
- AirLab (@AirLabCMU)
- Conference on Robot Learning (@corl_conf)
- Davide Scaramuzza (@davsca1)
- Clearpath Robotics by Rockwell Automation (@clearpathrobots)
- WeeklyRobotics (@WeeklyRobotics)
- Machine Learning Dept. at Carnegie Mellon (@mldcmu)
- AutonomousRobotsLab (@arlteam)
- Learning Systems and Robotics Lab (is hiring!) (@learnsyslab)
- Luca Carlone (@lucacarlone1)
- Robotic Systems Lab (@leggedrobotics)
