Nathan Barry

@nathanbarrydev

Man in the arena allocator, prev ML Intern @Apple, CS + Math @UTAustin, @zfellows

You might like

I implemented gpt-2 using graphics shaders

vibecoded a visualizer for latent representations of tokens as they flow through gpt-2 (runs entirely in browser on webgl) visualizing high dim space is hard so rather than just PCAing 768 -> 2, it renders similarity as graph connectedness at an adjustable cosine threshold

xAI should fork VS Code and drop a cursor competitor. They could name it Xcode

I’m surprised that no app uses face verification (like what dating apps have) to combat bots. Deleting an account is terrible for false positives, but having to do face verification or be locked out of your account is just an inconvenience. Would solve most of the issue imo

My experiments with fine-tuning RoBERTa to do language diffusion are at an end. Surprisingly cohesive with such a minimum implementation but not as good as gpt-2. A more thorough implementation (and better training) should be able to reach parity on quality and speed though.

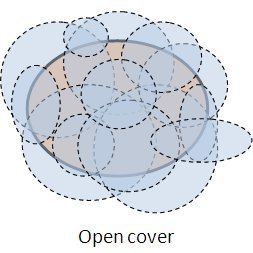

Just read how Fourier transforms “work” because sines and cosines form an orthogonal basis for a specific Hilbert space of functions. Math is beautiful but it always feels like a bottomless pit of knowledge where there’s always an infinite amount of things you don’t know

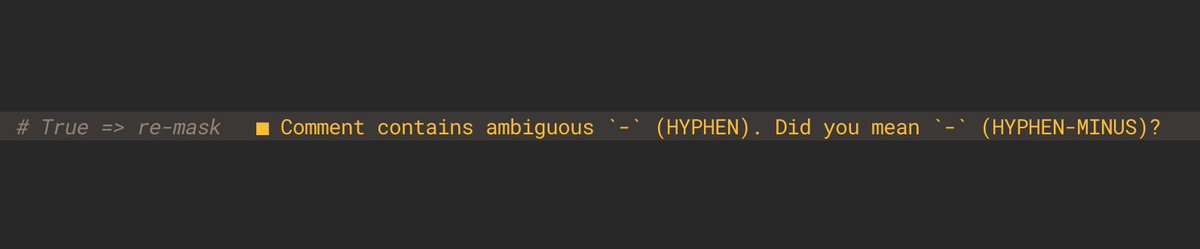

I don’t know why LLM companies don’t watermark their output by using rare UTF-8 code points for similar looking characters. If they just replaced all U+002D: HYPHEN-MINUS with U+2010: HYPHEN, basically no one would notice but it’d be obvious to software that it’s generated output

I was not put on this earth to use python libraries all day

In the early days of X/PayPal, fraud was their biggest problem and they built out tools for anomaly detection which eventually led to Palantir. It’s ironic that 20 years later, the reincarnation of X (via twitter) does such a bad job of anomaly (bot account) detection

Increased the number of diffusion steps for my RoBERTa Diffusion model and it’s wild how surprisingly good this is. Will fine-tune it on OpenWebText and compare it to GPT-2 later

I’m am surprised at the amount of coherency I’ve gotten by trying to fine-tune RoBERTa into a language diffusion model. Pretty decent for a 6 year-old model with only 125 million parameters

New Paper! Darwin Godel Machine: Open-Ended Evolution of Self-Improving Agents A longstanding goal of AI research has been the creation of AI that can learn indefinitely. One path toward that goal is an AI that improves itself by rewriting its own code, including any code…

Day 1 of messing with masked language diffusion models 🫠🫠

Don't use structured output mode for reasoning tasks. We’re open sourcing Osmosis-Structure-0.6B: an extremely small model that can turn any unstructured data into any format (e.g. JSON schema). Use it with any model - download and blog below!

When I started using Arch Linux years ago, any time something would randomly break I’d have to spend at least an hour sifting through forums to find a solution. Now ChatGPT can diagnose and fix it in a few seconds and Pewdiepie uses Arch

United States Trends

- 1. Happy Birthday Charlie 63K posts

- 2. #tuesdayvibe 3,887 posts

- 3. Good Tuesday 34.2K posts

- 4. Pentagon 77.6K posts

- 5. #NationalDessertDay N/A

- 6. Shilo 2,116 posts

- 7. Sandy Hook 2,136 posts

- 8. Dissidia 7,169 posts

- 9. Happy Heavenly 9,566 posts

- 10. happy 32nd 10.6K posts

- 11. Victory Tuesday 1,093 posts

- 12. #TacoTuesday N/A

- 13. Monad 195K posts

- 14. #14Oct 2,428 posts

- 15. #PutThatInYourPipe N/A

- 16. Larry Fink 5,942 posts

- 17. Standard Time 3,030 posts

- 18. $MON 15.5K posts

- 19. Martin Sheen 6,784 posts

- 20. Nomura 1,757 posts

You might like

-

ishan

ishan

@0xishand -

Brian

Brian

@TheAustinIPGuy -

Hongpeng Jin

Hongpeng Jin

@HongpengJin -

Bhasker Sri Harsha

Bhasker Sri Harsha

@BhaskerSriHarsh -

Andy M.

Andy M.

@omgwtfitsp -

Edgars Liepa

Edgars Liepa

@liepa_edgars -

Rich Wyatt - Author

Rich Wyatt - Author

@ManTheForce -

Pranav Mishra

Pranav Mishra

@navamishra -

V. Pan

V. Pan

@AI_ML_iQ -

Alessandro Scarcella

Alessandro Scarcella

@__alesca__ -

peter chung

peter chung

@peterkchung -

Madysn Watkins

Madysn Watkins

@MadysnWatkins

Something went wrong.

Something went wrong.